Introduction to Data Scraping

In today’s digital-first world, data is the lifeblood of decision-making. Whether it’s understanding market trends, analyzing customer behavior, or optimizing business strategies, the ability to access and leverage data is more important than ever. However, much of this data is spread across countless websites in unstructured formats, which makes it difficult to collect and use effectively.

This is where data scraping comes into play. By automating the extraction of publicly available web data, businesses can unlock actionable insights, streamline operations, and gain a competitive edge.

In this article, we’ll dive deep into the concept of data scraping, how it works, and why it’s an indispensable tool for modern businesses. Let’s start with the basics: What is Data Scraping?

What is Data Scraping?

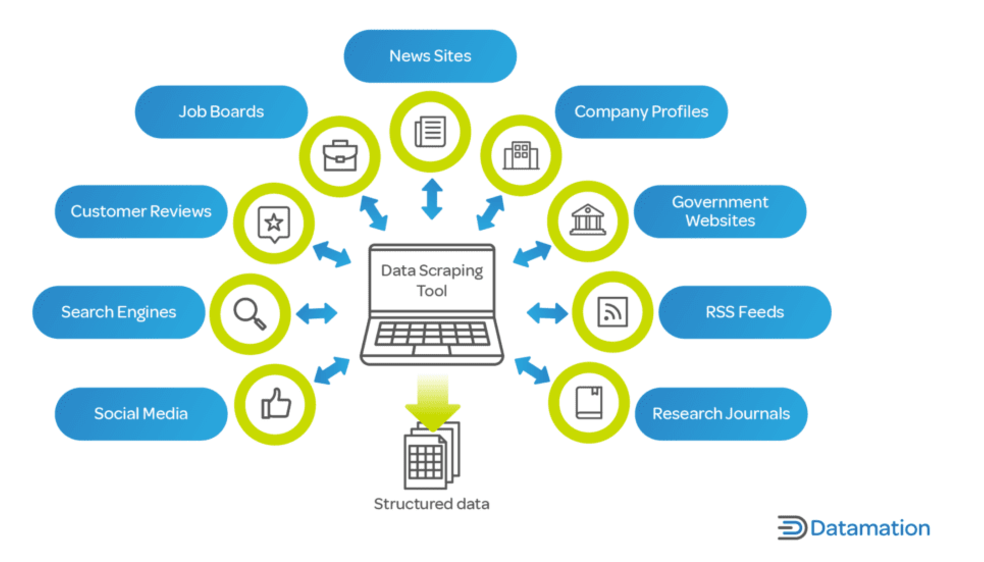

Data scraping, often referred to as web scraping, is the process of extracting publicly available information from websites and transforming it into structured, usable formats. Businesses and individuals use data scraping to gather valuable insights from the vast amount of information available online, enabling data-driven decision-making across various domains. In practice, it means automatically collecting the relevant information from large numbers of web pages.

Whether it’s gathering product pricing from e-commerce sites, extracting customer reviews, or compiling datasets for research, data scraping allows users to automate the collection of large-scale information that would otherwise require extensive manual effort.

How Does Website Data Scraping Work?

At its core, data scraping involves interacting with websites in a way similar to how humans browse the internet. Here’s a breakdown of the fundamental process:

- Accessing the Target Website: Scraping tools or bots send HTTP requests to the target website to retrieve its HTML.

- Parsing HTML: The scraper parses the page’s HTML, typically using CSS selectors or XPath, to locate specific elements or data points such as text, images, or tables.

- Extracting Relevant Data: Using predefined rules, the scraper extracts the desired information, such as product details, pricing, or contact information.

- Data Structuring: The extracted data is organized into structured formats like CSV files, Excel sheets, or JSON for easy analysis.

- Automating and Scaling: Advanced scraping tools automate the process across multiple pages or sites, enabling large-scale data collection with minimal human intervention.
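The five steps above can be sketched end to end with nothing but Python’s standard library. This is a minimal illustration under stated assumptions, not a production scraper. The page is a hypothetical inline sample rather than a live HTTP response. The `product`, `name`, and `price` class names are assumed markup, and a real site would define its own.

```python
import csv
import io
import json
from html.parser import HTMLParser

# Step 1 stand-in: a hypothetical page. A real scraper would download this
# with an HTTP request instead of hard-coding it.
SAMPLE_HTML = """
<html><body>
  <div class="product"><span class="name">Desk Lamp</span><span class="price">$24.99</span></div>
  <div class="product"><span class="name">Office Chair</span><span class="price">$149.00</span></div>
</body></html>
"""


class ProductParser(HTMLParser):
    """Steps 2-3: parse the HTML and pull out name/price text per product."""

    def __init__(self):
        super().__init__()
        self.products = []  # one dict per <div class="product">
        self._field = None  # field being read inside the current <span>

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "product" in classes:
            self.products.append({})
        elif tag == "span" and self.products:
            for field in ("name", "price"):
                if field in classes:
                    self._field = field

    def handle_data(self, data):
        if self._field:
            record = self.products[-1]
            record[self._field] = record.get(self._field, "") + data

    def handle_endtag(self, tag):
        if tag == "span" and self._field:
            record = self.products[-1]
            record[self._field] = record.get(self._field, "").strip()
            self._field = None


def scrape(html):
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products


def to_csv(rows):
    """Step 4: structure the extracted records as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


products = scrape(SAMPLE_HTML)
print(json.dumps(products, indent=2))  # JSON output
print(to_csv(products))                # CSV output
```

Step 5 (automation) would wrap `scrape` in a loop over many fetched pages.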

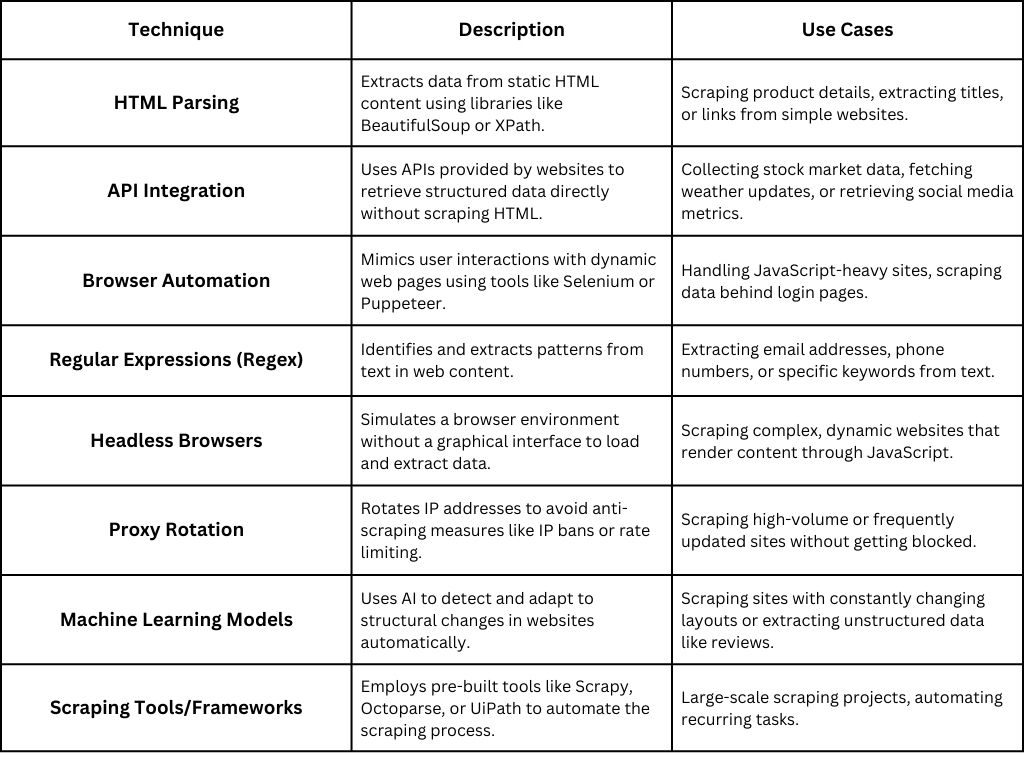

Top Data Scraping Techniques You Need to Know

- HTML Parsing: Extracting data from the HTML structure of a webpage.

- API Integration: When a website provides an API, using it to retrieve structured data, which is usually more efficient and reliable than parsing HTML.

- Browser Automation: Leveraging tools like Selenium to mimic human behavior for dynamic or JavaScript-heavy websites.

- XPath and CSS Selectors: Identifying specific elements within a webpage’s HTML using precise targeting methods.
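To make XPath-style targeting concrete, here is a small sketch that uses the limited XPath subset built into Python’s `xml.etree.ElementTree`. It assumes the markup is well-formed XHTML and that the table uses the hypothetical `id` and `class` values shown. The standard library has no CSS selector engine, so CSS selectors would need a third-party library such as BeautifulSoup (via its `select()` method) or lxml.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed XHTML fragment. Real-world HTML is often not
# valid XML, so lxml or BeautifulSoup are the usual choice in practice.
PAGE = """
<html>
  <body>
    <table id="prices">
      <tr class="row"><td class="sku">A-100</td><td class="price">19.99</td></tr>
      <tr class="row"><td class="sku">B-200</td><td class="price">34.50</td></tr>
    </table>
  </body>
</html>
"""

root = ET.fromstring(PAGE.strip())

# ElementTree implements a limited XPath subset: ".//" searches all
# descendants and "[@attr='value']" filters on an attribute value.
rows = root.findall(".//table[@id='prices']/tr[@class='row']")

prices = {
    row.find("td[@class='sku']").text: float(row.find("td[@class='price']").text)
    for row in rows
}
print(prices)  # {'A-100': 19.99, 'B-200': 34.5}
```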

Why Does Website Data Scraping Matter?

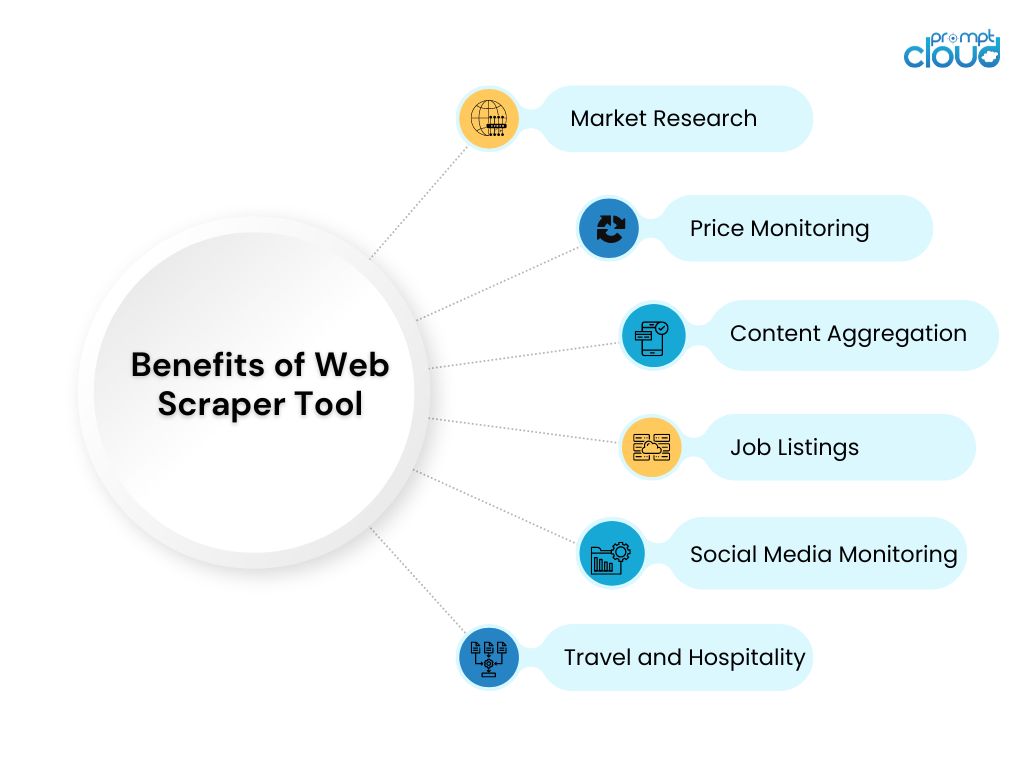

Data scraping is integral to modern businesses, powering applications in:

- Market Research: Monitoring competitor pricing, trends, and strategies.

- Sentiment Analysis: Gathering customer feedback from reviews and social media.

- AI Training: Collecting large datasets to train machine learning models.

Data scraping, when performed responsibly and ethically, is a game-changer for businesses looking to harness the power of data in a competitive landscape.

With PromptCloud, you gain access to tailored data scraping solutions that deliver high-quality, actionable insights – empowering smarter decisions and enhanced business strategies.

Why Is Data Scraping Vital for Modern Businesses?

In today’s data-driven economy, businesses thrive on insights. The ability to access, analyze, and act on vast amounts of information gives organizations a competitive edge in understanding market trends, customer preferences, and competitor strategies. However, much of this valuable data resides on the web in unstructured formats. This is where data scraping comes into play, serving as a crucial tool for businesses to unlock actionable intelligence.

How Do Businesses Rely on Data Scraping for Competitive Insights?

Data scraping empowers businesses to gather publicly available information efficiently, enabling them to make informed decisions and stay ahead in competitive markets. Here are key reasons why companies leverage data scraping:

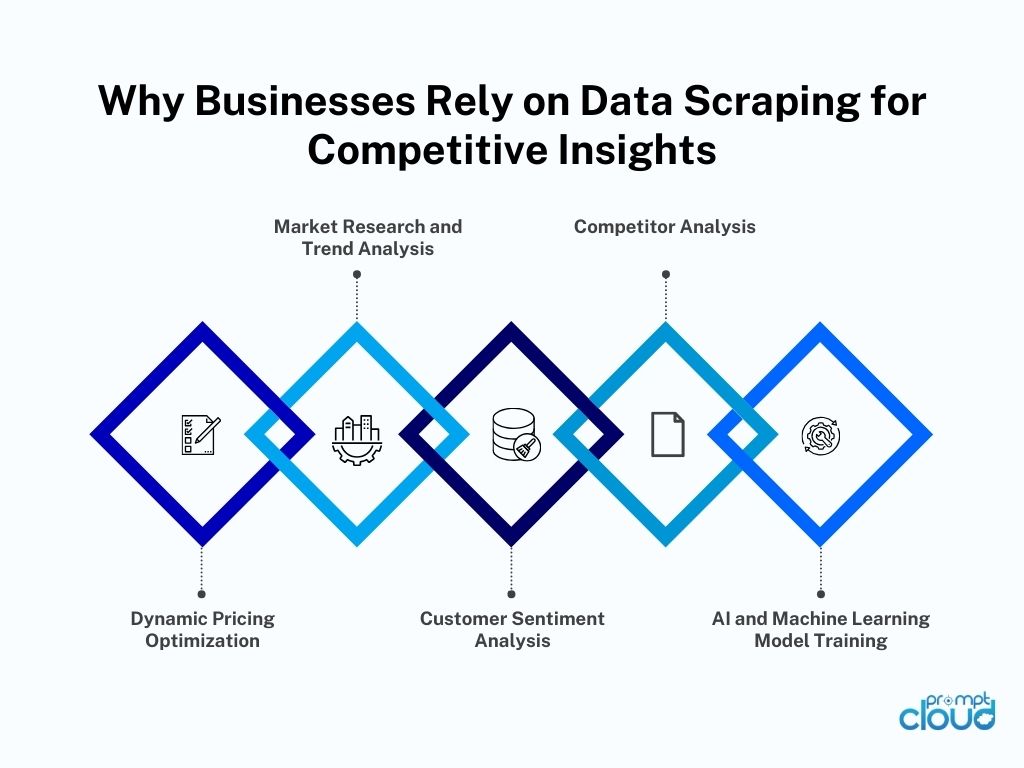

1. Market Research and Trend Analysis

Businesses use data scraping to monitor industry trends, consumer behavior, and emerging technologies. By analyzing scraped data, they can identify shifts in market demand, spot growth opportunities, and adapt strategies accordingly.

2. Competitor Analysis

Staying ahead requires knowing what competitors are doing. Data scraping provides insights into:

- Pricing Strategies: Monitor competitors’ pricing and promotional tactics.

- Product Offerings: Understand what products or services are gaining traction.

- Customer Feedback: Analyze reviews and testimonials to gauge competitor performance.

3. Dynamic Pricing Optimization

In industries like e-commerce and travel, where prices change frequently, businesses rely on scraped data to implement competitive pricing strategies. Up-to-date data helps keep their prices attractive while maximizing profits.
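One way scraped competitor prices can feed dynamic pricing is a simple rule-based repricer. The rule below is purely a hypothetical example: undercut the cheapest competitor by one cent, but never fall below cost plus a 10% margin. Real pricing engines weigh many more signals.

```python
from typing import Optional, Sequence


def suggest_price(
    competitor_prices: Sequence[float],
    our_cost: float,
    min_margin: float = 0.10,
    undercut: float = 0.01,
) -> Optional[float]:
    """Hypothetical repricing rule: undercut the cheapest scraped competitor
    price slightly, but never drop below cost plus a minimum margin."""
    if not competitor_prices:
        return None  # no fresh data: leave the current price alone
    floor = round(our_cost * (1 + min_margin), 2)
    target = round(min(competitor_prices) - undercut, 2)
    return max(target, floor)


print(suggest_price([25.99, 24.49, 27.00], our_cost=18.00))  # 24.48
print(suggest_price([19.50], our_cost=18.00))                # 19.8 (margin floor wins)
```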

4. Customer Sentiment Analysis

By scraping customer reviews, social media posts, and feedback forums, companies can analyze sentiment around their brand or products. This helps businesses:

- Enhance customer experiences.

- Address pain points effectively.

- Build stronger customer loyalty.
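As a toy illustration of sentiment analysis on scraped reviews, the sketch below scores text by counting words from small positive and negative word lists. The word lists are hypothetical. The approach ignores negation, so “not great” counts as positive. Production systems typically use trained models or curated lexicons instead.

```python
import re
from collections import Counter

# Tiny hypothetical word lists, for illustration only
POSITIVE = {"love", "great", "fast", "excellent", "helpful"}
NEGATIVE = {"broken", "slow", "terrible", "refund", "bad"}


def sentiment(text):
    """Label text by counting positive vs. negative lexicon words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Hypothetical scraped reviews
reviews = [
    "Love it, shipping was fast!",
    "Arrived broken. Terrible support.",
    "It is a lamp.",
]
summary = Counter(sentiment(r) for r in reviews)
print(summary)
```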

5. AI and Machine Learning Model Training

Businesses leveraging AI rely on data scraping to collect large, diverse datasets for training predictive models. From sentiment analysis to image recognition, scraped data plays a foundational role in building robust AI systems.

6. Real-Time Decision Making

With the ability to scrape and analyze data in real time, businesses can make agile decisions. Whether it’s responding to a competitor’s price drop or seizing an emerging trend, timely insights are invaluable.

Best Data Scraping Tools and Services for Smarter Insights

Data scraping has become an essential process for businesses seeking to extract valuable insights from the web. While some organizations opt for in-house tools, others rely on professional data scraping services for more complex or large-scale requirements. With a variety of tools and service providers available, choosing the right solution can make all the difference in achieving your data-driven goals.

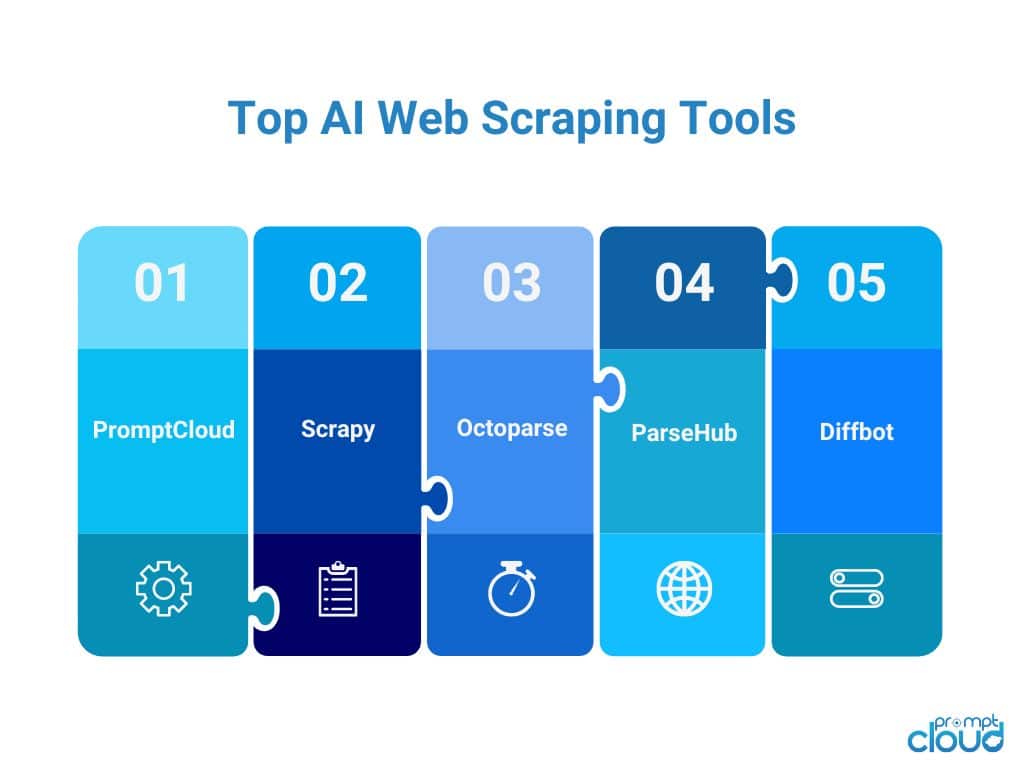

Popular Data Scraping Tools

Several data scraping tools cater to different levels of expertise and project complexity. These tools vary in terms of features, ease of use, scalability, and customization.

1. BeautifulSoup:

BeautifulSoup is a Python library for parsing HTML and XML documents. It does not download pages itself, so it is usually paired with an HTTP library such as Requests. Its simple API makes it a favorite among beginners and a good fit for small-scale projects.

2. Scrapy:

Scrapy is an open-source framework designed for web crawling and scraping. It’s developer-friendly and offers advanced functionalities like automation, data pipelines, and scalability for more complex projects.

3. Octoparse:

A no-code scraping tool, Octoparse is user-friendly and allows non-technical users to scrape data from websites through a drag-and-drop interface.

4. ParseHub:

ParseHub specializes in handling dynamic websites. It uses machine learning to navigate and scrape data from JavaScript-heavy pages.

5. Selenium:

Selenium is primarily a browser automation tool but is widely used for scraping data from websites. It mimics human interaction with web pages, making it ideal for extracting data from complex sites.
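Frameworks like Scrapy automate the core crawling loop: fetch a page, extract its links, queue the new ones, and skip anything already seen. The framework-free sketch below shows that loop in plain Python. It is not Scrapy’s API. The in-memory `SITE` dictionary, passed in as `fetch`, is a hypothetical stand-in for real HTTP requests.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse

# Hypothetical in-memory site standing in for real HTTP responses
SITE = {
    "https://example.com/": '<a href="/page1">One</a> <a href="/page2#top">Two</a>',
    "https://example.com/page1": '<a href="/page2">Two</a> <a href="https://other.org/">Off-site</a>',
    "https://example.com/page2": '<a href="/">Home</a>',
}


class LinkParser(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl that stays on the start URL's domain and skips repeats."""
    domain = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # fetch failed or page missing
            continue
        visited.append(url)
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop "#fragment" parts before deduplicating
            link, _fragment = urldefrag(urljoin(url, href))
            if urlparse(link).netloc == domain and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


print(crawl("https://example.com/", SITE.get))
```

The `max_pages` cap and the same-domain check keep the crawl bounded. Scrapy exposes similar controls through its settings.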

How to Select the Right Data Scraping Tool for Your Business?

The “best” tool depends on the specific needs of a business. Here’s what businesses should consider when selecting a tool:

- Ease of Use: Tools like Octoparse are excellent for non-technical users, while BeautifulSoup and Scrapy are better suited for developers.

- Scalability: For large-scale projects, tools like Scrapy or custom-built solutions often outperform basic scraping tools.

- Dynamic Content Handling: If the target websites use JavaScript, tools like Selenium or ParseHub provide the necessary capabilities.

- Automation Features: Businesses needing regular updates should prioritize tools with automation and scheduling features.

- Cost Efficiency: Open-source tools are cost-effective for small projects, but for ongoing or complex tasks, investing in a robust paid solution might be more beneficial.

When to Choose Data Scraping Services Over Tools?

While tools are ideal for DIY scraping, data scraping services are indispensable for businesses that:

- Lack in-house expertise or resources.

- Need large-scale or highly customized data extraction.

- Want to ensure compliance with data privacy regulations.

Data scraping service providers specialize in handling end-to-end scraping needs, including data extraction, cleaning, formatting, and delivery. These services often provide:

- Customization: Tailored solutions to meet specific business objectives.

- Scalability: The ability to extract large volumes of data across multiple sources.

- Compliance Assurance: Adherence to legal and ethical standards.

- Ongoing Support: Maintenance and updates for recurring scraping needs.

Top Data Scraping Service Providers

- PromptCloud: A leader in customized data scraping services, PromptCloud offers scalable, real-time data extraction solutions for businesses across industries. With a focus on compliance and data quality, PromptCloud ensures that businesses get actionable insights tailored to their needs.

- Diffbot: Known for its AI-powered scraping capabilities, Diffbot provides structured data extraction using machine learning models. It’s ideal for businesses looking to process unstructured data at scale.

- ScrapingBee: ScrapingBee focuses on bypassing anti-scraping mechanisms, making it a reliable choice for businesses targeting complex or JavaScript-heavy websites.

- WebHarvy: WebHarvy provides an intuitive, point-and-click interface, making it accessible for non-technical users who need quick scraping solutions.

Top Reasons to Choose India for Data Scraping Solutions

India has emerged as a hub for affordable, high-quality data scraping services. Indian providers offer:

- Cost Efficiency: Competitive pricing without compromising on quality.

- Technical Expertise: A large pool of skilled developers and data scientists.

- Custom Solutions: Tailored scraping pipelines to meet diverse business requirements.

- Timely Delivery: Agile processes to ensure quick turnaround times.

Companies like PromptCloud are leading the charge, delivering world-class data scraping solutions to global clients.

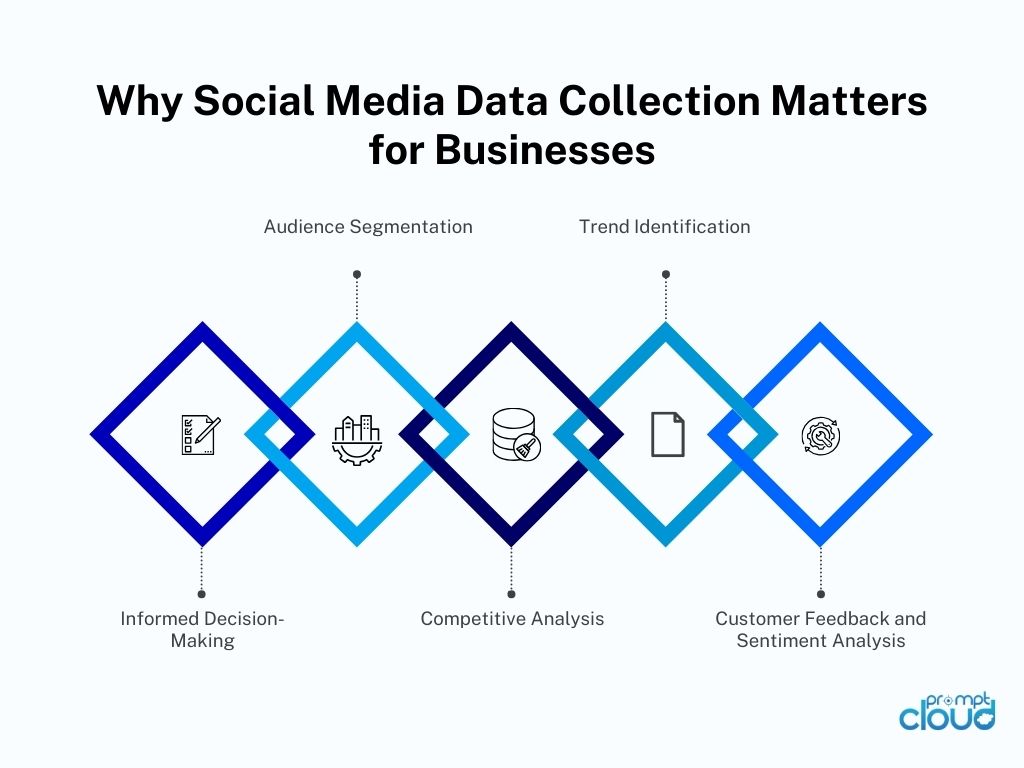

Why Does Social Media Data Scraping Matter for Businesses?

Social media platforms have become treasure troves of information for businesses, researchers, and analysts. From user behavior and trending topics to sentiment analysis and competitor strategies, social media data provides actionable insights that can transform decision-making processes. Social media data scraping is the process of extracting publicly available data from platforms like LinkedIn, Facebook, Twitter, and others for these purposes.

However, scraping social media comes with unique challenges, including platform restrictions, data privacy concerns, and dynamic content formats. In this section, we’ll explore how businesses can extract valuable insights from social media platforms, the tools and techniques involved, and how to navigate the ethical and technical complexities.

Social media data scraping involves gathering publicly accessible information, such as:

- User profiles and bios.

- Posts, comments, and hashtags.

- Followers, likes, and engagement metrics.

- Trending topics and sentiment indicators.

This data is typically used for purposes such as:

- Social media analytics: Understanding audience behavior, preferences, and sentiment.

- Market research: Analyzing trends, brand mentions, and competitor performance.

- Lead generation: Identifying potential customers or business prospects.

- Content strategy: Gaining insights into trending topics and user engagement patterns.

1. LinkedIn Data Scraping

LinkedIn is a goldmine for B2B businesses and recruiters, offering access to professional profiles, job postings, and company information.

Applications of LinkedIn Data Scraping:

- Talent Acquisition: Extracting data on skills, experience, and job titles for targeted recruitment.

- Sales Prospecting: Gathering contact details and company information for lead generation.

- Market Research: Analyzing job trends, company growth, and industry insights.

Techniques for Scraping LinkedIn:

- Using tools like Selenium or Puppeteer to automate profile browsing and data extraction.

- Employing LinkedIn APIs for structured data access (when permission is granted).

- Adhering to ethical scraping practices to avoid violating LinkedIn’s terms of service.

2. Facebook Data Scraping

Facebook offers rich data, including posts, comments, group discussions, and events, which can be invaluable for audience analysis and sentiment tracking.

Applications of Facebook Data Scraping:

- Audience Insights: Analyzing user demographics, likes, and interests.

- Brand Monitoring: Tracking mentions, reviews, and feedback related to your brand.

- Event Analysis: Gathering data on popular events and user participation trends.

Techniques for Scraping Facebook:

- Scraping public posts and comments using browser automation tools or APIs.

- Monitoring pages or groups for relevant discussions.

- Extracting engagement metrics (likes, shares, comments) to gauge post performance.

3. Twitter Data Scraping

Twitter’s real-time nature makes it a vital platform for tracking trends, public sentiment, and breaking news.

Applications of Twitter Data Scraping:

- Sentiment Analysis: Understanding how users feel about brands, products, or events.

- Trend Monitoring: Tracking hashtags, keywords, and mentions for trending topics.

- Competitor Analysis: Analyzing competitors’ tweets, engagement, and follower growth.

Techniques for Scraping Twitter:

- Using the Twitter API for structured access to tweets and metadata.

- Scraping hashtags and mentions to identify trending topics and conversations.

- Analyzing engagement metrics (retweets, likes, replies) for performance insights.
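Identifying trending topics from hashtags and mentions often starts with simple counting. The sketch below assumes post texts have already been collected; the sample posts are hypothetical. It uses regular expressions to tally hashtags case-insensitively. The `@` pattern is deliberately simple and would also match the domain part of email addresses.

```python
import re
from collections import Counter

HASHTAG = re.compile(r"#(\w+)")
MENTION = re.compile(r"@(\w+)")


def top_hashtags(posts, n=3):
    """Count hashtags case-insensitively and return the n most common."""
    counts = Counter(tag.lower() for post in posts for tag in HASHTAG.findall(post))
    return counts.most_common(n)


def mentions(posts):
    """Count @-mentions across posts (simple pattern; emails would also match)."""
    return Counter(name.lower() for post in posts for name in MENTION.findall(post))


# Hypothetical post texts, as they might look after scraping
posts = [
    "Loving the new #DataScraping guide from @acme #Python",
    "#python tips: parse HTML with the standard library",
    "Is #datascraping legal? Thread below",
]
print(top_hashtags(posts))
print(mentions(posts))
```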

Top Challenges in Scraping Social Media Data and How to Tackle Them

While social media data scraping offers significant benefits, it also comes with technical and ethical challenges:

1. Platform Restrictions

- Social media platforms often implement anti-scraping mechanisms, such as rate limiting, CAPTCHAs, and IP blocking, to protect their data.

- APIs, while useful, may have limitations on the volume and type of data that can be accessed.

2. Dynamic Content

- Many platforms use JavaScript to dynamically load content, making it difficult to scrape using traditional HTML parsing methods.

3. Data Volume and Variability

- Social media data is vast and unstructured, requiring advanced tools to process and clean the extracted information.

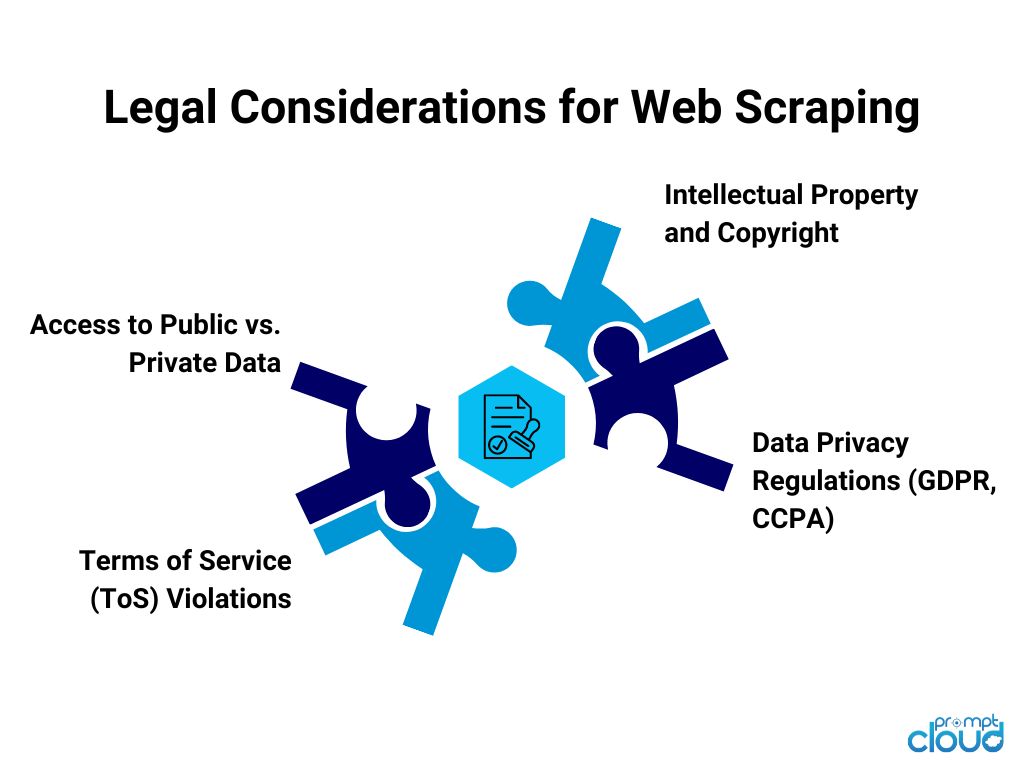

4. Compliance and Legal Risks

- Scraping content in ways a platform’s terms prohibit can breach those terms, and collecting personal data without a lawful basis can violate data protection laws like GDPR or CCPA.
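For rate limiting in particular, a well-behaved scraper treats “too many requests” responses as a signal to slow down. Below is a minimal sketch of exponential backoff with random jitter. The `RateLimited` exception and the `fetch` callable are hypothetical placeholders for however your HTTP client reports an HTTP 429 or 503 response. If the server sends a `Retry-After` header, honoring it is usually preferable to a computed delay.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical error a fetch function raises on an HTTP 429 or 503 reply."""


def fetch_with_backoff(fetch, url, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), waiting longer after each rate-limit error.

    Uses exponential backoff with "full jitter": before retry k (0-based) it
    sleeps a random time between 0 and base_delay * 2**k seconds.
    """
    for attempt in range(max_retries + 1):
        try:
            return fetch(url)
        except RateLimited:
            if attempt == max_retries:
                raise  # give up and let the caller decide what to do
            sleep(random.uniform(0, base_delay * 2 ** attempt))


# Usage with a fake fetch that is rate-limited twice, then succeeds.
# Passing waits.append as `sleep` records the delays instead of sleeping.
attempts = []


def flaky_fetch(url):
    attempts.append(url)
    if len(attempts) < 3:
        raise RateLimited()
    return "<html>ok</html>"


waits = []
result = fetch_with_backoff(flaky_fetch, "https://example.com/", sleep=waits.append)
print(result, len(attempts), waits)
```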

Social media data scraping is a powerful tool for gaining insights into user behavior, trends, and sentiment. From LinkedIn’s professional profiles to Twitter’s real-time updates, businesses can harness this data to refine strategies, improve engagement, and stay ahead in competitive markets.

However, the process must be approached responsibly, with a focus on privacy, compliance, and platform limitations. By leveraging ethical scraping practices and advanced tools, businesses can unlock the full potential of social media data while safeguarding trust and transparency.

PromptCloud offers tailored, compliant, and scalable social media data scraping solutions that empower businesses to make data-driven decisions. Whether you’re analyzing trends or monitoring brand performance, we’ve got you covered.

Power of Python and UiPath in Data Scraping Automation

Data scraping has become an essential process for businesses looking to harness the power of web data for actionable insights. Two tools stand out for their versatility and efficiency: Python and UiPath. Python offers great flexibility through its extensive libraries, while UiPath brings automation and user-friendliness to the forefront, making data scraping accessible to non-technical users.

In this section, we explore how these tools work, their advantages, and how AI is enhancing data scraping processes to make them smarter and more efficient.

Python for Data Scraping

Python is a go-to programming language for data scraping due to its simplicity, vast ecosystem of libraries, and ability to handle both structured and unstructured data efficiently.

Python Libraries for Data Scraping

- BeautifulSoup:

  - A lightweight library for parsing HTML and XML documents.

  - Extracts data from web pages with ease, ideal for beginners.

- Scrapy:

  - A powerful framework designed for large-scale web scraping and crawling.

  - Features automation, data pipelines, and scalability for complex scraping tasks.

- Selenium:

  - Automates browsers for scraping dynamic websites that load content via JavaScript.

  - Ideal for scraping sites with interactive elements like drop-downs or forms.

- Pandas:

  - Not a scraping library per se, but essential for structuring and analyzing scraped data.

- Requests:

  - Simplifies sending HTTP requests to retrieve webpage content.

Step-by-Step Guide to Data Scraping with Python

- Install Required Libraries: Use pip to install the libraries you need, for example pip install requests beautifulsoup4 pandas (add scrapy or selenium as required).

- Send HTTP Requests: Use the Requests library to fetch webpage content.

- Parse HTML Content: Use BeautifulSoup to navigate the page and extract specific elements like titles, tables, or links.

- Handle Dynamic Content: For JavaScript-heavy sites, use Selenium to load the page and interact with elements.

- Clean and Structure Data: Use Pandas to clean the data and export it to formats like CSV or JSON.

- Automate and Scale: For recurring tasks or multiple pages, use Scrapy to automate crawling and extraction.
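The guide above names Requests, BeautifulSoup, and Pandas. To keep the example dependency-free, this sketch covers only the clean-and-structure step, using the standard library. It normalizes whitespace, parses price strings, drops rows without a usable price, removes duplicates, and writes CSV. The raw rows are hypothetical. The price parser assumes comma thousands separators and dot decimals, so a European-style “19,99” would be misread. In Pandas, `drop_duplicates()` and `to_csv()` cover similar ground.

```python
import csv
import io
import re

# Hypothetical raw rows, as a parser might return them
raw_rows = [
    {"name": "  Desk Lamp ", "price": "$24.99"},
    {"name": "Office   Chair", "price": "$1,299.00"},
    {"name": "desk lamp", "price": "$24.99"},  # duplicate once normalized
    {"name": "Bookshelf", "price": "N/A"},     # no usable price
]

PRICE = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(text):
    """'$1,299.00' -> 1299.0. Assumes ',' thousands separators and '.' decimals."""
    match = PRICE.search(text)
    return float(match.group().replace(",", "")) if match else None


def clean(rows):
    """Normalize whitespace, parse prices, drop incomplete rows and duplicate names."""
    seen = set()
    cleaned = []
    for row in rows:
        name = " ".join(row.get("name", "").split())
        price = parse_price(row.get("price", ""))
        if not name or price is None or name.lower() in seen:
            continue
        seen.add(name.lower())
        cleaned.append({"name": name, "price": price})
    return cleaned


def to_csv(rows):
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(to_csv(clean(raw_rows)))
```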

Why Is Python Popular for Data Scraping?

- Flexible and beginner-friendly.

- Extensive community support and documentation.

- Ideal for both small-scale projects and enterprise-level tasks.

UiPath for Data Scraping

UiPath is a leading Robotic Process Automation (RPA) platform that simplifies data scraping through automation. It’s particularly useful for businesses that lack technical expertise in coding but need efficient and reliable data extraction.

How to Use UiPath for Data Scraping?

- Launch UiPath Studio: Open the UiPath Studio interface to start creating workflows.

- Record Actions: Use the recorder feature to interact with the target website and capture data points.

- Extract Data: Use UiPath’s built-in scraping tools to select structured data (like tables) or unstructured data (like text).

- Automate Data Collection: Schedule workflows to scrape data at specific intervals so the data stays up to date.

- Export Data: Output data in formats like Excel, CSV, or JSON for further analysis.

Advantages of UiPath for Automated Data Scraping

- No Coding Required: An intuitive drag-and-drop interface makes it accessible to non-developers.

- Dynamic Content Handling: Can scrape both static websites and JavaScript-heavy ones.

- Integration Ready: Seamlessly integrates with other business tools like CRMs, databases, or analytics platforms.

- Error Handling: Built-in features to manage exceptions, ensuring smoother workflows.

AI Data Scraping

AI is revolutionizing data scraping by introducing smarter and more efficient ways to extract, clean, and analyze information. Traditional scraping methods often struggle with complex websites, anti-scraping mechanisms, or unstructured data. AI bridges these gaps by enhancing every stage of the process.

How Is AI Revolutionizing Data Scraping for Maximum Efficiency?

- Dynamic Adaptability: AI-powered scrapers can adapt to changes in website structure automatically, reducing downtime.

- Content Recognition: Natural Language Processing (NLP) enables scrapers to extract meaningful insights from unstructured data like articles, reviews, or social media posts.

- Overcoming Anti-Scraping Mechanisms: AI-driven systems mimic human behavior, such as mouse movements or time delays, to bypass detection.

- Improved Data Quality: Machine learning algorithms clean and validate scraped data in real time, improving accuracy and relevance.

- Sentiment Analysis: AI enables scrapers to analyze public sentiment by processing text data from forums, reviews, and social media.

- Real-Time Scalability: AI systems can handle massive volumes of data from multiple sources simultaneously without sacrificing performance.

How Does AI-Powered Scraping Transform Data Collection?

- Market Research: Identifying trends and competitor strategies through real-time analysis.

- Predictive Analytics: Feeding scraped data into machine learning models to forecast market behaviors.

- Customer Insights: Extracting feedback and reviews to enhance products or services.

Python vs UiPath: Which is Better for Data Scraping?

Businesses with technical expertise often lean toward Python for its flexibility and scalability, while UiPath is well suited to teams seeking automation without delving into programming.

Whether you’re using Python’s powerful libraries for custom scraping solutions or UiPath’s intuitive platform for automated workflows, both tools offer unique advantages. When enhanced with AI capabilities, data scraping becomes a smarter, faster, and more reliable process, enabling businesses to gain actionable insights and stay competitive in a data-driven world.

PromptCloud combines the best of all worlds, offering scalable, AI-powered, and customizable data scraping solutions tailored to your business needs. Explore how our services can simplify your data journey today.

Instant Data Scraping

In the fast-paced digital era, businesses often require data that is not only accurate but also instantly available for decision-making. Instant data scraping refers to the ability to quickly extract and deliver real-time data from web sources without compromising on quality or reliability. This approach is crucial for industries like e-commerce, finance, and market research, where timely insights can significantly impact outcomes.

Let’s dive into the tools, solutions, methods, and innovative applications that make instant data scraping an essential component of modern data strategies.

Top 5 Instant Data Scraping Tools

The right tools can make all the difference in achieving instant and reliable data scraping. These tools are designed to handle high-speed extraction, dynamic websites, and large-scale data needs.

- Octoparse:

  - A no-code tool offering instant extraction of data from websites, including dynamic and JavaScript-heavy pages.

  - Ideal for non-technical users who need quick results.

- Scrapy:

  - An open-source Python framework for fast and scalable data scraping.

  - Enables advanced users to automate instant data extraction workflows.

- ParseHub:

  - Specializes in scraping complex websites with minimal configuration.

  - Offers cloud-based options for instant data delivery.

- UiPath:

  - A robotic process automation (RPA) tool that simplifies instant data scraping through its intuitive interface.

  - Best for businesses looking to integrate scraping into larger automation workflows.

- PhantomBuster:

  - Focused on scraping data from websites and social media platforms like LinkedIn, Twitter, and Facebook.

  - Delivers quick results for social listening and engagement analysis.

Why Choose Instant Data Scraping Solutions?

For businesses needing customized and high-speed data scraping, service providers offer tailored solutions to meet specific requirements.

Key Features of Instant Data Scraping Solutions:

- Real-Time Data Delivery: Access fresh data on-demand for time-sensitive projects.

- Customizable Pipelines: Configure solutions to target specific websites or data types.

- Dynamic Content Handling: Scrape JavaScript-heavy or constantly changing web pages seamlessly.

- Scalability: Handle large volumes of data without delays or bottlenecks.

- API Integration: Streamline data delivery directly into your analytics or CRM platforms.

Best Practices for Instant Data Scraping

- Understand Your Goals: Clearly define the purpose of data scraping, whether it’s market research, price monitoring, or customer sentiment analysis.

- Target Specific Data: Avoid collecting unnecessary data by focusing on key elements like pricing, reviews, or metadata.

- Respect Website Policies: Follow the robots.txt guidelines of target websites to ensure ethical scraping.

- Optimize Frequency: Schedule scraping activities at intervals to avoid overloading the target website or triggering anti-scraping mechanisms.

- Ensure Data Quality: Use tools or algorithms to clean and validate data before analysis.

- Leverage Automation: Employ tools like UiPath or Scrapy to automate repetitive tasks and speed up data collection.
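Checking robots.txt and honoring a crawl delay can be automated with Python’s built-in `urllib.robotparser`. In this sketch, the robots.txt content, the URLs, and the user-agent name `ExampleScraperBot` are hypothetical. It parses an inline string so it runs offline. `Crawl-delay` is a non-standard directive that not every site uses, so the code falls back to a one-second pause when it is absent.

```python
import urllib.robotparser

# Hypothetical robots.txt content. In practice you would call
# rp.set_url("https://<site>/robots.txt") and then rp.read() to download it.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

AGENT = "ExampleScraperBot"  # hypothetical user-agent name

rp = urllib.robotparser.RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

candidates = [
    "https://shop.example.com/products/lamp",
    "https://shop.example.com/checkout/cart",
]
# Keep only the URLs robots.txt allows for this user agent
allowed = [url for url in candidates if rp.can_fetch(AGENT, url)]
delay = rp.crawl_delay(AGENT) or 1  # seconds between requests; fall back to 1s

print(allowed)  # ['https://shop.example.com/products/lamp']
print(delay)    # 5
```

A scraper would then fetch each allowed URL and call `time.sleep(delay)` between requests.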

Advanced Techniques for Data Scraping

For those looking to push beyond the basics, advanced techniques can enhance both the efficiency and scope of instant data scraping.

- Headless Browsers: Tools like Puppeteer or Selenium can run a browser in headless mode (without a visible window), executing JavaScript and simulating user actions so complex web pages can be scraped.

- Dynamic Content Handling: Use JavaScript-rendering tools to scrape data from sites that load content dynamically.

- Proxy Management: Rotate IPs to bypass anti-scraping mechanisms and ensure uninterrupted data collection.

- Machine Learning Integration: Train models to detect patterns in scraped data or predict future trends based on historical data.

- Real-Time APIs: Use APIs to fetch data instantly for time-sensitive applications like stock price monitoring.
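For dynamic content, rendering is not always necessary. Many JavaScript-heavy pages ship their initial data as JSON inside a `<script type="application/json">` tag; Next.js, for example, uses an element with the id `__NEXT_DATA__`. When a page does this, parsing the embedded JSON is faster and more stable than driving a browser. The page below is a hypothetical sample, and its `__DATA__` id and field names are assumptions.

```python
import json
from html.parser import HTMLParser

# Hypothetical page whose visible content is rendered by JavaScript,
# but whose data is embedded as JSON in a script tag
PAGE = """
<html><head>
<script id="__DATA__" type="application/json">
{"products": [{"name": "Desk Lamp", "price": 24.99}, {"name": "Office Chair", "price": 149.0}]}
</script>
</head><body><div id="app"></div></body></html>
"""


class JsonScriptParser(HTMLParser):
    """Collects the raw text of every <script type="application/json"> tag."""

    def __init__(self):
        super().__init__()
        self.blobs = []
        self._in_json = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/json":
            self._in_json = True
            self.blobs.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_json = False

    def handle_data(self, data):
        if self._in_json:
            self.blobs[-1] += data


parser = JsonScriptParser()
parser.feed(PAGE)
data = json.loads(parser.blobs[0])
for product in data["products"]:
    print(product["name"], product["price"])
```

If a page has no such tag, a JavaScript-rendering tool is still needed.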

Web Data Scraping Methods

Web data scraping involves multiple approaches tailored to different types of websites and data requirements.

- HTML Parsing:

  - Extract data directly from the HTML structure using tools like BeautifulSoup or XPath.

  - Best for static web pages with simple layouts.

- API Scraping:

  - Use APIs provided by websites to collect structured data in a controlled manner.

  - Ideal for real-time, high-frequency data needs.

- Browser Automation:

  - Tools like Selenium automate user interactions with a website, mimicking human behavior for scraping dynamic content.

- Hybrid Methods:

  - Combine API scraping with browser automation for complex websites that mix static and dynamic content.
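API scraping usually means paging through results. Cursor-based pagination is one common scheme: each response includes a token for the next page. The sketch below assumes a hypothetical response shape of `{"items": [...], "next_cursor": ...}`. It takes the page-fetching function as a parameter, so it runs here against a fake two-page API instead of a live endpoint.

```python
from typing import Callable, Iterator, Optional


def iter_api_items(fetch_page: Callable[[Optional[str]], dict]) -> Iterator[dict]:
    """Yield every item from a cursor-paginated API, one page at a time.

    Assumes (hypothetically) each page looks like
    {"items": [...], "next_cursor": "<token>" or None}.
    """
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)
        yield from page["items"]
        cursor = page.get("next_cursor")
        if not cursor:  # no more pages
            break


# Fake two-page API standing in for real HTTP calls
FAKE_PAGES = {
    None: {"items": [{"id": 1}, {"id": 2}], "next_cursor": "abc"},
    "abc": {"items": [{"id": 3}], "next_cursor": None},
}
items = list(iter_api_items(FAKE_PAGES.get))
print(items)
```

Because the function is a generator, callers can stop early without requesting every page.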

Instant data scraping is a powerful capability that empowers businesses to access real-time information and act decisively. Whether using tools like Python and UiPath for DIY scraping or leveraging professional services for tailored solutions, businesses can harness data scraping to achieve unparalleled insights and efficiency.

With PromptCloud’s advanced, customizable data scraping solutions, you can achieve instant access to high-quality data, ensuring your business stays ahead in a competitive, data-driven world.

Legal and Ethical Considerations for Data Scraping

While data scraping is a powerful tool for gathering information, it’s crucial to address the legal and ethical considerations that come with it. Navigating these aspects responsibly ensures compliance with laws, protects privacy, and maintains trust with stakeholders.

Is Data Scraping Legal?

The legality of data scraping depends on the jurisdiction, the data being scraped, and the terms of service of the target website. Scraping publicly available data is often lawful, but certain factors can make it unlawful, such as:

- Violating Terms of Service: Scraping data from a website that explicitly prohibits such activities in its terms and conditions.

- Breaching Copyright or Intellectual Property Laws: Extracting proprietary data or content without authorization.

- Accessing Private Information: Collecting data that is behind a login or restricted by privacy regulations like GDPR or CCPA.

- Circumventing Security Measures: Using methods to bypass anti-scraping mechanisms like CAPTCHAs or firewalls.

Key legal frameworks, such as the Computer Fraud and Abuse Act (CFAA) in the U.S., govern data scraping activities. It’s essential to consult legal experts to ensure compliance with regional laws and website policies.

Ethical Practices for Data Scraping: Do’s and Don’ts

Following ethical practices not only protects your organization from legal risks but also reinforces a culture of responsible data use.

Do’s

- Scrape Publicly Available Data: Only collect information that is accessible without authentication or special permissions.

- Respect Robots.txt Files: Adhere to the directives in a website’s robots.txt file, which tells automated crawlers which paths they may and may not access.

- Seek Consent When Necessary: For sensitive or high-volume scraping, consider obtaining permission from the website owner.

- Comply with Data Privacy Regulations: Ensure data collection aligns with laws like GDPR, which protect user privacy.

- Monitor Scraping Frequency: Avoid overloading a website’s servers with excessive requests, which can disrupt normal operations.

Don’ts

- Scrape Sensitive Personal Data: Avoid collecting data like financial details, passwords, or health information without explicit consent.

- Ignore Website Terms of Service: Disregarding rules set by a website can lead to legal disputes and reputational harm.

- Use Data for Malicious Purposes: Never use scraped data for activities like spamming, hacking, or spreading misinformation.

- Bypass Security Measures: Refrain from using unauthorized techniques to access restricted areas of a website.

- Share or Sell Unauthorized Data: Distributing scraped data without permission can breach intellectual property laws and damage trust.

Data scraping, when done legally and ethically, is a powerful tool for unlocking valuable insights. By respecting privacy, adhering to regulations, and practicing responsible data use, businesses can leverage data scraping to drive innovation while maintaining their integrity.

With PromptCloud, you can rely on compliant, ethical, and customized data scraping solutions that prioritize legality and trust – empowering your business with actionable insights responsibly.

How to Leverage Data Scraping for Business Growth?

To maximize the potential of data scraping:

- Define Clear Objectives: Identify specific goals, such as market research, pricing optimization, or lead generation.

- Invest in the Right Tools or Services: Choose solutions that align with your technical capabilities and business requirements.

- Focus on Data Quality: Clean, validate and structure your data to ensure actionable insights.

- Integrate Data Seamlessly: Connect scraped data to analytics platforms, CRMs, or AI models for streamlined operations.

- Stay Compliant: Ensure adherence to data privacy laws and ethical scraping practices.

When used strategically, data scraping can enhance decision-making, improve customer experiences, and drive competitive advantages.

Future of Data Scraping

The future of data scraping will be shaped by advancements in AI, automation, and regulatory frameworks. Here’s a glimpse of what lies ahead:

AI and Automation Trends in Data Scraping

- Self-Adapting Scrapers: AI-powered systems that automatically adjust to website changes without manual intervention.

- Natural Language Processing (NLP): Enhanced ability to extract meaningful insights from unstructured data like reviews or articles.

- Real-Time Scalability: Automated systems capable of handling massive data volumes across multiple sources simultaneously.

Predictions for Data Scraping in 2025 and Beyond

- Increased Focus on Compliance:

- Stricter regulations will drive the need for ethical scraping practices and consent-based data collection.

- Integration with AI Systems:

- Data scraping will increasingly feed predictive models, recommendation engines, and decision-support systems.

- Industry-Specific Solutions:

- Customized scraping pipelines tailored to sectors like healthcare, retail, and finance will become mainstream.

- More Accessible Tools:

- The rise of no-code and low-code platforms will democratize data scraping, enabling wider adoption.

With PromptCloud’s cutting-edge data scraping solutions, you can navigate the complexities of data extraction and focus on what matters most—turning insights into impact. The future of data scraping is here, and it’s time to make it work for you.

Conclusion

Data scraping has evolved into an indispensable tool for businesses, enabling them to extract actionable insights from the vast expanse of web data. Whether through advanced tools like Python-based frameworks, automation platforms like UiPath, or professional data scraping services, organizations can unlock significant value by integrating data scraping into their operations.

Ready to unlock the power of data scraping for your business? Schedule a demo today and discover how our tailored solutions can help you gain actionable insights and drive smarter decisions.

Frequently Asked Questions

Why Might a Business Use Web Scraping to Collect Data?

Businesses use web scraping to collect data because it enables them to access and analyze large volumes of publicly available information quickly, efficiently, and cost-effectively. Here are some key reasons why businesses rely on web scraping:

- Market Research and Trend Analysis:

Web scraping allows businesses to monitor industry trends, competitor strategies, and customer preferences in real time. This helps them make informed decisions and stay ahead in competitive markets.

- Competitive Analysis:

By scraping competitor websites, businesses can gather data on pricing, product offerings, and customer reviews to refine their own strategies.

- Dynamic Pricing Optimization:

Retailers and e-commerce platforms use web scraping to track competitor pricing and adjust their own prices in real time, ensuring they remain competitive while maximizing profits.

- Customer Sentiment Analysis:

Businesses can scrape customer reviews, social media posts, and forums to understand how their products or services are perceived, helping them address pain points and enhance customer satisfaction.

- AI Model Training:

Large datasets collected through web scraping are used to train machine learning models for applications like recommendation systems, predictive analytics, or fraud detection.

- Content Aggregation:

Media companies and content aggregators scrape news websites, blogs, and forums to deliver curated content to their audiences.

- Regulatory Monitoring:

Businesses in heavily regulated industries scrape government or regulatory websites to stay updated on compliance requirements.

Web scraping offers businesses a scalable, automated way to collect the data they need to drive innovation, improve decision-making, and maintain a competitive edge in a data-driven world. When done ethically and in compliance with legal standards, it’s an invaluable tool for modern enterprises.

What Types of Data Is Web Scraping Used to Extract?

Web scraping is used to extract various types of publicly available data from websites. The type of data extracted depends on the specific goals of the business or individual. Here are the common types of data extracted using web scraping:

- Textual Data:

- Articles and Blogs: Extracting written content for sentiment analysis, content aggregation, or research.

- Product Descriptions: Gathering product details for e-commerce or comparison sites.

- Numerical Data:

- Pricing Information: Tracking product or service prices for dynamic pricing strategies or competitive analysis.

- Stock Market Data: Collecting real-time or historical financial data for market analysis and trading algorithms.

- Customer Reviews and Ratings:

- Extracting user reviews and star ratings from platforms like Amazon, Yelp, or Google to understand customer sentiment and product performance.

- Visual Data:

- Images: Scraping product images, real estate photos, or design assets.

- Videos: Extracting metadata or links to video content for analysis or aggregation.

- Social Media Data:

- Collecting posts, comments, hashtags, and engagement metrics for sentiment analysis, trend monitoring, or social listening.

- Structured Data:

- Tables and datasets from sources like financial reports, stock exchanges, or government databases.

- Metadata:

- Gathering meta tags, titles, or keywords for SEO analysis or digital marketing strategies.

- Dynamic Data:

- Scraping real-time feeds such as weather updates, traffic conditions, or live sports scores.

Web scraping can extract almost any type of publicly available data from websites. However, it’s essential to follow ethical practices and comply with legal regulations to ensure responsible use of the extracted data.
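As an illustration of the metadata case in the list above, the following sketch uses Python's built-in `html.parser` to pull the `<title>` text and `name`/`property` meta tags out of a page that has already been fetched. The sample HTML and the `MetadataParser` class are made up for this example. Production scrapers often use a dedicated parsing library instead, but the idea is the same.

```python
from html.parser import HTMLParser


class MetadataParser(HTMLParser):
    """Collects the page title and <meta name/property=... content=...> pairs."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # tag and attribute names arrive lower-cased
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            key = attributes.get("name") or attributes.get("property")
            content = attributes.get("content")
            if key and content is not None:
                self.meta[key.lower()] = content

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data  # title text may arrive in several chunks


# Made-up page used only for this example.
SAMPLE_HTML = """
<html><head>
  <title>Blue Widget | Example Shop</title>
  <meta name="description" content="A sturdy blue widget.">
  <meta name="keywords" content="widget, blue, tools">
  <meta property="og:type" content="product" />
</head><body><p>Product page</p></body></html>
"""

parser = MetadataParser()
parser.feed(SAMPLE_HTML)
parser.close()
print(parser.title.strip())
print(parser.meta)
```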

How Do Web Scraping and Data Mining Differ in Data Extraction?

Web scraping and data mining are related but distinct processes:

- Web Scraping focuses on extracting raw data from websites, such as prices, reviews, or metadata. It’s a method to gather publicly available information for further use.

- Data Mining involves analyzing existing datasets to uncover patterns, trends, and actionable insights. It uses tools and techniques like clustering, classification, and predictive modeling.

In essence, web scraping collects the data and data mining interprets it, which makes the two complementary parts of a data-driven strategy.
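The division of labor between the two can be shown end to end in a few lines. In this hedged sketch, the scraping step turns an HTML table (a hypothetical product listing with made-up prices) into records, and the analysis step summarizes them by computing an average price per category. That summary is only a stand-in: real data mining would apply techniques such as clustering, classification, or predictive modeling to far larger datasets.

```python
from collections import defaultdict
from html.parser import HTMLParser
from statistics import mean


class TableParser(HTMLParser):
    """Scraping step: collects the text of every <td>/<th> cell, row by row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None
        self._cell = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = ""

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append(self._cell.strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell += data


def scrape_table(html):
    """Turn the first row into headers and each later row into a dict."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


def average_price_by_category(records):
    """Analysis step (simplified): mean price for each category."""
    groups = defaultdict(list)
    for record in records:
        groups[record["category"]].append(float(record["price"]))
    return {category: mean(prices) for category, prices in groups.items()}


# Hypothetical product listing with made-up prices.
SAMPLE_TABLE = """
<table>
  <tr><th>product</th><th>category</th><th>price</th></tr>
  <tr><td>Kettle</td><td>kitchen</td><td>30</td></tr>
  <tr><td>Toaster</td><td>kitchen</td><td>50</td></tr>
  <tr><td>Lamp</td><td>lighting</td><td>20</td></tr>
</table>
"""

records = scrape_table(SAMPLE_TABLE)          # scraping: raw records
averages = average_price_by_category(records)  # analysis: a simple pattern
print(averages)
```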