As 2014 draws to a close, it is evident that there has been no let-up in the scorching pace of growth seen in the Big Data space. The Big Data market is estimated to grow to about $45 billion by 2016 at a compound annual growth rate (CAGR) of 35%. According to some estimates, this segment is growing at about six times the rate of the rest of the IT industry. Unsurprisingly, vast amounts of capital have followed the opportunities in this space, as venture capitalists have invested in a wide range of companies, including Hadoop vendors, NoSQL database providers, Business Intelligence (BI) tool providers and analytical framework vendors.

Notwithstanding all the progress made, one of the critical success factors for maximising the potential of Big Data analytics is a robust infrastructure that can support the relentless pace of growth as well as the rising requirements and expectations placed on Big Data. As demands for business outcomes grow, so do the demands on infrastructure. This is driving innovation and the development of better capabilities across the Big Data spectrum, including data storage and management, networking and software. However, a presentation by IBM indicates that most organisations are not fully prepared, in terms of their IT infrastructure, to cope with the requirements of Big Data. Given how imperative it has become for businesses to adopt and adapt to the opportunities and challenges that Big Data brings, companies are investing in readying their infrastructure to profit from the deluge of data and intelligence.

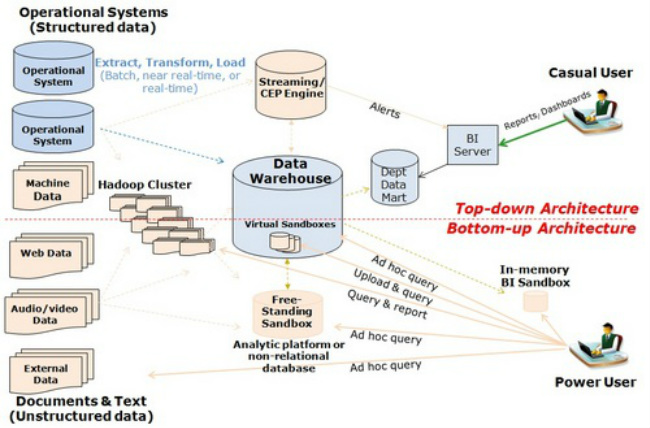

Prerequisites for Big Data infrastructure

As the aforementioned presentation shows, Big Data infrastructure has three essential characteristics: availability, access and speed.

First, availability is critical to ensure that the people and processes that need information and insights get them exactly when and where they need them. Having such insights also empowers people, as it facilitates better collaboration in addressing problems and capitalising on opportunities in a timely fashion. Second, the infrastructure should cater to access requirements so that users gain greater visibility into customers and operations.

Thirdly, speed is critical “to accelerate insights in real-time at the point of impact. Infrastructure must build intelligence into operational events and transactions. Optimise decisions in real-time by embedding intelligence into operational processes using integrated high performance infrastructure capabilities.”

Re-thinking both hardware and software infrastructure

An important challenge that companies face in adapting to the rules of a Big Data-driven world is the need to thoroughly re-examine and rethink their existing IT infrastructure, systems, tools and processes. Such changes undoubtedly add complexity to the IT system, but they are unavoidable if organisations are to enjoy the potential benefits of Big Data. Resistance to change is common, and Big Data vendors face the challenge of convincing IT managers to make the requisite upgrades to infrastructure and tools. “…big data vendors are trying to reassure enterprise IT types that these products are [as] secure and reliable as 30-year-old database management systems. Let’s just say that more than a few grizzled IT veterans are still used to working with favored and familiar tools and still need some convincing,” writes Doug Henschen in a recent article. Ben Rossi, meanwhile, highlights the need for companies to consider both their hardware and software infrastructure as they address the different and changing applications of Big Data.

“Solving these computing problems at scale, and at speed, is also critical, as companies now expect near real-time insight from their big data (this is especially pertinent in the digital marketing space where campaigns are becoming increasingly targeted and personalised). As a result of this, optimising infrastructure for both hardware and software is crucial. One primary challenge comes as big data can fall into two separate use cases: real-time, transactional data analytics, and deeper dive offline data analysis more often operating in batch mode. Despite the fact many organisations want both processes in place, the optimal infrastructure for each is distinctly different. Real-time data use-cases include website interactive session tracking, session management, real-time and predictive analytics (like recommendation engines) and the like. And while organisations are increasingly coming to understand the improvements big data can effect on their businesses, they need to have a practical plan when it comes to building an IT infrastructure that can keep up with the use-cases identified today, as well as provide a platform for the use cases of tomorrow.”
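To make the distinction between the two use cases a little more concrete, here is a minimal Python sketch that computes the same metric (page views per session) in both styles. It assumes a hypothetical stream of `(session_id, page)` events; the function and class names are illustrative only and do not correspond to any particular Big Data product or API.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical event shape, assumed for illustration: (session_id, page).
Event = Tuple[str, str]


def batch_page_views(log: Iterable[Event]) -> Dict[str, int]:
    """Batch mode: scan a complete, stored log and count page views per session."""
    counts: Dict[str, int] = defaultdict(int)
    for session_id, _page in log:
        counts[session_id] += 1
    return dict(counts)


class StreamingPageViews:
    """Real-time mode: update per-session counts as each event arrives,
    so the current answer is available without rescanning the history."""

    def __init__(self) -> None:
        self.counts: Dict[str, int] = defaultdict(int)

    def on_event(self, event: Event) -> int:
        session_id, _page = event
        self.counts[session_id] += 1
        # Return the up-to-date count for this session immediately.
        return self.counts[session_id]


if __name__ == "__main__":
    events = [("s1", "/home"), ("s2", "/home"), ("s1", "/cart"), ("s1", "/checkout")]

    # Batch: the answer exists only after the whole log has been processed.
    print(batch_page_views(events))  # {'s1': 3, 's2': 1}

    # Streaming: the answer is refreshed after every single event.
    stream = StreamingPageViews()
    for e in events:
        stream.on_event(e)
    print(dict(stream.counts))  # {'s1': 3, 's2': 1}
```

Both paths arrive at the same totals, but the batch function needs the full log before it can answer, whereas the streaming class answers after every event. At scale, that difference in when and how data is touched is one reason the two workloads tend to favour different infrastructure.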

Improving the network infrastructure

Without a robust network infrastructure, Big Data may not be half as useful; consequently, this area commands considerable mindshare among senior executives. In fact, more than 40 percent of IT decision makers who participated in a survey conducted by QuinStreet rated increasing network bandwidth as their top priority in preparing infrastructure for Big Data. As the writer points out, most of the tools needed to build Big Data infrastructure are already available, and businesses are preparing well for the challenge.

The true challenge goes beyond capturing and analysing Big Data to understanding what specifically to do with the results of the analysis. The question is whether the network infrastructure is ready for that; it could be the weak link that makes the difference between potential and performance.