**TL;DR**

If you’re in finance, you already know the drill. News breaks fast. Really fast. Markets react even faster. So, trying to keep up with global financial news from sites like Yahoo Finance or Moneycontrol by hand? Honestly, that’s a full-time job—and a frustrating one at that.

Now, here’s where things get easier.

Web scraping companies have quietly become the behind-the-scenes engine for firms that need financial news delivered in real time. Not just from one source, but dozens. Automatically. Cleanly. And in formats that work with your tools.

But not all scraping providers are built the same. Some handle scale better. Others are quicker. A few specialize in finance, which, let’s be real, has its own set of quirks and compliance issues.

So, who should you trust with the job?

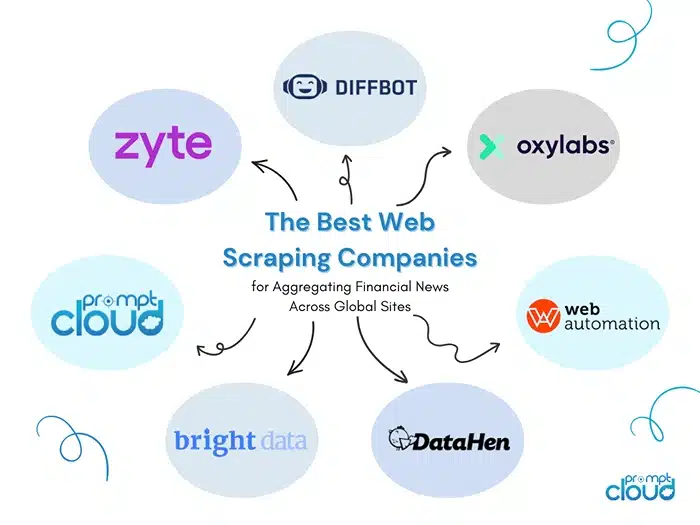

We’ve rounded up the best web scraping companies for pulling financial data and news from across the globe. These aren’t fly-by-night tools; they’re solid partners used by analysts, investment platforms, and fintech teams alike:

- PromptCloud – Built for scale, especially in finance. Offers custom data solutions with ultra-low latency.

- Zyte – You might know them as Scrapinghub. They’ve grown into a serious platform for large-scale scraping.

- Diffbot – This one’s more on the AI side. It turns web pages into structured data using machine learning.

- Oxylabs – Heavy-duty infrastructure. If you need lots of data fast (and clean), they can handle it.

- WebAutomation.io – A plug-and-play tool with templates for scraping financial sites. Great for quick setups.

- DataHen – Known for managed projects. You say what you need; they build it out and keep it running.

- Bright Data – Formerly Luminati. Big on ethical scraping and serious about compliance, which matters in finance.

Whether you’re scanning for shifts in market mood, digging into macro trends, or just trying to stay a few steps ahead with your research, the companies listed here can help you pull in financial news from all corners of the web without having to sit there hitting refresh every few minutes.

Why Aggregating Financial News Is So Difficult Without Web Scraping

Image Source: iwebdatascraping

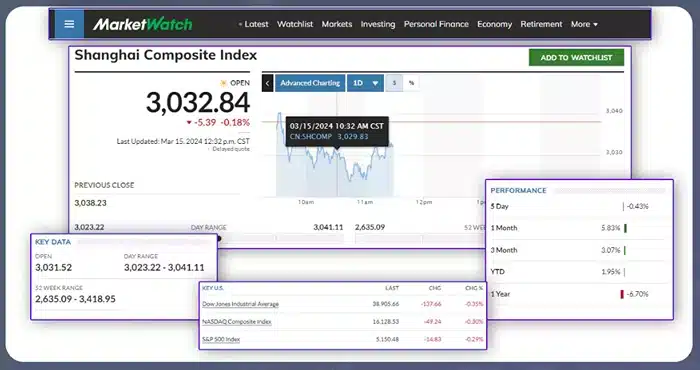

Let’s say you’re a data analyst or managing investments, and your job depends on staying current with what’s happening in the markets. The trouble is, financial news doesn’t arrive in one neat stream. It comes from all over: press releases, company filings, government updates, investor portals, media sites like Yahoo Finance, Moneycontrol, and Morningstar, and even lesser-known regional sources. Some of it is fast. Some of it is slow. And none of it is packaged just the way you need.

If you’ve ever tried to monitor even a handful of these sources manually, you already know the feeling. You’re refreshing browser tabs, switching between sites, and scanning headlines. It’s not only exhausting, it’s unreliable. You miss things. And in finance, what you don’t see can cost you.

Now add scale to that problem. You’re not tracking five sources. You’re following fifty. Maybe a hundred. Different languages. Different time zones. And with updates coming in around the clock, any kind of manual system just falls apart.

Here’s the part people don’t talk about enough: most financial data isn’t structured in a way that’s easy to analyze. A headline on a news site might be useful, but buried in it are dates, figures, and references that need to be pulled out, cleaned, and organized. You can’t plug it straight into a dashboard or alert system without doing some heavy lifting first.
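As a rough illustration of that heavy lifting, here is a minimal Python sketch that pulls percentages, dollar amounts, and ISO-style dates out of a headline with regular expressions. The `HeadlineFacts` class, the `extract_facts` function, and the sample headline are hypothetical names made up for this example. A real pipeline would need many more patterns (other currencies, written-out dates, and so on).

```python
import re
from dataclasses import dataclass, field


@dataclass
class HeadlineFacts:
    """Structured fields pulled out of one raw headline (illustrative only)."""
    text: str
    percentages: list[float] = field(default_factory=list)
    dollar_amounts: list[str] = field(default_factory=list)
    iso_dates: list[str] = field(default_factory=list)


# Simple patterns; they deliberately cover only a few common shapes.
PERCENT_RE = re.compile(r"(-?\d+(?:\.\d+)?)\s*%")
DOLLAR_RE = re.compile(
    r"\$\d+(?:,\d{3})*(?:\.\d+)?(?:\s?(?:million|billion|trillion))?",
    re.IGNORECASE,
)
ISO_DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def extract_facts(headline: str) -> HeadlineFacts:
    """Return the numbers and dates found in a headline as typed fields."""
    return HeadlineFacts(
        text=headline,
        percentages=[float(p) for p in PERCENT_RE.findall(headline)],
        dollar_amounts=DOLLAR_RE.findall(headline),
        iso_dates=ISO_DATE_RE.findall(headline),
    )


# Made-up headline, not taken from any real site.
SAMPLE_HEADLINE = "Acme Corp shares fall 4.5% after $1.2 billion writedown on 2024-03-15"

if __name__ == "__main__":
    print(extract_facts(SAMPLE_HEADLINE))
```

Once the fields are typed like this, they can be filtered, sorted, or charted without anyone re-reading the original text.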

That’s why scraping has become the default for teams that need to track financial news at scale.

A good web scraping setup doesn’t just collect data; it pays attention to the shape of it. It knows how to pick out earnings announcements from filler content. It can watch a page and catch changes the moment they happen. And it doesn’t get tired at 2 a.m. when something major drops from a central bank overseas.

Financial news changes quickly. Sometimes, even the same page gets updated five times in an hour. One line gets added. A sentence is reworded. The tone shifts. And if you’re using slow tools or relying on yesterday’s feeds, you’re behind. Scraping fixes that by checking sources constantly and pulling in the newest version, not just the first one that appeared.
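One common way to notice that a page has been revised is to fingerprint its text and compare fingerprints between visits. The sketch below assumes the page text has already been downloaded and stripped of markup. The `ChangeDetector` class and the example URL are hypothetical, and this is only one of several possible approaches, not a description of how any particular provider does it.

```python
import hashlib


class ChangeDetector:
    """Remembers a hash of each page's text and reports when it differs."""

    def __init__(self) -> None:
        self._last_digest: dict[str, str] = {}

    @staticmethod
    def _normalize(text: str) -> str:
        # Collapse whitespace so pure layout changes are not flagged as edits.
        return " ".join(text.split())

    def has_changed(self, url: str, page_text: str) -> bool:
        """Return True if the text differs from the last version seen for url.

        The first time a URL is seen it counts as changed.
        """
        normalized = self._normalize(page_text)
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        previous = self._last_digest.get(url)
        self._last_digest[url] = digest
        return previous != digest


if __name__ == "__main__":
    detector = ChangeDetector()
    url = "https://news.example.com/central-bank-statement"  # placeholder URL
    print(detector.has_changed(url, "Rates held at 5.25%"))      # first visit
    print(detector.has_changed(url, "Rates  held at\n5.25%"))    # same words
    print(detector.has_changed(url, "Rates cut to 5.00%"))       # real edit
```

Hashing the whole page is coarse: it tells you that something changed, not what. A text diff of the two versions would be the natural next step when the reworded sentence itself matters.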

The real issue isn’t that financial news is hard to find. It’s that there’s too much of it, and most of it arrives in ways that aren’t usable out of the box.

Web scraping, when done right, turns that mess into something you can work with.

What to Look for in a Web Scraping Company When You Need Financial News That’s Fast, Clean, and Constant

If you’re in a role where financial news impacts the decisions you make, or the tools you build, then you already know the value of being early. Not just early to read something, but early to understand it, act on it, and move ahead of your competitors.

Web scraping helps make that possible, but only if you’re working with the right provider. So before signing on with any company, it’s worth asking: do they offer what financial teams actually need?

Here are the key features that matter most when scraping financial news across global sites:

Real-Time Capability

In finance, being fast isn’t just helpful. It’s often the difference between acting in time and missing the moment. News shows up when it wants to, and if you’re even a few minutes late, the market’s already moved on. That can change how people trade, how investors react, and even how entire strategies play out. A scraping partner worth its salt should be able to capture changes on a page as they happen. That means constant monitoring, not just periodic crawls. When a policy update goes live or a central bank drops a new bulletin, you want that data in your hands immediately, not 30 minutes later.

Multi-Source Aggregation

One source of truth? That doesn’t exist in financial news. A meaningful update might appear first on a company’s website, then on Morningstar, followed by a quick mention on Moneycontrol, and maybe a deeper analysis later in a newsletter. Good scraping companies understand that you need broad coverage. They should be able to pull from international outlets, government portals, investor relations pages, financial blogs—you name it. Anything less, and you’re left with gaps in your view.
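When the same story surfaces on several outlets, an aggregation step usually has to merge the feeds and collapse duplicates. Here is a minimal sketch of that idea in Python. It assumes every item is a dict with `source`, `headline`, and `published_at` keys, and that `published_at` is an ISO 8601 UTC string in one consistent format so that plain string comparison orders items correctly. The function names and sample feeds are invented for the example.

```python
import re


def normalize_headline(headline: str) -> str:
    """Lowercase and drop punctuation so near-identical headlines match."""
    return " ".join(re.sub(r"[^a-z0-9 ]", " ", headline.lower()).split())


def merge_feeds(*feeds: list[dict]) -> list[dict]:
    """Merge feeds, keep the earliest copy of each story, sort by time."""
    earliest: dict[str, dict] = {}
    for feed in feeds:
        for item in feed:
            key = normalize_headline(item["headline"])
            seen = earliest.get(key)
            if seen is None or item["published_at"] < seen["published_at"]:
                earliest[key] = item
    return sorted(earliest.values(), key=lambda item: item["published_at"])


# Made-up feeds from two hypothetical sources.
FEED_A = [
    {"source": "SiteA", "headline": "Acme Corp beats Q1 estimates",
     "published_at": "2024-03-15T09:31:00Z"},
]
FEED_B = [
    {"source": "SiteB", "headline": "ACME Corp Beats Q1 Estimates!",
     "published_at": "2024-03-15T09:30:00Z"},
    {"source": "SiteB", "headline": "Central bank holds rates",
     "published_at": "2024-03-15T08:00:00Z"},
]

if __name__ == "__main__":
    for item in merge_feeds(FEED_A, FEED_B):
        print(item["published_at"], item["source"], item["headline"])
```

Exact matching on a normalized headline only catches near-verbatim copies. Rewritten coverage of the same event would need fuzzier matching, which is outside this sketch.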

Structured Data Delivery

Pulling data off a page is one thing. Getting it in a format that actually makes sense to work with? That’s something else entirely. If you’re spending your morning fixing broken rows or trying to decode unstructured blobs, the time cost adds up fast. That’s why it matters whether your scraping provider sends you clean, usable data—something you can feed into your tools right away, like properly formatted JSON or CSV. The more work they do on their end, the less you have to patch on yours. This isn’t just about convenience. Structured delivery lets you plug scraped data directly into your analytics tools, dashboards, or internal systems with zero friction.
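To make "properly formatted JSON or CSV" concrete, here is a small sketch that serializes the same records both ways using only Python's standard library. The field names in `FIELDS` and the sample record are assumptions for illustration, not a schema any provider is known to use.

```python
import csv
import io
import json

# Hypothetical schema for one scraped news item.
FIELDS = ["published_at", "source", "ticker", "headline"]


def to_json(records: list[dict]) -> str:
    """Serialize records as a JSON array, keeping non-ASCII text readable."""
    return json.dumps(records, indent=2, ensure_ascii=False)


def to_csv(records: list[dict]) -> str:
    """Serialize records as CSV with a header row and a fixed column order."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Made-up record; note the comma inside the headline.
SAMPLE_RECORDS = [
    {
        "published_at": "2024-03-15T09:30:00Z",
        "source": "ExampleWire",
        "ticker": "ACME",
        "headline": "Acme beats estimates, raises guidance",
    }
]

if __name__ == "__main__":
    print(to_json(SAMPLE_RECORDS))
    print(to_csv(SAMPLE_RECORDS))
```

The `csv` module quotes the headline automatically because it contains a comma, which is exactly the kind of detail that produces "broken rows" when CSV is assembled by hand.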

Compliance and Legal Awareness

There’s no point pretending this part doesn’t matter. Scraping financial sites isn’t just a technical job—it comes with legal responsibilities. Any provider worth working with should be upfront about how they operate. That includes how they follow site rules, what they do when a page restricts access, and whether they stay within privacy laws. If you ask about this and get vague answers or a quick subject change, it’s probably not someone you want managing your data flow. In finance, risk management applies to your data pipelines too.

Customization and Flexibility

Financial professionals don’t all want the same kind of feed. A trading platform might need sentiment-rich commentary tied to individual stocks. A hedge fund might be tracking macroeconomic indicators by country. And a fintech app might just need concise daily summaries pulled from trusted sources. The best scraping companies don’t offer cookie-cutter packages—they offer tailored solutions. That’s what allows your data operation to actually support your goals, not work against them.

The Best Web Scraping Companies for Aggregating Financial News Across Global Sites

If you’ve been trying to track financial news across multiple regions and platforms, you already know how messy and inconsistent the landscape can be. From Yahoo Finance to Moneycontrol and Morningstar, every site has its quirks, and getting that information in one place without constant manual effort isn’t something just any scraping vendor can pull off.

Below are a few companies that folks in finance tend to turn to when the usual APIs and dashboards don’t cut it. Some are better for high-frequency data. Others are more flexible with niche coverage or edge cases. But all of them have shown they know how to handle financial content at scale.

PromptCloud

PromptCloud doesn’t advertise itself the way some others do, but that’s mostly because we’re busy building very custom setups for clients who need precision. If you’re dealing with financial content, especially from global sources, we’re one of the few teams that will take the time to get the whole thing tailored.

We usually build the kind of systems that just hum along in the background—quiet, reliable, not needing constant attention. If you’ve ever had to track a long list of sources spread across different countries, or sites that randomly change layout overnight, you’ll get why that matters. Our clients usually want more than just headlines—they’re after structured formats, ticker-specific context, and minimal delay between publishing and delivery.

Zyte

Zyte has been around a while. If you’ve done any scraping yourself, you’ve probably heard of Scrapy—the open-source framework they created. What they’ve built on top of that is something more enterprise-focused, with tools that help users deal with all the things that make large-scale scraping painful.

For financial content, especially if you’re pulling from complex or protected sites, Zyte can be a smart option. They’ve got rotating proxies, browser automation, and systems that retry intelligently when a page fails to load or blocks a request. They also let you focus on the data rather than worrying about the plumbing behind it.
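For readers curious what "retrying intelligently" can look like in general, a common pattern is exponential backoff with jitter: wait longer after each failure and add a little randomness so retries do not arrive in lockstep. The sketch below is a generic illustration, not Zyte's implementation. `TransientFetchError`, `fetch_with_retries`, and the injected `fetch` callable are hypothetical names.

```python
import random
import time
from typing import Callable


class TransientFetchError(Exception):
    """Hypothetical error a fetcher raises for timeouts or temporary blocks."""


def fetch_with_retries(
    fetch: Callable[[str], str],
    url: str,
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(url), retrying transient failures with growing delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except TransientFetchError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ...
            # Jitter of up to half the delay spreads retries out in time.
            sleep(delay + random.uniform(0, delay / 2))
    raise ValueError("max_attempts must be at least 1")
```

Passing `sleep` in as a parameter is a design choice for testability: a test can record the waits instead of actually pausing.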

Diffbot

Diffbot works differently from most scraping providers. They’re not writing crawlers by hand—instead, they use AI to scan web pages and recognize patterns, kind of like a person reading a page would. That might sound abstract, but it becomes really useful when you’re pulling from news sources with strange layouts or inconsistent article structures.

What people like about Diffbot, particularly in financial analytics, is its ability to find relevant entities in a story and tie them together. If a company is mentioned alongside a product launch and a market reaction, Diffbot’s tools can often pull that into a format that makes analysis easier later on.

Oxylabs

Oxylabs has built its reputation mostly on infrastructure. They’ve got a huge proxy network and tools meant for users who need large amounts of data quickly. If you’re part of a team that’s collecting updates in real time from dozens of financial portals, you’ve likely run into Oxylabs—or a competitor trying to be like them.

They’ve also become more visible lately for their attention to compliance, which is a big deal in finance. It’s not just about what you can scrape—it’s about how responsibly you do it.

WebAutomation.io

This is a good choice if you’re in a hurry or don’t want to build an entire scraping setup from the ground up. They offer pre-configured templates for many well-known financial sites, and while it might not cover everything under the sun, it’s enough for getting started without technical headaches.

It’s especially handy for people at smaller firms or startups where speed matters more than having something custom. You can point and click your way to useful data and start testing ideas without waiting on a long development cycle.

DataHen

Let’s say you know what kind of data you’re after, but your team’s already stretched thin. That’s where DataHen usually comes in—they’ll take the whole scraping piece off your plate. You’re not writing scripts or worrying about schedulers; they manage the nuts and bolts and just send the data the way you need it.

They’ve worked with financial data projects that involve not just pulling articles or releases, but also keeping track of changes to the same page over time. For analysts and research teams, that kind of detail can be more valuable than just knowing what got published.

Bright Data

Bright Data is best known for its proxy infrastructure, which is massive. That alone makes it popular with large organizations, especially those that need to access websites without getting blocked. But in recent years, they’ve also developed tools that help users extract web data more easily—and legally.

What stands out here is how transparent they are. If your legal team needs clarity on how the data is sourced or how user privacy is handled, Bright Data tends to have answers at the ready. In finance, where compliance isn’t optional, that’s not a small thing.

Choosing the right provider isn’t always straightforward. Maybe speed is your top priority. Or maybe you care more about flexibility or getting deep coverage across different regions. Either way, what really counts is whether the company you go with understands the pressure you’re under, especially when one piece of news can shift the entire market.

How These Services Support Financial Data Analytics and Decision Making

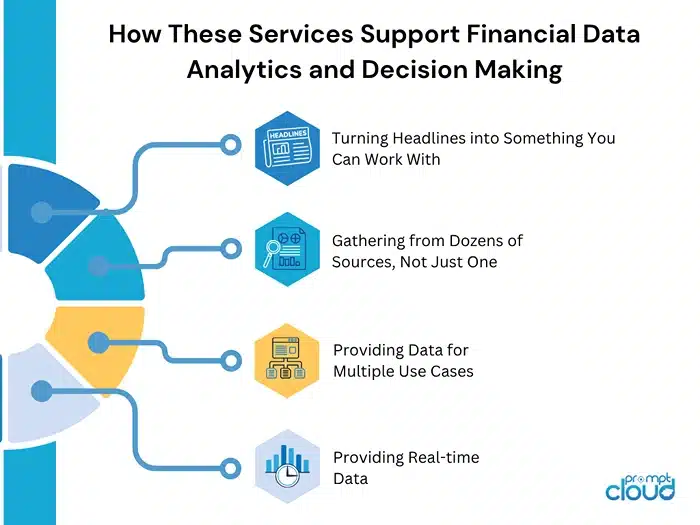

If you’re working in finance, you’re probably drowning in information. News, reports, filings, commentary—it never stops. But grabbing that data is one thing. Making sense of it, fast, is another. That’s where these scraping tools start to matter. They don’t just fetch headlines. They help teams keep their edge by turning scattered updates into usable inputs that actually support smart decisions.

Turning Headlines into Something You Can Work With

Getting news is easy. Getting the right parts of it, in the right format, at the right time—that’s where things get messy. Most financial scraping setups are designed to do exactly that: take bits and pieces from dozens of sources and turn them into structured, machine-readable formats. Not for the sake of neatness, but so the data can feed straight into your dashboards, models, or reports.

And the less time your team spends cleaning it up, the more time you get back to figure out what matters.

Gathering from Dozens of Sources, Not Just One

There’s no single place where financial news lives. A price shift might be explained by something in a niche regional blog or buried in an official PDF from a regulator. That’s why relying on just one or two sources won’t cut it.

Scraping lets you pull from all over—Yahoo Finance, Morningstar, government bulletins, market commentary, and more. And because it runs on your schedule, not theirs, you’re not stuck waiting for updates to trickle in through official feeds.

Where This Data Actually Gets Used

You’ll see this kind of data being used in all sorts of ways. A hedge fund might be scraping short market updates across Asia before the U.S. markets even open. A fintech product could use it to notify users when a stock in their portfolio makes headlines. A research team might track how often a company gets mentioned in news reports versus actual earnings changes.
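The portfolio-alert use case boils down to checking each incoming headline against a watchlist. A minimal sketch, assuming the watchlist maps each ticker to a list of company-name aliases (both the structure and the sample names are made up):

```python
import re


def mentioned_tickers(headline: str, watchlist: dict[str, list[str]]) -> list[str]:
    """Return watchlist tickers whose symbol or company alias appears.

    Symbols are matched case-sensitively as whole words, since a symbol
    such as "ALL" would otherwise match the ordinary word "all".
    Aliases are matched case-insensitively as whole words.
    """
    hits = []
    for ticker, aliases in watchlist.items():
        symbol_hit = re.search(rf"\b{re.escape(ticker)}\b", headline)
        alias_hit = any(
            re.search(rf"\b{re.escape(alias)}\b", headline, re.IGNORECASE)
            for alias in aliases
        )
        if symbol_hit or alias_hit:
            hits.append(ticker)
    return hits


# Hypothetical watchlist and headline.
WATCHLIST = {"ACME": ["Acme Corp"], "GLBX": ["Globex"]}
SAMPLE = "Globex raises full-year outlook as ACME delays launch"

if __name__ == "__main__":
    print(mentioned_tickers(SAMPLE, WATCHLIST))
```

Plain string matching like this will miss indirect references ("the iPhone maker") and can still produce false positives, so treat it as a starting point rather than a finished matcher.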

It’s not about having more data. It’s about using the right data at the right moment—whether that’s live or for historical analysis.

Why Timing Still Wins

You can’t make a move if you’re still waiting for the numbers to show up. A headline that goes unnoticed for 20 minutes can already be priced in by the time you act. And if your team’s working with outdated inputs, your decisions could be off by more than you realize.

Scraping doesn’t just help you collect data—it helps you stay ahead. Because in this space, a few minutes of delay can mean you’re already behind.

Why PromptCloud Stands Out for Financial News Aggregation

If you’ve looked into scraping providers, you’ll notice a lot of them promise the same things—speed, structure, scale. And many of them do a decent job. But in financial services, where the smallest delay or inconsistency can throw off your models, “decent” doesn’t quite cut it.

That’s one of the reasons PromptCloud keeps coming up in conversations with teams that need more than just basic scraping. Their strength isn’t just in pulling data—it’s in working with clients who have specific goals, complex requirements, and very little room for error.

Built for Custom Workflows

Most off-the-shelf scraping tools don’t play well with edge cases. They can pull headlines, sure, but ask them to handle five regional versions of a news site with slightly different layouts—or to monitor for changes in a financial statement PDF—and they start to wobble.

What PromptCloud does differently is build around those challenges. They work closely with clients to design setups that match the actual workflow, whether that’s tracking commodity updates across different time zones or syncing news to specific stock tickers. It’s not a plug-and-play service—it’s more like a tailor-made pipeline that runs quietly in the background.

Real-Time Feeds

In markets, being first often beats being thorough, but you really need both. PromptCloud understands this. Their systems are set up to minimize latency without flooding you with irrelevant content. So if you need structured data from Moneycontrol five seconds after it goes live, they can handle that. But you won’t be stuck filtering out the fluff either.

What this means in practice: less time cleaning data, more time acting on it.

Flexible Delivery Options

Not every team runs the same kind of stack. Some want data piped directly into a data warehouse via API. Others prefer clean CSV drops into cloud storage. And some just want a simple feed they can monitor and test internally.

PromptCloud adapts to that. Instead of asking you to fit into their process, they shape the delivery around yours. That’s something that seems small on the surface, but in fast-paced financial operations, it makes everything smoother.

Used by Teams That Can’t Afford Misses

This is probably the most telling part. The firms using PromptCloud aren’t casual browsers—they’re investment platforms, hedge funds, and data vendors that depend on constant, reliable access to the right kind of financial information. You don’t stick around in that crowd unless your data holds up under pressure.

So if you’re building something that depends on accurate, real-time financial news—and you can’t afford to guess or wait—PromptCloud is one of the few providers designed to meet that kind of demand.

Getting Started with Financial News Scraping: What You Need to Know

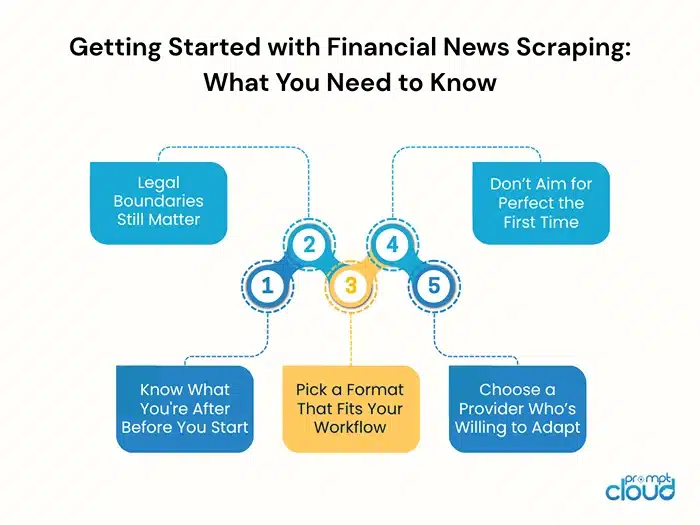

Jumping into financial web scraping might sound technical at first, but it’s really more about clarity. Nobody expects you to code the whole thing yourself. That’s not the job. What really helps is knowing, ahead of time, what kind of data would actually make a difference for your team, and what you’d want to do with it once you’ve got it.

Know What You’re After Before You Start

The first thing to figure out isn’t which platform or tool to use. It’s your end goal. What’s the outcome you’re aiming for? Maybe it’s an internal alert system when a stock gets mentioned. Or maybe you’re tracking how news spreads across markets over a 24-hour window.

Without a solid idea of what you’ll do with the data, the scraping itself can quickly become busywork. The more specific your purpose is, the easier it becomes to build something that fits.

Legal Boundaries Still Matter

Scraping has its limits, legally speaking. Just because something’s public doesn’t mean it’s up for grabs. Sites have terms. Some expect you to pull gently. Some flat-out restrict it. A good provider will already know how to stay on the safe side, without putting your team—or theirs—at risk.

Most serious providers know where the boundaries are. They won’t overload sites, and they’ll respect the rules that come with public data. If a scraping service tells you to ignore all that and just “go get it,” that’s probably not someone you want to build a long-term project with.
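One concrete piece of "respecting the rules" is reading a site's robots.txt before fetching anything. Python's standard library ships a parser for it. The sketch below feeds the parser a made-up robots.txt so it runs offline. The bot name and URLs are placeholders, and robots.txt is only one signal: a site's terms of service still apply.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Invented robots.txt for illustration; real sites publish their own.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10
"""

BOT_NAME = "example-news-bot"  # hypothetical user agent
parser = build_parser(SAMPLE_ROBOTS_TXT)

if __name__ == "__main__":
    for url in (
        "https://news.example.com/markets/today",
        "https://news.example.com/private/report",
    ):
        print(url, "allowed:", parser.can_fetch(BOT_NAME, url))
    # Seconds the site asks crawlers to wait between requests, if stated.
    print("crawl delay:", parser.crawl_delay(BOT_NAME))
```

Honoring the crawl delay is the code-level version of "pulling gently": the scraper sleeps at least that long between requests to the same site.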

Pick a Format That Fits Your Workflow

You’ll need to decide how the data should come in. Should it be JSON over an API? Spreadsheets once a day? A feed you can plug into a tool like Tableau or Power BI?

What’s helpful for one group can be a bottleneck for someone else. If you’re working with dashboards or visuals, you’ll probably want clean, labeled fields that plug in without much cleanup. If you’re testing models, raw text or logs may be a better place to start. Talk it through with whoever’s helping you build the system, because format isn’t just a technical choice; it’s a strategic one.

Don’t Aim for Perfect the First Time

Here’s something people don’t mention enough: your first setup won’t be flawless. That’s fine. In fact, it’s normal.

Sites change. Feeds break. You’ll realize some data isn’t that useful, and something you overlooked ends up being crucial. So start small. Monitor a few sources. Adjust. Then expand once you know what’s working. The more you treat this like a living process, the better it holds up in the long run.

Choose a Provider Who’s Willing to Adapt

Support matters. Especially when you’re dealing with financial data that updates constantly, often without warning. You want someone who can keep up, who’ll flag changes when a site layout shifts or a news section goes missing.

It’s not about finding the biggest platform with the most features. It’s about working with someone who pays attention, checks in, and helps you adjust when your needs grow or change.

Better Financial Decisions Start With Better Data

Financial news doesn’t wait around. A report drops, a comment spreads, and before you’ve had time to refresh a page, the markets have already moved. That’s just how it goes.

Trying to stay ahead of that without the right systems? It’s tough. You could assign someone to sit and watch a dozen financial sites all day, every day—and things would still slip through. That’s just the reality. It’s one of the reasons more teams have started relying on scraping. Not because it’s some cutting-edge trend, but because it does the job without drawing attention to itself. It keeps running, pulls what’s useful, and shows up when you need it.

The right setup doesn’t just give you a bunch of articles. It gives you structure. It gives you timing. And more than anything, it gives you breathing room—so your team can spend less time chasing updates and more time using them.

It’s not about doing everything at once. Start where the gaps are. Work with someone who listens. Then, the next time something big hits the wires, you won’t be catching up after the fact. You’ll already be looking at it, in real time, just as it starts to move the market. Schedule a demo now!

FAQs

1. What’s the best way to collect financial news from multiple global sources?

If you’ve ever tried tracking financial updates across a bunch of different websites, you know how scattered and time-consuming it gets. Scraping tools solve that by pulling news from sites like Yahoo Finance or Moneycontrol and sending it all to you in one place. Instead of checking each site manually, you get the updates organized and ready to use—often in a format your team can plug straight into whatever system you’re already using.

2. Is it legal to scrape financial news websites?

It depends on how it’s done and where you’re pulling the data from. Some sites allow it, others don’t—and many have terms that fall somewhere in the middle. The key is to scrape responsibly. That means not overloading servers, avoiding anything behind a login, and following each site’s published rules. Most top scraping providers know how to work within those boundaries and will help you stay clear of any legal trouble.

3. How fast can I get financial news updates through a scraping provider?

That depends on how things are set up. Some scraping services can catch and deliver updates almost the moment they go live—just seconds after a news article is published or a press release drops. It’s not instant in every case, but it’s usually a whole lot faster than checking feeds yourself or relying on delayed API updates.

4. Who actually uses this kind of scraping?

A wide range of teams rely on it. Hedge funds use scraping to stay ahead of market sentiment. Fintech platforms use it to feed financial news into user-facing tools. Even traditional research teams pull structured data from scraping pipelines to build models or track long-term trends. If financial news plays a role in your work, scraping probably fits.

5. Why is PromptCloud a strong choice for financial data scraping?

PromptCloud does things a bit differently from the typical plug-and-play platforms. Instead of giving you a dashboard and expecting you to adjust, they build around what you need. If your goal is to track financial stories tied to specific companies or sectors—and get the data in a format that fits your workflow—they’ll set that up for you. It’s less about pre-set features and more about solving the problem you actually have.