What is Data Extraction – Tools and Techniques for Data...

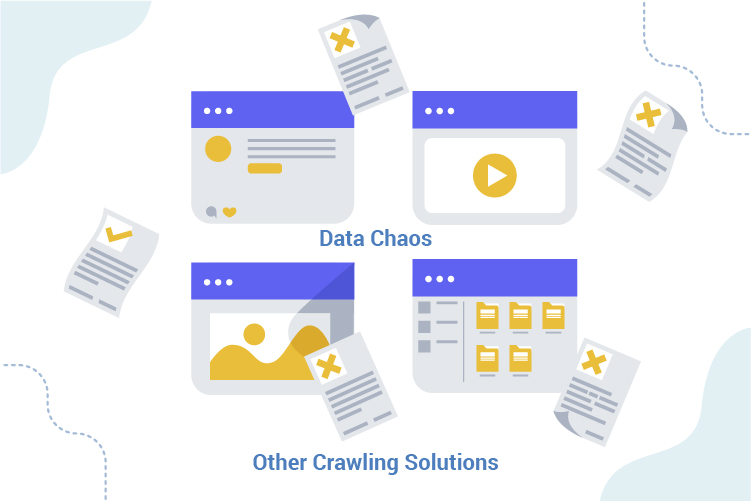

Data extraction tool plays a crucial role in today’s data-driven world, where organizations rely on large volumes of data to

PromptCloud Inc, 16192 Coastal Highway, Lewes, DE 19958, USA

We are available 24/7 at marketing@promptcloud.com.

Our web scraping services are fully customizable. You can change the source websites, the frequency of data collection, the data points being extracted, and the data delivery mechanism to fit your specific requirements.

The data-aggregation feature of our web crawler helps clients get data from multiple sources in a single stream. Companies of many kinds use this feature, from news aggregators to job boards.

We provide fully customized solutions for companies that want to leverage data from websites. Whether you are building DIY solutions, spotting trends, or building predictive engines, we help you uncover opportunities.

From low-latency data feeds to highly scalable infrastructure, all solutions run in the cloud, and you can rest assured that even the smallest website changes will be tracked and accommodated automatically.

Our automated web scraping solutions can be implemented in a short period of time, with our core focus on acquiring quality data and on speed of implementation.

Our ready-to-use crawler recipes draw on extensive experience building large-scale crawlers for multiple clients across different verticals.

PromptCloud solutions can fulfill your custom and large-scale requirements, even on complex sites, without any need for you to write code.

We innovate and invest resources in web crawling techniques so that you get the benefit of high-quality data.

Explore the many areas where PromptCloud can help your company grow through a deeper understanding of data insights and analytics. We use technologies such as a live crawler powered by artificial intelligence and machine learning techniques. You receive this seamless flow of quality data at the frequency and in the format you need.

PromptCloud offers managed data scraping services. From setting up crawlers to data normalisation, we ensure that the harvested data meets your quality standards.

We work under strong SLAs to provide prompt support and deliver more than we promise. Our data wizards are obsessed with making clients happy!

Irrespective of the complexity of your data requirements, our web crawlers are flexible enough to deliver customized data feeds by making use of the best web scraping techniques.

Our high-performance machines and optimized scraping techniques ensure that scrapes run smoothly and data is delivered on schedule.

Need a customized external data feed running into the millions? We've got you covered. With our strong web scraping infrastructure and technical expertise, we help you scale with your data.

Our sophisticated monitoring system keeps track of the slightest change in the site structure and alerts the engineering team so that swift updates can be made.

Unrivalled domain expertise and innovative thinking make us the right partner for your web scraping services and external data requirements.

To start your data mining project, tell us the sites to be crawled, the fields to be extracted, and the desired frequency of these crawls.

Based on your requirements, we'll set up the web crawler and provide sample data. You then validate the data and the data fields present in the sample file.

Once you approve, we'll finalize the crawler setup, proceed with the web scraping project, and upload the data.

Finally, you'll download the data either directly from CrawlBoard or via our API in XML, JSON, or CSV format. Data can also be uploaded to your Amazon S3, Dropbox, Google Drive, or FTP accounts.

We meet all your data mining requirements.

I have used PromptCloud for my business, and was very happy with the experience. PromptCloud’s customer support was excellent and they worked with me to ensure the data harvested was exactly what I needed.

PromptCloud has provided us with excellent data quality for many years. They are our first choice when it comes to getting accessible data from the internet. I highly recommend them; they are indeed the best web crawlers.

PromptCloud provides an excellent data quality service at highly competitive pricing. Their web scraping service quality allowed our engineers to concentrate on the projects closer to the core of the business, with the security of knowing PromptCloud is servicing these other projects with the same level of rigour and precision we’d expect from our core team.

News from the world of data mining, and how different industries can use web scraping solutions.

Adjusting prices based on market demands is not a new concept, but it is more relevant than ever. Not that
