Analyzing search behavior highlights changes in preferences, product demand, and overall market activity. Notably, 68% of all online activities start with a search engine, which makes search data invaluable for business intelligence. (Source: BrightEdge)

Historically, businesses have relied on SEO analytics and keyword-tracking tools to monitor broader market search behaviors. These tools, however, often provide aggregated data that lags behind real-time market changes, especially for niche markets or specific geographic locations.

A search engine crawler can remedy this. Tailor-made web crawlers give businesses up-to-date information on their industry’s search patterns by systematically gathering and analyzing the results that search engines display.

Businesses can now move from depending on rigid, wide-ranging reports to search engine crawling methods that track keyword variations, monitor competitor rankings, and reveal how search queries shift over time.

In this article, we will analyze how web crawling enables businesses to monitor search trends, why it is preferred over traditional methods, and how employing SEO rank APIs along with local SERP trackers provides a holistic view of market trends.

What is a Search Engine Crawler and How Does It Work?

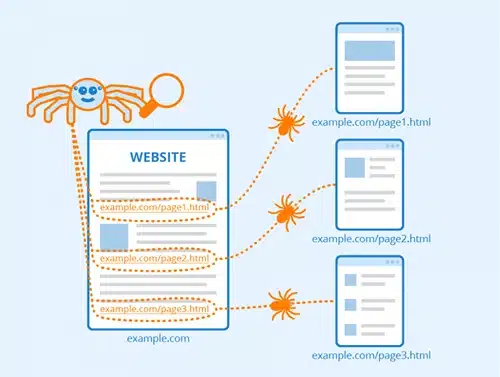

Image Source: seobility

Simply put, a search engine crawler is software that automatically navigates the internet to gather data. It is often called a bot or spider. Search engines like Google and Bing use crawlers to discover and index web pages. However, businesses can also deploy them to extract industry-specific search trends.

The process of search engine crawling is:

- Crawling – The web crawler starts with an initial (seed) list of URLs and follows the links it finds to retrieve new pages.

- Data Extraction – While navigating a site, the crawler collects relevant information such as keywords, metadata, rankings, and content order.

- Indexing & Analysis – The collected data is stored and checked for consistency, then analyzed for keyword frequency and ranking trends to identify patterns.

This methodology can be tailored to an organization’s needs to track particular competitor URLs, monitor targeted SERPs, and observe keyword metrics. For example, an eCommerce business could run a search engine crawler that monitors how often certain product-related searches occur and how competitors optimize their results pages.
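The crawl, extract, and analyze steps can be illustrated with a minimal Python sketch. It is an illustration under stated assumptions, not a production crawler: the `PAGES` dictionary is a hypothetical in-memory stand-in for real HTTP fetching, and the names `PageParser` and `crawl` are made up for this example.

```python
from collections import Counter, deque
from html.parser import HTMLParser

# Hypothetical in-memory "web" (URL -> HTML); a real crawler would fetch over HTTP.
PAGES = {
    "https://example.com/": '<title>Running shoes</title><a href="https://example.com/trail">Trail</a>',
    "https://example.com/trail": '<title>Trail running shoes</title><a href="https://example.com/">Home</a>',
}


class PageParser(HTMLParser):
    """Data extraction: collects the page title and outgoing links."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def crawl(seed_urls, fetch):
    """Crawling: breadth-first traversal from the seed URLs; returns {url: title}."""
    queue = deque(seed_urls)
    seen = set(seed_urls)
    titles = {}
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page not available
            continue
        parser = PageParser()
        parser.feed(html)
        titles[url] = parser.title
        for link in parser.links:
            if link not in seen:  # avoid revisiting pages
                seen.add(link)
                queue.append(link)
    return titles


# Analysis: count how often each word appears across the crawled titles.
titles = crawl(["https://example.com/"], PAGES.get)
keyword_counts = Counter(word.lower() for t in titles.values() for word in t.split())
print(keyword_counts.most_common(3))
```

Passing `fetch` as a parameter keeps the traversal logic separate from network access, so the same loop could be pointed at a real HTTP client later.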

Why Tracking Industry-Specific Search Trends Matters

For most businesses, being able to anticipate changes to search trends can provide valuable insights into their competitors’ strategic moves and help inform their own operational decisions. For these reasons, it is important to analyze such trends:

1. Identifying Emerging Market Trends

What people search for online is usually a good indicator of what they will want soon, so shifts in search behavior often signal upcoming changes in demand.

Rising search volume for terms such as “sustainable fashion” or “AI-powered analytics” is a strong sign that the industry is changing direction. Businesses that identify such shifts first can market their products more effectively.
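One simple way to spot a rising term is to compare its latest search volume against the average of earlier periods. The sketch below assumes volumes have already been collected per keyword; the function name `rising_keywords`, the 50% threshold, and the sample numbers are illustrative, not real data.

```python
def rising_keywords(volumes, min_growth=0.5):
    """Return keywords whose latest volume exceeds the earlier average by min_growth.

    volumes: {keyword: [volume_period_1, ..., volume_latest]}
    min_growth: fractional growth threshold (0.5 means +50%).
    """
    rising = {}
    for keyword, series in volumes.items():
        if len(series) < 2:
            continue  # not enough history to compare
        earlier = series[:-1]
        baseline = sum(earlier) / len(earlier)
        if baseline == 0:
            continue  # avoid division by zero
        growth = (series[-1] - baseline) / baseline
        if growth >= min_growth:
            rising[keyword] = round(growth, 2)
    return rising


# Illustrative monthly search volumes (made-up numbers).
monthly_volumes = {
    "sustainable fashion": [1000, 1100, 1200, 2200],
    "fast fashion": [5000, 4900, 5100, 5000],
}
print(rising_keywords(monthly_volumes))
```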

2. Enhancing SEO and Content Strategy

An organization’s SEO strategy must focus on one clear objective: to optimize for current trends and adapt as they change. Businesses that lack SEO tracking crawler tools cannot monitor the movement of the keywords they want to rank for, and so lose relevance.

If a competitor gains rankings for key industry terms, businesses need to find out what changed: was it content, backlinks, or technical updates?

3. Gaining Competitive Intelligence

Monitoring competitors’ performance for certain keywords can reveal gaps and opportunities. A local SERP tracker shows where local competitors rank in search results, helping businesses refine their regional SEO efforts.

4. Improving Advertising and Paid Search Efforts

Search trends do not affect organic rankings alone; they also affect paid search campaigns. Businesses that spot trends in industry keywords early can adjust their PPC spending before competition for the best keywords increases.

How Web Crawlers Track Search Trends in Real Time

Web crawlers allow businesses to monitor search trends dynamically, without relying on third-party reports. Here’s how they help:

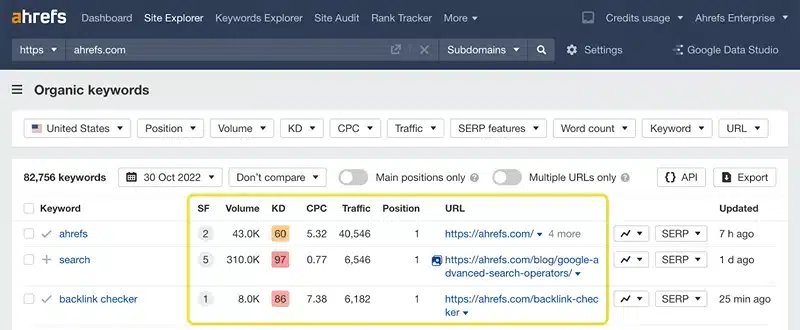

1. Automated Keyword Position Tracking

Instead of checking each query manually on a search engine, web crawlers track keyword positions across multiple search engines. A search engine crawler can track rankings and report significant changes at set intervals.

Image Source: AHREFS
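As a rough illustration of automated position tracking, the following sketch compares two ranking snapshots and reports keywords that moved by at least a chosen number of positions. The snapshot format (`{keyword: position}`), the function name `rank_changes`, and the sample values are assumptions made for this example.

```python
def rank_changes(previous, current, threshold=3):
    """Compare two {keyword: position} snapshots.

    Returns {keyword: delta} for keywords that moved by at least `threshold`
    positions. A positive delta means the keyword moved up (improved).
    """
    changes = {}
    for keyword, new_position in current.items():
        old_position = previous.get(keyword)
        if old_position is None:
            continue  # newly tracked keyword, nothing to compare against
        delta = old_position - new_position
        if abs(delta) >= threshold:
            changes[keyword] = delta
    return changes


# Illustrative snapshots (made-up positions).
last_week = {"crm software": 8, "sales pipeline tool": 4, "lead scoring": 15}
this_week = {"crm software": 3, "sales pipeline tool": 5, "lead scoring": 21}
print(rank_changes(last_week, this_week))
```

Running a comparison like this on a schedule is one way to turn raw crawl output into alerts on significant movements.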

2. Competitor Benchmarking with Web Crawling

Once a competitor’s website and SERPs have been crawled, companies can see which keywords the competitor ranks for and use them as a guide for adjusting their own SEO, digital content, and advertising campaigns.
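A basic form of competitor benchmarking is a keyword gap check: which keywords does a competitor rank well for where your own site does not? The sketch below assumes both sets of rankings are available as `{keyword: position}` dictionaries; `keyword_gap` and the top-10 cutoff are illustrative choices.

```python
def keyword_gap(own_rankings, competitor_rankings, top_n=10):
    """Return keywords where the competitor ranks within top_n and we do not."""
    gaps = []
    for keyword, competitor_position in competitor_rankings.items():
        own_position = own_rankings.get(keyword)  # None if we do not rank at all
        competitor_in_top = competitor_position <= top_n
        we_are_outside = own_position is None or own_position > top_n
        if competitor_in_top and we_are_outside:
            gaps.append(keyword)
    return sorted(gaps)


# Illustrative rankings (made-up positions).
own = {"project management app": 4, "kanban board": 25}
competitor = {"project management app": 6, "kanban board": 3, "gantt chart tool": 2}
print(keyword_gap(own, competitor))
```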

3. Localized Search Insights with a Local SERP Tracker

For firms operating in several geographic locations, local variations in search matter. A local SERP tracker reveals region-specific trends by comparing tracked rankings across regions, surfacing differences that global search data may overlook.
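To make the regional comparison concrete, here is a small sketch that flags keywords whose ranking differs widely between regions. The nested data shape, the function name `regional_outliers`, the five-position gap, and the sample cities are all assumptions for illustration.

```python
def regional_outliers(rankings_by_region, min_gap=5):
    """Find keywords whose best and worst regional positions differ by min_gap or more.

    rankings_by_region: {keyword: {region: position}}
    Returns {keyword: (best_region, worst_region)}.
    """
    outliers = {}
    for keyword, by_region in rankings_by_region.items():
        best_region = min(by_region, key=by_region.get)   # lowest position number
        worst_region = max(by_region, key=by_region.get)  # highest position number
        if by_region[worst_region] - by_region[best_region] >= min_gap:
            outliers[keyword] = (best_region, worst_region)
    return outliers


# Illustrative local rankings (made-up positions).
local_rankings = {
    "emergency plumber": {"Austin": 2, "Dallas": 14, "Houston": 4},
    "water heater repair": {"Austin": 5, "Dallas": 6, "Houston": 7},
}
print(regional_outliers(local_rankings))
```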

4. Analyzing Seasonal and Trending Keywords

Many industries see heightened demand during certain periods: retail has its holiday sales, and searches for “home workout equipment” spike in January. Web scraping tools can track these trends, allowing firms to adjust their marketing efforts accordingly.
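One hedged way to detect seasonality in collected data is to flag months whose search volume sits well above the yearly average. In the sketch below, `seasonal_peaks`, the 1.5x factor, and the volume figures are illustrative assumptions rather than measured values.

```python
from statistics import mean


def seasonal_peaks(monthly_volume, factor=1.5):
    """Return months whose volume is at least `factor` times the yearly mean.

    monthly_volume: {month_name: search_volume}
    """
    average = mean(monthly_volume.values())
    return [month for month, volume in monthly_volume.items() if volume >= factor * average]


# Illustrative volumes for "home workout equipment" (made-up numbers).
home_workout = {
    "Jan": 3000, "Feb": 1000, "Mar": 1000, "Apr": 1000,
    "May": 1000, "Jun": 1000, "Jul": 1000, "Aug": 1000,
    "Sep": 1000, "Oct": 1000, "Nov": 1000, "Dec": 1000,
}
print(seasonal_peaks(home_workout))
```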

5. Monitoring Search Engine Algorithm Updates

Search engines frequently update their ranking algorithms, which affects the visibility of websites in search results. A search engine crawler can detect shifts in ranking positions that often indicate an update.
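A simple proxy for spotting a possible algorithm update is ranking volatility: the average position change across all tracked keywords between two consecutive snapshots. The sketch below is one possible heuristic, not a definitive detection method; the function names, the threshold of 3.0, and the dates and positions are hypothetical.

```python
def volatility(previous, current):
    """Mean absolute position change across keywords present in both snapshots."""
    shared = previous.keys() & current.keys()
    if not shared:
        return 0.0
    return sum(abs(previous[k] - current[k]) for k in shared) / len(shared)


def flag_volatile_days(snapshots, threshold=3.0):
    """Return dates whose volatility versus the previous snapshot meets the threshold.

    snapshots: list of (date, {keyword: position}) tuples ordered by date.
    """
    flagged = []
    for (_, previous), (date, current) in zip(snapshots, snapshots[1:]):
        if volatility(previous, current) >= threshold:
            flagged.append(date)
    return flagged


# Illustrative daily snapshots (made-up dates and positions).
daily_snapshots = [
    ("2024-03-01", {"a": 1, "b": 5, "c": 10}),
    ("2024-03-02", {"a": 1, "b": 6, "c": 10}),
    ("2024-03-03", {"a": 6, "b": 1, "c": 15}),
]
print(flag_volatile_days(daily_snapshots))
```

A spike across many unrelated keywords on the same day is more suggestive of an update than a change in a single keyword, which is why the metric averages over the whole tracked set.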

SEO Rank APIs for Deeper Insights

While web crawlers collect raw data, integrating an SEO rank API enhances analysis by providing:

- Real-time keyword ranking updates across multiple search engines.

- Historical data comparisons to identify long-term search trends.

- Automated reports to track ranking performance and search trend fluctuations.

For example, an enterprise software company can use an SEO rank API to monitor how its rankings and the search demand for “cloud-based analytics software” change over time. If rankings decline, the company can quickly analyze and optimize its content before losing market share.

Best Practices for Using Search Engine Crawlers

To extract maximum value from search engine crawling, businesses should follow these best practices:

1. Focus on High-Impact Keywords

Not all keywords contribute equally to business growth. Companies should prioritize industry-specific keywords that align with their target audience’s search behavior.

2. Crawl Ethically and Avoid Search Engine Penalties

Search engines have guidelines on web crawling. To prevent being blocked or blacklisted:

- Follow robots.txt rules to respect website crawling restrictions.

- Avoid high-frequency crawling, which can overload servers and trigger IP bans.
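Both rules can be sketched with Python’s standard `urllib.robotparser` module. This is a minimal illustration: the robots.txt content, the user agent name `example-trend-bot`, and the helper names are assumptions for the example, and the robots.txt text is parsed from a string rather than fetched from a live site.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-trend-bot"  # hypothetical user agent name

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def allowed(url):
    """True if robots.txt permits this user agent to fetch the URL."""
    return robots.can_fetch(USER_AGENT, url)


def polite_delay(default=1.0):
    """Seconds to wait between requests: the site's Crawl-delay, else a default."""
    delay = robots.crawl_delay(USER_AGENT)
    return float(delay) if delay is not None else default


def crawl_politely(urls, fetch, sleep=time.sleep):
    """Fetch only permitted URLs, pausing after each request."""
    results = {}
    for url in urls:
        if not allowed(url):
            continue  # respect Disallow rules
        results[url] = fetch(url)
        sleep(polite_delay())  # throttle to avoid overloading the server
    return results


print(allowed("https://example.com/products"))
print(allowed("https://example.com/private/report"))
```

The `fetch` and `sleep` parameters are injected so the throttling logic can be exercised without real network calls or waiting.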

3. Combine Web Crawling with SEO and Analytics Tools

Web crawling provides raw data, but pairing it with tools like Google Search Console, SEMrush, or Ahrefs helps visualize trends and identify actionable insights.

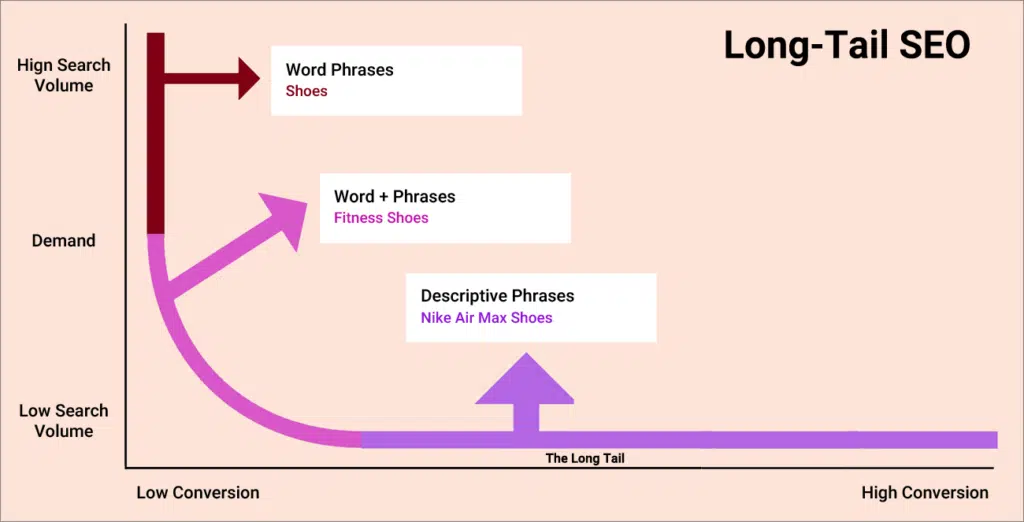

4. Track Long-Tail and Conversational Search Queries

With the rise of voice search and natural language queries, businesses should monitor long-tail keywords that indicate evolving consumer search habits.

Image Source: Pagetraffic
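As a rough sketch of how collected queries might be sorted into long-tail and conversational groups, the example below uses two simple heuristics: word count and whether the query opens with a question word. The four-word cutoff, the word list, and the name `classify_query` are assumptions chosen for illustration; real classification would likely need more nuance.

```python
# Assumed set of words that commonly open a conversational query.
QUESTION_WORDS = {"how", "what", "why", "where", "when", "which", "who", "can", "does", "is"}


def classify_query(query, long_tail_min_words=4):
    """Label a query as long-tail (by word count) and/or conversational (by first word)."""
    words = query.lower().split()
    return {
        "long_tail": len(words) >= long_tail_min_words,
        "conversational": bool(words) and words[0] in QUESTION_WORDS,
    }


for q in ["running shoes", "what are the best running shoes for flat feet"]:
    print(q, classify_query(q))
```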

5. Continuously Adapt to Changing Search Trends

Search behavior is never static. Regularly updating crawling parameters ensures businesses track the most relevant search terms in their industry.

Why Web Crawling is Essential for Search Trend Analysis

Businesses that rely on search engine crawlers gain deeper, more accurate insights than those using static SEO reports alone.

By leveraging search engine crawling, local SERP trackers, and SEO rank APIs, companies can:

- Capture emerging trends earlier than their competitors.

- Optimize content and SEO intelligently and responsively.

- Enhance marketing strategies for particular regions and seasons.

- Respond swiftly to changes in search engine algorithms.

For businesses looking to enhance their market intelligence capabilities, deploying a robust web crawling strategy is the key to unlocking data-driven decision-making. PromptCloud’s customized web scraping services enable businesses to monitor changing search trends and strengthen their competitiveness. If your company is looking for such a solution, contact us today!