In today’s data-driven world, web scraping has become an essential tool for businesses seeking to collect and analyze large volumes of data from various online sources. However, as the amount of data grows, so do the challenges associated with managing and scaling web scraping projects. This article provides practical tips for handling large-scale web scraping projects effectively, focusing on the key methods and tools for scraping data from websites.

What is Web Scraping?

Web scraping is the automated extraction of data from websites: a program requests pages much as a browser would and pulls out the content it needs. This technique allows businesses to gather vast amounts of data, including text, images, and links, from multiple web pages. The ability to automate this process using programming languages like Python has made web scraping an invaluable tool for market research, competitive analysis, and data-driven decision-making.

How to Scrape Data from a Website

Scraping data from a website involves automating the process of extracting information from web pages. This can be achieved by writing scripts that send requests to the target website, retrieve the HTML content, and parse it to extract the required data. Typically, web scraping is done using programming languages such as Python, which offers libraries like BeautifulSoup and Scrapy. These tools help in navigating the HTML structure of web pages and extracting data efficiently. The key steps include identifying the target data, analyzing the website’s HTML structure, writing a script to extract the data, and storing it in a structured format such as CSV or JSON.
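The parse-and-extract part of that workflow can be sketched in a few lines. This is a minimal illustration rather than a production scraper: it uses Python's built-in `html.parser` in place of BeautifulSoup so it runs without extra installs, and the sample HTML, the `product` class name, and the `ProductParser` helper are all assumptions made for the example.

```python
import json
from html.parser import HTMLParser

# Hypothetical page content; in a real run this string would come from an
# HTTP response (e.g. urllib.request.urlopen(url).read().decode()).
SAMPLE_HTML = """
<ul>
  <li class="product"><a href="/item/1">Blue Mug</a></li>
  <li class="product"><a href="/item/2">Red Mug</a></li>
  <li class="ad"><a href="/promo">Sponsored</a></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect the link text and href of every <a> inside <li class="product">."""

    def __init__(self):
        super().__init__()
        self.records = []
        self._in_product = False
        self._current = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li":
            self._in_product = attrs.get("class") == "product"
        elif tag == "a" and self._in_product:
            self._current = {"url": attrs.get("href"), "name": ""}

    def handle_data(self, data):
        # Only text inside a product link is kept.
        if self._current is not None:
            self._current["name"] += data.strip()

    def handle_endtag(self, tag):
        if tag == "a" and self._current is not None:
            self.records.append(self._current)
            self._current = None
        elif tag == "li":
            self._in_product = False


def extract_products(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.records


records = extract_products(SAMPLE_HTML)
print(json.dumps(records, indent=2))  # structured output, ready to save as JSON
```

The sponsored entry is skipped because only list items with the assumed `product` class are treated as data, which is the "parse only what you need" habit that pays off at scale.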

Once the data is extracted, it can be saved into various formats for analysis or integration with other systems. For example, you can use the Pandas library in Python to create a DataFrame from the scraped data and then export it to an Excel file. This allows businesses to analyze the data in a familiar format and derive actionable insights. For more extensive data operations, data can be loaded directly into databases or visualization tools to facilitate real-time analysis and decision-making. By automating web scraping, businesses can gather large volumes of data quickly and accurately, ensuring they stay informed and competitive in their respective markets.

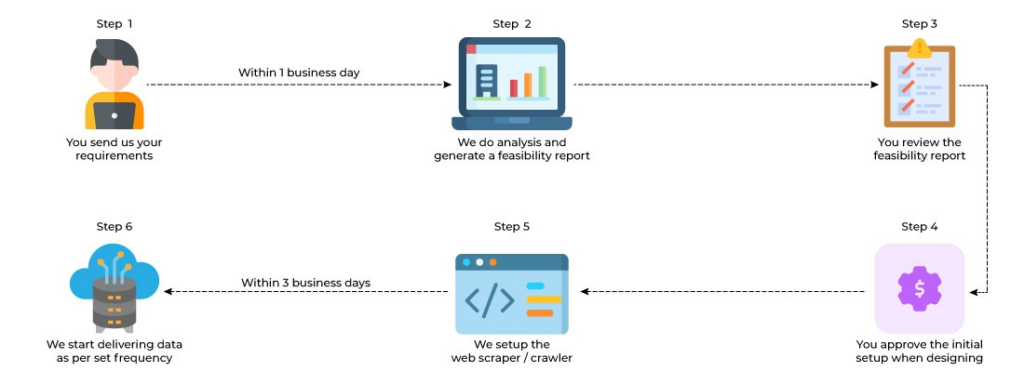

Scraping data from a website involves several steps:

Image Source: AnalyticsVidhya

- Identify the Data Sources: Determine the websites and specific pages you need to scrape.

- Analyze the Structure: Inspect the HTML structure of the web pages to identify the data elements you want to extract.

- Choose the Right Tools: Select appropriate web scraping tools and libraries.

- Write the Scraping Script: Use a programming language, such as Python, to write a script that automates the data extraction process.

- Store the Data: Save the extracted data into a suitable format, such as CSV, Excel, or Google Sheets.

Using Python for Web Scraping

Python is one of the most popular languages for web scraping due to its simplicity and the availability of powerful libraries. Here’s how to get started with Python web scraping:

Set Up Your Environment:

- Install Python and necessary libraries like BeautifulSoup and Scrapy.

- Set up a virtual environment to manage dependencies.

Write Your Scraping Script:

- Use BeautifulSoup for parsing HTML and navigating the web page structure.

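- Utilize Scrapy for more advanced scraping tasks, such as crawling many pages concurrently and following pagination links. Scrapy does not execute JavaScript on its own, so AJAX-loaded content usually means requesting the underlying data endpoint directly or pairing Scrapy with a headless browser.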
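Pagination is a good example of what a crawl loop has to handle. The sketch below follows "next" links until none remain. It takes a `fetch` callable so the logic can be shown without network access; the `FAKE_SITE` pages, the `rel="next"` link convention, and the `crawl` function are assumptions for illustration. In Scrapy, the same idea is usually expressed by yielding `response.follow(next_url, callback=self.parse)` from a spider.

```python
import re
from urllib.parse import urljoin

# Hypothetical three-page listing, keyed by absolute URL.
FAKE_SITE = {
    "https://example.com/list?page=1": '<p>a</p><a rel="next" href="/list?page=2">Next</a>',
    "https://example.com/list?page=2": '<p>b</p><a rel="next" href="/list?page=3">Next</a>',
    "https://example.com/list?page=3": "<p>c</p>",
}

# A regex keeps the sketch short; a real HTML parser is more robust.
NEXT_LINK = re.compile(r'<a\s+rel="next"\s+href="([^"]+)"')


def crawl(start_url, fetch, max_pages=50):
    """Yield (url, html) for each page, following rel="next" links."""
    url, seen = start_url, set()
    # The seen set prevents loops; max_pages caps runaway crawls.
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        html = fetch(url)
        yield url, html
        match = NEXT_LINK.search(html)
        # Resolve relative hrefs against the current page's URL.
        url = urljoin(url, match.group(1)) if match else None


pages = list(crawl("https://example.com/list?page=1", FAKE_SITE.__getitem__))
print([url for url, _ in pages])
```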

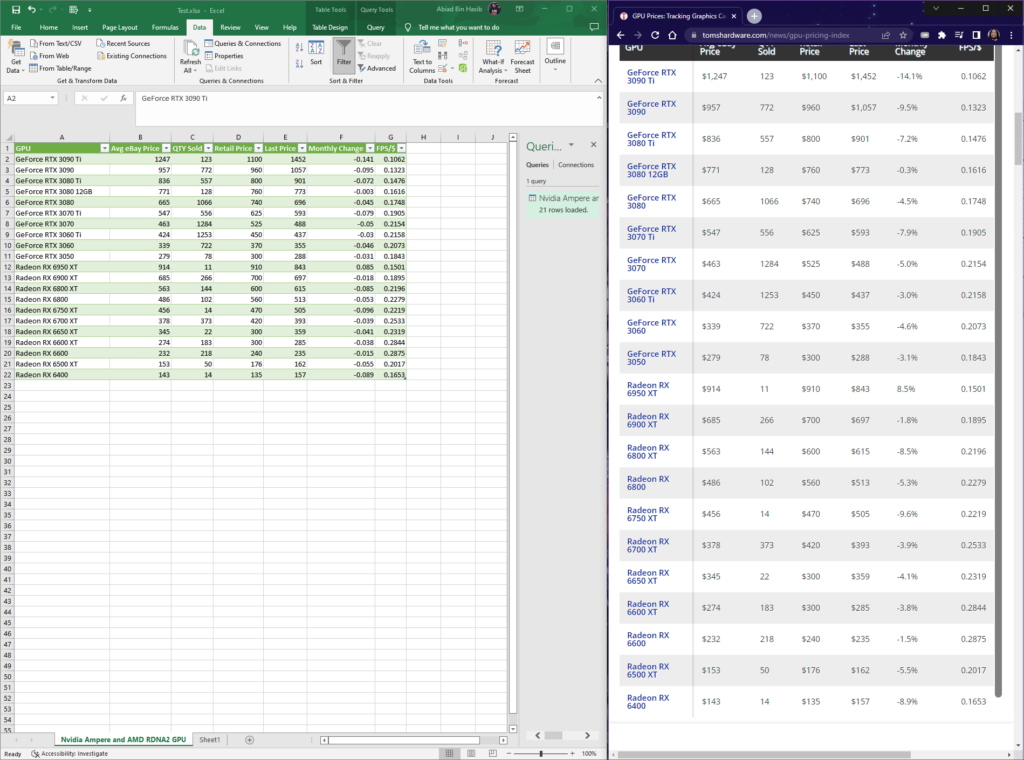

How to Scrape Data from a Website into Excel

Image Source: ScrapingAnt

Scraping data from a website and saving it into Excel involves several steps: identifying the target data, extracting it using a web scraping tool, and then exporting it to an Excel file. Python, with its powerful libraries like BeautifulSoup for scraping and Pandas for data manipulation, simplifies this process significantly. Here’s a step-by-step guide on how to scrape data from a website and save it into an Excel spreadsheet.

Steps to scrape data into Excel:

- Extract Data Using Web Scraping Techniques: Follow the web scraping steps to collect the data from the desired web pages.

- Save Data to Excel: Use Pandas to create a DataFrame and export it to an Excel file.
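As a dependency-free illustration of the export step, the sketch below writes scraped records to a CSV file that Excel opens directly. It deliberately swaps in the standard library's `csv` module; with Pandas, the equivalent would be along the lines of `pandas.DataFrame(rows).to_excel("products.xlsx", index=False)`, which needs an Excel writer engine such as openpyxl installed. The records, file name, and `write_for_excel` helper are assumptions.

```python
import csv
import os
import tempfile

# Hypothetical scraped records.
rows = [
    {"name": "Blue Mug", "price": 7.5, "url": "https://example.com/item/1"},
    {"name": "Café Cup", "price": 9.0, "url": "https://example.com/item/2"},
]


def write_for_excel(records, path):
    """Write records to a CSV that Excel opens with columns and accents intact."""
    fieldnames = list(records[0].keys())
    # utf-8-sig adds a byte-order mark, which Excel uses to detect UTF-8;
    # newline="" stops the csv module from doubling line endings on Windows.
    with open(path, "w", newline="", encoding="utf-8-sig") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(records)
    return path


out_path = write_for_excel(rows, os.path.join(tempfile.gettempdir(), "products.csv"))
print("wrote", out_path)
```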

How to Scrape Data from a Website into Google Sheets

Scraping data into Google Sheets involves using the Google Sheets API to send the extracted data to a Google Sheet.

Steps to scrape data into Google Sheets:

- Extract Data Using Web Scraping Techniques: Follow the web scraping steps to collect the data from the desired web pages.

- Authenticate with Google Sheets API: Use the Google Sheets API to connect and authenticate with your Google account.

- Upload Data to Google Sheets: Use the API to send the data to a specified Google Sheet.
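The upload step comes down to sending a two-dimensional array of cell values. The sketch below shapes scraped records into the request body that the Sheets API v4 `spreadsheets.values.append` method accepts. The authenticated HTTP call itself is left out, and the spreadsheet ID, target range, and helper names are placeholders rather than anything from a real project.

```python
import json
from urllib.parse import quote

SPREADSHEET_ID = "YOUR_SPREADSHEET_ID"  # placeholder, not a real sheet
TARGET_RANGE = "Sheet1!A1"              # assumed tab name and anchor cell


def to_rows(records, columns):
    """Turn a list of dicts into a header row plus one row per record."""
    return [list(columns)] + [[rec.get(col, "") for col in columns] for rec in records]


def build_append_request(records, columns):
    """Return (url, body) for a values.append call; sending it needs an OAuth token."""
    url = (
        "https://sheets.googleapis.com/v4/spreadsheets/"
        f"{SPREADSHEET_ID}/values/{quote(TARGET_RANGE)}:append"
        "?valueInputOption=RAW"
    )
    return url, {"values": to_rows(records, columns)}


records = [{"name": "Blue Mug", "price": 7.5}, {"name": "Red Mug"}]
url, body = build_append_request(records, ["name", "price"])
print(url)
print(json.dumps(body))
```

Missing fields become empty cells, so a record that lacks a price still lines up with the header row.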

Tips for Handling Large Volumes of Data

The following tips help you handle large volumes of data efficiently while avoiding common pitfalls such as getting blocked or overloading servers:

Optimize Your Code:

Ensure your scraping script is efficient. Minimize the number of requests and parse only necessary data.
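One inexpensive way to cut requests is to avoid fetching the same page twice. The sketch below normalizes URLs and drops duplicates before they reach the request queue; the normalization rules and function names are assumptions, and a real site may need additional rules (for example, ignoring tracking parameters).

```python
from urllib.parse import urldefrag, urlsplit, urlunsplit


def normalize(url):
    """Drop the #fragment and lowercase scheme and host so equal pages compare equal."""
    url, _fragment = urldefrag(url)
    parts = urlsplit(url)
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, "")
    )


def unique_urls(urls):
    """Return normalized URLs in first-seen order with duplicates removed."""
    seen, ordered = set(), []
    for url in urls:
        key = normalize(url)
        if key not in seen:
            seen.add(key)
            ordered.append(key)
    return ordered


queue = unique_urls([
    "https://Example.com/items?page=1",
    "https://example.com/items?page=1#reviews",
    "https://example.com/items?page=2",
    "https://example.com",
    "https://example.com/",
])
print(queue)
```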

Use Proxies and Rotate IPs:

To avoid getting blocked by websites, use proxy servers and rotate IP addresses.
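A simple form of rotation is round-robin selection from a pool, with failing proxies removed as they are discovered. The sketch below shows only the selection logic: the addresses are placeholders from a documentation-only IP range, and `ProxyRotator` and `proxy_mapping` are hypothetical helpers. The returned mapping has the shape that `requests` accepts through its `proxies=` argument and that `urllib.request.ProxyHandler` takes.

```python
# Placeholder addresses from the documentation-only 203.0.113.0/24 range.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hand out proxies round-robin and stop using ones marked as failing."""

    def __init__(self, proxies):
        self._proxies = list(proxies)
        self._index = 0

    def next_proxy(self):
        if not self._proxies:
            raise RuntimeError("no working proxies left")
        proxy = self._proxies[self._index % len(self._proxies)]
        self._index += 1
        return proxy

    def mark_bad(self, proxy):
        """Remove a proxy after a block or repeated connection failures."""
        if proxy in self._proxies:
            self._proxies.remove(proxy)


def proxy_mapping(proxy):
    """Route both HTTP and HTTPS traffic through the same proxy."""
    return {"http": proxy, "https": proxy}


rotator = ProxyRotator(PROXY_POOL)
picked = [rotator.next_proxy() for _ in range(4)]
print(picked)

rotator.mark_bad("http://203.0.113.11:8080")
print(proxy_mapping(rotator.next_proxy()))
```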

Implement Rate Limiting:

Be respectful of the website’s server load. Implement delays between requests to avoid overwhelming the server.
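A small helper can enforce the delay so that individual call sites do not have to remember it. The sketch below guarantees a minimum interval between requests. `RateLimiter` is a hypothetical class, and the 0.05-second interval is chosen only to keep the demo quick; a real crawl would typically wait a second or more per request, depending on the site.

```python
import time


class RateLimiter:
    """Enforce a minimum interval between calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock    # injectable so the logic can be tested without waiting
        self._sleep = sleep
        self._last = None

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


limiter = RateLimiter(min_interval=0.05)
start = time.monotonic()
for url in ["/page/1", "/page/2", "/page/3"]:
    limiter.wait()  # blocks until the interval since the previous request has passed
    # fetch(url) would go here (hypothetical)
elapsed = time.monotonic() - start
print(f"3 requests spaced over {elapsed:.2f}s")
```

Because the limiter measures time since the previous request, slow responses do not add unnecessary extra delay on top of the time already spent waiting for the server.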

Handle Errors Gracefully:

Implement error handling to manage unexpected issues, such as changes in the website structure or connectivity problems.
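Two patterns cover most of these cases: retrying transient network failures with an increasing delay, and reading fields defensively so a layout change produces a missing value instead of a crash. The sketch below illustrates both. The function names, retry counts, and exception types are assumptions; swap in the exception classes your HTTP library actually raises.

```python
import time


def fetch_with_retry(fetch, url, attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying on connection-style errors with exponential backoff."""
    for attempt in range(attempts):
        try:
            return fetch(url)
        except (ConnectionError, TimeoutError) as exc:
            if attempt == attempts - 1:
                raise  # out of retries: let the caller log or skip this URL
            delay = base_delay * 2 ** attempt  # 1s, 2s, 4s, ...
            print(f"{url}: {exc!r}; retrying in {delay:.0f}s")
            sleep(delay)


def safe_text(record, key, default=None):
    """Return a stripped field, or a default when a layout change removed it."""
    value = record.get(key)
    return value.strip() if isinstance(value, str) and value.strip() else default


# Demo: a fake fetcher that fails twice, then succeeds.
calls = {"n": 0}


def flaky_fetch(url):
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("connection reset")
    return "<html>ok</html>"


delays = []
html = fetch_with_retry(flaky_fetch, "https://example.com/", sleep=delays.append)
print(html, delays)
```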

Store Data Efficiently:

For large datasets, consider using databases like MongoDB or PostgreSQL instead of flat files like CSV or Excel.
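To illustrate the database approach without requiring a server, the sketch below uses SQLite from Python's standard library as a stand-in for PostgreSQL or MongoDB. The table layout and `save_batch` helper are assumptions. Keying rows on the page URL means a re-scrape updates the existing row instead of piling up duplicates.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # a file path here would persist the data
conn.execute(
    """
    CREATE TABLE products (
        url        TEXT PRIMARY KEY,
        name       TEXT NOT NULL,
        price      REAL,
        scraped_at TEXT NOT NULL
    )
    """
)


def save_batch(connection, records):
    """Insert records in one transaction; re-scraped URLs overwrite the old row."""
    with connection:  # commits on success, rolls back on error
        connection.executemany(
            "INSERT OR REPLACE INTO products (url, name, price, scraped_at) "
            "VALUES (:url, :name, :price, :scraped_at)",
            records,
        )


save_batch(conn, [
    {"url": "https://example.com/item/1", "name": "Blue Mug", "price": 7.5,
     "scraped_at": "2024-01-01T02:00:00Z"},
    {"url": "https://example.com/item/2", "name": "Red Mug", "price": 8.0,
     "scraped_at": "2024-01-01T02:00:00Z"},
])
# A later run sees a new price for item 1.
save_batch(conn, [
    {"url": "https://example.com/item/1", "name": "Blue Mug", "price": 6.9,
     "scraped_at": "2024-01-02T02:00:00Z"},
])

print(conn.execute("SELECT url, price FROM products ORDER BY url").fetchall())
```

`INSERT OR REPLACE` is SQLite syntax; in PostgreSQL the comparable pattern is `INSERT ... ON CONFLICT (url) DO UPDATE`.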

Automate and Schedule Scraping Tasks:

Use task schedulers like cron jobs to automate and regularly execute your scraping scripts.
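A script meant for a scheduler benefits from timestamped output so that repeated runs never overwrite each other. The sketch below shows one way to structure such an entry point. The crontab line in the comment, the paths, and the `run_scrape` function it mentions are all hypothetical.

```python
from datetime import datetime, timezone

# Example crontab entry (an assumption; adjust paths to your system):
#   0 2 * * * /usr/bin/python3 /opt/scraper/run_scrape.py >> /var/log/scraper.log 2>&1
# This runs the script every day at 02:00 and appends its output to a log file.


def output_name(prefix, now=None):
    """Build a timestamped file name so scheduled runs never overwrite each other."""
    now = now or datetime.now(timezone.utc)
    return f"{prefix}_{now:%Y%m%dT%H%M%SZ}.csv"


def main():
    target = output_name("products")
    print(f"scrape started, writing to {target}")
    # run_scrape(target) would go here (hypothetical function)
    return 0  # a real script would pass this to sys.exit() as its exit status


exit_code = main()
```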

Conclusion

Scaling your web scraping projects requires careful planning and the right tools to handle large volumes of data effectively. By understanding how to scrape data from a website, utilizing Python for web scraping, and knowing how to save data into Excel or Google Sheets, businesses can streamline their data collection processes. Implementing best practices, such as optimizing code, using proxies, and storing data efficiently, ensures that your web scraping projects remain robust and efficient as they scale. Embrace these strategies to enhance your data-driven decision-making and maintain a competitive edge in your industry.

If you’re looking for more robust and scalable data extraction solutions tailored to your specific needs, consider leveraging professional services. PromptCloud offers comprehensive web scraping services that can help you gather large-scale web data efficiently and accurately.
