Why Enterprise Web Scraping Sounds Simple (But Isn’t)

If you’re part of a data-heavy organization, whether you’re a CTO, a product manager, or someone on the procurement side, you already know how critical web data is. Product catalogs, pricing trends, market movement, customer sentiment, competitive intelligence—all of it is out there on the open web. And in theory, web scraping seems like the obvious way to tap into that.

But theory is easy. Practice? Not so much.

Scraping one site with a script is child’s play. Scraping hundreds of sites reliably, across multiple formats, languages, structures, and geographies, every single day? That’s an engineering and operations challenge at scale.

Many teams think they’re ready for enterprise web scraping, but then fall into the same traps: blocked IPs, bad data, scrapers breaking overnight, or worse—legal risks no one saw coming.

In this piece, we’re breaking down six of the most common mistakes enterprises make when scraping the web at scale, and how to fix them before they become expensive.

Mistake #1: Using the Wrong Tools for the Job (Hint: This Isn’t a Startup Hackathon)

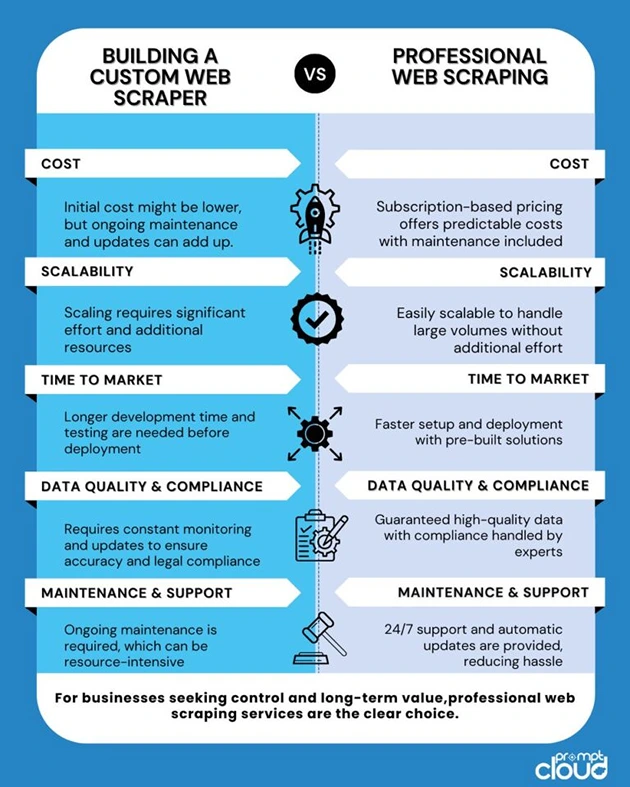

It’s surprising how many large companies still rely on DIY scripts or bare open-source tooling. Frameworks like Scrapy and browser-automation libraries like Puppeteer are solid building blocks, and they’re fine for a one-off project. But enterprise web scraping is about scale, consistency, and stability, and none of that comes out of the box.

The problem isn’t the tools themselves. The issue is that most enterprise teams underestimate what’s involved in running them at scale:

- Managing server infrastructure

- Rotating proxies to avoid IP bans

- Handling JavaScript-heavy or anti-bot sites

- Maintaining scrapers as site structures evolve

- Dealing with data storage, formatting, and delivery

These are problems that snowball fast.

How to fix it:

Don’t patch together a bunch of tools and hope for the best. You’re not a startup in a dorm room. Instead, invest in an enterprise web scraping service that handles all of this for you, so your team can stop firefighting and start analyzing reliable, up-to-date data.

PromptCloud, for example, offers a fully managed solution that includes proxy rotation, site monitoring, dynamic content rendering, and structured data delivery—so you never have to worry about broken scripts again.

Mistake #2: Underestimating How Often Websites Change

You’d think a website’s structure would stay stable for a while, but that’s rarely the case. On e-commerce, travel, and news platforms especially, layout changes are constant. A renamed CSS class, newly added lazy loading, or a shift to client-side rendering can break your scraper overnight.

And here’s the kicker: most of these changes aren’t announced. Your scripts just stop working, and unless you have proper monitoring in place, you don’t even realize it until it’s too late.

Let’s say you’re scraping a retailer’s site for pricing data. Everything’s going fine until they update their front-end framework. Now your scraper misses half the products for three days, and your competitor analysis is garbage. You don’t just lose data—you lose trust across your org.

How to fix it:

You need scrapers that evolve as fast as the sites you’re scraping. That means:

- Real-time monitoring of scraping jobs

- Auto-detection of structural changes

- Headless browsers that can render dynamic, JavaScript-driven content

- Quick redeployment when pages change

This is where working with an automated web scraping provider makes a real difference. At PromptCloud, we continuously monitor target sites, so if something changes, we fix it before you even notice.
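If you do run scrapers in-house, one low-cost way to approximate the “auto-detection of structural changes” item above is to watch each run’s output rather than the page itself: when a selector silently breaks, the affected field goes empty or the record count drops. The Python sketch below assumes each run yields a list of dicts and that you keep a baseline count from the last good run; `check_run`, its thresholds, and the field names are hypothetical, and this is not a description of how PromptCloud monitors sites.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical thresholds; tune them per source.
MIN_FILL_RATE = 0.9   # a field should be non-empty in at least 90% of records
MAX_COUNT_DROP = 0.3  # alert if a run returns >30% fewer records than the baseline


@dataclass
class RunReport:
    record_count: int
    fill_rates: Dict[str, float]
    alerts: List[str] = field(default_factory=list)


def check_run(records: List[dict], expected_fields: List[str], baseline_count: int) -> RunReport:
    """Flag likely site-structure changes by inspecting one run's extracted records."""
    n = len(records)
    report = RunReport(record_count=n, fill_rates={})
    # A front-end change that hides products tends to show up as a sudden count drop.
    if baseline_count > 0 and n < baseline_count * (1 - MAX_COUNT_DROP):
        report.alerts.append(f"record count dropped to {n} (baseline {baseline_count})")
    # A renamed CSS class tends to show up as one field going empty across records.
    for name in expected_fields:
        filled = sum(1 for r in records if r.get(name) not in (None, ""))
        rate = filled / n if n else 0.0
        report.fill_rates[name] = rate
        if rate < MIN_FILL_RATE:
            report.alerts.append(f"field '{name}' filled in only {rate:.0%} of records")
    return report
```

In the pricing scenario above, a front-end update that hides half the products would trip the record-count alert on the first affected run rather than three days later.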

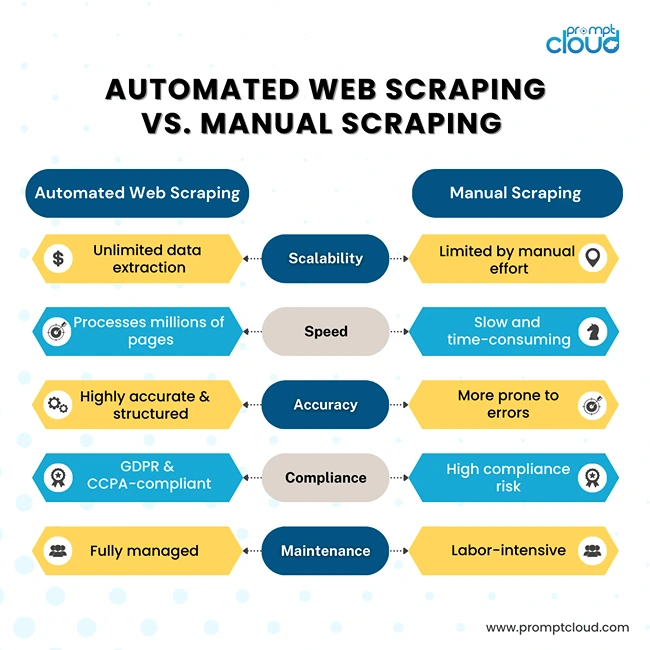

Mistake #3: Skipping Automation and Trying to Scale Manually

A lot of enterprise teams start out treating scraping as a manual routine: run a script here, export to CSV there, upload to a dashboard by hand. But as the scope grows, this approach collapses.

Let’s be blunt—manual scraping is not scalable. When you’re trying to pull from 50, 100, or 500 sources on a daily or weekly basis, you can’t afford manual intervention. What ends up happening is:

- Your team burns out babysitting scrapers.

- Data delivery becomes unreliable.

- Bugs pile up, and no one owns the system.

- New business needs can’t be met fast enough.

How to fix it:

Treat your scraping infrastructure like you would any critical pipeline. Automate everything—job scheduling, data validation, delivery, and error handling. Set up alerts when something breaks. And, ideally, plug it all into your existing data lake or BI platform.

With an enterprise web scraping service, this kind of automation is built in. Jobs run on a schedule, retry logic is baked in, and clean data lands in your S3 bucket or FTP server without anyone needing to lift a finger.
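Retry logic is one of the pieces teams most often hand-roll. As a rough sketch of what “baked in” involves, here is exponential backoff with jitter in Python, assuming each fetch is wrapped in a zero-argument callable that raises `ConnectionError` or `TimeoutError` on transient failures. `with_retries` and its defaults are illustrative, not any provider’s API.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying transient failures with exponential backoff and jitter.

    Hypothetical helper for illustration only.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error so alerting can fire
            # Backoff doubles each time (1s, 2s, 4s, ...) plus up to 10% random jitter
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
    raise AssertionError("unreachable")  # the loop always returns or raises
```

Passing `sleep` as a parameter keeps the helper testable without real waits. Because a retried request can still deliver the same page twice, retries should be paired with deduplication downstream, which is the retry-duplicate problem covered under Mistake #4.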

Mistake #4: Not Prioritizing Data Quality and Consistency

Image Source: ScopeInsight

There’s a big difference between collecting data and collecting the right data. Just because your scraper grabs a bunch of HTML doesn’t mean that what you’ve got is usable.

We’ve seen enterprise teams spend weeks collecting product data—only to discover:

- The fields are misaligned (e.g., prices landing in the description field)

- Duplicate records were introduced during retries

- Special characters or missing values are messing up analytics

- Timestamps are inconsistent, making trend analysis impossible

And here’s the unfortunate truth: if your team doesn’t trust the data, they won’t use it. Data quality isn’t a “nice to have”—it’s mission-critical.

How to fix it:

Build in validation checks early in the pipeline. Normalize fields across sources. Use schema enforcement to catch issues. And most importantly, partner with a provider that delivers clean, structured, and deduplicated data.

PromptCloud, for instance, provides standardized outputs in formats like JSON, CSV, or XML—already cleaned, validated, and formatted for your systems. No post-processing headaches.
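The checks described above (validation, normalization, schema enforcement, and deduplication) can be sketched in a few dozen lines of standard-library Python. Everything here is an assumption for illustration: the `SCHEMA` fields, the currency-stripping rule, treating naive timestamps as UTC, and the dedup rule of keeping one record per SKU per UTC day.

```python
from datetime import datetime, timezone
from decimal import Decimal, InvalidOperation

# Hypothetical target schema: field name -> required?
SCHEMA = {"sku": True, "name": True, "price": True, "scraped_at": True, "description": False}


def normalize(raw: dict) -> dict:
    """Enforce the schema on one scraped record and normalize its values, or raise ValueError."""
    missing = [f for f, required in SCHEMA.items() if required and raw.get(f) in (None, "")]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    unknown = set(raw) - set(SCHEMA)
    if unknown:
        raise ValueError(f"unexpected fields: {sorted(unknown)}")
    # Strip thousands separators and a leading currency symbol: "$1,299.00" -> Decimal("1299.00")
    price_text = str(raw["price"]).replace(",", "").strip().lstrip("$€£").strip()
    try:
        price = Decimal(price_text)
    except InvalidOperation:
        raise ValueError(f"unparseable price: {raw['price']!r}") from None
    if not price.is_finite():
        raise ValueError(f"non-finite price: {raw['price']!r}")
    # Store every timestamp as UTC; naive timestamps are assumed to already be UTC
    ts = datetime.fromisoformat(str(raw["scraped_at"]))
    if ts.tzinfo is None:
        ts = ts.replace(tzinfo=timezone.utc)
    return {
        "sku": str(raw["sku"]).strip(),
        "name": " ".join(str(raw["name"]).split()),  # collapse stray whitespace
        "price": price,
        "scraped_at": ts.astimezone(timezone.utc).isoformat(),
        "description": raw.get("description") or None,
    }


def clean_batch(raws: list[dict]) -> tuple[list[dict], list[str]]:
    """Validate, normalize, and deduplicate a batch; return (clean records, error messages)."""
    seen: set[tuple[str, str]] = set()
    clean: list[dict] = []
    errors: list[str] = []
    for raw in raws:
        try:
            rec = normalize(raw)
        except ValueError as exc:
            errors.append(str(exc))
            continue
        # Hypothetical dedup rule: one record per SKU per UTC day, so a retried
        # fetch of the same page does not produce a second row.
        key = (rec["sku"], rec["scraped_at"][:10])
        if key not in seen:
            seen.add(key)
            clean.append(rec)
    return clean, errors
```

Rejected rows come back as error messages instead of being silently dropped, so they can feed the same alerting as failed jobs.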

Mistake #5: Ignoring Legal and Ethical Boundaries

This one can’t be stressed enough. Just because the data is public doesn’t mean scraping it is always allowed. Legal and compliance issues are one of the most overlooked risks in web scraping—and when they go wrong, they go very wrong.

We’re talking about things like:

- Violating a site’s terms of service

- Disregarding robots.txt instructions

- Scraping personal data without a lawful basis (hello, GDPR fines)

- Getting hit with a cease-and-desist letter that stalls your entire data pipeline

Most companies don’t think about this until legal gets involved. By then, it’s usually reactive cleanup.

How to fix it:

Legal review shouldn’t be an afterthought—it should be baked into your scraping strategy. Only collect publicly accessible data. Avoid personal information. Respect site usage policies. If in doubt, consult with a legal professional or use a provider that handles this on your behalf.

PromptCloud follows ethical scraping practices, monitors for legal updates, and works closely with clients to ensure data collection stays compliant in every market.
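For the robots.txt part of respecting site usage policies, Python’s standard library already ships a parser, `urllib.robotparser`. The sketch below checks a URL against a robots.txt body you have already fetched and reads any crawl delay; the robots.txt content, user-agent string, and URLs are placeholders. It covers robots.txt only: terms of service and data-protection rules still need their own review.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser


def robots_check(robots_txt: str, user_agent: str, url: str) -> tuple[bool, Optional[int]]:
    """Return (is url allowed for user_agent, Crawl-delay in seconds or None)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse an already-fetched robots.txt body
    return parser.can_fetch(user_agent, url), parser.crawl_delay(user_agent)


# Placeholder robots.txt and URLs, for illustration only
ROBOTS = """User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

print(robots_check(ROBOTS, "example-bot", "https://shop.example.com/products/123"))  # (True, 5)
print(robots_check(ROBOTS, "example-bot", "https://shop.example.com/checkout/cart"))  # (False, 5)
```

In production you would typically call `set_url(...)` and `read()` on the parser to fetch the live file, cache the result per host, and wait at least the returned crawl delay between requests.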

Mistake #6: Treating Web Scraping Like a Side Project

Here’s one of the biggest missteps: treating scraping as a “tech thing” that someone on the engineering team builds on the side. The reality? Enterprise web scraping isn’t just a technical project—it’s a long-term operational system that supports strategic decisions across teams.

When there’s no clear owner, no roadmap, and no budget, the scraping setup becomes brittle. It gets patched over time, but never truly matures. And when a key developer leaves? The whole thing falls apart.

How to fix it:

If web data is part of your business strategy, treat it like a product:

- Assign a product owner.

- Build a roadmap.

- Secure a budget for infrastructure or managed services.

- Integrate it with BI, analytics, and planning tools.

Better still, offload the whole scraping engine to a specialized partner so your team can focus on what actually moves the needle: using the data, not collecting it.

How PromptCloud’s Enterprise Web Scraping Service Solves All This (So You Don’t Have To)

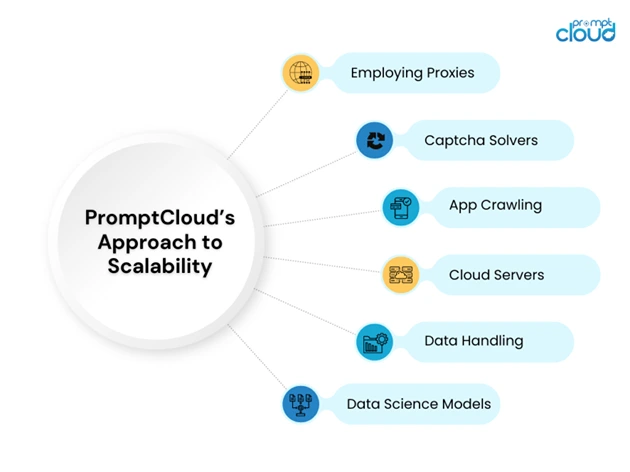

Web scraping at scale doesn’t have to be messy, risky, or frustrating. When you work with a managed enterprise scraping provider like PromptCloud, you get:

- Fully automated workflows, tailored to your needs

- Site change detection and continuous maintenance

- Built-in IP rotation, CAPTCHA solving, and bot management

- Clean, structured data delivered in your preferred format

- Legal oversight and ethical scraping practices

- Support for scaling across industries, use cases, and regions

No more broken scripts. No more blocked IPs. No more internal chaos.

You get reliable, up-to-date web data—ready for analysis, planning, reporting, or machine learning—without worrying about how it got there.

Skip the Headaches, Start with Strategy

Enterprise web scraping is powerful, but only if it’s done right.

Whether you’re trying to track competitors, monitor prices, gather lead data, or fuel an AI model, the quality and reliability of your web data can make or break your outcomes.

So stop making the same mistakes everyone else makes. Stop building fragile, piecemeal scraping stacks. And stop letting valuable data slip through the cracks because the system can’t keep up.

Instead, partner with PromptCloud and get the web data you need—without the pain, the legal risks, or the tech debt. Want to see how it works in action? Talk to our team today!