Everyone’s chasing smarter AI models. Bigger architectures, more parameters, faster training times. That’s fine, but if your data is off, none of it matters.

Models learn from data. That’s the whole game. And yet, data is the part most teams treat like an afterthought. You wouldn’t train a pilot with a broken flight simulator. You wouldn’t teach someone to drive using blurry road signs. But that’s exactly what happens when you feed a model messy, limited, or poorly labeled datasets.

The problem isn’t new. Ask any machine learning engineer what slows their projects down, and they’ll say the same thing: the data, finding it, cleaning it, and labeling it. Making sure it represents the problem they’re trying to solve.

And this isn’t just about quality. It’s about relevance. You could have the cleanest dataset in the world, but if it’s not the right data for the task, your model still won’t learn anything useful. A fraud detection model trained only on clean, legitimate transactions is going to miss the point entirely.

This is why AI datasets matter more than model architecture. They’re not just fuel—they’re the instruction manual. And if you start with the wrong data, you’re going to waste time, money, and compute on a model that doesn’t work when it actually counts.

So, before you mess with your model again, look at your training data. Is it accurate? Is it current? Does it reflect the real-world scenarios your model will face?

That’s the difference between a system that “mostly works in a test environment” and one that actually performs in production.

Next, we’ll break down where to find AI training datasets that are worth your time—and how to spot the ones that aren’t.

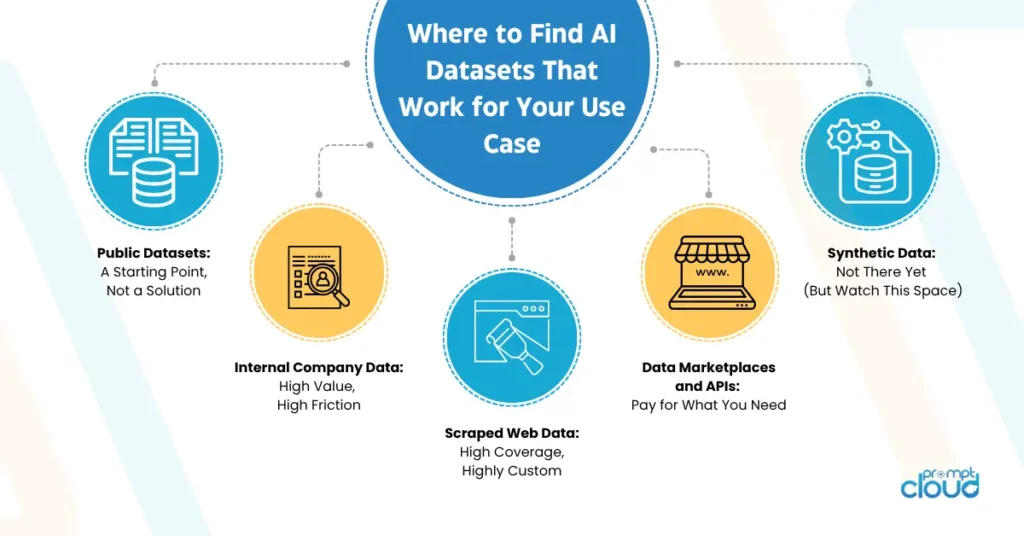

Where to Find AI Datasets That Work for Your Use Case

A million datasets are floating around the internet. Most of them are either outdated, irrelevant, too small to be useful, or full of noise. The trick isn’t just finding data—it’s finding AI datasets that fit your use case, your domain, and the real-world conditions your model will face.

Let’s break down the options.

1. Public Datasets: A Starting Point, Not a Solution

Everyone starts here: Kaggle, Hugging Face, UCI, Google’s dataset search. Great for playing around. Decent for prototypes. But once you go from toy problems to something real—fraud detection, pricing models, and demand forecasting, these fall short fast.

Why? Because everyone else is using the same data. And your use case probably isn’t “generic image classification.”

2. Internal Company Data: High Value, High Friction

If you’ve got product logs, customer feedback, sales transactions—use them. Internal data is gold. But no one talks about the cleanup. Duplicates. Missing fields. Random formats. No labels. You’ll spend more time wrestling Excel sheets than training models.

Still—this is where the value is. You just need to be ready to clean it, label it, and structure it yourself. Or bring in someone who can.

3. Scraped Web Data: High Coverage, Highly Custom

When the data you need isn’t sitting in a neat dataset, you scrape it. Job listings. Product reviews. Flight prices. Real estate leads. It’s all out there—you just have to collect it properly.

But let’s be clear: scraping at scale isn’t about firing up BeautifulSoup on a Saturday night. You need structure, deduplication, rotation, retries, and monitoring. And yeah, legality.

This is where working with a proper web scraping service provider pays off. You get the data you need, delivered clean, at scale, without burning a month of engineering time.

4. Data Marketplaces and APIs: Pay for What You Need

Want crypto transaction data? Product SKUs from a competitor’s feed? Consumer panel info? You’ll probably find someone selling it. APIs make it fast. But don’t expect flexibility. You’re buying what they’ve got, not what you actually need.

It works when you need something specific and don’t have time to source it yourself. Just make sure the licensing is clear, and the refresh rate matches your use case.

5. Synthetic Data: Not There Yet (But Watch This Space)

If you’re in a domain where real data is hard to get—self-driving cars, healthcare, rare-event scenarios—sure, synthetic data can help. But don’t fall for the hype. It’s useful for training edge cases or expanding small datasets, but it won’t replace the real thing.

Use it to patch holes. Not to build the foundation.

The truth is, you’ll probably pull from more than one source. That’s fine. Just don’t confuse “big” with “good,” and don’t assume data someone else collected will magically work for your model.

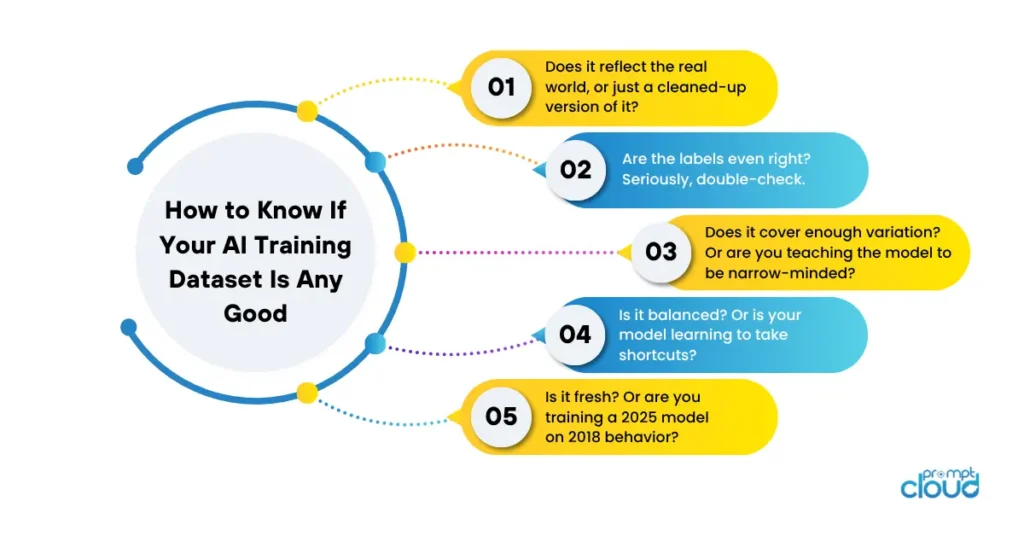

How to Know If Your AI Training Datasets Is Any Good

It’s easy to get excited once you’ve sourced a big, shiny dataset. But size doesn’t mean quality. Half the teams out there are building models on garbage without realizing it, and wondering why the results feel off.

So, how do you know if your AI datasets are solid? Here’s what to look at—without getting lost in theory.

1. Does it reflect the real world, or just a cleaned-up version of it?

Too many datasets are filtered to death. Neatly labeled, balanced classes, no noise. Sounds great—until your model hits production and panics the first time it sees something weird.

Your data needs to look like what the model will face. That includes mess, edge cases, ambiguity, and unlabeled junk. If your dataset is too “perfect,” it’s probably useless in the real world.

2. Are the labels even right? Seriously, double-check.

This one gets overlooked constantly. Labeling errors are the silent killer. If your sentiment dataset thinks “This is sick” is negative when it’s slang for “amazing,” you’ve already failed.

Go sample your data. Spot check. Find the weird cases and edge conditions. If you wouldn’t trust a human looking at the label, don’t expect a model to learn from it.

3. Does it cover enough variation? Or are you teaching the model to be narrow-minded?

You don’t just need more data—you need different data. For example, training a model on 100,000 customer support chats from one product won’t teach it how to generalize across categories.

You need variety. Different formats, sources, timeframes, accents, lighting conditions—whatever’s relevant for your domain. If everything looks the same, your model’s going to fail the moment something new shows up.

4. Is it balanced? Or is your model learning to take shortcuts?

Let’s say you’re training a model to detect defective parts. If only 1% of your dataset is defective, guess what? Your model will just predict “not defective” 99% of the time—and still look accurate on paper.

Accuracy metrics don’t mean anything if the underlying distribution is skewed. You need to check your class balance and understand what “success” actually looks like for your use case.

5. Is it fresh? Or are you training a 2025 model on 2018 behavior?

Data stale fast. Language evolves. Consumer behavior shifts. Products change. If your data’s too old, your model will end up clueless about how the world works today.

Always ask: When was this collected? Is it still relevant? Do I need to update it monthly, weekly, or daily? For anything user-facing, current data isn’t optional—it’s baseline.

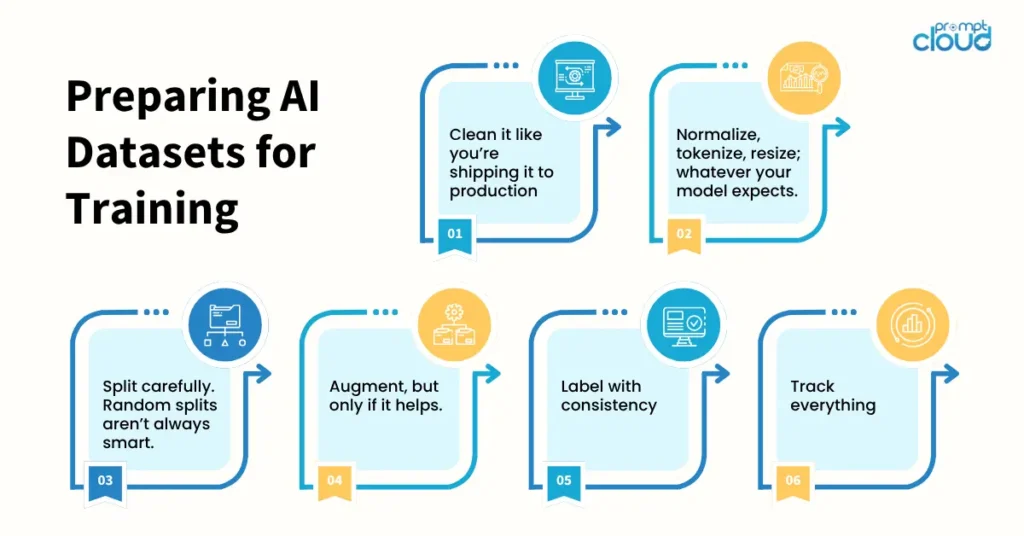

Preparing AI Datasets for Training

You’ve got your data. Now what?

This is the part where a lot of teams mess up. They collect a bunch of AI training data, throw it into a model, and hope for the best. Then, when the results are all over the place, they blame the model. But the truth is, what you do before training matters just as much as the architecture you pick.

Here’s how to get your dataset into shape without wasting time—or breaking your pipeline later.

1. Clean it like you’re shipping it to production

Missing values, duplicate entries, weird encodings, broken formats—get rid of them. Or at least standardize them. If your model gets confused by blank fields or inconsistent timestamps, that’s on you.

Write clear, reusable scripts to clean your dataset. Manual cleanup might work once, but it won’t scale when the next batch of 10 million rows shows up.

2. Normalize, tokenize, resize; whatever your model expects.

Data needs to be shaped to fit your model, not the other way around. For images, that means resizing and color normalization. For text, it might mean tokenization, lowercasing, removing stopwords (or keeping them—depends on the task).

Whatever the format, make sure it’s consistent. Models hate surprises.

3. Split carefully. Random splits aren’t always smart.

You’re probably doing a train-test-validation split. Good. But don’t assume random is always the right choice. If your dataset has temporal dependencies (like sales data or clickstreams), a random shuffle will destroy the signal.

Think about how your model will be used in production. If it’s making future predictions, you need to simulate that in your training/testing split.

4. Augment, but only if it helps.

Data augmentation (adding noise, flipping images, generating paraphrases) can help when you’re working with limited data. But don’t just do it because some blog said so.

Add noise if you expect noisy inputs. Add variation if your real-world users will bring that variation. Otherwise, you’re just inflating your dataset with fake diversity.

5. Label with consistency

This one’s huge. Inconsistent labeling wrecks models. If you have a team tagging data, make sure they’re aligned. Use detailed guidelines. Review edge cases together.

If you’re using automated labeling or weak supervision, audit the results aggressively. And remember: it’s better to have less data with consistent labels than tons of mislabeled junk.

6. Track everything

Version your data. Track preprocessing steps. Log what scripts you ran. You don’t want to be that person who retrains a model and gets different results—but can’t explain why.

Use tools like DVC, Weights & Biases, or even just clear git practices. Treat your dataset like code. Because it is.

You don’t need perfection. But you do need discipline. A well-prepped dataset saves you weeks of debugging and retraining down the line. Sloppy prep? That just drags problems out further into your pipeline, where they’re harder to spot and more expensive to fix.

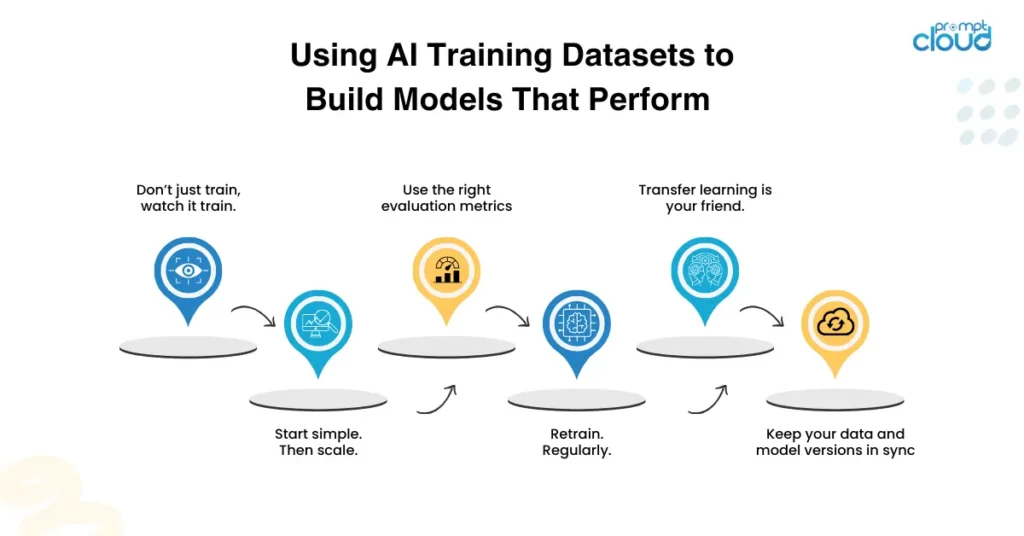

Using AI Training Datasets to Build Models That Perform

This is where all the prep either pays off or completely unravels. You’ve got your cleaned, labeled, preprocessed AI dataset. But using it well isn’t as simple as hitting “train” and walking away.

Let’s be clear: the model isn’t magic. It will learn exactly what your data teaches it. Nothing more. So this part is all about guiding the learning process, not just tossing data at an algorithm and hoping for the best.

Here’s how to actually use your AI ML training datasets to train smarter models:

1. Don’t just train, watch it train.

Your model will try to cheat. That’s what overfitting is—it memorizes instead of learning. You’ll see perfect training accuracy and garbage test results.

This is why monitoring during training is non-negotiable. Plot the loss curves. Track the accuracy over time. Use validation metrics, not just training ones.

If your model’s getting better at training but worse at validation, it’s telling you something. Listen.

2. Start simple. Then scale.

If your model isn’t learning well on a small subset of the data, throwing more data at it won’t fix the problem.

Take a small, representative chunk of your dataset and train a baseline. If that works, scale up. If it doesn’t, stop and debug. Don’t waste compute on broken assumptions.

3. Use the right evaluation metrics.

Everyone loves accuracy. But accuracy means nothing if your dataset is imbalanced or your business problem is more nuanced.

Spam detection? You care about false positives. Healthcare? Precision might matter more than recall. Fraud detection? You want to catch edge cases even if you get a few false alarms.

Choose metrics that reflect what failure really looks like in your application, not what looks good on a dashboard.

4. Retrain. Regularly.

Your data won’t stay relevant forever. If your model’s being used in the wild—on live users, changing products, or evolving markets—you need to retrain.

Set up a pipeline that lets you collect new AI training data, plug it in, and fine-tune regularly. This isn’t a “train once and forget” situation.

The smartest models in production today are the ones that keep learning, not the ones that were trained perfectly once.

5. Transfer learning is your friend.

If you don’t have a massive dataset, don’t start from scratch. Use pre-trained models and fine-tune them with your data. This works especially well in NLP, computer vision, and even some tabular domains.

You get the benefit of general knowledge, with a layer of specialization from your custom dataset. It’s efficient and it works—just make sure your fine-tuning data is clean.

6. Keep your data and model versions in sync

One last thing that’s easy to screw up—version control. If you’re tweaking your AI training datasets, retraining models, and pushing to production without keeping things versioned and traceable, good luck debugging later.

Keep records. Know what dataset trained what model. If performance suddenly drops, you’ll be glad you didn’t treat this like an experiment in a Google Doc.

A model trained on clean, relevant, well-structured data will outperform a “state-of-the-art” model trained on junk every single time. So don’t get distracted by the tech. Focus on the fundamentals. Feed your model what it actually needs—and it’ll deliver.

Best Practices for Scaling Your AI Datasets Strategy

Getting one model to work is hard enough. Scaling that across teams, use cases, and time? That’s where most projects fall apart.

When you’re dealing with more data, more models, and more pressure to “move fast,” things tend to break in subtle, silent ways. The solution isn’t more tools. It’s a strategy. One that’s boring, repeatable, and resilient under pressure.

Here’s what that looks like.

1. Treat datasets like products.

Every dataset you use should have an owner, a version, documentation, and a review process. You wouldn’t deploy code without testing or tracking it—stop doing that with data.

If someone changes a label schema or updates part of the dataset, that should be logged, tracked, and communicated. This avoids a ton of hidden bugs down the line.

2. Build a pipeline, not a pile of scripts.

Most teams start with notebooks and quick fixes. That’s fine early on. But if your data prep involves five manual steps, three one-off scripts, and a person who “just knows how it works,” you’re in trouble.

Use workflow tools. Automate steps. Build something that can be rerun from scratch. Because at some point, you will have to.

3. Monitor data drift. Not just model performance.

Your model might be working fine today. But what if the underlying AI training dataset is shifting? Customer behavior changes. Market conditions change. Input formats change.

Set up checks to catch drift, both in distribution and quality. Don’t wait for the model to fail before you realize your data went stale.

4. Invest in labeling infrastructure.

Labeling is boring, expensive, and absolutely necessary. Whether you’re using internal teams, outsourcing, or weak supervision, treat labeling as a core part of your pipeline.

Build tools for it. Create clear guidelines. Track inter-annotator agreement. Do it right, or you’ll spend months cleaning up later.

5. Audit your datasets like you audit your models.

Bias, imbalance, and missing representation all start in the data. If your model’s making biased decisions, it probably learned it from the input.

Run audits. Ask hard questions. Would this data hold up in court? Would it fail someone who’s not represented in the dataset? If the answer is “I don’t know,” that’s your signal.

6. Don’t scale noise.

The biggest risk when scaling isn’t bad models—it’s bad habits. If you don’t have good data hygiene at 10K rows, scaling to 10 million just multiplies the damage.

Fix the small things early. Make it boring, predictable, and documented. Then scale.

If you’re building serious AI systems, you’re not just in the business of models. You’re in the business of data for AI—sourcing it, cleaning it, maintaining it, and knowing exactly what your model’s learning from.

Get your dataset strategy right, and everything else gets easier.

What Separates a Decent AI Model from a Great One? The Data. Always the Data.

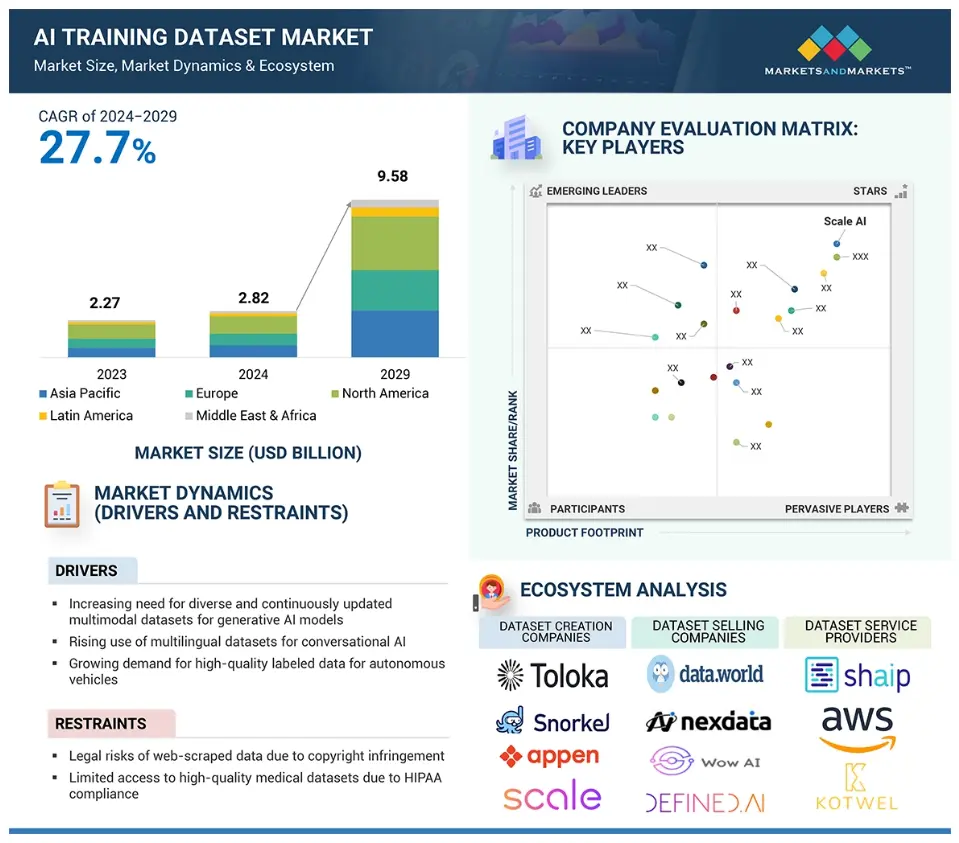

Image Source: marketsandmarkets

You can have the cleanest architecture, the latest framework, and more GPUs than you know what to do with—but if the data’s off, the model’s going nowhere.

Good AI isn’t magic. It’s just pattern recognition at scale. And those patterns come from your dataset. If you half-ass that part, the rest doesn’t matter.

That’s where most teams stumble. Not because they didn’t build a smart model, but because they didn’t take the time to collect the right training data, or didn’t know where to start.

If that sounds familiar, and you’re tired of digging through old public datasets or patching together scraps from random sources, talk to us.

At PromptCloud, we help teams build custom training datasets from the web, real, relevant, and scraped exactly for your use case. No generic feeds, no guesswork, just clean, structured data delivered at scale, ready to plug into your pipeline.

Need data that your competitors don’t have? Let’s talk.

FAQs

What makes a good AI training dataset?

A good dataset is clean, well-labeled, relevant to your task, and representative of the real-world scenarios your model will face. It should also be large enough to capture variation, but not so messy that it confuses the model.

Can I just use publicly available AI datasets for commercial models?

Sometimes, but check the license. Many public datasets are meant for research use only. If you’re building a commercial product, you’ll need permission, or you’ll need to build your own dataset from scratch or with a trusted data provider.

How often should I update my AI training data?

It depends on your domain. For static problems (e.g., basic image classification), you might not need frequent updates. But for anything involving user behavior, real-time markets, or dynamic environments, your data should be refreshed regularly, sometimes weekly or even daily.

Should I build my own dataset or buy one?

If you need domain-specific data that reflects your product or market, building your own dataset (or scraping it) is usually better. If you need something general to get started fast, buying or using open datasets can work. In most real-world cases, you’ll do a bit of both.

What role does a web scraping service provider play in AI dataset creation?

A scraping provider helps you collect large-scale, structured data from public sources on the web, like product catalogs, financial reports, job listings, or user reviews. If done right, it gives you custom datasets that are current, rich, and tailored to your use case—something off-the-shelf datasets rarely offer.