Web scraping is a valuable technique for extracting data from websites. Whether you need to gather information for research, track prices or trends, or automate routine online tasks, web scraping can save you time and effort. However, working through the intricacies of websites and the obstacles they put in a scraper’s way can be daunting. This article aims to simplify the process by explaining how web scraping works and how to approach its most common challenges. We’ll cover the steps involved, selecting the appropriate tools, identifying the target data, navigating website structures, handling authentication and captchas, dealing with dynamic content, and implementing error handling.

Understanding Web Scraping

Web scraping is the process of extracting data from websites by parsing the HTML that their servers return. It involves sending HTTP requests to web pages, retrieving their HTML content, and then extracting the relevant information, typically by locating elements with CSS selectors or XPath expressions. Scraping manually, by inspecting the source code and copying data by hand, is possible but slow and error-prone, especially when you need to collect large amounts of data.
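To make these three steps concrete, here is a minimal sketch that uses only Python’s standard library (`urllib.request` for the HTTP request and `html.parser` for parsing) rather than a third-party library such as Beautiful Soup. What it extracts, the page title and link targets, is purely illustrative, and the helper names `fetch_html` and `extract` are hypothetical.

```python
import urllib.request
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects the page <title> text and every href found on <a> tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            # attrs is a list of (name, value) pairs
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch_html(url, timeout=10):
    """Steps 1 and 2: send an HTTP GET request and return the decoded HTML."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract(html):
    """Step 3: parse the HTML and pull out the pieces we care about."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}


if __name__ == "__main__":
    sample = ("<html><head><title>Demo</title></head><body>"
              "<a href='/a'>A</a><a href='/b'>B</a></body></html>")
    print(extract(sample))  # {'title': 'Demo', 'links': ['/a', '/b']}
```

Against a live site you would call `extract(fetch_html(url))`; keeping fetching and parsing in separate functions makes the parsing logic easy to test on saved HTML.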

To automate web scraping, you can use a programming language such as Python together with parsing libraries like Beautiful Soup, browser-automation tools like Selenium, or dedicated scraping frameworks like Scrapy. These tools provide functionality for interacting with websites, parsing HTML, and extracting data efficiently.

Web Scraping Challenges

Selecting the Appropriate Tools

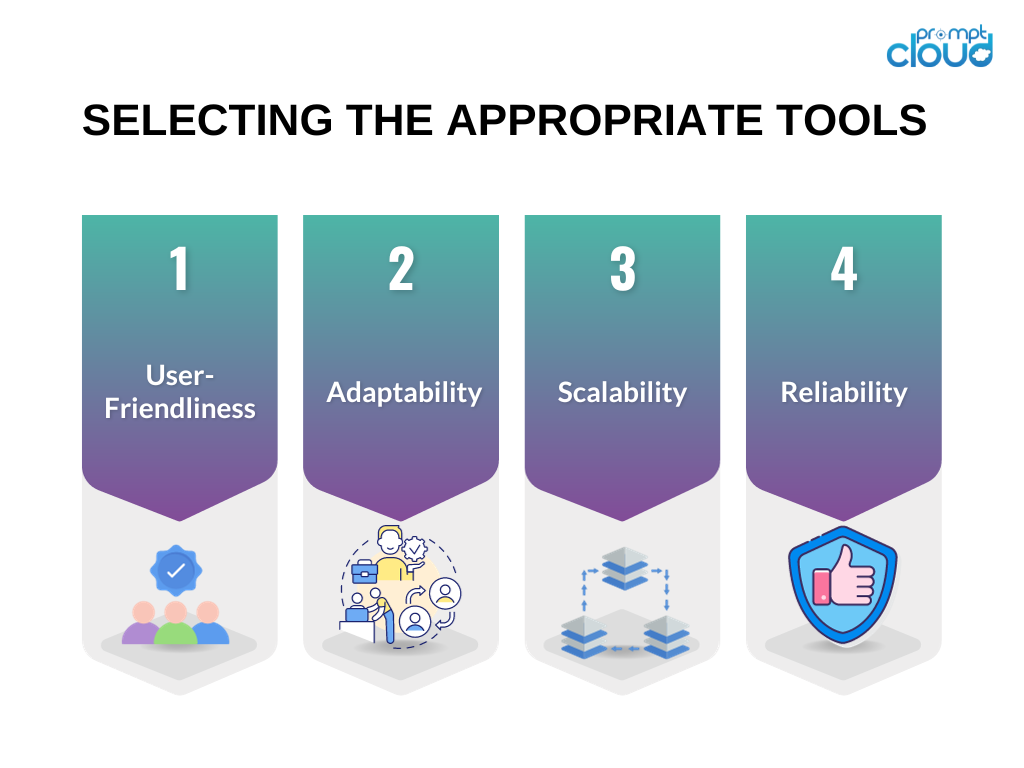

Selecting the right tools is crucial for the success of your web scraping endeavor. Here are some considerations when choosing the tools for your web scraping project:

User-Friendliness: Prioritize tools with user-friendly interfaces or those that provide clear documentation and practical examples.

Adaptability: Opt for tools capable of handling diverse types of websites and adapting to changes in website structures.

Scalability: If your data collection task involves a substantial amount of data or requires advanced web scraping capabilities, consider tools that can handle high volumes and offer parallel processing features.

Reliability: Ensure that the tools are equipped to manage various error types, such as connection timeouts or HTTP errors, and come with built-in error handling mechanisms.

Based on these criteria, widely used tools such as Beautiful Soup and Selenium are frequently recommended for web scraping projects.

Identifying Target Data

Before starting a web scraping project, it’s essential to identify the target data you want to extract from a website. This could be product information, news articles, social media posts, or any other type of content. Understanding the structure of the target website is crucial for effectively extracting the desired data.

To identify the target data, you can use browser developer tools like Chrome DevTools or Firefox Developer Tools. These tools allow you to inspect the HTML structure of a webpage, identify the specific elements containing the data you need, and understand the CSS selectors or XPath expressions required to extract that data.
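Once the developer tools have shown you where the data lives, the path you found can be applied in code. The sketch below assumes a simplified, well-formed product listing (the markup and class names are hypothetical) and uses the XPath subset supported by Python’s built-in `xml.etree.ElementTree`. ElementTree only parses well-formed XML and supports a limited subset of XPath, so for real-world HTML you would typically use a tolerant parser such as Beautiful Soup (whose `select()` method accepts CSS selectors) or lxml instead.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product listing markup (ElementTree needs valid XML).
SAMPLE = """
<html>
  <body>
    <div class="product">
      <h2 class="name">Blue Mug</h2>
      <span class="price">12.50</span>
    </div>
    <div class="product">
      <h2 class="name">Red Mug</h2>
      <span class="price">14.00</span>
    </div>
    <div class="ad"><h2 class="name">Sponsored</h2></div>
  </body>
</html>
"""


def extract_products(markup):
    root = ET.fromstring(markup.strip())
    products = []
    # ".//div[@class='product']" is ElementTree's form of the XPath
    # //div[@class='product']: every product card at any depth.
    for card in root.iterfind(".//div[@class='product']"):
        # Relative paths then reach into each card's child elements.
        name = card.findtext("h2[@class='name']", default="").strip()
        price = card.findtext("span[@class='price']", default="").strip()
        products.append({"name": name, "price": float(price)})
    return products
```

Note that the sponsored `div` is skipped because its class does not match the predicate, which is exactly the kind of distinction inspecting the page in DevTools helps you spot.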

Navigating Complex Website Structures

Websites can have complex structures with deeply nested HTML elements, content generated by JavaScript, or data loaded through AJAX requests. Extracting the relevant information from such pages requires careful analysis and a deliberate strategy.

Here are some techniques to help you navigate complex website structures:

Use CSS selectors or XPath expressions: By understanding the structure of the HTML code, you can use CSS selectors or XPath expressions to target specific elements and extract the desired data.

Handle pagination: If the target data is spread across multiple pages, your scraper must visit every page to collect all of it. You can do this by automating clicks on “next” or “load more” buttons, or by constructing the URL for each page directly, for example by changing a page-number query parameter.

Deal with nested elements: Sometimes the target data sits several levels deep in the HTML. In such cases, traverse the document through parent-child or sibling relationships to reach the information you need.
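The URL-construction approach to pagination can be sketched as follows. It assumes a site whose listing pages differ only by a `page` query parameter (`?page=1`, `?page=2`, and so on) and treats an empty page as the end of the results; both are assumptions to verify against the actual site. The `fetch_items` callback is a hypothetical placeholder for whatever download-and-parse routine you use, which also lets the loop be tested without network access.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def page_url(base_url, page, param="page"):
    """Return base_url with its `param` query parameter set to `page`."""
    parts = urlparse(base_url)
    query = dict(parse_qsl(parts.query))  # keep any existing parameters
    query[param] = str(page)
    return urlunparse(parts._replace(query=urlencode(query)))


def scrape_all_pages(base_url, fetch_items, max_pages=50):
    """Walk page=1, page=2, ... until a page yields no items.

    fetch_items(url) is a caller-supplied (hypothetical) function that
    downloads one page and returns the list of records found on it.
    """
    results = []
    for page in range(1, max_pages + 1):
        items = fetch_items(page_url(base_url, page))
        if not items:  # an empty page usually means we ran past the last one
            break
        results.extend(items)
    return results
```

The `max_pages` cap is a safety net: if the site returns the same content for out-of-range page numbers instead of an empty page, the loop still terminates.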

Handling Authentication and Captcha

Some websites require you to log in before you can access certain content, and others present captchas to block automated traffic. To deal with these challenges, you can use the following strategies:

Session management: Maintain the session’s state with cookies or tokens to handle authentication requirements.

User-Agent spoofing: Emulate different user agents to appear as regular users and avoid detection.

Captcha-solving services: Use third-party services that can automatically solve captchas on your behalf.
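The first two strategies can be sketched with Python’s standard library alone: an opener with an `HTTPCookieProcessor` keeps cookies (including a session cookie set at login) across requests, and `addheaders` replaces urllib’s default User-Agent. The login URL and the `username`/`password` form field names are hypothetical; inspect the real login form in your browser’s developer tools to find the actual endpoint and field names. Captcha solving is not covered here.

```python
import http.cookiejar
import urllib.parse
import urllib.request

# A browser-like User-Agent string; the exact value is only an example.
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)


def make_session():
    """Build an opener that keeps cookies between requests and sends a custom User-Agent."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    # Replaces urllib's default "Python-urllib/3.x" User-Agent on every request.
    opener.addheaders = [("User-Agent", USER_AGENT)]
    return opener, jar


def login(opener, login_url, username, password, timeout=10):
    """POST credentials to a (hypothetical) form-based login endpoint.

    Any session cookie the server sets is stored in the opener's CookieJar
    and sent automatically on later opener.open(...) calls.
    """
    form = urllib.parse.urlencode({"username": username, "password": password}).encode()
    with opener.open(login_url, data=form, timeout=timeout) as response:
        return response.status
```

After a successful `login(...)`, fetch protected pages with `opener.open(url)` rather than `urllib.request.urlopen(url)`, because only the opener carries the cookie jar and the custom header.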

Keep in mind that even when authentication and captchas can technically be bypassed, you should make sure your scraping activities comply with the website’s terms of service and applicable laws.

Dealing with Dynamic Content

Websites often use JavaScript to load content dynamically or fetch data through AJAX requests. Traditional web scraping methods may not capture this dynamic content. To handle dynamic content, consider the following approaches:

Use headless browsers: Tools like Selenium let you control real web browsers programmatically, optionally in headless mode (without a visible window), and interact with content rendered by JavaScript.

Use rendering-capable libraries: Tools such as Puppeteer (a Node.js library for controlling Chrome) or Scrapy-Splash (which connects Scrapy to the Splash JavaScript rendering service) can execute JavaScript and extract the resulting content.
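A headless-browser approach might look like the sketch below, which uses Selenium 4’s Python bindings to load a page in headless Chrome and wait for a JavaScript-rendered element before returning the page source. It assumes the `selenium` package and Chrome are installed, and the CSS selector to wait for is site-specific. The imports sit inside the function only so that a script containing it can still load without Selenium.

```python
def render_dynamic_page(url, wait_for_css, timeout=15):
    """Load url in headless Chrome, wait for wait_for_css to appear, return the rendered HTML.

    Requires the third-party `selenium` package (4.x) and a local Chrome install.
    """
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = Options()
    options.add_argument("--headless=new")  # run Chrome without a visible window
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Block until JavaScript has inserted the element we care about.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, wait_for_css))
        )
        return driver.page_source  # the DOM after scripts have run
    finally:
        driver.quit()  # always release the browser process
```

An explicit wait is generally more reliable than a fixed `time.sleep()`: it returns as soon as the element appears and raises a `TimeoutException` if it never does. The returned HTML can then be handed to any ordinary HTML parser.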

With these techniques, you can scrape websites that rely heavily on JavaScript to deliver their content.

Implementing Error Handling

Web scraping is not always a smooth process. Websites change their structure, return errors, or impose limits on scraping activity. To mitigate these risks, it’s important to implement error-handling mechanisms such as the following:

Monitor website changes: Regularly check if the structure or layout of the website has changed, and adjust your scraping code accordingly.

Retry and timeout mechanisms: Implement retry and timeout mechanisms to handle intermittent errors such as connection timeouts or HTTP errors gracefully.

Log and handle exceptions: Capture and handle different types of exceptions, such as parsing errors or network failures, to prevent your scraping process from failing completely.
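The retry, timeout, and logging advice can be combined into a single fetch helper. This is a minimal sketch using only the standard library: it retries network errors and a chosen set of HTTP status codes (the `RETRYABLE_STATUS` set is an assumption you should tune), waits exponentially longer between attempts, and logs every failure. The `opener` parameter exists only so the function can be tested with a fake instead of a real network call.

```python
import logging
import time
import urllib.error
import urllib.request

logger = logging.getLogger("scraper")

# HTTP status codes usually worth retrying (rate limiting, temporary server trouble).
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def fetch_with_retries(url, retries=3, backoff=1.0, timeout=10, opener=urllib.request.urlopen):
    """Fetch url, retrying transient failures with exponential backoff."""
    for attempt in range(1, retries + 1):
        try:
            with opener(url, timeout=timeout) as response:
                return response.read()
        except urllib.error.HTTPError as exc:
            # HTTPError subclasses URLError, so it must be caught first.
            # Client errors such as 404 will not fix themselves: fail fast.
            if exc.code not in RETRYABLE_STATUS or attempt == retries:
                logger.error("HTTP %s for %s, giving up", exc.code, url)
                raise
            logger.warning("HTTP %s for %s (attempt %d/%d)", exc.code, url, attempt, retries)
        except (urllib.error.URLError, TimeoutError) as exc:
            # Connection refused, DNS failure, socket timeout, ...
            if attempt == retries:
                logger.error("Network error for %s: %s, giving up", url, exc)
                raise
            logger.warning("Network error for %s: %s (attempt %d/%d)", url, exc, attempt, retries)
        # Wait backoff, 2*backoff, 4*backoff, ... seconds before the next attempt.
        time.sleep(backoff * 2 ** (attempt - 1))
```

Failing fast on codes such as 404 avoids wasting requests on pages that do not exist, while backing off on 429 and 5xx responses gives an overloaded server time to recover.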

By implementing error handling, you can make your web scraping code considerably more reliable and robust.

Summary

In conclusion, web scraping becomes much more manageable once you understand the process, choose the right tools, identify the target data, navigate website structures, handle authentication and captchas, deal with dynamic content, and implement error handling. By following these practices, you can overcome the complexities of web scraping and efficiently gather the data you need.