Web scraping has become one of the most common ways to aggregate data from multiple sources and derive valuable information from the internet. It enables data-backed solutions for everything from price matching on e-commerce websites to decision-making in the stock market. As demand for web data has grown, the internet has also been flooded with tools and services that promise to make web scraping easier. Broadly, all of them fall into one of three categories:

- Building an in-house web scraping tool with libraries such as BeautifulSoup in Python and deploying it on a cloud service such as AWS.

- Using semi-automated scraping software that can grab parts of the screen. Some human intervention is required for the initial setup, after which repeated tasks can be automated. However, the degree of automation is limited, the product or business team may face a steep learning curve, and not all websites can be scraped with these tools. Websites that generate content dynamically with JavaScript are especially difficult to handle.

- Using a Data-as-a-Service (DaaS) provider such as PromptCloud, which delivers a custom data feed based on the websites and data points you specify as requirements. These services usually charge based on the amount of data you consume, so your monthly bill depends only on the volume of data scraped, which suits companies of all sizes.

Many companies assume that the cost of the second or third option is too high and decide to build a web crawler themselves. Why not? Googling “How to build a web crawler?” returns hundreds of results, and a few of them may even work for your use case. But what is the true cost of building an enterprise-grade web crawler, deploying it to the cloud, and maintaining and updating it over time? Let’s find out.

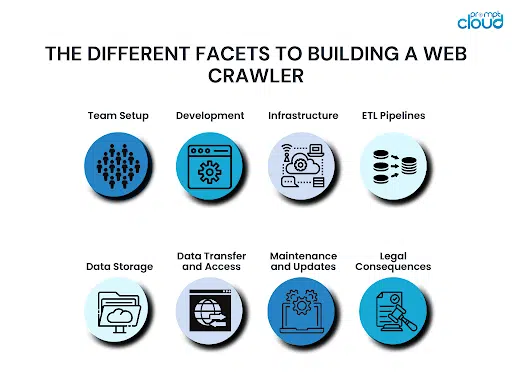

The Different Facets of Building a Web Crawler

Building a web crawler involves many aspects, and unless you factor in all of them, you may bite off more than you can chew. Costs can balloon before you reach the finish line, leaving you stuck choosing between pushing on and giving up.

Team Setup:

The main requirements for building a web crawler are programming skills and prior experience building one. Even if you have a tech team, you may lack someone with that experience to lead it. Without such a lead, you may make critical mistakes and not realize them until it’s too late.

Development:

Once the team is in place, they have to start developing your web crawler. The crawler must be able to extract every required data point from every website on your list, so it takes considerable time not only to build it but also to test edge cases and make sure it does not break. Depending on the size and experience of your team, building a new web crawler from scratch may take anywhere from a few months to a few quarters.
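To make the edge-case point concrete, here is a minimal sketch of a single extraction step. It uses Python's standard-library `html.parser` in place of BeautifulSoup so that it has no dependencies, and the CSS class names `product-name` and `product-price` are hypothetical; every real site would need its own mapping. Rather than crashing on a page that lacks a field, it reports which required fields are missing.

```python
from html.parser import HTMLParser

# Hypothetical CSS classes mapped to output field names; each target
# site needs its own mapping, which is part of the development effort.
FIELD_CLASSES = {"product-name": "name", "product-price": "price"}
REQUIRED_FIELDS = ("name", "price")
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link", "wbr"}


class ProductParser(HTMLParser):
    """Captures the text inside elements whose class is in FIELD_CLASSES."""

    def __init__(self):
        super().__init__()
        self.parts = {}     # field name -> list of text fragments
        self._field = None  # field currently being captured, if any
        self._depth = 0     # nesting depth inside the captured element

    def handle_starttag(self, tag, attrs):
        if self._field is not None:
            # Inside a captured element: track nesting so inner tags
            # such as <b> or <em> do not end the capture early.
            if tag not in VOID_TAGS:
                self._depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        for cls in classes:
            if cls in FIELD_CLASSES and tag not in VOID_TAGS:
                self._field = FIELD_CLASSES[cls]
                self._depth = 1
                break

    def handle_endtag(self, tag):
        if self._field is None or tag in VOID_TAGS:
            return
        self._depth -= 1
        if self._depth == 0:
            self._field = None

    def handle_data(self, data):
        if self._field is not None:
            self.parts.setdefault(self._field, []).append(data)


def parse_product(html):
    """Return (record, missing_fields) instead of raising on odd pages."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    record = {
        # Join fragments, then collapse runs of whitespace.
        field: " ".join("".join(chunks).split())
        for field, chunks in parser.parts.items()
    }
    missing = [f for f in REQUIRED_FIELDS if not record.get(f)]
    return record, missing
```

Reporting missing fields instead of raising lets the crawler log a broken page and move on, and the same function is easy to cover with tests built from saved sample pages.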

Infrastructure:

Building the perfect web crawler is difficult. Choosing a high-uptime cloud infrastructure that is also cost-optimized is even harder. Your infrastructure also needs to scale as your business grows and as you add more data sources.
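As a rough illustration of the scaling concern, the sketch below fetches pages concurrently with a thread pool whose size (`max_workers`) can grow with the source list, and retries transient network errors with exponential backoff. It assumes a caller-supplied `fetch(url)` function (hypothetical; in practice it might wrap `urllib.request.urlopen`) and runs on a single machine, so it does not address cloud deployment, uptime, or cost.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch_with_retry(fetch, url, retries=3, backoff=0.5):
    """Call fetch(url), retrying with exponential backoff on network errors."""
    for attempt in range(retries):
        try:
            return fetch(url)
        except OSError:  # urllib's URLError/HTTPError subclass OSError
            if attempt == retries - 1:
                raise
            # Waits backoff, 2*backoff, 4*backoff, ... between attempts.
            time.sleep(backoff * 2 ** attempt)


def crawl(urls, fetch, max_workers=4, retries=3, backoff=0.5):
    """Fetch URLs concurrently; return (results, failures) keyed by URL.

    Raising max_workers lets one machine handle more sources; beyond that,
    the same pattern is typically spread across machines via a job queue.
    """
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            pool.submit(fetch_with_retry, fetch, url, retries, backoff): url
            for url in urls
        }
        for future, url in futures.items():
            try:
                results[url] = future.result()
            except OSError as exc:
                failures[url] = repr(exc)
    return results, failures
```

Keeping failures separate from results means one unreachable site does not abort the whole crawl, and the failure list doubles as input for alerting.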

ETL pipelines:

Scraping the data points you need from the websites of your choice may not be enough. Usually, the data also needs to be normalized, formatted, cleaned, and sorted before it is stored, and all of this requires additional computing power. Since these pipelines add latency to the data flow, choosing the right infrastructure for your ETL pipelines on the cloud is vital.
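A minimal sketch of the transform step, assuming records arrive as Python dicts with hypothetical `name` and `price` keys and that prices use US-style formatting such as `$1,299.00`. It normalizes whitespace and prices, drops records it cannot repair, removes duplicates, and sorts the result before it would be loaded into storage.

```python
import re


def normalize_price(raw):
    """Turn strings like '$1,299.00' into a float, or None if unparseable.

    Assumes US-style formatting; '1.299,00' would be misread.
    """
    if raw is None:
        return None
    cleaned = re.sub(r"[^\d.]", "", raw)  # keep only digits and dots
    try:
        return float(cleaned)
    except ValueError:
        return None


def transform(records):
    """Normalize, clean, de-duplicate, and sort scraped records."""
    seen = set()
    out = []
    for rec in records:
        name = " ".join((rec.get("name") or "").split())  # collapse whitespace
        price = normalize_price(rec.get("price"))
        if not name or price is None:
            continue  # drop records that cannot be repaired
        key = (name.lower(), price)
        if key in seen:
            continue  # drop duplicates, e.g. the same item on two pages
        seen.add(key)
        out.append({"name": name, "price": price})
    return sorted(out, key=lambda r: (r["price"], r["name"]))
```

In a real pipeline each of these steps would typically be a separate, testable stage, and dropped records would be logged rather than silently discarded.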

Data Storage:

Once your data is scraped, cleaned, and ready, you need to put it in a suitable storage medium. This can be a SQL or NoSQL database, or a data warehousing solution such as Amazon Redshift. The choice depends on how much data you want to store, how frequently you want to update or fetch it, whether the number of columns may change in the future, and more. Like the rest of your resources, the database also needs to be hosted on the cloud, so its pricing has to be factored in. Additionally, if you choose MongoDB as your NoSQL database for its flexibility and scalability but also need relational querying and reporting, you can set up a synchronization process that replicates your MongoDB data into a PostgreSQL database.
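The MongoDB-to-PostgreSQL synchronization mentioned above can be sketched as an upsert that maps a few known fields to columns and keeps everything else in a JSON column, so documents that gain new fields do not break the sync. To stay self-contained, this sketch uses Python's built-in `sqlite3` as a stand-in for PostgreSQL and plain dicts with an `_id` key as stand-ins for MongoDB documents; the `products` table and its columns are hypothetical. The `INSERT ... ON CONFLICT ... DO UPDATE` statement is supported by both PostgreSQL and SQLite 3.24+, although PostgreSQL drivers such as psycopg use `%s` placeholders instead of `?`.

```python
import json
import sqlite3

# Columns kept as first-class relational fields (hypothetical); any other
# document key goes into the JSON "extra" column so new fields don't break sync.
CORE_FIELDS = ("name", "price")


def sync_documents(conn, docs):
    """Upsert document-style records (dicts with an "_id") into a table."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS products (
               id TEXT PRIMARY KEY,
               name TEXT,
               price REAL,
               extra TEXT
           )"""
    )
    rows = []
    for doc in docs:
        extra = {
            k: v for k, v in doc.items()
            if k != "_id" and k not in CORE_FIELDS
        }
        rows.append((
            str(doc["_id"]),
            doc.get("name"),
            doc.get("price"),
            json.dumps(extra, sort_keys=True),
        ))
    # Insert new rows; overwrite existing ones with the same id.
    conn.executemany(
        """INSERT INTO products (id, name, price, extra) VALUES (?, ?, ?, ?)
           ON CONFLICT(id) DO UPDATE SET
               name = excluded.name,
               price = excluded.price,
               extra = excluded.extra""",
        rows,
    )
    conn.commit()
```

A production sync would also need to handle deletions and track which documents changed since the last run, which this sketch leaves out.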

Data Transfer and Access:

Once the data is scraped and stored in a database, you may want to fetch it at regular intervals or even continuously. You may also build REST APIs to give external consumers access to your data. Building and maintaining this data access layer takes time, and your cloud provider will charge you based on the volume of data transferred.
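A minimal sketch of such a data access layer, using only Python's standard library: a hypothetical `/products` endpoint serves an in-memory list (standing in for your database) as JSON, with `limit`/`offset` pagination and a hypothetical `MAX_LIMIT` cap so that no single request transfers an unbounded amount of data. A production API would add authentication, rate limiting, and a proper web framework.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import parse_qs, urlparse

# Hypothetical in-memory dataset standing in for the database.
PRODUCTS = [{"id": i, "name": f"Product {i}"} for i in range(1, 251)]
MAX_LIMIT = 100  # cap page size to bound data transfer per request


class ProductsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        url = urlparse(self.path)
        if url.path != "/products":
            self.send_error(404)
            return
        query = parse_qs(url.query)
        try:
            limit = max(0, min(int(query.get("limit", ["50"])[0]), MAX_LIMIT))
            offset = max(int(query.get("offset", ["0"])[0]), 0)
        except ValueError:
            self.send_error(400, "limit and offset must be integers")
            return
        page = PRODUCTS[offset:offset + limit]
        body = json.dumps({"items": page, "total": len(PRODUCTS)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence default per-request logging in this sketch
```

To try it locally, one could run `ThreadingHTTPServer(("127.0.0.1", 8000), ProductsHandler).serve_forever()` and request `/products?limit=20&offset=40`. Capping the page size is one simple lever on transfer costs, since clients must page through large result sets instead of pulling everything at once.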

Maintenance and Updates:

A web crawler is never final; each release is just a version. A new version has to be built whenever a website it scrapes is modified or updated, and adding complex websites to your list may also require updates to the crawler. Regular maintenance and monitoring of your cloud resources is also vital to catch errors early and keep your compute resources healthy.
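One inexpensive form of the monitoring described above is to check each batch of scraped records for fields that suddenly come back empty, which often signals that a source website changed its layout. A minimal sketch, assuming records are dicts; the field names and the 90% threshold are hypothetical and would be tuned per source.

```python
def field_fill_rates(records, fields):
    """Fraction of records in which each field is present and non-empty."""
    if not records:
        return {f: 0.0 for f in fields}
    return {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / len(records)
        for f in fields
    }


def detect_breakage(records, fields, threshold=0.9):
    """Return the fields whose fill rate fell below the threshold.

    A sudden drop often means the site's markup changed and the
    extraction rules need updating.
    """
    rates = field_fill_rates(records, fields)
    return sorted(f for f, rate in rates.items() if rate < threshold)
```

Running a check like this after every crawl and alerting on a non-empty result turns silent data loss into a ticket for the team that maintains the crawler.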

Legal Consequences:

When scraping data from the web, you have to comply with the law. This includes the data protection laws of the country you operate in as well as those of the countries whose data you scrape. Any mistake may lead to expensive lawsuits, and the resulting payouts, settlements, or legal fees can be large enough to sink a company.

The Better Enterprise-grade Web Scraping Solution

The biggest cost of building your own web scraping solution isn’t even money; it’s time. Your business has to wait for the solution to be up and running, for new sources to be added, and more. Opting instead for a fully functional DaaS solution that delivers clean, ready-to-use data with easy integration options is a wise choice. This is why our team at PromptCloud provides fully managed, cloud-hosted web scraping solutions to our users.

You can start using data from anywhere on the web through a three-step process: you give us a list of websites and data points, validate the results of a demo crawler, and then move on to final integration. Because it is a cloud-based solution, we charge only for the amount of data you consume, which makes it affordable for companies of all sizes. A detailed cost calculation will show how much you can save with a managed DaaS solution compared with building your own web crawler.

For more details, contact our sales team at sales@promptcloud.com.