**TL;DR**

The Web Scraper Chrome extension lets you collect structured data right from your browser with no code required. Install it, build a sitemap (a crawl plan), select the data you need, and export it as CSV or JSON.

It’s great for small research projects, marketing intelligence, and testing data feasibility before scaling to managed pipelines. When your project grows, move to a professional solution like PromptCloud for scalable, compliant, and monitored web scraping.

What Is Web Scraper Chrome and When to Use It

Web Scraper is a Chrome extension that automates the process of extracting structured data from websites. It navigates pages, captures text, links, or images, and exports the output.

Ideal Use Cases:

- Market researchers: Capture product prices or reviews.

- SEO analysts: Gather blog titles or keyword metadata.

- Recruiters: Pull job listings and descriptions.

- Students/researchers: Collect data for analysis projects.

It’s perfect when you don’t need to build complex crawlers but still want structured web data fast.

Before You Start

You’ll need:

- Google Chrome installed.

- Internet connection and access to the target site.

- Permission to extract data ethically (always check robots.txt or ToS).

Step 1: Install the Web Scraper Extension

- Go to the Chrome Web Store.

- Search for Web Scraper (by webscraper.io).

- Click Add to Chrome → Add Extension.

- Once installed, you’ll see a new tab called Web Scraper inside Chrome Developer Tools.

Shortcut:

Ctrl + Shift + I # Open Developer Tools (Cmd + Option + I on macOS)

or right-click on any page and select Inspect → Web Scraper.

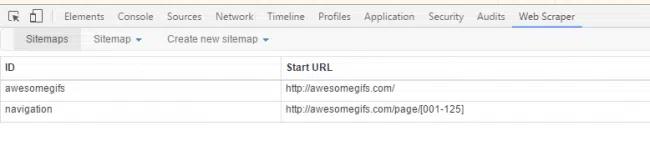

Step 2: Create a Sitemap

A sitemap is a plan that tells the scraper:

- where to start

- what to click

- what to extract

Example:

We’ll scrape GIF titles from https://awesomegifs.com.

Steps:

- Visit the site in Chrome.

- Open Developer Tools → Web Scraper tab.

- Click Create new sitemap → Create sitemap.

- Name it awesome_gifs.

- Set the Start URL:

https://awesomegifs.com/page/[1-10]

This tells the scraper to loop through pages 1 to 10 automatically.

- Click Create Sitemap.
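To make the range notation concrete, here is a minimal Python sketch of how a start URL such as https://awesomegifs.com/page/[1-10] could be expanded into individual page URLs. The function name `expand_url_range` is hypothetical, and the sketch only illustrates the idea; it is not the extension's own implementation.

```python
import re

def expand_url_range(pattern: str) -> list[str]:
    """Expand one [start-end] placeholder into a list of concrete URLs."""
    match = re.search(r"\[(\d+)-(\d+)\]", pattern)
    if match is None:
        return [pattern]  # no range placeholder, so it is a single URL
    start, end = int(match.group(1)), int(match.group(2))
    return [
        pattern[:match.start()] + str(page) + pattern[match.end():]
        for page in range(start, end + 1)
    ]

urls = expand_url_range("https://awesomegifs.com/page/[1-10]")
print(len(urls), urls[0], urls[-1])
# 10 https://awesomegifs.com/page/1 https://awesomegifs.com/page/10
```

Checking the expanded list before a run is a quick way to confirm the range covers exactly the pages you expect.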

Step 3: Add Selectors (What to Extract)

Selectors tell the scraper which data to pull from each page.

Example: Extract GIF image URLs and titles.

- Click Add new selector.

- Set:

  - Selector ID: gif_title

  - Type: Text

- Click Select and choose a GIF title on the page.

- Repeat for the image URL:

  - Selector ID: gif_image

  - Type: Element attribute

  - Attribute: src

- Click a GIF image, then Done selecting.

- Tick “Multiple” if there are several on the same page.

- Save.

✅ Pro Tip: Use Chrome’s Inspect tool to find a stable selector:

<div class="gif-card">

<img src="https://awesomegifs.com/imgs/funnydog.gif" alt="Funny Dog" />

</div>

A good CSS selector might be:

div.gif-card img
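The following sketch shows what a selector like div.gif-card img matches, using only Python's standard library. The `GifCardParser` class and the extra sidebar markup are assumptions added for illustration; the extension resolves CSS selectors in the browser rather than this way.

```python
from html.parser import HTMLParser

class GifCardParser(HTMLParser):
    """Collect (alt, src) for every <img> nested inside <div class="gif-card">."""

    def __init__(self):
        super().__init__()
        self.card_depth = 0  # greater than 0 while inside a gif-card div
        self.rows = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            classes = (attrs.get("class") or "").split()
            # Track nested divs too, so the matching </div> closes the card
            if self.card_depth or "gif-card" in classes:
                self.card_depth += 1
        elif tag == "img" and self.card_depth:
            self.rows.append((attrs.get("alt"), attrs.get("src")))

    def handle_endtag(self, tag):
        if tag == "div" and self.card_depth:
            self.card_depth -= 1

html = """
<div class="gif-card">
  <img src="https://awesomegifs.com/imgs/funnydog.gif" alt="Funny Dog" />
</div>
<div class="sidebar"><img src="/ads/banner.png" alt="Ad" /></div>
"""

parser = GifCardParser()
parser.feed(html)
print(parser.rows)
# [('Funny Dog', 'https://awesomegifs.com/imgs/funnydog.gif')]
```

The sidebar image is ignored because it does not sit inside a gif-card container, which is the point of scoping the selector to a parent class.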

Step 4: Handle Pagination

If a website uses “Next” buttons instead of numbered URLs:

- Add another selector:

  - Selector ID: pagination

  - Type: Link

- Click the Next button on the page.

- Check the box: “This is a pagination selector.”

- Save.

Now, Web Scraper will keep following the next button until it reaches the last page.
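The "follow Next until the last page" behavior can be sketched as a simple loop. In the example below, `follow_pagination` and the `fake_site` mapping are hypothetical stand-ins for a real site; the loop also stops on a repeated URL or a page cap, which guards against crawl loops.

```python
def follow_pagination(start_url, get_next_url, max_pages=100):
    """Visit pages by following 'next' links until none is left."""
    visited = []
    seen = set()
    url = start_url
    # Stop on: no next link, a URL already visited, or the page cap
    while url is not None and url not in seen and len(visited) < max_pages:
        seen.add(url)
        visited.append(url)
        url = get_next_url(url)
    return visited

# Stand-in for a real site: each page maps to its "Next" link (None on the last page)
fake_site = {"/page/1": "/page/2", "/page/2": "/page/3", "/page/3": None}
pages = follow_pagination("/page/1", fake_site.get)
print(pages)
# ['/page/1', '/page/2', '/page/3']
```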

Step 5: Run the Scraper

Click the Sitemap name → Scrape.

A new tab opens, showing progress as pages are visited and data is collected.

When done, go back to the Web Scraper tab and click Export → CSV.

Example Output:

gif_title,gif_image

"Funny Dog","https://awesomegifs.com/imgs/funnydog.gif"

"Happy Cat","https://awesomegifs.com/imgs/happycat.gif"

Step 6: Work With Extracted Data

You can now import the CSV into:

- Excel or Google Sheets for analysis

- Python (Pandas) for data processing

- Tableau/Power BI for visualization

Quick Python Example:

import pandas as pd

data = pd.read_csv('awesomegifs.csv')

print(data.head())

# Filter GIFs containing 'dog'

dogs = data[data['gif_title'].str.contains('dog', case=False)]

print(dogs)

Step 7: Troubleshooting Common Errors

| Issue | Cause | Fix |
| --- | --- | --- |
| Empty CSV | Wrong selector or JS delay | Use “Wait for Element” or test new selector |
| Browser freezes | Too many pages | Limit page range or run partial batches |
| Duplicates | Pagination overlaps | Enable deduplication or filter in Excel |
| Blank fields | Hidden JS content | Add delay or “Load More” click before scraping |

Pro Tip:

Test on 2–3 pages first, then scale to the full sitemap.
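One way to plan partial batches is to split a long page range into smaller start URL ranges and run them one at a time. The helper below is a hypothetical sketch that reuses the [start-end] notation from Step 2; the batch size of 4 is an arbitrary example.

```python
def batch_ranges(first_page, last_page, batch_size):
    """Split an inclusive page range into smaller inclusive (start, end) batches."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [
        (start, min(start + batch_size - 1, last_page))
        for start in range(first_page, last_page + 1, batch_size)
    ]

batches = batch_ranges(1, 10, 4)
start_urls = [f"https://awesomegifs.com/page/[{a}-{b}]" for a, b in batches]
print(batches)
# [(1, 4), (5, 8), (9, 10)]
print(start_urls[0])
# https://awesomegifs.com/page/[1-4]
```

Each generated pattern can be pasted in as the Start URL of a separate run, which keeps any single run small enough for the browser to handle.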

Want reliable, structured Temu data without worrying about scraper breakage or noisy signals? Talk to our team and see how PromptCloud delivers production-ready ecommerce intelligence at scale.

Advanced: Using JavaScript Rendering & Click Actions

Some pages require extra steps for dynamic or hidden content.

Example:

To click a “Show More” button before scraping reviews:

- Add a selector → Type: Element Click.

- Choose the button.

- Add a child selector to capture the newly loaded content.

To wait for AJAX content:

- Add a Delay (ms) step → 3000 (3 seconds).

- Ensure the target element appears in preview before scraping.
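A fixed delay waits the full time even when content arrives early, and fails when content arrives late. As a point of comparison, here is a minimal sketch of the polling alternative in plain Python. The `wait_until` helper and the `element_is_present` stand-in are hypothetical; in a real script the condition would query the page.

```python
import time

def wait_until(condition, timeout_s=3.0, poll_interval_s=0.1):
    """Poll condition() until it returns a truthy value or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            return None  # timed out without the condition becoming true
        time.sleep(poll_interval_s)

# Stand-in for "the element has appeared": becomes true on the third check
checks = {"count": 0}

def element_is_present():
    checks["count"] += 1
    return checks["count"] >= 3

found = wait_until(element_is_present, timeout_s=3.0, poll_interval_s=0.01)
print(found, checks["count"])
# True 3
```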

Code Example: Simulating the Same with Python

If you want to replicate the extension’s behavior in code, here’s a simple Selenium script for comparison:

from selenium import webdriver

from selenium.webdriver.common.by import By

import csv

driver = webdriver.Chrome()

try:

    driver.get("https://awesomegifs.com")

    # Each image carries the title in alt and the GIF URL in src

    images = driver.find_elements(By.CSS_SELECTOR, ".gif-card img")

    with open("gifs.csv", "w", newline="", encoding="utf-8") as f:

        writer = csv.writer(f)

        writer.writerow(["gif_title", "gif_image"])

        for img in images:

            writer.writerow([img.get_attribute("alt"), img.get_attribute("src")])

finally:

    driver.quit()  # always close the browser, even if scraping fails

This is essentially what Web Scraper Chrome automates visually with no coding required.

Why Teams Use Chrome Extensions Before Scaling

Chrome extensions like Web Scraper are excellent for validation.

Before building full-fledged pipelines, teams use them to:

- Test if a site’s structure is scrape-friendly.

- Understand field availability (e.g., product IDs, prices).

- Prototype data workflows.

Once validated, these insights inform enterprise-grade crawlers that run on servers, handle anti-bot systems, and push data into structured feeds.

PromptCloud helps clients make this transition smoothly, from manual tests to automated, monitored, and compliant pipelines.

Common Pitfalls and How to Avoid Them

1. Over-Scraping

Running unlimited scrapes can flag your IP.

✅ Fix: Add delays (2–3 seconds) and limit parallel runs.
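For anyone scripting the same idea, the sketch below spaces requests 2 to 3 seconds apart and runs them one at a time. `polite_delays` and `crawl` are hypothetical names, and the demo swaps in a fake fetch and a recorded sleep so it neither waits nor touches the network.

```python
import random
import time

def polite_delays(n_requests, base_s=2.0, jitter_s=1.0, seed=None):
    """Return one delay per request, each between base_s and base_s + jitter_s."""
    rng = random.Random(seed)
    return [base_s + rng.uniform(0, jitter_s) for _ in range(n_requests)]

def crawl(urls, fetch, sleep=time.sleep, **delay_options):
    """Fetch URLs one at a time, pausing before every request after the first."""
    delays = polite_delays(len(urls), **delay_options)
    results = []
    for i, (url, delay) in enumerate(zip(urls, delays)):
        if i > 0:
            sleep(delay)
        results.append(fetch(url))
    return results

# Demo: str.upper stands in for a real fetch, slept.append records the pauses
slept = []
pages = crawl(["/page/1", "/page/2", "/page/3"], fetch=str.upper,
              sleep=slept.append, seed=0)
print(pages, len(slept))
```

The small random jitter avoids a perfectly regular request rhythm; passing `time.sleep` (the default) makes the pauses real.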

2. Using Volatile Selectors

Auto-generated classes (.x1a2b3) change frequently.

✅ Fix: Target semantic tags like h2.product-title or use XPath:

//div[@class='gif-card']//img

3. Not Validating Data

Always check data for duplicates, missing fields, or encoding errors.

✅ Fix: Use simple QA scripts or Excel filters.
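A simple QA script along these lines might look like the sketch below, which counts exact duplicate rows and rows with an empty required field. The `qa_report` function and the "Sleepy Fox" sample row are made up for illustration.

```python
import csv
import io
from collections import Counter

def qa_report(csv_text, required_fields):
    """Count rows, exact duplicate rows, and rows with an empty required field."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    counts = Counter(tuple(row.items()) for row in rows)
    duplicates = sum(n - 1 for n in counts.values() if n > 1)
    missing = sum(
        1 for row in rows
        if any(not (row.get(field) or "").strip() for field in required_fields)
    )
    return {"rows": len(rows), "duplicates": duplicates, "missing": missing}

sample = """gif_title,gif_image
Funny Dog,https://awesomegifs.com/imgs/funnydog.gif
Happy Cat,https://awesomegifs.com/imgs/happycat.gif
Funny Dog,https://awesomegifs.com/imgs/funnydog.gif
Sleepy Fox,
"""

report = qa_report(sample, ["gif_title", "gif_image"])
print(report)
# {'rows': 4, 'duplicates': 1, 'missing': 1}
```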

4. Ignoring Compliance

Never extract private or copyrighted data. Review Importance of Ethical Data Collection for rules to follow.

Extending the Workflow

Add Nested Pages

For product sites:

- Create a Link selector for product URLs.

- Create a Child Page under it.

- Add selectors for title, price, image, and availability.
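The parent and child relationship described above can be pictured as a small tree. The sketch below uses a hypothetical list-of-dicts layout (not the extension's export format) to print each selector's path and to catch a child that points at a missing parent.

```python
def selector_paths(selectors, root="_root"):
    """Return each selector's path from the root, e.g. 'product_link > price'."""
    parents = {s["id"]: s["parent"] for s in selectors}
    paths = {}
    for selector_id in parents:
        chain, current = [], selector_id
        while current != root:
            if current not in parents:
                raise ValueError(f"unknown parent selector: {current!r}")
            if current in chain:
                raise ValueError(f"circular parent chain at {current!r}")
            chain.append(current)
            current = parents[current]
        paths[selector_id] = " > ".join(reversed(chain))
    return paths

# Hypothetical plan: one Link selector with four child selectors under it
plan = [
    {"id": "product_link", "type": "Link", "parent": "_root"},
    {"id": "title", "type": "Text", "parent": "product_link"},
    {"id": "price", "type": "Text", "parent": "product_link"},
    {"id": "image", "type": "Element attribute", "parent": "product_link"},
    {"id": "availability", "type": "Text", "parent": "product_link"},
]

paths = selector_paths(plan)
print(paths["price"])
# product_link > price
```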

Configure Wait Conditions

Use “Wait for Element” before extraction to ensure content is visible.

Example:

Wait for element: div.product-details

Delay (ms): 2000

Merge Data

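If you have multiple sitemaps (e.g., categories), export all CSVs and merge them. A plain cat would repeat the header row of every file after the first, so skip those lines:

awk 'FNR == 1 && NR != 1 { next } { print }' part1.csv part2.csv > merged.csv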

or with Python:

import pandas as pd

files = ['cat1.csv', 'cat2.csv']

# ignore_index=True renumbers rows so the merged frame has a clean index

merged = pd.concat((pd.read_csv(f) for f in files), ignore_index=True)

merged.to_csv('final.csv', index=False)

Data Delivery and Analysis

After exporting, clean and analyze your dataset.

For Analysts:

- Use Excel pivot tables to summarize.

- Import into Google Sheets for collaboration.

For Developers:

- Load into databases or APIs.

- Automate ETL jobs with scripts.

Formats:

| Format | Ideal Use |
| --- | --- |
| CSV | Tabular data, Excel, BI dashboards |
| JSON | Hierarchical or nested structures |
| XML | Legacy systems, integrations |
| SQL import | Database population |

For more insights, read Data Delivery Formats, Pros and Cons.

Troubleshooting Reference

| Issue | Cause | Resolution |
| --- | --- | --- |
| Missing images | Lazy load | Add scroll action before scrape |
| “undefined” data | Selector mismatch | Re-inspect and reselect |
| File too large | Memory overload | Export in chunks |
| Crawl loops | Pagination mislink | Stop after first loop, fix URL pattern |

Add-On Block: Advanced Insights for Web Scraper Chrome Users

1. Browser Automation vs. Traditional Web Scraping: The Hidden Divide

At first glance, browser-based tools like Web Scraper Chrome and traditional web scrapers seem identical: they both collect website data. But their underlying mechanics are entirely different.

| Aspect | Browser Automation (Web Scraper Chrome) | Traditional Web Scraping (Scripts / APIs) |
| --- | --- | --- |
| Mechanism | Automates your Chrome browser visually | Sends direct HTTP requests to servers |
| Setup Time | Minutes; no coding required | Needs Python/Node setup and maintenance |
| Speed | Slower (renders full pages) | Faster (headless or parallel requests) |
| Scalability | Limited to your local computer | Scales to thousands of pages via clusters |
| Compliance | Manual responsibility | Managed via legal/compliance frameworks |
| Monitoring | Visual observation | Logs, alerts, and uptime tracking |

Browser automation is perfect for testing, one-off analysis, and research. Traditional scraping (like PromptCloud’s managed crawlers) fits enterprise-grade, recurring data pipelines that require validation, compliance, and SLA-based reliability.

2. Using Selectors Efficiently: Tips That Separate Amateurs from Experts

Even with point-and-click interfaces, selectors remain the backbone of good scrapes. Poorly chosen selectors are among the most common causes of failed runs in Web Scraper Chrome.

Pro Tips for Cleaner Selectors

- Avoid dynamic classes. Instead of .x9a5d9a_, use semantic paths:

div.product-card h2.title

- Target attributes, not positions:

a[href*="product"]

img[alt*="logo"]

- Leverage nth-child logic for lists:

ul.results > li:nth-child(3)

- Chain selectors only as needed. Too many layers = fragile; too few = inaccurate.

- Validate in the Chrome console:

document.querySelectorAll('div.product-card h2.title').length

The count should match visible results on the page.

These habits make your scrapers resilient even when site layouts change slightly.

3. When Web Scraper Chrome Isn’t Enough: Handling Edge Cases

While Chrome extensions simplify scraping, they hit hard limits when real-world complexities arise.

Common Roadblocks and Fixes

| Scenario | Why It Fails | What To Do |
| --- | --- | --- |
| CAPTCHA or login walls | Website detects automation | Use authenticated crawlers or proxy rotation |
| Infinite scroll pages | Data loads only on scroll | Add “Scroll Down” actions or move to headless browser |
| JavaScript-heavy sites | Data loads after render | Set delay (3-5 s) or use Selenium/Puppeteer |
| Large-scale scraping | Browser memory limits | Switch to distributed crawlers |
| Layout changes | Selectors break silently | Implement schema validation & monitoring |

Once scraping shifts from “experiment” to “operation,” browser-based tools become bottlenecks. That’s the inflection point where businesses transition to managed, server-based pipelines.

4. Integrating Web Scraper Chrome with Workflow Tools (Zapier, Sheets, APIs)

Data has no value sitting in CSVs. The real power comes from integrating it into your existing workflow.

Option 1: Google Sheets

After exporting CSVs:

- Open Google Sheets → File → Import → Upload CSV

Use built-in formulas:

=AVERAGE(B2:B100) for the average price

=UNIQUE(A2:A100) for unique titles

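Automate a refresh with Apps Script. This stub only shows a notification; add your own import logic before the toast:

function refreshData() {

  // Re-import the latest CSV here, then notify the user

  SpreadsheetApp.getActiveSpreadsheet().toast("Data refreshed");

}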

Option 2: Zapier

Create a Zap:

- Trigger: new file uploaded from Web Scraper Cloud

- Actions: add row to Sheets, send Slack message, or update Airtable

Option 3: API Ingestion

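Post your scraped JSON directly to an internal API. A minimal Flask endpoint to receive it:

from flask import Flask, request

app = Flask(__name__)

@app.route("/ingest", methods=["POST"])

def ingest():

    # Expect a JSON array of scraped records; fall back to an empty list

    payload = request.get_json(silent=True) or []

    print(f"Received {len(payload)} records")

    return {"status": "ok"}, 200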

This turns a manual export into a seamless data-in-motion pipeline.
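On the sending side, an exported CSV can be converted to a JSON array and posted with the standard library. This is a sketch under assumptions: `build_ingest_request` is a hypothetical helper, and http://localhost:5000/ingest assumes the receiving service runs locally on Flask's default port.

```python
import csv
import io
import json
import urllib.request

def build_ingest_request(csv_text, url):
    """Convert exported CSV rows to a JSON array and wrap it in a POST request."""
    records = list(csv.DictReader(io.StringIO(csv_text)))
    body = json.dumps(records).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

sample = "gif_title,gif_image\nFunny Dog,https://awesomegifs.com/imgs/funnydog.gif\n"
req = build_ingest_request(sample, "http://localhost:5000/ingest")
print(req.get_method(), req.full_url, len(json.loads(req.data)))
# POST http://localhost:5000/ingest 1

# To actually send it (requires the receiving service to be running):
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)
```

Building the request separately from sending it makes the payload easy to inspect before anything leaves your machine.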

5. Lessons from Real World Teams Using Web Scraper Chrome

Practical experiences from teams that started with Web Scraper Chrome before scaling up:

- Start small, validate ROI – A retail startup monitored 200 SKUs manually and proved a 6% margin gap before automating.

- Avoid local storage – A research group lost months of scraped data due to cleared browser cache; always back up to the cloud.

- Measure data quality – Volume is useless without consistency checks; run deduplication after every scrape.

- Align with compliance early – A fintech firm paused scraping when privacy concerns arose; legal alignment prevents downtime.

- Upgrade deliberately – Most teams outgrow browser extensions in 6–12 months; migration is a sign of data maturity.

6. Troubleshooting Checklist for Web Scraper Chrome

| Symptom | Cause | Fix |
| --- | --- | --- |
| Empty export | Selector mismatch | Use “Preview Data” before scraping |
| Missing pages | Wrong pagination link | Verify “Next” selector or URL pattern |
| Blank cells | JS not loaded | Add wait = 3000 ms |
| Browser freeze | Too many tabs | Limit concurrent runs |
| Duplicates | Re-crawl overlap | Enable deduplication in Sheets/Python |
| Encoding errors | Non-UTF-8 text | Re-export as UTF-8 |

Keep this table handy; it saves hours of debugging time.
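For the encoding row, a file that is not valid UTF-8 can be re-encoded in a few lines of Python. The sketch below assumes the legacy encoding is cp1252 (common for Windows exports); that is a guess to adjust per file, and `to_utf8` is a hypothetical helper name.

```python
def to_utf8(raw: bytes, fallback_encoding: str = "cp1252") -> bytes:
    """Return raw as UTF-8 bytes, decoding with the fallback if it is not UTF-8."""
    try:
        text = raw.decode("utf-8")
    except UnicodeDecodeError:
        # Not valid UTF-8: assume the fallback encoding instead
        text = raw.decode(fallback_encoding)
    return text.encode("utf-8")

legacy = "Café Crème".encode("cp1252")  # simulate a non-UTF-8 export
fixed = to_utf8(legacy)
print(fixed.decode("utf-8"))
# Café Crème
```

Input that is already UTF-8 passes through unchanged, so the function is safe to run on every export.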

7. The Next Step: From Browser Tools to Full Data Infrastructure

The Web Scraper Chrome extension is an excellent classroom. It helps you understand how websites structure their data and where automation friction occurs.

But as your goals shift from scrape once to scrape daily, the browser becomes a ceiling.

That’s where PromptCloud comes in:

- Automated orchestration: crawls run continuously without manual input.

- Data validation: broken selectors are auto-detected and retrained.

- Quality control: schema enforcement and sampling QA ensure accuracy.

- Ethical compliance: GDPR-ready pipelines respect each site’s crawl policies.

- Seamless delivery: APIs, S3, BigQuery, or any data lake you use.

In short, PromptCloud turns your one-click Chrome experiment into an industrial-grade, compliant data engine.

Why Managed Scraping Wins Long-Term

Web Scraper Chrome is fantastic for discovery, but it’s not scalable for enterprise needs.

Browser extensions have limitations:

- No monitoring

- No deduplication or quality checks

- Limited to local CPU/memory

- Manual triggers only

PromptCloud’s managed web scraping replaces all that with:

- Distributed crawlers and smart throttling

- Automated schema validation and QA

- Real-time API or S3 data delivery

- Compliance with GDPR and data laws

This gives you consistent, clean, and compliant datasets without manual effort.

Common Business Use Cases

- E-commerce: Price, stock, and reviews across thousands of SKUs.

- Travel: Flight and hotel pricing comparisons.

- Finance: Extracting financial filings and market sentiment.

- Real Estate: Aggregating property listings across portals.

- News and Media: Content aggregation and brand monitoring.

All of these workflows start small, often with a Chrome extension, then scale with managed pipelines.

Conclusion

Browser-based scraping tools like Web Scraper Chrome make data collection accessible for everyone. They’re perfect for experimentation, validation, and short-term projects, but they have limits.

As your needs grow, stability, scale, and compliance matter more than convenience. That’s where managed pipelines come in. PromptCloud bridges the gap, offering enterprise-grade scraping infrastructure, freshness tracking, and compliance monitoring while you focus on strategy and insights.

FAQs

1. What is Web Scraper Chrome used for?

It’s a browser-based extension that lets users extract data from websites visually, without coding. You can define sitemaps, choose elements, and export datasets in minutes.

2. Can it handle dynamic JavaScript content?

Yes, but you’ll need to enable “JavaScript rendering” and set wait delays to ensure full page load.

3. How does it differ from Instant Data Scraper?

Instant Data Scraper is faster for one-off jobs but offers fewer options. Web Scraper Chrome provides multi-layer sitemaps and pagination control. See Instant Data Scraper Chrome Extensions – A Complete Guide.

4. Is it legal to scrape data using Chrome extensions?

Yes, if done responsibly. Always respect robots.txt, terms of service, and privacy laws.

5. When should I move to a managed service?

If you need recurring data, multiple sources, or high reliability, browser scraping won’t suffice. Managed scraping platforms like PromptCloud automate everything so you get clean, reliable, and compliant data feeds at scale.

6. Can I schedule scrapes automatically?

Yes. Web Scraper Chrome integrates with its Cloud platform, letting you set scheduled runs, define frequency, and download results automatically.

7. Does Web Scraper Chrome store my data online?

By default, no. All data is stored locally unless you use Web Scraper Cloud, which stores results temporarily for download.

8. Can I integrate Web Scraper Chrome with Python or R?

Absolutely. Export to CSV or JSON, then use libraries like pandas, requests, or jsonlite to automate post-processing.

9. How does PromptCloud ensure data quality?

Each dataset passes schema validation, deduplication, and QA sampling. Faulty records are auto-flagged for re-crawl within hours.

10. What are the performance limits of browser-based scrapers?

Your browser’s RAM and network bandwidth define upper limits. Typically, Web Scraper Chrome performs best for up to a few thousand pages. Beyond that, use distributed crawlers.