Most AI talk lately sounds like a broken record. Everyone’s either selling the next magic bullet or pretending like throwing a chatbot at a problem will solve enterprise pain points overnight. But Agentic AI? That’s different.

This isn’t just another AI model that finishes your sentences. Agentic AI is about giving machines a mission and letting them figure out the how on their own. Not just replying to prompts, but taking action, course-correcting, and chasing outcomes. It’s less “give me a recipe” and more “go to the store, grab the ingredients, cook dinner, clean up after, and learn how to make it better next time.”

That’s a leap, and if you’re leading data science, AI strategy, or product innovation, you know how big that is. We’re not talking about replacing analysts. We’re talking about giving them autonomous teammates who don’t get tired, don’t forget steps, and don’t wait around for someone to click ‘run.’

So yeah, it’s a big deal. Especially if you’re working in any field that’s drowning in messy, ever-changing data. And what’s the messiest, most annoying place to collect structured data from? The web.

Web data is gold, but it’s buried under garbage formatting, JavaScript traps, login walls, and legal minefields. It’s not “copy-paste” data. It’s “bring-your-hard-hat” data. That’s why even the smartest AI agents still hit walls when they try to extract real-world web data without help.

Agentic AI is powerful. But it needs clean fuel. It needs structured, reliable, real-time data to do something useful. That’s where web scraping services come in—not as a workaround, but as essential infrastructure. The pipes. The plumbing. The stuff that doesn’t get headlines but makes sure your smart agents don’t go chasing broken links and garbage HTML.

This article isn’t here to sell you hype. We’re going to walk through:

- What Agentic AI really is

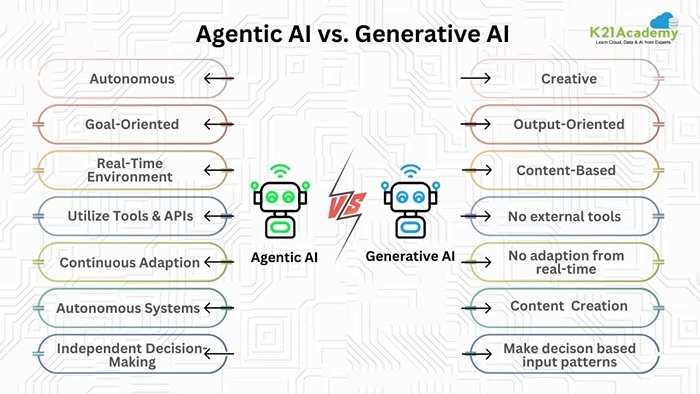

- How it stacks up against Generative AI (hint: totally different goals)

- Where Agentic AI fits into data automation

- And most importantly, why human-built, legally aware, scale-ready web scraping services are still absolutely critical—even in a world where AI does the heavy lifting

Let’s dig into the real differences next: Agentic AI vs Generative AI, because if you don’t get that difference right, you’re setting your tech stack up for disappointment.

Agentic AI vs Generative AI: Same Tech Stack, Totally Different Brains

Image Source: K21academy

You’ve probably sat through at least one meeting where someone said, “Can’t we just use generative AI for this?” It’s understandable: generative AI is everywhere right now. It writes code, spits out marketing copy, answers customer questions, and even whips up fake headshots. It’s impressive, no doubt. But here’s the thing:

Generative AI and Agentic AI are not the same. Not even close.

Let’s break it down.

Generative AI is great at creating stuff—text, images, code, audio—based on patterns it’s already seen. It’s reactive. You give it a prompt, and it gives you a result. But it has no clue why you asked in the first place, and it’s not about to go take initiative. It’s like a super-fast intern with amnesia. Ask it to write a product description? Done. Ask it to monitor competitors and adjust your product descriptions based on market shifts? Crickets.

That’s where Agentic AI comes in.

Agentic systems don’t just wait for a prompt. They decide what to do next. They can take a goal, say, “track emerging trends in the online fashion market,” and break it down into tasks like:

- Finding relevant data sources

- Scraping product catalogs and influencer posts

- Analyzing price changes or keyword spikes

- Summarizing insights

- Taking action (e.g., triggering alerts or suggesting pricing moves)
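To make that breakdown concrete, here is a minimal sketch of a goal being run as a chain of steps that share state. Everything in it is an assumption for illustration: the step functions, the `shop.example` URL, the sample records, and the 10 percent threshold are all hypothetical, and the plan is a fixed list, whereas a real agent would choose and reorder steps on its own.

```python
from typing import Callable, Dict, List

State = Dict[str, object]

def find_sources(state: State) -> None:
    # Placeholder: a real agent would search or consult a source registry.
    state["sources"] = ["https://shop.example/catalog"]

def collect(state: State) -> None:
    # Placeholder data: in practice this step is delegated to scraping infrastructure.
    state["records"] = [
        {"item": "linen shirt", "old_price": 40.0, "new_price": 32.0},
        {"item": "denim jacket", "old_price": 90.0, "new_price": 89.0},
    ]

def analyze(state: State) -> None:
    # Keep items whose price moved by at least 10 percent (hypothetical threshold).
    state["movers"] = [
        r for r in state["records"]
        if abs(r["new_price"] - r["old_price"]) / r["old_price"] >= 0.10
    ]

def summarize(state: State) -> None:
    state["summary"] = f"{len(state['movers'])} item(s) moved 10%+ in price"

def act(state: State) -> None:
    # "Taking action" here just means producing alert strings.
    state["alerts"] = [f"ALERT: {r['item']}" for r in state["movers"]]

# A fixed plan for illustration; an agent would build this list itself.
PLAN: List[Callable[[State], None]] = [find_sources, collect, analyze, summarize, act]

def run_agent(goal: str) -> State:
    state: State = {"goal": goal}
    for step in PLAN:
        step(state)
    return state
```

Calling `run_agent("track emerging trends in the online fashion market")` walks the five steps in order and leaves the alerts and summary in the returned state.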

They don’t need someone holding their hand at every step. That’s agency. That’s autonomy.

Now here’s the kicker: Agentic AI still uses generative AI under the hood, often for specific tasks like generating summaries or writing scripts. But the brain driving the whole thing? That’s the agent, the part that decides what to do next, and when, and how.

So no, “just use ChatGPT” won’t cut it when you’re trying to build autonomous systems that actually do things, not just say things.

But even autonomous data agents, smart as they are, can’t fake experience. Especially when it comes to navigating the real world of web data.

An agent might know how to extract a product list from a site. But does it know whether that site allows scraping? Can it handle rate limiting, anti-bot detection, or rotating proxies? Can it guarantee clean, labeled, structured data that integrates into your pipelines?

Usually? No. And that’s where this all comes together. The true power isn’t Agentic AI versus web scraping for real-time data. It’s Agentic AI working with high-quality, enterprise-grade web scraping infrastructure. One provides the intelligence. The other delivers the fuel.

In the next section, we’ll dive into what Agentic AI can actually do right now, and what it can’t, when it comes to autonomous data collection.

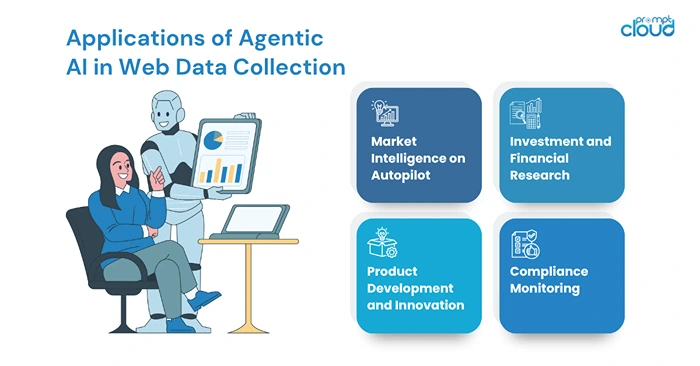

Real-World Applications of Agentic AI in Web Data Collection

Let’s get one thing straight: Agentic AI isn’t some far-off fantasy. It’s already out there, working behind the scenes in serious use cases. Especially when it comes to data, specifically, web data.

Companies are starting to use agentic systems to handle complex workflows that used to eat up whole teams. We’re talking about automating the full lifecycle of data tasks: identifying sources, gathering info, cleaning it, interpreting patterns, and kicking off responses.

Sounds dreamy, right? It’s happening. But with some serious caveats we’ll get into in a minute.

Let’s look at where Agentic AI and web scraping are already working together:

1. Market Intelligence on Autopilot

Instead of hiring an analyst to manually gather competitive pricing or product catalog updates, autonomous data agents can monitor competitors’ websites, detect changes, and send alerts when thresholds are crossed. They don’t just scrape data. They decide when to scrape, where to look, and what matters.

2. Investment and Financial Research

Agents can scan hundreds of news outlets, social media pages, company sites, SEC filings, and other public sources to extract signals—mergers, leadership changes, regional expansion, etc. One hedge fund used a system like this to scan 10,000+ web sources daily and flag early indicators of risk. That’s not just automation. That’s decision support.

3. Product Development and Innovation

Looking to break into a new category? An agentic system can monitor niche forums, customer reviews, ecommerce listings, and patents to spot gaps in the market or emerging needs. It pulls together insights from unstructured web data—things your CRM or internal dashboards will never show you.

4. Compliance Monitoring

Agentic systems can regularly scan websites or vendor pages for compliance updates—think terms of service, privacy policy changes, or product recall announcements. Instead of waiting for legal to flag something, these agents proactively find risks.
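As a rough illustration of that kind of monitoring, the sketch below compares two snapshots of a policy page and reports which lines changed. The function name and the sample policy text are made up for this example, and it assumes the page has already been fetched and reduced to plain text.

```python
import difflib
from typing import List

def changed_lines(old_text: str, new_text: str) -> List[str]:
    """Return removed lines prefixed with '- ' and added lines prefixed with '+ '."""
    old_lines = old_text.splitlines()
    new_lines = new_text.splitlines()
    changes: List[str] = []
    matcher = difflib.SequenceMatcher(None, old_lines, new_lines)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            changes += [f"- {line}" for line in old_lines[i1:i2]]
        if tag in ("replace", "insert"):
            changes += [f"+ {line}" for line in new_lines[j1:j2]]
    return changes

# Hypothetical snapshots of a vendor's privacy policy.
yesterday = "We keep data for 30 days.\nWe do not sell data."
today = "We keep data for 90 days.\nWe do not sell data."

for line in changed_lines(yesterday, today):
    print(line)
```

An empty result means nothing changed. Anything else is a candidate for a human or an agent to review.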

These aren’t science projects. These are real workflows running at scale in smart companies. But here’s the reality check: Agentic AI is only as strong as its data pipeline. And web data? It’s not plug-and-play.

Web scraping at enterprise scale isn’t a Saturday side project. Sites block bots. They change layouts without warning. Some bury data in AJAX. Some require login flows. And some will straight-up sue you if you don’t follow the rules.

Agentic AI might try to navigate that, but it’s not built for cat-and-mouse games. It doesn’t have decades of human context about copyright law, robots.txt ethics, or which proxies are reliable in Indonesia on a Tuesday morning.

So while agentic systems can ask for data and act on it, they still need expert infrastructure to get that data in a usable, legal, and scalable way. That’s where web scraping services come in. Not as an add-on, but as a core dependency.

Why Web Scraping Infrastructure Is Essential for Agentic AI

Here’s the part that too many AI-first evangelists don’t like to talk about: Agentic AI doesn’t replace web scraping services; it depends on them.

Yes, agents can figure out what data they need. They can map out steps, initiate processes, and even loop through retries if something breaks. That’s cool. But when it comes to actually navigating the web at scale, legally, and reliably? That’s where things get real messy, real fast.
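The "loop through retries" part is the easy bit, and a sketch shows why. The helper below is a hypothetical example of retrying a task with exponential backoff. The name `with_retries`, the default attempt count, and the delays are assumptions, not anything a specific agent framework prescribes.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def with_retries(
    task: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run task, retrying on any exception with exponentially growing delays."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return task()
        except Exception:
            # Out of attempts: surface the last error to the caller.
            if attempt == attempts - 1:
                raise
            # Wait base_delay, then 2x, then 4x, and so on.
            sleep(base_delay * (2 ** attempt))
    raise AssertionError("unreachable")
```

Retrying helps with transient failures. It does nothing for a changed layout or a blocked IP, which is where the harder problems start.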

Let’s break down why web scraping services are still doing the heavy lifting behind the scenes and why they’re more than just a data pipeline.

1. Web Scraping Is Technically Brutal

Most websites aren’t designed to be scraped. They’re designed to be seen by humans, clicked through, loaded dynamically, and occasionally frustrate you with pop-ups.

An agent might know it needs product data from a site. But does it know how to handle infinite scroll? Does it adjust for CAPTCHAs? Does it detect when the HTML structure has silently changed, even though the page looks identical?
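One simple way to catch a silent structure change is to fingerprint the page skeleton rather than its text. The sketch below is an assumption about how that could look, not a description of any provider’s method: it hashes the sequence of tags and `class` attributes using only the Python standard library, and the sample HTML is invented.

```python
import hashlib
from html.parser import HTMLParser
from typing import List

class _Skeleton(HTMLParser):
    """Collects 'tag.class' for every opening tag and ignores text content."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: List[str] = []

    def handle_starttag(self, tag, attrs):
        classes = dict(attrs).get("class") or ""
        self.parts.append(f"{tag}.{classes}")

def structure_fingerprint(html: str) -> str:
    parser = _Skeleton()
    parser.feed(html)
    parser.close()
    skeleton = "|".join(parser.parts)
    return hashlib.sha256(skeleton.encode("utf-8")).hexdigest()

# Same layout, different price: the fingerprint stays the same.
page_monday = '<div class="product"><span class="price">$10</span></div>'
page_tuesday = '<div class="product"><span class="price">$12</span></div>'
# Renamed class: looks identical in a browser, but selectors would break.
page_redesign = '<div class="product"><span class="amount">$12</span></div>'

print(structure_fingerprint(page_monday) == structure_fingerprint(page_tuesday))
print(structure_fingerprint(page_monday) == structure_fingerprint(page_redesign))
```

A changed fingerprint is only a signal that a crawler needs a look. Deciding what moved where still takes the kind of maintenance work described next.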

Scraping providers build, test, monitor, and fix crawlers constantly. They maintain proxy infrastructure, handle browser rendering, and optimize throughput. That’s not AI magic. It’s battle-tested engineering. You don’t just toss a self-directed bot at the open web and hope it works.

2. Data Quality Still Needs a Human Touch

Let’s say the agent does manage to pull some data. Now what? Is it clean? Consistent? Does it line up with what your systems expect?

Good data extraction means more than pulling values. It means dealing with different date formats, currencies, multilingual content, broken tags, and encoding issues. It means de-duplicating records and understanding when two pages with the same layout have totally different business meanings.
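Here is a small sketch of two of those chores, date normalization and de-duplication. The field names (`sku`, `date`), the list of accepted formats, and the sample records are hypothetical. Note the built-in assumption that slash-separated dates are day-first. A real pipeline would need to know each source’s locale.

```python
from datetime import date, datetime
from typing import Dict, Iterable, List

# Assumed formats for this example; "%d/%m/%Y" treats 05/03/2025 as 5 March.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y")

def normalize_date(raw: str) -> date:
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")

def dedupe(records: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Drop records that share the same normalized (sku, date) key."""
    seen = set()
    unique: List[Dict[str, str]] = []
    for rec in records:
        key = (rec["sku"].strip().upper(), normalize_date(rec["date"]))
        if key in seen:
            continue
        seen.add(key)
        unique.append({**rec, "sku": key[0], "date": key[1].isoformat()})
    return unique

scraped = [
    {"sku": "ab-1", "date": "2025-03-05"},
    {"sku": "AB-1 ", "date": "05/03/2025"},    # same record, different formatting
    {"sku": "ab-1", "date": "March 6, 2025"},  # genuinely new observation
]
print(dedupe(scraped))
```

The first two records collapse into one once the SKU and date are normalized, and the third survives as a separate observation.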

That kind of nuance? It’s not easy to learn, especially not from scratch. Scraping services embed quality control, validation logic, enrichment, and formatting into the flow. They’re not just getting the data—they’re making sure it’s usable.

3. Compliance Isn’t Optional

Web scraping lives in a legal gray zone. The line between public data access and overstepping boundaries isn’t always obvious. What’s legal in the U.S. might be off-limits in Europe. Some sites explicitly prohibit scraping. Others are vague. Some send cease-and-desists for fun.

An AI agent has no legal intuition. It doesn’t “feel” risk. That’s a problem when you’re scraping at enterprise scale.

In reality, agentic AI for enterprise automation needs solid infrastructure beneath it. It can strategize and orchestrate, but only if someone is keeping the data flowing, clean, and on the right side of the law.

Professional web scraping services build guardrails: they respect robots.txt, they implement rate limiting, they anonymize IPs, and—importantly—they know when to stop. Some even consult legal teams before taking on new targets. That kind of due diligence doesn’t come baked into even the most advanced AI.
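Two of those guardrails, honoring robots.txt and spacing out requests, can be sketched with the Python standard library. This is a minimal illustration under stated assumptions: the robots.txt content, the `demo-bot` user agent, and the `MinIntervalLimiter` class are invented for the example. Passing a robots.txt check is also not the same thing as legal clearance.

```python
import time
from typing import Callable, Optional
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, parsed from memory so no network call is needed.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

def build_policy(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

class MinIntervalLimiter:
    """Blocks so that consecutive calls to wait() are at least min_interval apart."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None

    def wait(self) -> None:
        now = self._clock()
        if self._last is not None:
            remaining = self._min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now

policy = build_policy(ROBOTS_TXT)
print(policy.can_fetch("demo-bot", "https://shop.example/products/1"))
print(policy.can_fetch("demo-bot", "https://shop.example/private/report"))
print(policy.crawl_delay("demo-bot"))
```

A crawler would check `can_fetch` before each request and call `wait()` on a limiter configured from `crawl_delay`, falling back to a conservative default when the site does not specify one.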

4. Adaptability Is Baked into Human Teams

The web changes all the time. If your AI agent fails because the site layout changed slightly or the data field moved, what happens next?

In a robust scraping service, there’s a team monitoring crawler health 24/7. They’re fixing breaks before your AI even notices something’s wrong. They’re proactive, not reactive. That’s the kind of reliability enterprise systems demand.

And if your data goals shift? If your team decides to scrape new regions, new sites, or new content types? A scraping provider pivots with you. They don’t need retraining—they just build.

5. Scalability Isn’t Just About Code

Sure, an agent can scale in theory. It can spin up multiple workflows and run in parallel. But scraping at scale means more than concurrency. It means managing hundreds or thousands of active sessions, across rotating proxies, in multiple time zones, under different user agents, through varying response formats.

There’s real-world overhead here: costs, latency, availability, throughput, and API rate limits. This is where scraping services shine. They’ve already built for scale, and they’ve learned (sometimes the hard way) what breaks.
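Concurrency is the one piece of this that fits in a few lines. The sketch below caps the number of in-flight requests with an `asyncio.Semaphore`. The `fetch` callable is a stand-in supplied by the caller, and `fetch_all` and `max_concurrent` are names chosen for this example. Proxies, sessions, and user agents are exactly the parts it leaves out.

```python
import asyncio
from typing import Awaitable, Callable, List, Sequence

async def fetch_all(
    urls: Sequence[str],
    fetch: Callable[[str], Awaitable[str]],
    max_concurrent: int = 5,
) -> List[str]:
    """Fetch every URL, never running more than max_concurrent fetches at once."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def guarded(url: str) -> str:
        async with semaphore:
            return await fetch(url)

    # gather() returns results in the same order as the input URLs.
    return await asyncio.gather(*(guarded(url) for url in urls))

async def fake_fetch(url: str) -> str:
    # Stand-in for a real HTTP request.
    await asyncio.sleep(0)
    return f"body of {url}"

if __name__ == "__main__":
    pages = asyncio.run(fetch_all(["a", "b", "c"], fake_fetch, max_concurrent=2))
    print(pages)
```

Everything else in the list above (cost, latency, availability, rate limits) is operational work that a semaphore does not solve.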

Agentic AI doesn’t kill the need for scraping services. It makes them more important. Because the better your agent is, the more it needs high-quality, structured, real-time data to act on. And the only way to consistently get that from the web? Through the kind of focused, specialized infrastructure that scraping providers have been quietly perfecting for over a decade, and that now underpins scalable data pipelines with AI at the core.

How Agentic AI and Web Scraping Services Will Coexist

If there’s one takeaway here, it’s this: Agentic AI is not replacing web scraping for real-time data. It’s making it more important. And the future isn’t one or the other. It’s both, working in sync.

We’re entering a new phase in data automation. Not just faster scripts or prettier dashboards—but systems that think and act without waiting for a prompt. That’s what Agentic AI for enterprise automation promises. But thinking is only half the job.

The other half? Reliable, real-time, clean data from the most chaotic, constantly changing information source in the world: the internet.

That’s why the winners in this space won’t be the companies trying to go all-in on DIY agents. It’ll be the ones who build hybrid systems—where Agentic AI handles the decision-making, and battle-tested scraping infrastructure handles the execution.

Let’s look at how this plays out.

Agents Set the Goals, Scraping Services Deliver the Goods

Imagine an Agentic AI system tasked with tracking emerging competitors across a dozen countries. It decides what sources to monitor—startup databases, ecommerce platforms, company press releases, and social media.

But then what?

It can’t brute-force its way through login walls, language variations, and dynamic site elements. That’s where scraping infrastructure takes over: authenticating where needed, navigating unstructured pages, parsing the content, and handing over structured, high-confidence data.

The agent doesn’t care how the data got there. But it definitely needs to be accurate, complete, and fresh. That’s the division of labor.
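On the agent’s side of that handoff, "complete and fresh" can be checked mechanically before acting on a record. The sketch below is one hypothetical way to do it: the required fields, the 24-hour freshness window, and the sample records are all assumptions. Accuracy itself can’t be verified this way and still depends on the provider’s QA.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List

# Assumed schema for this example.
REQUIRED_FIELDS = ("url", "title", "price", "scraped_at")

def record_problems(
    record: Dict[str, object],
    now: datetime,
    max_age: timedelta = timedelta(hours=24),
) -> List[str]:
    """Return a list of reasons the record should not be acted on (empty if fine)."""
    problems = [
        f"missing {name}" for name in REQUIRED_FIELDS
        if record.get(name) in (None, "")
    ]
    scraped_at = record.get("scraped_at")
    if isinstance(scraped_at, datetime) and now - scraped_at > max_age:
        problems.append("stale")
    return problems

now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
fresh = {
    "url": "https://shop.example/p/1",
    "title": "Linen shirt",
    "price": 32.0,
    "scraped_at": datetime(2025, 1, 2, 6, 0, tzinfo=timezone.utc),
}
old_and_incomplete = {
    "url": "https://shop.example/p/2",
    "title": "Denim jacket",
    "price": None,
    "scraped_at": datetime(2025, 1, 1, 0, 0, tzinfo=timezone.utc),
}
print(record_problems(fresh, now))
print(record_problems(old_and_incomplete, now))
```

An agent could skip or re-request any record that comes back with a non-empty problem list rather than deciding on bad input.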

Agentic AI Doesn’t Need to Know the Plumbing, But Someone Does

Just like a product manager doesn’t need to know how a server is patched, an AI agent doesn’t need to understand how proxies are rotated or headers are spoofed. But somebody has to.

Web scraping services are that “somebody.” They’re the invisible hands making sure the agent isn’t flying blind, or worse, ingesting garbage data and making dumb decisions. You can’t just spin up an agent and expect the web to roll over and hand you clean JSON.

It’s like building a Formula 1 car with no pit crew. Sure, it can drive, but when something breaks (and it will), you’re toast.

The Smartest Companies Are Already Building Hybrid Models

This isn’t theoretical. Companies at the leading edge of AI adoption are already pairing agentic orchestration with human-validated data feeds. They’re using agents to direct the flow: what’s needed, where to look, and what actions to take based on the data.

But the actual collection and validation? That still lives with professional scraping teams.

The result: faster insights, leaner ops, and fewer errors—without compromising on legality, uptime, or data quality.

This hybrid model is where things are heading. It’s not AI versus humans. It’s AI plus deep domain expertise. And it’s a massive opportunity for forward-thinking orgs that don’t fall for the hype and actually build for reality.

Where PromptCloud Fits In: The Backbone for Agentic AI Data Needs

If you’ve made it this far, you get the picture: Agentic AI can’t run without clean, consistent, and compliant data—and the web isn’t giving that up easily. That’s where PromptCloud steps in.

PromptCloud isn’t just another scraping tool or proxy provider. We’re a full-stack web data platform—designed to support scalable data pipelines with AI, even when your needs span thousands of URLs and regions. We work with some of the biggest names across e-commerce, finance, market research, and AI innovation—because we don’t just extract data, we deliver production-grade pipelines.

In the age of Agentic AI, here’s what that means:

- Custom scraping solutions that are tailor-made for your sources, formats, and update frequency—because “off-the-shelf” doesn’t cut it when agents are making real-time decisions.

- Legal-first approach with transparent compliance practices, so your AI systems don’t accidentally wander into gray zones that trigger lawsuits or regulatory nightmares.

- Scalable infrastructure that can handle thousands of sources across geographies and languages without blinking—while your agents keep their focus on high-level strategy.

- Real-time change detection and site monitoring, so even if the structure of a key data source shifts overnight, your pipeline doesn’t miss a beat.

- Human-in-the-loop QA, to make sure the data your agents use is clean, labeled, and ready to act on—because even the smartest AI still needs someone watching its blind spots.

We’re already helping AI-first teams build smarter, faster, and more resilient data systems. And as Agentic AI becomes more embedded in the enterprise stack, PromptCloud is ready to be the data infrastructure that powers it.

You bring the intelligence. We bring the fuel.

Want to build an AI system that doesn’t just sound smart but acts smart?

Let PromptCloud handle the data complexity, so your agents can focus on what they’re built for: taking action. Contact us today!

FAQs

1. What is Agentic AI, and how is it different from traditional AI?

Agentic AI refers to AI systems that can take a goal, plan the actions needed to reach it, and carry them out without step-by-step human instructions. Unlike traditional or rule-based AI, agentic systems exhibit autonomy: they decide what to do and how to do it. This goes far beyond just responding to prompts. It’s a shift from reactive to proactive AI, and it’s foundational to the next generation of automation.

2. Agentic AI vs Generative AI: what’s the real difference?

While both fall under the broader AI umbrella, they serve different purposes. Generative AI is designed to create content—text, images, code, etc.—based on existing patterns. It’s output-focused and prompt-driven. Agentic AI, on the other hand, is goal-focused. It can initiate tasks, adapt to changing inputs, and make decisions on its own. Think of generative AI as the artist, and agentic AI as the strategist or operations lead.

3. Can Agentic AI handle web scraping by itself?

Not reliably. Agentic AI can identify what data it needs and why, but the actual execution of web scraping—navigating different site structures, handling authentication, complying with legal frameworks, managing proxy infrastructure—is still incredibly complex. That’s why dedicated web scraping services like PromptCloud are essential. They ensure your agents are fed clean, structured, compliant data.

4. What are the main applications of Agentic AI in data workflows?

Agentic AI is already being used to automate complex workflows like competitive intelligence gathering, trend tracking, compliance monitoring, product research, and financial news aggregation. It excels at decision-based tasks where static scripts would break or fall short. But again, the success of these use cases depends heavily on access to consistent, high-quality external data, especially from the web.

5. Why are web scraping services still critical if AI can automate so much?

Because the web is unpredictable. Layouts change. Data hides behind scripts. Legal risks shift by region. AI can automate a lot, but it doesn’t yet replace human insight, infrastructure, or real-world experience when it comes to navigating the complexity of web data. Scraping services bring legal compliance, domain expertise, and reliability—everything AI still lacks. That’s why they’re not becoming obsolete. They’re becoming more essential.