**TL;DR**

Scraping Amazon prices at scale sounds simple, but the site is built to block bots. With dynamic content, geo-based pricing, and aggressive anti-scraping tech, self-built scripts fail fast. A web scraping service provider handles proxy logic, retries, data structuring, and scale, so you get accurate, usable data without constant firefighting.

Price Scraping Is Harder on Amazon Than You Think

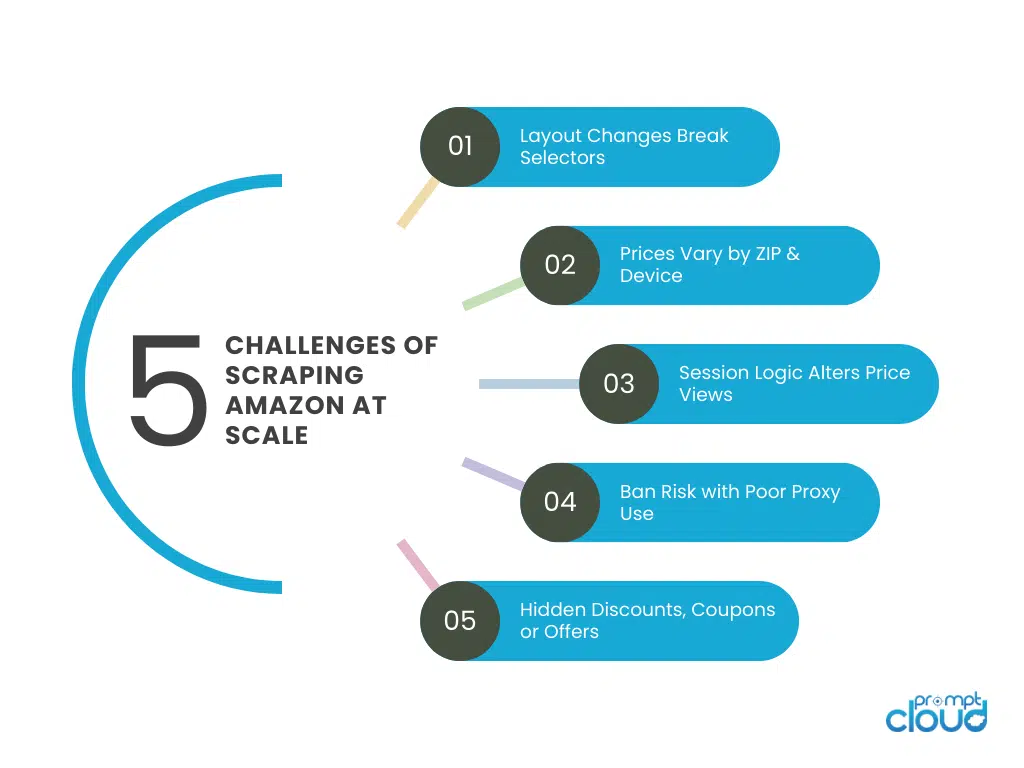

Scraping Amazon prices isn’t like scraping a product list from a blog—it’s an arms race. The site constantly shifts its structure, enforces strict anti-bot measures, and personalizes content based on geography, device, and browsing history. What loads for you may not even exist for a crawler without the right fingerprint.

Here’s what makes Amazon price scraping especially painful:

| Challenge | Why It Matters |
| --- | --- |
| Frequent UI changes | Breaks selectors, so price fields or ASINs go missing |
| Dynamic rendering (JS) | Pages load late; scrapers see partial HTML |
| CAPTCHA / bot detection | Blocks after a few dozen requests |
| Geotargeted prices | Prices vary by ZIP, delivery pin, or mobile region |
| Buy Box volatility | Prices change based on seller, time, or cart state |
| Mobile vs desktop variance | Some offers are visible only in the mobile experience |

Unless your scraping logic can keep up with Amazon’s speed of change, you’ll end up with gaps, stale data, or blocked IPs.

Want Amazon price data at scale?

If you're evaluating whether to keep scaling DIY infrastructure or move to governed global feeds, this is the conversation to have.

Why DIY Tools and Scripts Break Fast

Sure, you can spin up a Selenium bot or run Scrapy with proxies. It may work—until it doesn’t. DIY scraping setups often fail due to:

- Static logic: Hardcoded selectors don’t adapt when Amazon changes HTML layouts.

- Proxy mismanagement: IP bans start after a few requests unless you rotate cleanly.

- No retry logic: You might miss data due to timeouts or soft blocks without even knowing.

- No QA: HTTP 200 doesn’t mean you scraped the actual price; you may have captured only a product shell.

Most homegrown scrapers operate like this:

Crawl → Extract → Save → Hope

But when you’re tracking thousands of products across regions, categories, and sellers—hope isn’t a strategy. You need structured retries, fallback proxies, and active monitoring to even stay afloat.
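As a minimal sketch of structured retries, assume a caller-supplied `fetch` function (hypothetical; it could wrap any HTTP client) that returns a status and body, or `None` on timeout. The loop treats an HTTP 200 without a price container as a soft block rather than a success, and backs off exponentially with jitter between attempts. `PRICE_MARKER` is an illustrative placeholder, not Amazon's real markup.

```python
import random
import time
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class FetchResult:
    status: int
    body: str


# Illustrative placeholder: a string we assume appears only when a real price rendered.
PRICE_MARKER = "data-price="


def classify(result: Optional[FetchResult]) -> str:
    """Label one attempt as 'ok', 'soft_block', or 'hard_fail'."""
    if result is None or result.status != 200:
        return "hard_fail"  # timeout, connection error, 403/429/503, etc.
    if PRICE_MARKER not in result.body:
        return "soft_block"  # HTTP 200, but a CAPTCHA page or empty product shell
    return "ok"


def fetch_with_retries(
    fetch: Callable[[str], Optional[FetchResult]],
    url: str,
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[Optional[FetchResult], List[str]]:
    """Retry until a page with a price marker arrives; return (result, outcome log)."""
    outcomes: List[str] = []
    for attempt in range(max_attempts):
        result = fetch(url)
        outcome = classify(result)
        outcomes.append(outcome)
        if outcome == "ok":
            return result, outcomes
        if attempt < max_attempts - 1:
            # Exponential backoff with full jitter: wait 0..base_delay * 2^attempt seconds.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    return None, outcomes
```

Returning the outcome log alongside the result means soft blocks get counted instead of silently passing as successes.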

What a Web Scraping Service Actually Does Differently

A web scraping service doesn’t just “fetch” pages—it engineers reliability.

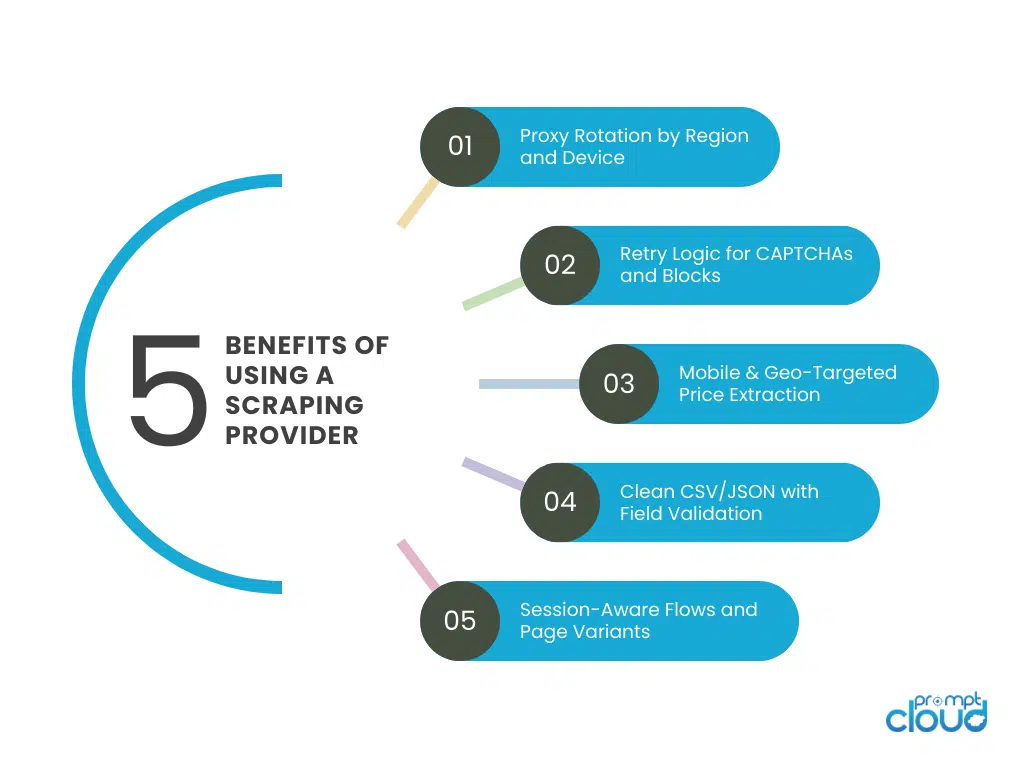

While DIY scripts break under Amazon’s rotating ASINs, layout tweaks, and session controls, a professional service builds scraping logic that adapts. Here’s what you get:

| Functionality | DIY Script | Web Scraping Service |
| --- | --- | --- |
| Proxy & IP Rotation | Manual / none | Automated, country-targeted |
| Ban Detection & Retry Logic | Rare or missing | Built-in retry w/ escalation |
| Geo & Mobile Rendering Support | Needs extra config | Native support |
| Structured Output | Raw HTML / broken fields | Clean CSV/JSON, deduped |
| Monitoring & QA | Manual spot checks | Automated field validation |

Instead of chasing bugs, you focus on using the data. The service handles:

- Proxy rotation across datacenter, residential, and mobile IPs

- Retry logic for timeouts, soft blocks, and CAPTCHAs

- Geo-routing via US ZIP codes or device-level targeting

- Field-level monitoring to catch layout changes or field gaps

- Delivery pipelines that format and send deduped price data to your systems
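Deduplication is mostly a question of choosing the right key. A minimal sketch, assuming each scraped row carries an ASIN, seller ID, delivery ZIP, and a timezone-aware timestamp (the `PriceRecord` shape and the one-hour bucket are illustrative choices), keeps only the latest observation per key and time bucket:

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class PriceRecord:
    asin: str
    seller_id: str
    zip_code: str
    price: float
    scraped_at: datetime  # assumed timezone-aware


def dedupe(records: Iterable[PriceRecord], bucket_minutes: int = 60) -> List[PriceRecord]:
    """Keep the most recent record per (ASIN, seller, ZIP, time bucket)."""
    latest: Dict[Tuple[str, str, str, int], PriceRecord] = {}
    for rec in records:
        bucket = int(rec.scraped_at.timestamp() // (bucket_minutes * 60))
        key = (rec.asin, rec.seller_id, rec.zip_code, bucket)
        if key not in latest or rec.scraped_at > latest[key].scraped_at:
            latest[key] = rec
    # Stable, readable output order.
    return sorted(latest.values(), key=lambda r: (r.asin, r.seller_id, r.zip_code, r.scraped_at))
```

Including the ZIP in the key matters: the same ASIN and seller at two delivery locations are two legitimate observations, not duplicates.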

Most importantly, scraping services are built for scale. Whether you’re tracking 500 products or 5 million, they can expand without additional dev work from your end.

Amazon-Specific Challenges That Need Experience

Amazon isn’t just another ecommerce site—it’s a fortress of price personalization, real-time updates, and aggressive bot detection. And it’s not just about getting the price. It’s about getting the right price, for the right product, in the right condition, at the right time.

Here’s what a general-purpose scraper often misses:

- Buy Box Shifts: Prices can change every few minutes based on seller rank, shipping, or promo triggers.

- Mobile vs Desktop Views: Mobile users often see different pricing, layout, or even product bundles.

- Location-Specific Pricing: Delivery pin codes or regional warehouses influence prices and availability.

- A/B Price Testing: Some users get different prices for the same ASIN during testing.

- App-Only Promotions: Mobile-exclusive discounts or flash sales often don’t appear in desktop HTML.

A scraping service with Amazon expertise knows how to:

- Rotate mobile and desktop UAs based on context

- Route requests through US mobile proxies for local ZIP-targeted views

- Detect layout shifts or variant mappings using field heuristics

- Persist session cookies so prices stay consistent across a multi-page flow

This goes far beyond “get HTML from this URL.” It’s about replicating a real user journey—at scale and on schedule.

Some of these changes are only visible through mobile browsers or ZIP-specific targeting. If you want a deeper dive into how regional pricing patterns show up, check out our guide on Amazon market trends.

Amazon’s bot detection systems track much more than IPs. They analyze session timing, scroll behavior, viewport resolution, and HTTP headers. A key part of this is the User-Agent string and browser fingerprint. Learn more about how User-Agent behavior affects scraping outcomes.

Real Use Cases: How Brands, Retailers, and Analysts Scrape Amazon Prices

Scraping Amazon isn’t just for monitoring your own listings. Here’s how different teams use Amazon price data in the real world:

Brands — MAP Monitoring & Buy Box Defense

If your brand’s product is listed by multiple sellers, you need to know who’s winning the Buy Box, undercutting MAP (Minimum Advertised Price), or bundling your product for unauthorized discounts. A scraping service helps brands:

- Detect MAP violations daily

- Track unauthorized third-party sellers

- Monitor Buy Box ownership shifts

- Export data into pricing enforcement dashboards
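A daily MAP check can be as simple as comparing each scraped offer against the brand's MAP list and an allowlist of authorized sellers. The sketch below assumes offers have already been extracted; the seller names, ASIN, and prices in any usage are made up for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Set, Tuple

Alert = Tuple[str, str, str, Optional[float]]  # (asin, seller, reason, gap below MAP)


@dataclass
class Offer:
    asin: str
    seller: str
    price: float


def find_violations(offers: Iterable[Offer], map_prices: Dict[str, float], authorized: Set[str]) -> List[Alert]:
    """Flag offers priced below MAP and offers from sellers not on the allowlist."""
    alerts: List[Alert] = []
    for offer in offers:
        map_price = map_prices.get(offer.asin)
        if map_price is not None and offer.price < map_price:
            alerts.append((offer.asin, offer.seller, "below_map", round(map_price - offer.price, 2)))
        if offer.seller not in authorized:
            alerts.append((offer.asin, offer.seller, "unauthorized_seller", None))
    return alerts
```

Each alert tuple maps directly onto a row in an enforcement dashboard.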

Retailers — Competitive Pricing Intelligence

Multi-channel sellers track competitor pricing on Amazon to adjust their own pricing dynamically. But scraping Amazon manually doesn’t scale. Scraping services help retailers:

- Benchmark against Amazon first-party and third-party sellers

- Monitor seasonal pricing changes

- Analyze shipping and fulfillment pricing (Fulfillment by Amazon vs Fulfillment by Merchant, or FBA vs FBM)

- Track regional price variations by ZIP or device

From MAP enforcement to real-time dynamic pricing, scraping Amazon powers smarter pricing intelligence for brands and multichannel sellers alike.

Analysts — Market & Trend Research

Researchers and analysts use Amazon data to understand:

- Pricing volatility across product categories

- Popular product variants and price elasticity

- Launch pricing strategies by competitors

- Inventory and restock cycles

And they need this data clean, deduped, and ready to plug into models—not buried in raw HTML with 20% field loss.

For retailers in particular, Amazon scraping is a core piece of broader eCommerce data solutions used to benchmark against Amazon’s 1P and 3P sellers in real time.

Why Scale Matters: Beyond One-Time Crawls

You don’t just scrape Amazon once. If you’re serious about pricing intelligence, you need a repeatable, scalable, and adaptive pipeline. Here’s why:

| Challenge | Why It Fails at Scale |
| --- | --- |
| Manual scheduling | Missed intervals → stale pricing |
| Static proxies | Bans rise after a few hundred requests |
| Missing deduplication logic | Inflates dataset with duplicate ASINs |
| No field validation | Data becomes silently unusable |
| No feedback loop | Blocked pages aren’t retried or repaired |

Most price scraping errors don’t show up as failed crawls—they show up as bad data. Broken selectors still return HTTP 200, but you’re missing prices, reviews, or listings. Here’s a breakdown of the most common scraping mistakes and how to avoid them.
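Because a broken selector still returns HTTP 200, the check has to happen at the field level. A minimal validator, assuming parsed rows arrive as dictionaries with `asin`, `price`, and `seller` keys (an illustrative schema), might look like this:

```python
import re
from decimal import Decimal, InvalidOperation
from typing import Dict, List, Optional

REQUIRED_FIELDS = ("asin", "price", "seller")
ASIN_PATTERN = re.compile(r"^[A-Z0-9]{10}$")  # ASINs are 10-character alphanumeric IDs


def validate(record: Dict[str, Optional[str]]) -> List[str]:
    """Return a list of problems; an empty list means the row passed."""
    problems: List[str] = []
    for name in REQUIRED_FIELDS:
        if not record.get(name):
            problems.append(f"missing:{name}")

    asin = record.get("asin")
    if asin and not ASIN_PATTERN.match(asin):
        problems.append("bad:asin")

    raw_price = record.get("price")
    if raw_price:
        try:
            Decimal(raw_price.replace("$", "").replace(",", "").strip())
        except InvalidOperation:
            # e.g. "See price in cart" captured where a number was expected
            problems.append("bad:price_unparseable")
    return problems
```

Rows with a non-empty problem list can be routed back for a retry instead of landing in the dataset.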

When you’re tracking tens of thousands—or millions—of SKUs, scale isn’t just about crawling faster. It’s about:

- Ensuring freshness (price changed 3 hours ago—do you have it?)

- Tracking change deltas (is this discount new or just a new seller?)

- Feeding live dashboards (not just static exports)

- Controlling cost per SKU (optimized routing, minimal retries)
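To answer "is this discount new or just a new seller?", it helps to diff consecutive snapshots at the offer level instead of comparing a single price per ASIN. A sketch, assuming each snapshot is a mapping from `(asin, seller)` to price:

```python
from typing import Dict, List, Optional, Tuple

Key = Tuple[str, str]  # (asin, seller)
Event = Tuple[str, str, str, Optional[float], Optional[float]]  # (kind, asin, seller, old, new)


def diff_snapshots(prev: Dict[Key, float], curr: Dict[Key, float]) -> List[Event]:
    """Classify offer-level changes between two scrape runs."""
    events: List[Event] = []
    for (asin, seller), price in curr.items():
        if (asin, seller) not in prev:
            events.append(("new_offer", asin, seller, None, price))  # a new seller, not a discount
        elif prev[(asin, seller)] != price:
            events.append(("price_change", asin, seller, prev[(asin, seller)], price))
    for asin, seller in prev.keys() - curr.keys():
        events.append(("offer_gone", asin, seller, prev[(asin, seller)], None))
    # Sort by kind, then ASIN and seller, for stable output.
    return sorted(events, key=lambda e: (e[0], e[1], e[2]))
```

Emitting only these events, rather than full snapshots, is also what makes delta-based feeds to live dashboards practical.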

A scraping service provider gives you this infrastructure without needing your engineers to babysit scripts 24/7.

The Real KPI Isn’t “Requests per Minute.” It’s Cost per Valid Price

Most scraping tutorials talk about how fast your crawler runs. But in production, the question is: what’s your cost per valid price record?

A valid price isn’t just any number you extract—it’s a verified, contextually accurate value that’s tied to the right ASIN, offer condition, and time. Measuring this metric means looking beyond HTTP status codes and tracking:

- Field-level success rates (price + coupon + seller present)

- Retry and CAPTCHA-solving costs (proxy GB, headless browser runtime)

- QA overhead from manual reviews or selector updates
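The metric itself is simple arithmetic: total spend divided by the number of records that passed validation, not the number of requests sent. The sketch below only shows the calculation; the unit costs are inputs you would take from your own proxy and infrastructure contracts.

```python
from dataclasses import dataclass


@dataclass
class RunCosts:
    proxy_gb: float
    usd_per_gb: float
    headless_hours: float
    usd_per_headless_hour: float
    captcha_solves: int
    usd_per_solve: float
    qa_hours: float
    usd_per_qa_hour: float

    def total(self) -> float:
        return (
            self.proxy_gb * self.usd_per_gb
            + self.headless_hours * self.usd_per_headless_hour
            + self.captcha_solves * self.usd_per_solve
            + self.qa_hours * self.usd_per_qa_hour
        )


def cost_per_valid_price(costs: RunCosts, valid_records: int) -> float:
    """Spend per record that passed field-level validation (not per request)."""
    if valid_records <= 0:
        raise ValueError("no valid records: cost per valid price is undefined")
    return costs.total() / valid_records
```

With purely hypothetical inputs of 50 GB at $8/GB, 100 headless hours at $0.50, 1,000 CAPTCHA solves at $0.002, and 5 QA hours at $60, a run costs $752. If 94,000 of 120,000 requests yield valid prices, that is $0.008 per valid price versus roughly $0.0063 per request, which is why per-request figures flatter a pipeline.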

A web scraping service like PromptCloud tracks and minimizes this cost across millions of requests, ensuring not just speed, but economic reliability.

Buy Box “Truth Testing”: A Method to Trust (or Reject) a Price

Scraped prices are often misleading. Amazon can show one price in the Buy Box, another in the Offers section, and yet another after you add the product to your cart.

To extract trustworthy prices, you need Buy Box truth testing:

- Extract the primary Buy Box value

- Cross-check it against Offers listings

- Parse and normalize discounts, coupons, and promotions

- Re-render or retry if the values conflict

- Log the validation trail
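The five steps can be sketched as one function that returns a verdict plus its validation trail; a "retry" verdict stands in for step four and the trail for step five. This assumes the Buy Box price, the Offers-listing prices, and a flat coupon amount have already been extracted and normalized to `Decimal`; percentage coupons and cart-only prices would need extra handling.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import List, Optional


@dataclass
class PageCapture:
    buybox_price: Optional[Decimal]
    offer_prices: List[Decimal]
    coupon_amount: Decimal = Decimal("0")  # flat coupon only (simplifying assumption)


@dataclass
class Verdict:
    status: str  # "accept", "retry", or "reject"
    effective_price: Optional[Decimal]
    trail: List[str] = field(default_factory=list)


def truth_test(page: PageCapture, tolerance: Decimal = Decimal("0.01")) -> Verdict:
    trail: List[str] = []
    # Step 1: the primary Buy Box value must exist.
    if page.buybox_price is None:
        trail.append("no buy box price extracted")
        return Verdict("retry", None, trail)
    trail.append(f"buy box price {page.buybox_price}")
    # Step 2: cross-check against the Offers listing.
    if not any(abs(p - page.buybox_price) <= tolerance for p in page.offer_prices):
        trail.append("buy box price not found among offers; re-render")
        return Verdict("retry", None, trail)
    trail.append("buy box matches an offer")
    # Step 3: normalize the coupon into an effective price.
    effective = page.buybox_price - page.coupon_amount
    trail.append(f"effective price after coupon {effective}")
    if effective <= 0:
        trail.append("non-positive effective price")
        return Verdict("reject", None, trail)
    return Verdict("accept", effective, trail)
```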

Most basic scrapers stop at step one. A service provider can build this logic into your flow—automatically, reliably, and at scale.

PA‑API vs Scraping: When to Reconcile, When to Override

Amazon’s Product Advertising API (PA‑API) is clean and structured—but it doesn’t show everything. Scraping fills the gaps, but introduces uncertainty. The best approach is reconciliation:

- Use PA‑API for stable fields like ASIN, title, brand

- Use scraping for dynamic data like prices, stock, and Buy Box owner

- Cross-match both sources using session state and location context

- If they disagree, escalate to a full session replay and prioritize the user-facing view
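A minimal reconciliation step can be sketched as follows, assuming both sources have already been flattened into plain dictionaries (the field names are illustrative, not the actual PA‑API response schema). It takes stable fields from the API and dynamic fields from the scrape, and flags disagreements for a session replay:

```python
from typing import Any, Dict, List

STABLE_FIELDS = ("asin", "title", "brand")  # trusted from the API
DYNAMIC_FIELDS = ("price", "in_stock", "buybox_seller")  # trusted from the user-facing scrape


def reconcile(api: Dict[str, Any], scraped: Dict[str, Any], price_tolerance: float = 0.01) -> Dict[str, Any]:
    merged: Dict[str, Any] = {name: api.get(name) for name in STABLE_FIELDS}
    merged.update({name: scraped.get(name) for name in DYNAMIC_FIELDS})

    conflicts: List[str] = []
    if api.get("asin") != scraped.get("asin"):
        conflicts.append("asin")  # the two records may not describe the same product
    api_price, scraped_price = api.get("price"), scraped.get("price")
    if api_price is not None and scraped_price is not None and abs(api_price - scraped_price) > price_tolerance:
        conflicts.append("price")

    merged["conflicts"] = conflicts
    # On any disagreement, escalate to a full session replay; the scraped,
    # user-facing price stays in `merged` as the provisional value.
    merged["needs_session_replay"] = bool(conflicts)
    return merged
```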

This ensures you only act on prices that reflect what your customers actually see.

Geo‑Mobile Parity Tests: Are You Seeing the Same Web as Your Shoppers?

Amazon changes prices based on where you are, what device you’re using, and even what time it is. Scraping from a static IP with a generic user-agent won’t cut it. You need geo-mobile parity testing:

- Route requests through real US mobile proxies

- Set delivery ZIP codes for specific product regions

- Match mobile browser headers (viewport, User-Agent)

- Compare scraped results against real browser screenshots
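Once reference prices have been captured from real browsers (for example, by reading them off screenshots), the comparison step is straightforward. A sketch, assuming both sides are keyed by a hypothetical `(asin, zip_code, device)` tuple:

```python
from typing import Dict, List, Tuple, TypedDict

Key = Tuple[str, str, str]  # (asin, zip_code, device)


class ParityReport(TypedDict):
    checked: int
    mismatch_rate: float
    mismatches: List[Key]
    missing: List[Key]


def parity_report(scraped: Dict[Key, float], reference: Dict[Key, float], tolerance: float = 0.01) -> ParityReport:
    """Compare scraped prices with prices a real shopper saw in the same context."""
    shared = scraped.keys() & reference.keys()
    mismatches = sorted(k for k in shared if abs(scraped[k] - reference[k]) > tolerance)
    missing = sorted(reference.keys() - scraped.keys())  # contexts the scraper never covered
    checked = len(shared)
    return {
        "checked": checked,
        "mismatch_rate": len(mismatches) / checked if checked else 0.0,
        "mismatches": mismatches,
        "missing": missing,
    }
```

A desktop context that returns the mobile price, or a ZIP the scraper never reached, shows up directly in `mismatches` or `missing`.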

PromptCloud does this out of the box—ensuring your data mirrors the real buyer experience.

Proxy Pool Health Decay: Measure It or Pay for It

Most scraping failures aren’t from the scraper—they’re from the proxies.

Proxy pools decay. SIM cards get flagged. Subnets get throttled. If you’re not scoring pool health, you’re flying blind. You need to track:

- Block rates (403s, CAPTCHAs, soft blocks)

- Latency and jitter

- Field coverage success per proxy

- Decay curves across ASNs (autonomous system numbers, i.e. the networks your proxy IPs belong to)
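One way to turn those signals into a single number is a weighted health score per proxy. The weights and latency budget below are arbitrary assumptions for illustration; in practice they would be tuned against your own field-coverage data.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev
from typing import List


@dataclass
class ProxyStats:
    proxy_id: str
    requests: int
    blocks: int  # 403s, CAPTCHAs, and soft blocks combined
    complete_records: int  # responses where every required field parsed
    latencies_ms: List[float] = field(default_factory=list)


def health_score(s: ProxyStats, latency_budget_ms: float = 2000.0) -> float:
    """Score in [0, 1]; higher is healthier."""
    if s.requests == 0:
        return 0.0
    block_rate = s.blocks / s.requests
    coverage = s.complete_records / s.requests
    avg = mean(s.latencies_ms) if s.latencies_ms else latency_budget_ms
    jitter = pstdev(s.latencies_ms) if len(s.latencies_ms) > 1 else 0.0
    latency_penalty = min(1.0, (avg + jitter) / latency_budget_ms)
    return 0.5 * (1 - block_rate) + 0.3 * coverage + 0.2 * (1 - latency_penalty)


def rank_pool(pool: List[ProxyStats]) -> List[str]:
    """Healthiest first; the tail is the candidate list for de-prioritizing."""
    return [s.proxy_id for s in sorted(pool, key=health_score, reverse=True)]
```

Computing the same score per ASN over successive time windows gives the kind of decay curve described above.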

A service provider can rotate, refresh, and de-prioritize bad proxies automatically—without you touching a line of code.

Session-Aware Flows: When Price Only Appears After Interaction

Sometimes the price only shows up after:

- Setting a ZIP code

- Choosing a variant

- Adding the product to cart

Scrapers that treat every page the same will miss these cases. PromptCloud’s session-aware scraping logic simulates user flows so nothing slips through. It reuses session cookies, sets delivery preferences, and triggers dynamic price rendering the same way a real user would.

Freshness SLOs: How Fast Do You See Price Changes?

A lot can happen in 12 hours. On Amazon, prices can change every few minutes.

To compete, you need a freshness SLO—a promise like “95% of SKUs updated within 2 hours of change.” PromptCloud achieves this by:

- Using lightweight detectors for sentinel SKUs

- Escalating high-change categories automatically

- Prioritizing delta-first delivery (new vs unchanged prices)
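Measuring a freshness SLO means comparing when a price changed with when your pipeline first saw it. Since the true change time is rarely observable, this sketch assumes it has been approximated (for example, from a high-frequency sentinel crawl) and reports what share of changes were captured within the target window:

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Optional, Tuple

# (changed_at, first_seen_at); first_seen_at is None if the pipeline never saw the change.
Change = Tuple[datetime, Optional[datetime]]


def freshness_attainment(changes: Iterable[Change], window: timedelta = timedelta(hours=2)) -> float:
    """Fraction of price changes the pipeline detected within `window`."""
    items: List[Change] = list(changes)
    if not items:
        return 1.0  # no changes means nothing was missed
    on_time = sum(
        1 for changed_at, seen_at in items
        if seen_at is not None and seen_at - changed_at <= window
    )
    return on_time / len(items)
```

A "95% of SKUs updated within 2 hours" target then becomes the check `freshness_attainment(log) >= 0.95`.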

You don’t just get a daily dump—you get a competitive edge.

Data Integrity Model: From Parse to Price You Can Present

Price data is only useful if it’s trustworthy. PromptCloud attaches integrity metadata to every row:

- Was the price parsed from the primary anchor or a secondary one?

- Were promotions present?

- Was the session mobile, desktop, or mixed?

- Did reconciliation succeed?
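In code, that metadata can simply travel with each row. Below is a sketch with hypothetical field names mirroring the four questions above; the `is_presentable` rule is one possible policy, not a standard.

```python
import json
from dataclasses import asdict, dataclass
from enum import Enum


class Anchor(Enum):
    PRIMARY = "primary"
    SECONDARY = "secondary"


class Session(Enum):
    MOBILE = "mobile"
    DESKTOP = "desktop"
    MIXED = "mixed"


@dataclass
class PriceRow:
    asin: str
    price: float
    anchor: Anchor  # where on the page the price was parsed from
    promotions_present: bool
    session: Session
    reconciled: bool  # did cross-source reconciliation succeed?

    def is_presentable(self) -> bool:
        """Example policy: only primary-anchor, reconciled prices reach dashboards."""
        return self.anchor is Anchor.PRIMARY and self.reconciled

    def to_json(self) -> str:
        row = asdict(self)
        row["anchor"] = self.anchor.value  # serialize enums as their string values
        row["session"] = self.session.value
        return json.dumps(row)
```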

Your analysts get data they can actually use, and your dashboards won’t crash from missing fields.

Compliance and Auditability: No Gray Areas

Scraping public data doesn’t mean ignoring compliance. PromptCloud builds compliance into the workflow:

- Robots.txt aware by default

- No personal data scraping allowed

- Event logging for every request and response

- IP sourcing transparency (especially for mobile proxies)

- Opt-out logic for sensitive endpoints
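Python's standard library already includes a robots.txt parser, so a robots.txt-aware check can run before any URL is queued. The rules string below is a made-up example, not Amazon's actual robots.txt; in production you would fetch and cache the live file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /gp/cart
Allow: /
"""


def build_checker(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Gate every URL through robots.txt before it enters the crawl queue."""
    return parser.can_fetch(user_agent, url)
```

Note that `RobotFileParser` applies rules in file order (first match wins), which can differ from the most-specific-match rule in RFC 9309, so rule order in the file matters.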

If you’re in a regulated industry—or just want to stay out of trouble—this matters.

Cost Control Without Losing Accuracy: A Smarter Routing Policy

Throwing mobile proxies at every page is expensive. Skipping retries is risky. You need routing logic that adjusts per target. PromptCloud uses a multi-tier fallback system:

- Start with fast datacenter proxies

- Detect patterns (403s, latency, layout gaps)

- Escalate to residential or mobile only if needed

- Score proxies by decay and success rates

- Load-balance across carriers, not just IPs
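A generic version of such a policy (a sketch, not PromptCloud's actual system) is a small state machine. Each target starts at the cheapest tier that last worked and escalates one tier at a time on block, latency, or layout-gap signals, never going past mobile. Tier names, thresholds, and the per-target memory are assumptions for illustration.

```python
from typing import Dict, Optional

TIERS = ("datacenter", "residential", "mobile")  # cheapest to most expensive
BLOCK_STATUSES = {403, 429, 503}


def should_escalate(status: Optional[int], latency_ms: float, missing_fields: int, latency_limit_ms: float = 5000.0) -> bool:
    """Escalate on blocks/timeouts, slow responses, or layout gaps (missing fields)."""
    return status is None or status in BLOCK_STATUSES or latency_ms > latency_limit_ms or missing_fields > 0


class RoutingPolicy:
    def __init__(self) -> None:
        self._start: Dict[str, int] = {}  # target -> index of the tier that last succeeded

    def start_tier(self, target: str) -> str:
        return TIERS[self._start.get(target, 0)]

    def next_tier(self, tier: str) -> str:
        i = TIERS.index(tier)
        return TIERS[min(i + 1, len(TIERS) - 1)]

    def record_success(self, target: str, tier: str) -> None:
        self._start[target] = TIERS.index(tier)

    def reset(self, target: str) -> None:
        """Periodically drop back to datacenter so targets don't stay on costly tiers."""
        self._start.pop(target, None)
```

Remembering the last successful tier avoids paying for failed datacenter attempts on targets known to need residential IPs, while `reset` keeps that memory from locking in higher costs forever.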

It’s scraping with discipline—and it saves you money.

Engineering Patterns That Survive Amazon Changes

Amazon pushes layout updates weekly. The only way to survive is to code defensively.

PromptCloud uses:

- Redundant selectors (with voting logic)

- Heuristics (e.g., reject $0 unless it’s a promo)

- Canary SKUs to detect field drift early

- Feature flags to adjust scraping logic mid-run
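Redundant selectors and the $0 heuristic combine naturally. Each selector proposes a raw price string, the values are parsed, zeros are discarded unless a promotion is known to be active, and a value is accepted only if enough selectors agree. The selectors themselves are out of scope here; this sketch assumes their raw text outputs, and the quorum of two is an illustrative default.

```python
from collections import Counter
from decimal import Decimal, InvalidOperation
from typing import List, Optional


def parse_price(text: str) -> Optional[Decimal]:
    try:
        value = Decimal(text.replace("$", "").replace(",", "").strip())
    except InvalidOperation:
        return None
    return value if value.is_finite() else None  # reject "NaN"/"Infinity"


def vote_price(candidates: List[Optional[str]], is_promo: bool = False, min_votes: int = 2) -> Optional[Decimal]:
    """Return the price most selectors agree on, or None if there is no quorum."""
    parsed = [p for p in (parse_price(c) for c in candidates if c) if p is not None]
    if not is_promo:
        parsed = [p for p in parsed if p > 0]  # heuristic: $0 is a parse error unless it's a promo
    if not parsed:
        return None
    value, votes = Counter(parsed).most_common(1)[0]
    return value if votes >= min_votes else None
```

A `None` result is a signal to flag the field as drifting (or check it against canary SKUs) rather than store a guess.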

When something breaks, it auto-recovers. You don’t even need to open a ticket.

Analytics Playbook: From Raw Prices to Decisions

What do you do once you have Amazon price data? Here’s what clients build on top of PromptCloud feeds:

- Price elasticity analysis (track price drops vs sales velocity)

- MAP enforcement queues (auto-alert on violations)

- Promotion fingerprinting (strike-throughs, coupons, CTAs)

- Buy Box win/loss predictors (based on pricing gap and shipping options)

This is what scraping is really for—not the HTML, but the intelligence behind it.

The Build‑or‑Buy Table: DIY, PA‑API, Scraping API, or Managed Service

| Criterion | DIY Scripts | PA‑API | Scraping API Vendor | Managed Service (PromptCloud) |
| --- | --- | --- | --- | --- |
| Price fidelity (buy‑box, coupons) | Variable | Limited | Good | Highest (truth‑testing + flows) |
| Geo/mobile parity | Manual heavy lift | Limited | Partial | Native routing + parity tests |
| Scale & freshness SLOs | Hard | Quota bound | Good | SLO‑backed orchestration |
| Proxy health & decay mgmt | Manual | N/A | Vendor‑managed | Vendor + telemetry scoring |
| QA & field completeness | Manual | High for API fields | Varies | Automated field‑level QA |
| Compliance/audit trails | Manual | Strong | Varies | Built‑in evidence logs |
| Total cost per valid price | Uncertain | Predictable but limited | Medium | Lowest at scale |

Trends for 2025 in Amazon Price Scraping

2025 is bringing new dynamics to Amazon price scraping. If you want to stay competitive, here’s what to watch and adapt to:

- Increased Bot/Behavioral Detection Sophistication: Amazon is investing heavily in fingerprinting based on behavioral signals (scrolling, mouse movement), device characteristics, and cookie histories. Simple user‑agent rotation won’t cut it. Scrapers need to simulate real user behavior more closely and keep fingerprints consistent.

- Mobile & App‑Only Pricing Gaps: Discounts, promos, and Buy Box advantages increasingly show up only in app or mobile‑specific views. In 2025, scraping only desktop HTML will miss a chunk of dynamic pricing data. Scraping providers are building mobile‑app emulation or Android/iOS traffic models to capture those offers.

- Geo‑Based Pricing Discrimination Becomes Finer: Amazon is slicing pricing not just by country but by state, by ZIP code, and sometimes at an even finer neighborhood level. With more same‑city courier options and local delivery, your scraper must use local delivery ZIPs and reflect local warehouse pricing. The trend is toward more localized price variance.

- Real‑Time or Near‑Real‑Time Pricing Demands: Velocity matters. Flash deals, lightning deals, and Prime Day promos can last only minutes. Buyers of price data want live or near‑live updates, and static snapshots or daily batches increasingly fall short. Systems that push deltas quickly will win.

- Stricter Compliance & Transparency Requirements: With increased scrutiny of data privacy, Amazon scraping is under a closer regulatory gaze. Scrapers need crystal‑clear logs of IP sources and scraper behavior, no PII leaks, and compliance with robots.txt. Transparency matters not just to risk teams but also to customers who demand ethically sourced data.

- AI & ML Assisted Price Pattern Identification: Rather than just grabbing numbers, teams are using ML models to surface patterns: which sellers are most likely to undercut, which SKUs show frequent price volatility, and which discount patterns follow inventory surges. Scraping providers will increasingly embed AI to flag anomalies or profit opportunities.

- Composable Data Pipelines: Delivery is moving away from monolithic dumps toward APIs, real‑time streaming, and event‑based notifications. Clients want to subscribe to price‑drop events, Buy Box loss events, or MAP violation alerts instead of pulling large CSV files daily. Expect 2025 to bring more push architectures.


FAQs

1. Is it legal to scrape Amazon prices for competitive analysis?

Generally, yes: scraping publicly available Amazon pricing data is widely considered legal when done responsibly. To stay compliant, avoid collecting personally identifiable information (PII), respect robots.txt guidelines, and use ethically sourced proxies. PromptCloud follows a compliance-by-design approach with full audit trails.

2. How does a scraping service provider reduce Amazon bans and CAPTCHAs?

A managed scraping provider uses layered proxy infrastructure, session management, and fingerprint stability to avoid triggering Amazon’s anti-bot systems. They detect block patterns (302s, soft blocks, timeouts) and automatically escalate proxy types—reducing retries and keeping ban rates low.

3. Why is mobile and geo-targeted pricing so important on Amazon in 2025?

In 2025, Amazon’s pricing is increasingly customized based on delivery ZIP codes, user devices (mobile vs desktop), and even session state. Some deals only appear on mobile or in specific regions. To get accurate price intelligence, your scraping system must simulate local mobile users—not just generic bots.

4. What makes web scraping Amazon at scale so difficult without a service?

DIY scripts often break due to layout changes, session requirements, and evolving anti-scraping defenses. Scaling also introduces proxy decay, silent data loss, and high retry overhead. A scraping service handles this complexity with monitoring, retry logic, field validation, and infrastructure—so your team doesn’t have to.

5. What should I look for in an Amazon scraping service provider?

Look for providers who offer:

- Proxy rotation by device and ZIP code

- Field-level QA with monitoring and alerts

- Buy Box truth validation

- Session-aware scraping flows

- Compliance documentation and audit logs

PromptCloud offers all of the above—plus structured delivery in JSON, CSV, or API format.