**TL;DR** If all you need is to scrape a few pages once or twice, those plug-and-play scraper tools are usually fine. They get the job done… until they don’t.

But after a while, things break. Maybe the site changes. Maybe the tool just starts acting weird. Suddenly, what was supposed to save time turns into something you must babysit. And if the site has anti-bot stuff or odd layouts? Yeah, good luck.

That’s where a custom web scraping service comes in. Instead of handing you a tool, the provider builds the whole thing for you, maintains it, adapts it when websites change, and delivers clean, structured data. You don’t touch the backend. You just get what you asked for.

If you’ve hit the ceiling with your current tool, you’ll know. This is the next step.

Web Scraping in the Modern Data Stack

By now, most people working with data, especially in product, research, or marketing roles, have at least dabbled in web scraping. Maybe you’ve used a free scraper tool to collect product prices. Maybe your team pulled data from a few job boards. It’s common. The thing is, web scraping seems simple, until it isn’t.

Websites aren’t built to make your life easy. Some of them change layouts every few weeks. Others block traffic that looks automated. And even when the scraping part works, you’re often left cleaning up messy data or wondering why half the entries are suddenly missing.

That’s where the debate comes in: do you keep tweaking your current DIY scraper, or is it time to bring in a web scraping service provider?

This article isn’t here to explain what a scraper tool is; you probably already know that. Instead, we’re going to look at what those tools can do well, where they tend to fall short, and why a custom web scraping service might be the better choice if you’re looking to scale or rely on data for anything serious.

We’ll also look at real-world examples, trade-offs, and what changes when you shift from a one-size-fits-all scraper to a tailored setup managed by professionals.

What Are Scraper Tools and What Do They Offer?

If you’ve ever tried to pull some data off a website (maybe prices, maybe a list of products), you’ve probably come across one of those plug-and-play scraping tools. Names like ParseHub, Octoparse, and WebHarvy pop up a lot. They’re often described as scraper tools or DIY scrapers, and they’re basically built for people who don’t want to code.

You paste in a link, click on a few things you want to extract, hit run, and just like that, you’ve got a spreadsheet. At least when things go smoothly.

These tools can be helpful, especially when you’re starting out or don’t need anything fancy. But it’s worth knowing what they’re really offering and where they start to hit limits.

What Do Scraper Tools Offer?

1. Point-and-Click Interfaces

Most of these tools are made so you don’t have to write code. You open a site in their built-in browser, click on the things you want, like the product name or price, and the tool figures out the pattern. For people who aren’t developers, this makes scraping feel accessible.

2. Works Fine on Simple Sites

If the site doesn’t have login walls, endless scroll, or aggressive anti-bot protection, these tools usually do okay. Think of straightforward listing pages, like a local directory or a basic online catalog.

3. Can Be Scheduled (Sort Of)

A few scraper tools let you set things up to run daily or weekly. It’s not perfect, and scheduled runs sometimes break silently if the page changes, but it’s there. If you’re just checking on a price once a day or pulling a blog feed, this might be enough.

4. Exporting Data is Easy

Once a scrape is done, you can download the data in formats like CSV or Excel. This part is usually smooth and works out of the box. You don’t need to write scripts to process the output or clean it up, at least for simpler jobs.

5. Low-Cost and Easy to Try

Many scraper tools offer free versions or low-tier pricing. For basic needs or teams just testing the waters, this can be an affordable way to experiment with data collection.

These features make scraper tools a solid starting point. But as soon as things get more complicated—like scraping hundreds of pages, dealing with sites that block you, or trying to get clean, structured data every single day—they tend to crack under the pressure.

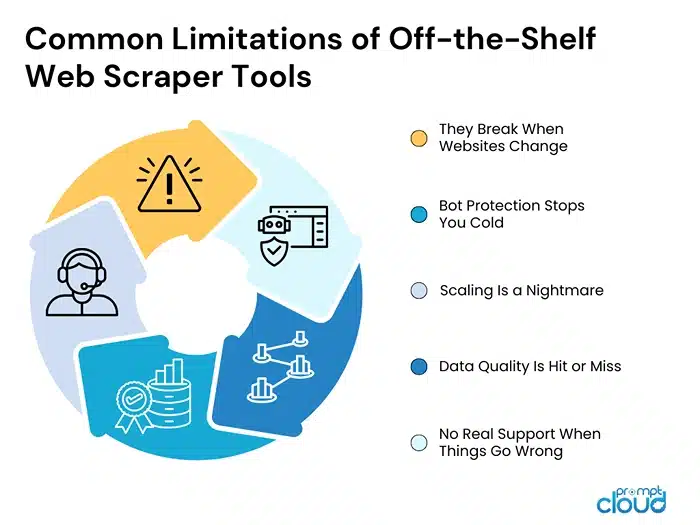

Common Limitations of Off-the-Shelf Web Scraper Tools

Scraper tools are fine, until they’re not. That sounds blunt, but anyone who’s used them at scale eventually runs into this. These tools are built to be general-purpose, which makes them easy to start with but not so great when things get messy. And websites, as you probably know, love to get messy.

Here’s where most of these tools start to hit a wall.

They Break When Websites Change

Most websites aren’t built for scraping. They shift layouts, rename classes, add pop-ups, or change how content loads. Even something minor, like a renamed HTML tag, can stop a scraper tool from working.

And the kicker? Many tools won’t even tell you it broke. You might just get an empty CSV and not realize it until much later.
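Catching a silent failure doesn’t have to be fancy. Here’s a minimal Python sketch of the kind of post-run sanity check a managed pipeline might run, assuming each scraped row is a dictionary and you keep the previous run’s row count. The names `check_run` and `RunReport` and the thresholds are illustrative only, not features of any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class RunReport:
    ok: bool
    problems: list = field(default_factory=list)


def _is_blank(value) -> bool:
    # None, empty strings, and whitespace-only strings all count as "missing".
    return value is None or str(value).strip() == ""


def check_run(rows, previous_count, required_fields,
              min_ratio=0.5, max_blank_share=0.2):
    """Flag a scrape run that probably broke without raising an error."""
    problems = []
    if not rows:
        problems.append("no rows returned")
    elif previous_count and len(rows) < min_ratio * previous_count:
        problems.append(f"row count dropped from {previous_count} to {len(rows)}")
    for name in required_fields:
        blanks = sum(1 for row in rows if _is_blank(row.get(name)))
        if rows and blanks / len(rows) > max_blank_share:
            problems.append(f"{blanks}/{len(rows)} rows missing '{name}'")
    return RunReport(ok=not problems, problems=problems)


report = check_run(
    rows=[{"name": "Widget", "price": ""}, {"name": "Gadget", "price": ""}],
    previous_count=120,
    required_fields=["name", "price"],
)
print(report.problems)
# ['row count dropped from 120 to 2', "2/2 rows missing 'price'"]
```

The point isn’t the exact thresholds; it’s that an empty CSV triggers an alert the same day instead of weeks later.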

Bot Protection Stops You Cold

Try scraping a flight aggregator or a ticketing site using a basic tool, and you’ll see what we mean. Sites like that have layers of anti-bot defenses: IP blocks, CAPTCHAs, rate limits, and content that only appears after JavaScript runs. Most DIY scrapers don’t have the tech to get past these.

You either get blocked outright or fed junk data. And once the site starts returning 403 (Forbidden) errors, there’s not much a point-and-click tool can do about it.
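The tricky part is noticing that you’ve been blocked at all, since decoy pages often come back with a normal 200 status. Below is a rough Python sketch of a response check, assuming you know one element that every genuine page contains. The status set and the text markers are assumptions for illustration; real block pages vary by site and by protection vendor.

```python
# Status codes that bot defenses commonly return instead of real content.
BLOCK_STATUSES = {403, 429, 503}

# Hypothetical text markers; real block and CAPTCHA pages differ from site to site.
BLOCK_MARKERS = ("captcha", "access denied", "unusual traffic")


def looks_blocked(status: int, body: str, expected_marker: str) -> bool:
    """Guess whether a response is a block page or decoy rather than real content."""
    if status in BLOCK_STATUSES:
        return True
    text = body.lower()
    if any(marker in text for marker in BLOCK_MARKERS):
        return True
    # A 200 response that lacks the element we always expect is suspicious too.
    return expected_marker.lower() not in text


print(looks_blocked(200, "<h1>Please complete the CAPTCHA</h1>", 'class="price"'))  # True
```

A check like this only detects the problem; getting past it is where dedicated infrastructure comes in.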

Scaling Is a Nightmare

Scraping 10 pages? No problem. A hundred? Maybe. But what about 50,000? Or daily updates across hundreds of URLs?

That’s where most scraper tools start falling apart. You’ll run into rate limits, performance issues, browser timeouts, and throttling errors. And unless you’re managing your own proxy network (something most tools don’t offer reliably), you’re stuck.
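At scale, pacing requests stops being optional. A common way to do it is a token bucket, sketched below in Python. The class name and the rate and capacity values are assumptions for illustration; the injectable `clock` exists only so the logic can be tested without real waiting.

```python
import time


class TokenBucket:
    """Allow about `rate` requests per second, with short bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        # Refill tokens for the time elapsed since the last call, capped at capacity.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def acquire(self) -> None:
        # Block until a token is available; sleep roughly until the next refill.
        while not self.try_acquire():
            time.sleep((1 - self.tokens) / self.rate)


limiter = TokenBucket(rate=2, capacity=5)
for url in ["https://example.com/p/1", "https://example.com/p/2"]:
    limiter.acquire()
    # fetch(url) would go here
```

In practice you’d keep one bucket per domain so each site gets its own request budget.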

Data Quality Is Hit or Miss

Scraping the data is one thing. Getting it clean, normalized, and usable? Totally different story.

Most of the time, once a scraper tool finishes the job, that’s where the help stops. You might download the file and find some data missing or characters that don’t display right. Sometimes, rows are misaligned or duplicates sneak in. If you’re planning to use that data in anything important, like a dashboard or a machine learning model, you’ll likely need to clean it up. And that’s not always a quick fix. It often means spending time writing scripts just to sort things out.
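For a sense of what those cleanup scripts look like, here’s a small Python sketch that handles three of the problems above: misaligned rows, stray whitespace or look-alike characters, and duplicates. It assumes the export is a CSV with a known column count. Treating duplicates case-insensitively is a choice made for this example, and it won’t repair text that was decoded with the wrong encoding in the first place.

```python
import csv
import io
import unicodedata


def clean_csv(raw: str, expected_columns: int):
    """Split a raw CSV export into (clean_rows, rejected_rows)."""
    clean, rejected, seen = [], [], set()
    for row in csv.reader(io.StringIO(raw)):
        if len(row) != expected_columns:
            rejected.append(row)  # misaligned: wrong number of fields
            continue
        # NFKC folds compatibility characters (e.g. non-breaking spaces) into plain
        # equivalents; split/join collapses runs of whitespace.
        row = [" ".join(unicodedata.normalize("NFKC", cell).split()) for cell in row]
        # Case-insensitive duplicate check; a deliberate choice for this sketch.
        key = tuple(cell.casefold() for cell in row)
        if key in seen:
            continue
        seen.add(key)
        clean.append(row)
    return clean, rejected


raw = "name,price\nWidget\u00a0 Pro,9.99\nwidget pro,9.99\nBroken row\n"
clean, rejected = clean_csv(raw, expected_columns=2)
print(clean)     # [['name', 'price'], ['Widget Pro', '9.99']]
print(rejected)  # [['Broken row']]
```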

No Real Support When Things Go Wrong

Let’s say your scraper stops working after a site update. Who do you call? With most tools, support is limited to help docs, forums, or chatbots. Sometimes you get an email back in a day or two. Other times? Radio silence.

When your team is relying on that data to make decisions or feed a pipeline, waiting around isn’t an option.

All of these issues add up, especially if you’re trying to use the tool regularly or at scale. What started as a small side project becomes something you need to monitor, debug, and maintain constantly. And for many teams, that’s the moment they start asking, “Should we just hand this off to someone who knows what they’re doing?”

Spoiler: that’s where web scraping service providers come in, and we’ll break that down next.

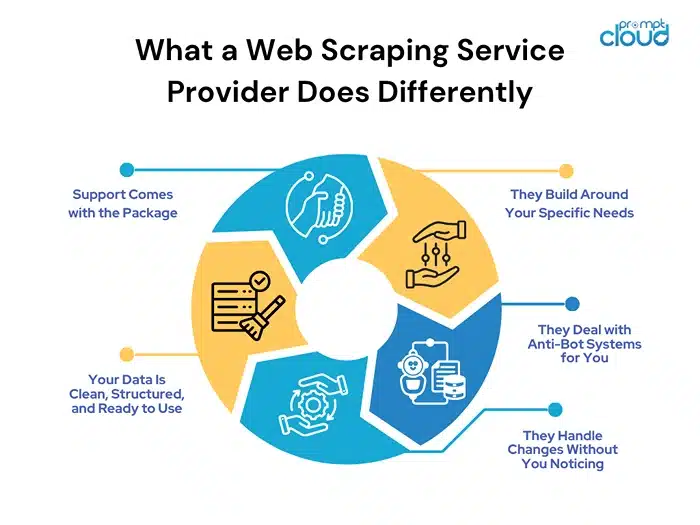

What a Web Scraping Service Provider Does Differently

When a scraper tool hits its limits, most teams try to patch things up themselves. Maybe they change a few selectors, rotate some proxies, or throw a junior dev at the problem. It works until it breaks again. That’s the cycle.

But a web scraping service provider breaks that cycle completely. Instead of giving you a tool and expecting you to make it work, they handle everything: setup, maintenance, scale, delivery, and even troubleshooting when things go sideways.

Here’s what sets them apart.

They Build Around Your Specific Needs

Off-the-shelf tools are one-size-fits-all. A scraping service isn’t. They talk to you, understand your use case, and then design a setup that fits, not the other way around.

Need to scrape data from 20 job portals every day, normalize it, and feed it into your hiring dashboard? They’ll handle that. Want thousands of eCommerce listings pulled with brand-level filtering and category tagging? Not a problem.

Every part of the pipeline—from site handling to delivery format—is tailored to your workflow.

They Deal with Anti-Bot Systems for You

Handling bot protection isn’t just about using proxies. It’s about simulating human behavior, dealing with dynamic content, solving CAPTCHAs, and staying ahead of countermeasures that change constantly.

Scraping providers come equipped with infrastructure and tech stacks that are built to get around these barriers. And the best part? You don’t have to worry about how. It just works, and you get the data.

They Handle Changes Without You Noticing

Websites change. They always do. But with a scraping service, you don’t have to drop everything to fix a broken script.

If the layout shifts, the provider updates the scraper. If new fields appear, they’ll add them to your output. You stay focused on what you do best, and they quietly keep the system running in the background.

Your Data Is Clean, Structured, and Ready to Use

You’re not just getting a dump of raw HTML. A solid provider won’t just grab the data and send it over. They’ll clean it up, check for duplicates, fix weird formatting, and make sure everything’s consistent. The kind of stuff that usually takes forever if you’re doing it yourself.

So instead of spending your time patching spreadsheets or writing cleanup scripts, you can just use the data right away. No extra steps. In a way, it’s less about the scraper itself and more about getting hours back for your team.

Support Comes with the Package

Things will break. Maybe not today, but with scraping, it happens eventually. The difference is, when it does, you’re not on your own. There’s a real team watching in the background. If something looks off, they catch it. And if you spot something first, you’ve got someone to reach out to who knows the system inside out.

You’re not just firing support tickets into the void and hoping. Whether it’s a message, a quick Slack ping, or someone already on the case, the support feels more like an extension of your team than an external vendor.

The difference is simple, really: a scraper tool gives you something to build with. A web scraping service provider gives you the finished product and keeps it working.

If your data needs are growing, or if you’re tired of firefighting broken scripts, this kind of support can make a huge difference.

Real-World Use Cases Where Scraper Tools Fall Short

You don’t always know you’ve outgrown a tool until it starts breaking in weird ways. Maybe it was handling 10 pages fine last month, and now it’s choking on 100. Or maybe the data just doesn’t look right anymore, and nobody’s sure why.

Below are a few examples where scraper tools often struggle and where a custom web scraping service makes a clear difference.

1. Tracking Price and Inventory Across Multiple Retailers

Let’s say you’re monitoring prices across 50 different e-commerce sites. Sounds simple, right?

Now imagine those sites:

- Change product layouts every few weeks

- Block requests from known IPs

- Use JavaScript to load prices dynamically

- List the same product under slightly different names or formats

A DIY scraper tool will catch some of that… until it doesn’t. You’ll get empty fields, stale prices, or pages that refuse to load. And fixing each issue manually turns into a full-time job.

Now, with a scraping service, you’re not stuck every time a site does something unexpected. The provider builds the crawler to handle that kind of thing. If a retailer changes how it shows prices or switches up the layout, the system gets updated on their end. A lot of the time, you won’t even know anything changed; it just keeps working.
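The “same product, different name” problem is usually handled with normalization plus fuzzy matching. Here’s a minimal Python sketch using the standard library’s `difflib`. The 0.85 threshold and the rule that tokens containing digits must match exactly are assumptions chosen for illustration; without that rule, “X200” and “X300” would look nearly identical. It also assumes mostly ASCII product names.

```python
import re
from difflib import SequenceMatcher


def normalize_name(name: str) -> str:
    # Lowercase, turn punctuation into spaces, and collapse repeated whitespace.
    name = re.sub(r"[^a-z0-9 ]+", " ", name.lower())
    return " ".join(name.split())


def model_tokens(name: str) -> set:
    # Tokens containing digits (model numbers, sizes) are too important to fuzz.
    return {tok for tok in name.split() if any(ch.isdigit() for ch in tok)}


def same_product(a: str, b: str, threshold: float = 0.85) -> bool:
    na, nb = normalize_name(a), normalize_name(b)
    if model_tokens(na) != model_tokens(nb):
        return False
    return SequenceMatcher(None, na, nb).ratio() >= threshold


print(same_product("Acme Blender X200 - Black", "ACME blender X200 (black)"))  # True
print(same_product("Acme Blender X200", "Acme Blender X300"))  # False
```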

2. Aggregating Job Listings from Dozens of Portals

Job data is messy. Titles vary, fields are often optional, and every job board has its own format. Some require logins. Others use client-side rendering or infinite scroll.

Scraper tools might work on one or two boards, but scaling beyond that is painful. And if your goal is to normalize the data (e.g., map “Software Engineer” and “Full Stack Dev” under one role), that’s way outside what these tools can handle.

A scraping provider doesn’t just collect the data—they also help standardize and clean it, so you’re not left piecing together mismatched job titles from fifteen different sources.
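Standardizing titles often starts with something as plain as a rules table. The Python sketch below maps free-text titles to canonical roles with regular expressions. The role list and patterns are hypothetical; a production mapping would be far larger and usually tuned per client.

```python
import re

# Hypothetical role map; a production version would be far larger and tuned per client.
ROLE_PATTERNS = [
    ("Software Engineer", re.compile(
        r"\b(software (engineer|developer)|full[- ]?stack (dev|developer|engineer)|swe)\b")),
    ("Data Scientist", re.compile(r"\bdata scientists?\b")),
]


def normalize_title(raw: str) -> str:
    """Map a free-text job title onto a canonical role, or 'Other'."""
    title = raw.lower()
    for canonical, pattern in ROLE_PATTERNS:
        if pattern.search(title):
            return canonical
    return "Other"


for raw in ["Software Engineer", "Full Stack Dev", "Sr. Full-Stack Developer (Remote)"]:
    print(raw, "->", normalize_title(raw))
```

Rules like these are easy to audit, which matters when a hiring dashboard depends on the counts.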

3. Monitoring Dynamic Travel or Event Pricing

Sites like airline portals or concert ticket sellers are built to prevent scraping. They block IPs aggressively, load content dynamically with JavaScript, and often require user interaction (like selecting a date or location) before showing any data.

Most off-the-shelf scraper tools can’t handle that kind of complexity. Even if you manage to get through, you’ll likely get flagged or served dummy data after a few requests.

A custom scraping solution simulates human behavior—clicking, scrolling, filling forms—and works with headless browsers, CAPTCHA-solving, and smart retries to make sure you get the real data, not just placeholder junk.
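“Smart retries” usually means exponential backoff with jitter, so a failing request waits longer each time and many workers don’t all retry at the same moment. Here’s a minimal Python sketch. `TransientError` is a hypothetical exception your fetch function would raise for retry-worthy failures; the delays are illustrative defaults.

```python
import random
import time


class TransientError(Exception):
    """Raised by a fetch function for failures worth retrying (timeouts, 429s, 5xx)."""


def with_retries(fetch, max_attempts=4, base_delay=1.0, max_delay=30.0,
                 sleep=time.sleep, rng=random.random):
    """Call fetch(); on TransientError, back off exponentially with full jitter."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error instead of hiding it
            # Full jitter: wait a random time up to min(max_delay, base_delay * 2**attempt).
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng() * cap)


attempts = {"n": 0}


def flaky_fetch():
    # Stand-in for a real request: fails twice, then succeeds.
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TransientError("HTTP 503")
    return "<html>real content</html>"


print(with_retries(flaky_fetch, base_delay=0.1))  # <html>real content</html>
```

Re-raising after the last attempt is deliberate: a retry loop that swallows errors just turns a block into an empty dataset.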

4. Running High-Frequency Data Updates

Sometimes the challenge isn’t about the site—it’s about timing. Let’s say your business relies on getting fresh data every 15 minutes, or your model depends on hourly stock availability.

Scraper tools might offer basic scheduling, but they’re not optimized for high-frequency scraping. You’ll hit rate limits, see skipped runs, or get blacklisted altogether.

A scraping partner builds systems that are fault-tolerant and designed for this kind of cadence. They use proxy rotation, load balancing, and monitoring to keep things running smoothly at whatever interval you need.
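Proxy rotation at its simplest is a round-robin that benches proxies after a failure. The Python sketch below assumes a list of proxy URLs (the `*.example` addresses are placeholders) and a fixed cooldown. The returned URL could then be handed to an HTTP client, for example through the `proxies` argument in `requests`.

```python
import time


class ProxyPool:
    """Round-robin over proxies, skipping any that failed within the last `cooldown` seconds."""

    def __init__(self, proxies, cooldown=60.0, clock=time.monotonic):
        self.proxies = list(proxies)
        self.cooldown = cooldown
        self.clock = clock
        self.failed_at = {}
        self._next = 0

    def get(self):
        now = self.clock()
        for _ in range(len(self.proxies)):
            proxy = self.proxies[self._next]
            self._next = (self._next + 1) % len(self.proxies)
            failed = self.failed_at.get(proxy)
            if failed is None or now - failed >= self.cooldown:
                return proxy
        return None  # every proxy is cooling down; the caller should wait

    def mark_failed(self, proxy):
        self.failed_at[proxy] = self.clock()


pool = ProxyPool(["http://proxy1.example:8080", "http://proxy2.example:8080"])
proxy = pool.get()
# On a block or timeout: pool.mark_failed(proxy)
```

Real setups layer health scoring, geo-targeting, and per-site pools on top, but the core idea is the same.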

5. Feeding Clean Data into Business Systems Automatically

Getting the data is just one part. Most teams need it delivered in a certain format—maybe a JSON API feed, maybe a daily CSV to a specific S3 bucket, or maybe something that plugs into an internal CRM or analytics pipeline.

Most tools stop at the download button. After that, it’s your problem.

Custom data providers don’t just scrape—they deliver. Whether it’s FTP, webhook, cloud sync, or database insertion, they fit into your process instead of making you build a whole system around theirs.
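Under the hood, “delivering” mostly means serializing records in whatever shape the destination expects. Here’s a minimal Python sketch producing JSON Lines and CSV from the same records. The field names are hypothetical, and the upload step itself (S3, FTP, webhook) is left out.

```python
import csv
import io
import json


def to_jsonl(records) -> str:
    """One JSON object per line: easy to append, stream, or load into a warehouse."""
    return "".join(json.dumps(r, ensure_ascii=False, sort_keys=True) + "\n" for r in records)


def to_csv(records, fieldnames) -> str:
    """CSV with a fixed column order; unexpected keys are dropped, missing ones left blank."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames, extrasaction="ignore",
                            lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


records = [{"sku": "A1", "price": 9.99}, {"sku": "B2", "price": 12.5, "debug": "x"}]
print(to_jsonl(records), end="")
print(to_csv(records, fieldnames=["sku", "price"]), end="")
```

Pinning the CSV column order matters more than it looks: downstream imports often break when columns shift.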

If your use case looks anything like the ones above, you’ve probably already felt the friction of pushing a scraper tool beyond its comfort zone. And if you haven’t yet, you probably will—once scale or complexity starts creeping in.

That’s when most teams realize they don’t just need a scraper. They need a partner.

Key Advantages of Choosing a Custom Web Scraping Partner

There comes a point when data becomes business-critical. And when that happens, you don’t want to babysit scrapers, fix selectors every week, or lose sleep over whether the data you pulled is even usable.

That’s the moment when a web scraping service provider becomes more than just a “nice to have.” It turns into an operational advantage.

Here’s why working with a provider makes a difference.

1. You Don’t Have to Maintain Anything

No more fixing broken scripts after a website update. No more watching for stealthy layout changes or errors that silently ruin your data. A service provider takes full ownership of the setup and keeps it working every day.

2. Scaling Is Built In

Whether you’re scraping 10 pages or 10 million, a good provider has the infrastructure to handle it. That means load balancing, proxy management, dynamic throttling, and robust scheduling—stuff you don’t want to manage yourself.

3. The Data You Get Is Ready to Use

It’s clean. It’s structured. It’s delivered how and where you need it—S3 bucket, Google Cloud, FTP, API, you name it. No post-processing, no data patching. Just plug it in.

4. You Get Support from Real Humans

When things go wrong with a tool, you’re often stuck waiting for a reply or digging through forums. With a provider, there’s a team behind the scenes who knows your setup and can fix problems quickly, without the guesswork.

5. It Grows With Your Business

As your data needs evolve, a scraping partner can adjust. More sites? Tighter schedules? Advanced data normalization? No problem. You don’t have to start from scratch.

Scraper Tool vs. Custom Web Scraping Service: Side-by-Side

| Feature/Need | Off-the-Shelf Scraper Tool | Custom Web Scraping Service Provider |
| --- | --- | --- |
| Ease of Setup | Quick for small projects | Initial setup guided by experts |
| Handling Site Changes | Manual updates required | Auto-maintained by the provider |
| Bypassing Anti-Bot Measures | Often blocked or rate-limited | Handled with advanced techniques |
| Scalability | Limited by tool/browser constraints | Can scale to millions of pages |
| Data Quality and Structure | Inconsistent; often needs cleanup | Cleaned, normalized, and QA-tested |
| Delivery Options | Manual download, limited integrations | Custom delivery (API, cloud, database) |
| Ongoing Support | Basic or community-based | Dedicated technical support team |
| Cost Over Time | Low upfront, but high maintenance cost | Scalable pricing, lower ops overhead |
| Adaptability | One-size-fits-all | Fully tailored to your use case |

If your data project is something you run once and forget, tools might be fine. But if your business relies on accurate, fresh data from the web every day—or will soon—it’s worth having someone else in charge of making sure it never breaks.

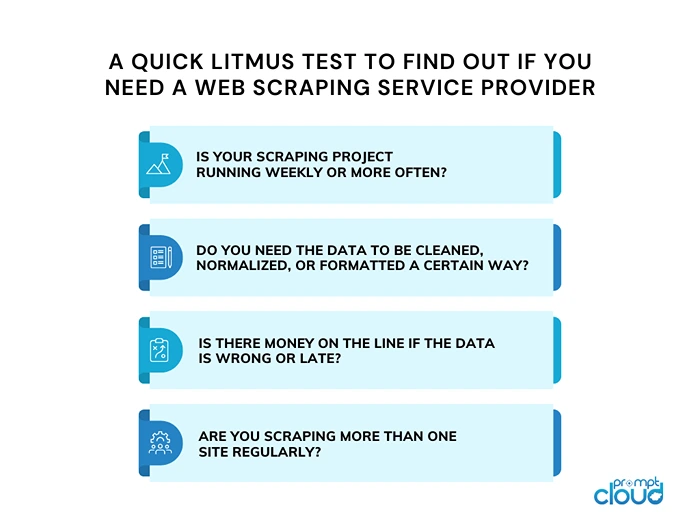

When Should You Choose a Web Scraping Service Provider Over a Scraper Tool?

Most teams don’t start by hiring a scraping service. They try to do it themselves. And honestly, that’s a good way to learn. But at some point, you stop learning and start wasting time fixing the same stuff over and over.

So, how do you know when it’s time to stop relying on tools and bring in a provider?

Here are a few signs.

Your Use Case Isn’t Just a One-Off

If you’re running a one-time experiment, say, scraping 20 pages to test an idea, a DIY scraper tool probably works. But if you need daily, weekly, or even monthly updates? And especially across multiple sites? That’s a different ballgame.

Web scraping at scale isn’t a “set it and forget it” situation. It needs upkeep. It needs monitoring. That’s where a managed service shines.

The Data Is Driving Business Decisions

If someone on your team is using this scraped data to set prices, track competitors, adjust inventory, or inform a model, that data better be right. Scraper tools can break silently. And bad data often looks just like good data, until something downstream blows up.

A scraping provider puts guardrails in place: validation checks, error alerts, backups. You get data you can trust.
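Validation checks are often just rules applied to every record before it ships. Below is a minimal Python sketch for a price feed. The schema (`sku`, `title`, `price`, `currency`) is hypothetical, and the price parsing assumes “.” is the decimal point and “,” is only a thousands separator. Records that fail are kept with their error list so they can feed an alert instead of quietly disappearing.

```python
from decimal import Decimal, InvalidOperation

# Hypothetical schema for a price-monitoring feed.
REQUIRED_FIELDS = ("sku", "title", "price", "currency")


def _is_blank(value) -> bool:
    return value is None or str(value).strip() == ""


def validate_record(rec: dict) -> list:
    """Return a list of problems with one scraped record (empty list = valid)."""
    errors = [f"missing {name}" for name in REQUIRED_FIELDS if _is_blank(rec.get(name))]
    price = rec.get("price")
    if not _is_blank(price):
        try:
            # Strip thousands separators such as "1,299.00" (assumes '.' is the decimal point).
            if Decimal(str(price).replace(",", "")) <= 0:
                errors.append("price must be positive")
        except InvalidOperation:
            errors.append(f"unparseable price {price!r}")
    currency = rec.get("currency")
    if not _is_blank(currency) and len(str(currency).strip()) != 3:
        errors.append("currency should be a 3-letter code")
    return errors


def split_valid(records):
    """Separate records into (good, bad); bad entries keep their error list for alerting."""
    good, bad = [], []
    for rec in records:
        errors = validate_record(rec)
        if errors:
            bad.append((rec, errors))
        else:
            good.append(rec)
    return good, bad
```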

You’re Running Into Site Restrictions or Blocks

Bots are getting blocked more aggressively than ever. A 2024 study by DataDome found that over 40% of web traffic was flagged as “bad bots,” and many scraper tools don’t have the defenses to avoid detection.

If you’re seeing a lot of 403 errors, broken sessions, or CAPTCHAs, you’ve probably already hit the wall. Providers come prepared with rotating proxies, smart delays, headless browsers, and the works.

You Don’t Want to Build an Internal Scraping Team

Some companies try to solve their scraping problems by throwing a dev at it. That works short-term. But soon you’ll need someone to monitor scripts, fix breakages, and build delivery pipelines, and suddenly your engineering team is distracted from their actual job.

With a provider, all of that’s handled. No internal firefighting. No “who owns this script?” questions. Just reliable delivery.

The Tools Just Can’t Keep Up Anymore

If you’re constantly tweaking selectors, restarting failed crawls, cleaning up weirdly formatted data, or redoing exports, you’re spending more time managing the scraper than using the data. That’s a good signal that the tool has reached its limit.

If even two of these signs sound familiar, you’re probably better off with a web scraping service provider. It’s not just about getting the job done; it’s about not having to think about it every week.

When Does It Make Sense to Move Beyond Scraper Tools?

Honestly? You probably already know.

If the scraper tool you’re using works fine and your use case is simple, there’s no need to change. But if things are starting to break, if someone on your team is spending more time fixing scripts than doing anything with the data, it might be time to rethink your setup.

Scraper tools are fine when you’re testing ideas, pulling a few pages, or just exploring. But they weren’t built for production. They weren’t built for scraping at scale, or for sites that push back, or for teams that need clean, structured data on a regular schedule.

A web scraping service provider steps in when that complexity starts to pile up. You stop worrying about how the data gets to you and just start using it.

Not Sure if You’ve Outgrown Your Scraper Tool?

We’ve seen all kinds of setups, some simple, some held together by duct tape. If you’re wondering whether it’s time to upgrade, we can help you figure it out. Let’s talk!

FAQs

1. Can’t I just hire a freelancer to build a scraper instead of using a service provider?

Sure, that’s an option. Some people do that, especially for small jobs. But here’s the thing: when the site changes (and it usually does), that scraper breaks. And unless you’ve got that same freelancer on standby, you’re back to square one. Most folks end up spending more time fixing stuff than actually using the data. It’s kind of like patching a leaky pipe every other week.

2. Are scraper tools completely useless for business use?

No, not at all. They’re pretty useful if what you’re doing is simple, say, grabbing a few pages or testing an idea. The trouble starts when you try to use them for bigger stuff, like pulling data every day or scraping sites with tough layouts. At that point, they just can’t keep up, and you’ll feel it fast.

3. How do scraping service providers deal with sites that block bots?

They’ve got a whole bag of tricks, honestly. Things like switching IPs, faking browser behavior, or mimicking how a real person would click around. Some even handle those annoying CAPTCHAs. It’s not bulletproof, but they’re a lot better equipped than most tools or DIY scripts. You don’t have to deal with any of it—that’s the big win.

4. What kind of data cleanup do providers usually include?

They’ll usually fix up the stuff that makes data a pain to work with—like removing duplicates, filling in blanks, and making sure everything’s lined up right. If you’ve ever pulled a messy spreadsheet from a tool and had to clean it for hours, you’ll appreciate not having to do that anymore. It’s all handled behind the scenes.

5. Isn’t a custom scraping setup expensive?

Depends on what you’re comparing it to. If you’re just looking at the monthly cost, sure, it might seem like more. But think about the hours your team spends fixing broken scrapers or redoing exports. That adds up fast. In the long run, having something that just works often ends up cheaper and way less frustrating.