**TL;DR**

Every scraper breaks eventually. Here are the key takeaways:

Takeaways:

- Smart logging beats guessing – always record request, response, and selector states.

- Anti-bot systems evolve faster than scrapers; rotate proxies, headers, and timing.

- Use validation checks to detect schema drift, null fields, and partial scrapes.

- Managed scraping solutions now include observability dashboards and automated fixes.

The Complete Guide for Detecting Web Scraping Failures

Web scraping doesn’t fail quietly; it fails sneakily. Your jobs are complete. Your logs look fine. Then, someone checks the output and realizes a column has been empty for two days, or that 30% of pages started returning CAPTCHA walls overnight. What worked last week might fail tomorrow with no visible clue.

Let’s learn moe about it now.

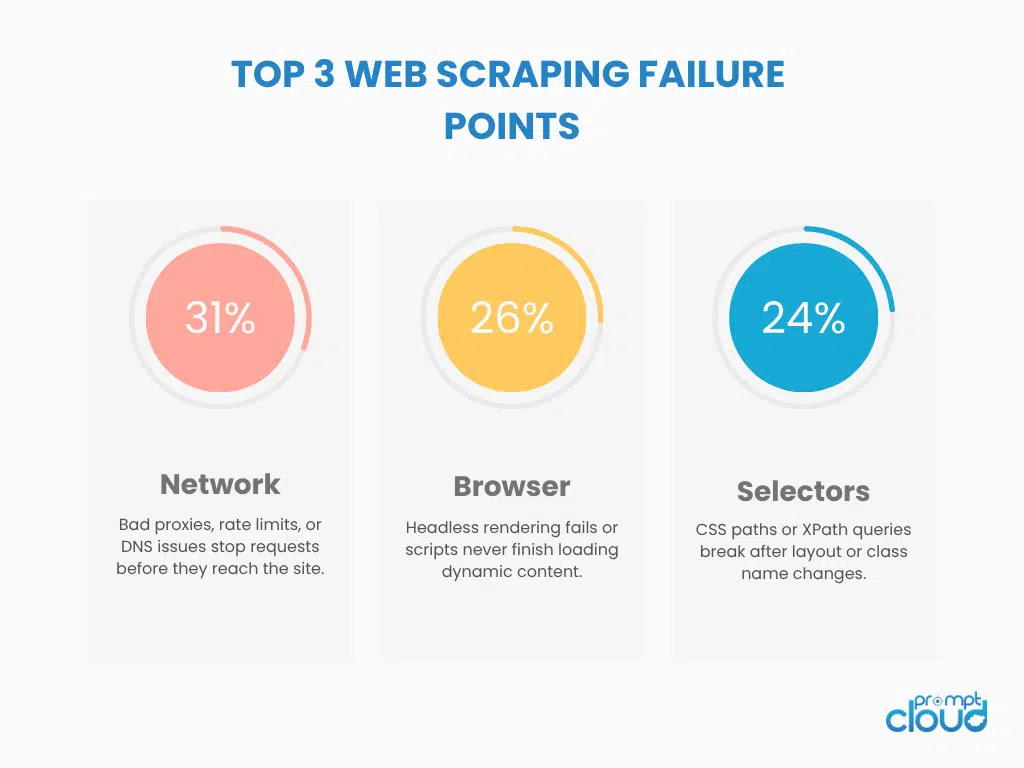

The 5 Core Layers Where Scraping Errors Happen

Every web scraping failure fits into one of five layers. The trick is isolating which layer broke before you touch code. Fixing a parsing issue won’t help if your network requests are never completed, and patching a selector doesn’t solve a CAPTCHA block.

Think of these five as a hierarchy of failure; start at the bottom and move up.

1. Network Layer Failures

Typical symptoms:

- 403 Forbidden / 429 Too Many Requests: The site is blocking your scraper.

- Timeouts or Empty Responses: Target server dropped your connection.

- Inconsistent Success: Requests fail intermittently depending on IP or region.

Debugging approach:

- Check if the site loads in a regular browser from the same server.

- Swap in a fresh IP or proxy — if the request succeeds, you’ve been fingerprinted.

- Add realistic headers (User-Agent, Accept-Language, Referer).

- Use exponential backoff with retry limits to avoid repeated bans.

If you’re repeatedly seeing 403s or 429s, your scraper’s traffic signature is too obvious. Rotate proxies, randomize request timing, and stagger concurrent requests.

2. Browser & Rendering Layer Failures

Some sites load everything via JavaScript. You can fetch the HTML, but it’s a skeleton until a script populates the data. When your headless browser setup fails, you’ll see empty tables or half-loaded content.

Typical symptoms:

- HTML structure looks correct, but fields are missing.

- Works in normal Chrome but fails in headless mode.

- Random “page crashed” or “execution context destroyed” messages in logs.

Debugging approach:

- Log full rendered HTML to verify content actually loaded.

- Use wait_for_selector() or similar methods — don’t scrape before DOM ready.

- Run a single page interactively (non-headless) and watch what changes.

- Check memory limits and concurrent browser instances; rendering pools can leak.

If the problem vanishes in visible mode, it’s likely headless detection. Many sites now test for headless signatures: missing plugins, graphics contexts, or fonts. Using stealth browser builds (like undetected Playwright) usually helps.

3. Parsing & Selector Layer Failures

Once your content loads, the next risk is schema drift — the page structure changes slightly, breaking your CSS or XPath selectors. These are the silent killers: your scraper “runs successfully” but extracts nulls.

Typical symptoms:

- Output fields suddenly become empty.

- HTML looks fine, but find() or select() returns nothing.

- Logs show no errors, only bad data.

Debugging approach:

- Inspect the live page — did class names or element nesting change?

- Use broader selectors or semantic anchors (e.g., labels, text nodes).

- Implement selector versioning: tag scrapers with schema dates and auto-flag anomalies.

- Run nightly validation comparing output field counts to historical norms.

To prevent repeat breakage, add a lightweight DOM diff test to your pipeline. When a layout shifts, the system can quarantine the crawl automatically instead of delivering half-empty CSVs.

4. Logic & Control Flow Failures

Even when network and parsing layers behave, logic bugs cause subtle data corruption. Maybe pagination didn’t increment, or you overwrote your own dataset.

Typical symptoms:

- Duplicate or missing pages.

- Partial datasets after “successful” runs.

- Log shows success for every page, but totals don’t match expected count.

Debugging approach:

- Compare page counts to known values (e.g., pagination total).

- Check deduplication logic and filename patterns.

- Track each crawl as an atomic batch; fail or succeed as a unit.

- Add checksum verification for every data file after save.

When scrapers operate in distributed clusters, a single job timeout can quietly skip dozens of records. A queue-aware architecture prevents that by reassigning incomplete tasks.

5. Schema & Validation Layer Failures

The final failure type isn’t technical; it’s data integrity. You can have perfect scrapers but broken data: malformed JSON, nulls where numbers should be, outdated fields that passed unnoticed.

Typical symptoms:

- Data types inconsistent (price as text, rating as float).

- Missing timestamps or mismatched IDs.

- Drift between old and new schema versions.

Debugging approach:

- Add range and type assertions per field.

- Log null counts and value distributions.

Large-scale systems pair this with observability metrics: tracking freshness, null percentage, and schema drift over time. It’s the difference between knowing your scraper works and knowing your data’s trustworthy.

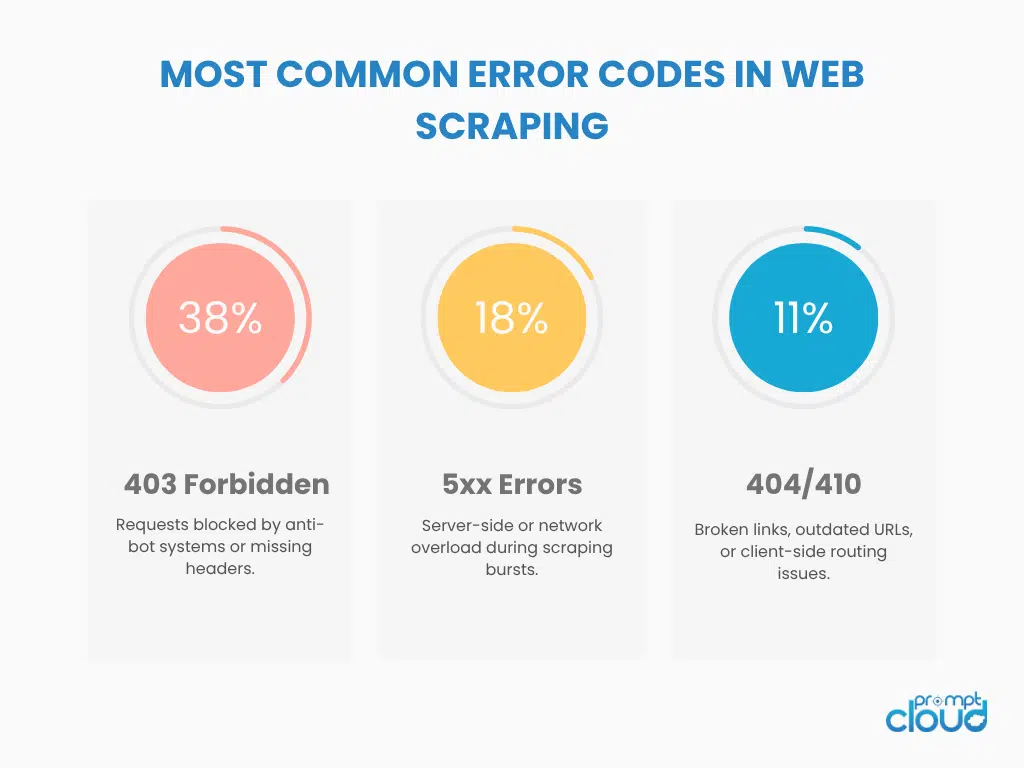

Common Web Scraping Error Codes and What They Really Mean

Quick reference

| Code | What it looks like | Likely root cause | Fast test | Practical fix |

| 403 Forbidden | Page returns 403 for bots, works in your browser | IP fingerprinted, missing headers, headless detected | Retry from a residential IP with realistic headers | Rotate IPs, add Accept-Language and Referer, slow request pace, use stealth headless, set cookies from a warm-up visit |

| 429 Too Many Requests | Spikes during bursts, clears if you wait | Rate limit per IP, per session, or per path | Reduce concurrency by 50% and try again | Backoff with jitter, per-domain concurrency caps, queue throttling, alternate paths or time windows |

| 5xx Server Errors | 500 or 503 intermittently | Target is overloaded or blocking ranges | Load site in normal browser via same proxy | Exponential backoff, retry with different IP range, respect crawl gaps, avoid synchronized bursts |

| 301 or 302 loops | Endless redirects or login wall | Geo or cookie gate, mobile vs desktop, A/B flags | Follow redirect chain manually in dev tools | Pin User-Agent, persist cookies, choose correct locale, disable auto redirect and inspect Location |

| 404 or 410 | Not found or gone | Stale URLs, JS-built routes, bad pagination | Open URL in normal browser and view network tab | Rebuild URL list from sitemaps or category crawl, handle JS routers with headless render |

| 451 Unavailable for Legal Reasons | Regional block | Geo fencing, licensing | Test from different country IP | Geo aligned proxies, source alternates, document block for compliance review |

| Captcha page | HTML contains captcha form or challenge | Behavior flagged as automated | Try in visible browser with human pause | Use challenge solving only if policy allows, otherwise slow pace, randomize actions, reuse warmed sessions |

403 Forbidden: the blocked but not banned case

Symptoms

- Works from your laptop, fails from scraper hosts

- Only some paths return 403

- 403s cluster by data center region

Root causes

- Missing or inconsistent headers

- New IP range flagged by reputation lists

- Headless browser fingerprints detected

Fixes that stick

- Use realistic headers: User-Agent that matches your browser, Accept-Language, Referer if the page is normally navigated to

- Switch to residential or mobile IPs for sensitive sections

- Use a stealth headless build and enable WebGL, fonts, media codecs

- Add a warm-up step that lands on home or category pages, sets cookies, then navigates

429 Too Many Requests: your scraper is too impatient

Symptoms

- Bursts succeed, then everything 429s

- One IP hammered, others fine

- Recoverable after a cool down

Root causes

- Concurrency spikes without pacing

- Parallel hits on the same path

- Predictable timing patterns

Fixes that stick

- Add per-domain concurrency caps and a token bucket limiter

- Randomize gaps between requests with jitter

- Spread load across paths and time windows

- Backoff tiers: 2s, 10s, 60s, then park the job

5xx Errors: not always their fault

Symptoms

- Intermittent 500 or 503 under load

- Correlates with traffic peaks on target

- Some proxies succeed while others fail

Root causes

- Target side rate protection

- Your fetch layer retry storming their origin

- CDN edge quirks for specific IP ranges

Fixes that stick

- Add circuit breaker logic per domain to prevent retry storms

- Try a different ASN or residential pool

- Respect Crawl-Delay from robots.txt even if informal

- Switch some routes to scheduled off-peak windows

Redirect loops and login detours

Symptoms

- 301 to geo domain, then back

- 302 to consent or cookie wall

- Parameters stripped by your client

Root causes

- Locale or A/B cookies required

- Consent pages on first visit

- Incorrect redirect policy in client

Fixes that stick

- Perform a warm visit, accept consent, persist cookies and reuse the session

- Pin a locale and device type consistently

- Disable auto follow once to inspect Location headers and rebuild the correct entry URL

404 or 410: when the URL is not the problem

Symptoms

- Category shows items, item URLs 404

- Pagination beyond page N returns 404

- Direct links work only after interactive navigation

Root causes

- Client-side routers build virtual paths

- Pagination index different from what you assumed

- Stale links in your seed list

Fixes that stick

- Render category pages and capture real item hrefs from the DOM

- Re-calc last page via visible pagination control or API call

- Refresh seed lists from sitemaps and canonical tags

Headless browser failures that look like data problems

Sometimes the page loads, but your data never appears. That is usually a rendering or timing issue, not a selector bug.

Tell-tale signs

- Empty containers where data should be

- Works in visible mode, fails headless

- Occasional “execution context destroyed” errors

Checklist

- Wait for a specific selector that only exists after data loads

- Set a maximum wait, then log the DOM for post-mortem

- Reuse browser contexts and close them cleanly to avoid memory bleed

- Enable stealth features: proper navigator, plugins, timezone, fonts

- If the site exposes a JSON XHR for the data, skip rendering and fetch that endpoint directly

Anti-bot triggers you can actually control

Bots are detected by patterns. Reduce the obvious ones.

- Timing: add random delays, vary request order, avoid synchronized starts

- Headers: keep them consistent per session, don’t rotate UA every request

- Behavior: land on home or category first, then navigate like a user

- Session reuse: keep cookies and localStorage across a short window

- Geo: align IP location with site expectations and language

- Footprint: limit concurrent hits to the same path or store

When selectors drift, validate before it hurts

Schema drift rarely throws an error. It just delivers empties.

What to log per page

- URL, timestamp, response code

- Selector used and the count of matches

- First 200 characters of extracted text for each field

- Null count per field

Automated guardrails

- If matches drop to zero for any required selector, fail the job

- Compare today’s field distributions to a 7 day baseline

- Quarantine batches that fail range or type checks

- Open a ticket with a DOM diff for quick patching

Decision tree: fix fast without guessing

- Check the response code

- 403 or 429: treat as rate and fingerprint issue. Apply proxy rotation, header realignment, and backoff.

- 5xx: enable circuit breaker, slow down, try different IP ranges.

- If 200 but data missing

- Rendered HTML empty: headless or timing problem. Add waits and stealth.

- HTML rich but selectors fail: schema drift. Broaden selectors or retarget anchors.

- If totals look wrong

- Recount pagination and compare it to the last successful run.

- Check dedupe and storage step for overwrites.

- Check if the Verify queue did not drop tasks after timeouts.

- Before re-running everything

- Reproduce on a single URL with full verbose logs.

- Patch once, then fan out with a small canary batch.

- Promote fix only after QA passes on the canary.

Your Repeatable Playbook to Debug Web Scraping Fails

No matter how advanced your stack gets, every team needs a runbook; a simple, repeatable debugging process that engineers can follow before panic sets in. Here’s how to make scraper recovery fast, predictable, and well-documented.

1. Step Zero: Reproduce the Error in Isolation

When a scraper fails, don’t rerun the entire job. Start with a single, reproducible URL. Use verbose logging, save raw HTML, and disable retries. The goal is to confirm what actually happened before you change anything.

| Check | Why it matters | How to confirm |

| Response code | Confirms if you’re blocked (403/429) or if the page itself is broken. | Curl or Postman test using same headers. |

| HTML length | Detects empty bodies or redirect loops. | Compare byte length to past successful runs. |

| Selectors | Finds schema drift or hidden data. | Run parser directly on the saved HTML sample. |

If the content looks correct in-browser but your scraper gets blanks, it’s likely a rendering or headless detection issue.

2. Layered Diagnosis: Go Bottom-Up

Use the five-layer hierarchy from earlier (Network → Browser → Parser → Logic → Schema). Debug one layer at a time. Here’s how it translates to a standard incident triage flow:

| Layer | Typical fix | Time to confirm |

| Network | Retry with new proxy / user-agent | 5 minutes |

| Browser | Enable visible mode, add wait-for-selector | 10 minutes |

| Parser | Inspect live DOM, broaden selector | 15 minutes |

| Logic | Check pagination or dedupe routines | 10 minutes |

| Schema | Validate field names and data types | 5 minutes |

This approach prevents wasted cycles. You can skip entire steps if your evidence already rules them out.

3. Log Smarter, Not Louder

Debugging without context is guesswork. Overlogging slows you down; underlogging blinds you. A good logging design includes four essentials:

- HTTP metadata – URL, status code, headers.

- DOM snapshot – first 500 chars of extracted text per field.

- Timing metrics – render time, total crawl duration.

- Validation metrics – null counts, field completeness score.

4. Use Canary Runs and Health Checks

A canary run is a small batch of test URLs that run before every full job. If they fail, the main crawl stays paused.

Combine this with a health dashboard showing:

- Success rate by domain

- Average latency

- Drift in record count vs previous run

- Proxy pool utilization

When one metric goes off baseline, engineers get notified early, preventing silent corruption. Teams often embed these checks into scheduling pipelines; a lightweight version of full observability.

5. Build a Scraper Incident Template

When something breaks, documentation should start immediately.

A simple, shared incident template ensures nothing gets missed.

| Field | Description | Example |

| Date / Time | When failure first detected | 2025-10-13 04:30 UTC |

| Domain / Endpoint | URL or category affected | /products/electronics/ |

| Impact | Data loss or null field ratio | 25% of prices missing |

| Suspected Cause | Selector drift / 403 / JS delay | Schema drift |

| Fix Applied | Changed selector, added wait-for-load | Adjusted CSS path |

| Verification | Canary run result | 10/10 success |

| Next Check | Follow-up time or trigger | Next crawl cycle |

Treat these incident logs like QA reports, not post-mortems. Over time, they become your best prevention tool.

6. Communicate Failures Before They Escalate

Most scraping “crises” happen because someone upstream spots bad data before the scraper team does. Prevent that by automating communication:

- Send alert summaries to Slack or email when errors cross thresholds.

- Auto-generate incident tickets for schema drift.

- Include “expected vs actual record count” in every delivery report.

Further reading, if you would like to know more:

- Google AdWords Competitor Analysis with Web Scraping — shows how accurate, timely reporting of scraping failures directly improves downstream analytics quality and campaign data integrity.

- Google Trends Scraper 2025 — demonstrates how continuous data validation prevents stale or incomplete trend datasets during live event spikes.

- JSON vs CSV for Web Crawled Data — covers how JSON’s nested structure makes debugging logs and partial extractions far easier than flat CSVs.

- Best GeoSurf Alternatives 2025 — explains how proxy rotation, ASN diversity, and IP health checks prevent recurring access errors.

7. Test Before Scaling

After a fix, don’t immediately resume full production scale. Run a targeted 5–10% subset of jobs to confirm:

- Fields populate correctly

- No unexpected 403/429 spikes

- Validation logs pass with consistent field counts

Once the canary passes, promote the patch across all crawlers. Large scraping systems operate like financial exchanges: stability beats speed. Fix small, verify fast, then scale safely.

How to Prevent Future Scraping Errors with Better Design?

Most web scraping failures aren’t random; they’re architectural. If you design the system for recovery, validation, and visibility, errors stop being emergencies and become routine maintenance. Here’s how to build resilience into your scrapers from the start.

1. Use Modular Architecture

Separate each major function into its own component — fetching, rendering, parsing, validation, and delivery. This makes failure containment possible.

| Module | Purpose | Benefit |

| Fetcher | Handles requests, retries, proxy logic | Prevents network errors from corrupting data |

| Renderer | Runs headless browsers only when required | Cuts compute cost and memory leaks |

| Parser | Extracts and transforms fields into schema | Localizes schema drift issues |

| Validator | Checks completeness and type conformity | Stops bad data before it spreads |

| Delivery | Writes final data to API, S3, or warehouse | Keeps downstream pipelines clean |

When an issue occurs, only one module needs attention; not the entire stack. This modular design is what separates quick prototypes from production-grade scrapers.

2. Automate Validation Early

Catching errors during extraction beats catching them downstream. Validation should happen before data hits storage. The Best practice is to maintain a schema definition file; specifying required fields, allowed data types, and acceptable value ranges.

If a scraper suddenly outputs prices as strings or loses 10% of records, your validation system flags it automatically and halts that job. For most enterprise systems, even a basic Pydantic or Great Expectations check cuts silent errors by 80%.

3. Introduce Drift Detection and Versioning

DOMs change slowly, then all at once.Automate schema drift detection by comparing current HTML snapshots with historical versions. A diff on class names or element structure often spots breaking changes before they cause nulls. When drift occurs, log a new schema version ID, this preserves transparency when reconciling datasets later.

4. Monitor Your Proxy Layer Like a Data Source

Proxies aren’t infrastructure, their inputs. Their health, speed, and reputation directly influence scraping accuracy. Keep per-proxy performance metrics like:

- Error rate (403, 429, 5xx)

- Median response latency

- Country and ASN mix

- Session reuse time

A balanced rotation policy, refreshed daily, can reduce block rates by up to 40%. This kind of visibility also helps you negotiate proxy vendor SLAs with evidence, not assumptions.

Read more: AIMultiple Proxy Management Report 2025 — a current industry review outlining trends in proxy orchestration, ASN diversity, and anti-bot evasion strategies.

5. Add Observability That Serves Humans

Monitoring dashboards only works if people look at them. Instead of dense logs, create clear visuals:

- Error heatmaps by domain

- Trend graphs for success and null rates

- Latency distribution to catch network saturation

- Freshness clocks showing how stale each dataset is

Integrate alerts where your team already lives — Slack, Teams, or email. When data quality dips, the system should explain why automatically.

6. Keep a Warm Cache of Known Good Selectors

Sometimes a page breaks because one selector changed. Maintaining a cached library of “known good selectors” for each domain lets you compare new layouts to old working versions quickly. You can patch from a backup instead of rebuilding from scratch.

Combine this with unit tests that check whether each selector still finds at least one match in live HTML — a simple but powerful early warning.

7. Build for Failure Recovery, Not Perfection

No scraping system is perfect. Your goal isn’t zero errors: it’s fast containment. That means:

- Canary jobs on every release

- Quarantine queues for failed crawls

- Automatic escalation after N consecutive errors

- Regular revalidation of all “fixed” scrapers

When you expect failure, you respond calmly instead of reactively. Over time, this transforms scraping operations from brittle scripts into predictable data pipelines.

8. Know When to Stop Building and Start Buying

There’s a threshold where maintaining in-house scrapers costs more than outsourcing to a managed provider. The trigger points are clear:

- More than 10 concurrent domains under active maintenance

- Weekly schema drift incidents

- Frequent IP bans across geographies

- Teams losing time to queue management or retries

When you hit that scale, offloading extraction to a service like PromptCloud ensures stability, compliance, and round-the-clock validation.

If you spend more time fixing scrapers than using the data

Want reliable, structured Temu data without worrying about scraper breakage or noisy signals? Talk to our team and see how PromptCloud delivers production-ready ecommerce intelligence at scale.

FAQs

1. Why do web scraping errors happen so often?

Because websites change constantly. Even small layout or code updates can break a scraper’s logic. Common culprits include selector drift, rate limits, and JavaScript rendering issues. The key is to expect change and build for it — with retries, validation, and monitoring instead of assuming “it’ll keep working.”

2. How can I tell if my scraper is being blocked?

You’ll usually see 403 or 429 status codes, CAPTCHAs, or empty responses while the same page loads fine in a browser. These are signs your scraper’s pattern (IP, timing, headers) has been flagged. Try slowing requests, rotating proxies, and adding real browser headers to mimic normal traffic.

3. What’s the best way to debug a broken scraper?

Start small. Re-run one failed URL in verbose mode, save the raw HTML, and compare it with a working page. Then check layer by layer:

- Network (can you reach it?)

- Browser (did it render?)

- Parser (did selectors change?)

- Schema (did validation catch it?)

This bottom-up flow catches 90% of issues quickly.

4. How do I stop schema drift from silently corrupting data?

Automate checks. Use a schema file with field rules (type, range, required), and compare current output to historical runs. When a field’s structure or value distribution changes, pause that scraper automatically. You’ll fix problems before bad data spreads downstream.

5. Can managed scraping really prevent these errors?

It won’t make the web static, but it shifts the burden. Managed scraping platforms handle proxy rotation, drift detection, validation, and compliance for you. Instead of chasing breakages, your team just receives verified, ready-to-use data feeds.