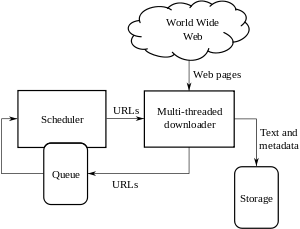

Web crawlers are a core component of web search engines. They are programs that follow links from one web page to another and gather data about the pages they visit. Search engines index the crawled pages so that users can run queries against the index and find the pages that match. Crawlers serve several purposes, including discovering and downloading web pages, following links between pages, and collecting information from pages.

A search engine employs web crawlers to find information among the millions of pages on the web by building lists of the words found on websites. This process of building lists is known as indexing. Crawling starts from a list of web addresses obtained from past crawls, along with sitemaps provided by site owners. Crawlers visit these websites and discover new links. Scheduling programs decide which websites to crawl, how often, and how many pages to fetch from each website.
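The crawl loop described above can be sketched in a few lines of Python. This is only a minimal illustration, not how any particular search engine works: the `crawl` function, the `fetch` callback and the in-memory `PAGES` dictionary are all hypothetical names invented for this example, and the toy "web" replaces real network requests so the sketch runs offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds, fetch, max_pages=100):
    """Breadth-first crawl starting from a list of seed URLs.

    `fetch` is a caller-supplied function (an assumption of this sketch)
    that maps a URL to its HTML, or returns None if the page is unavailable.
    """
    frontier = deque(seeds)   # URLs waiting to be visited
    seen = set(seeds)         # URLs already queued, to avoid revisiting
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return visited


# Toy in-memory "web" so the sketch runs without network access.
PAGES = {
    "http://example.test/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.test/a": '<a href="/b">B</a> <a href="/c">C</a>',
    "http://example.test/b": '<a href="/">home</a>',
    "http://example.test/c": "no links here",
}

order = crawl(["http://example.test/"], PAGES.get)
print(order)
```

The `max_pages` limit stands in for the scheduling decisions mentioned above; a real crawler would also decide how often to revisit each site.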

Search engines gather pages during the crawl process and then create an index. The index records words and their locations. At the most basic level, when a user searches, algorithms look up the query terms in the index to find relevant pages.
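An index of words and their locations is commonly implemented as an inverted index. The following is a minimal sketch under simplifying assumptions: the function names `build_index` and `search`, the sample `docs`, and the rule that a page matches only if it contains every query term are all choices made for this illustration, not a description of a real search engine.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each word to {url: [positions]}, where a position is a word offset."""
    index = defaultdict(dict)
    for url, text in pages.items():
        words = re.findall(r"[a-z0-9]+", text.lower())
        for position, word in enumerate(words):
            index[word].setdefault(url, []).append(position)
    return index


def search(index, query):
    """Return the sorted URLs that contain every term in the query."""
    terms = re.findall(r"[a-z0-9]+", query.lower())
    if not terms:
        return []
    matches = set(index.get(terms[0], {}))
    for term in terms[1:]:
        matches &= set(index.get(term, {}))
    return sorted(matches)


docs = {
    "page1": "Web crawlers follow links",
    "page2": "Search engines index web pages",
    "page3": "Crawlers index pages",
}
index = build_index(docs)
print(index["web"])                   # {'page1': [0], 'page2': [3]}
print(search(index, "index pages"))   # ['page2', 'page3']
```

Storing positions as well as page identifiers is what would allow phrase or proximity matching later, although this sketch does not use them for ranking.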

Despite its numerous benefits, the drawbacks of web crawling can’t be overlooked. The biggest concern is the threat to privacy. Web crawlers, also called spiders, are automated bots that can scan and copy information from web pages. Individuals expect some privacy constraints even on publicly accessible data.

At present, there are no specific guidelines that express such restrictions in a machine-readable form. As a result, website owners block crawlers that behave unacceptably in order to prevent hacking and theft of strategic information. Some sites also state restrictions in their terms of service and take legal action against violators. However, this is not a practical solution for operators of web crawlers, because checking such terms requires manual intervention, which is expensive. The main reason for reservations about web crawling is that behavioral data is available in ever-increasing quantity, and web crawlers are extremely cheap to set up and operate.

Another problem with web crawling is that most web pages are designed to be read by humans. As a result, much of the content on a page exists for presentation purposes, such as navigation menus, headers and footers. That additional material makes the page more pleasant for a human reader but is of little use to a web crawler. A crawler that indexes it along with the main content reduces the quality of the indexed information.
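One way a crawler might reduce this noise is to skip presentational elements before indexing. The sketch below assumes pages mark such regions with the HTML5 `<nav>`, `<header>` and `<footer>` tags, which many real pages do not. The class name `MainTextExtractor` and the sample markup are made up for illustration.

```python
from html.parser import HTMLParser


class MainTextExtractor(HTMLParser):
    """Collects text that is not inside nav, header, footer, script or style."""

    SKIP = {"nav", "header", "footer", "script", "style"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0   # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.skip_depth == 0:
            self.chunks.append(text)


def main_text(html):
    """Return the page text with boilerplate regions removed."""
    parser = MainTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


sample = """
<html><body>
  <header><h1>Site name</h1></header>
  <nav><a href="/">Home</a> <a href="/about">About</a></nav>
  <p>Crawlers index the main article text.</p>
  <footer>Copyright notice</footer>
</body></html>
"""
print(main_text(sample))  # Crawlers index the main article text.
```

Pages that build their menus from plain `<div>` elements would need heuristics beyond this tag-based filter.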

Another significant issue is that some web crawlers flout regulatory policies and break the accepted rules of crawling.

Some of the legal policies associated with web crawling that crawler operators need to know are:

- Data should not be archived beyond a definite period.

- Limited crawling is allowed, but large-scale crawling is strictly prohibited.

- Public content can be crawled only in adherence with copyright policies.

- Robots.txt tells you which parts of a website may be crawled and which may not. A web page that is blocked must not be crawled. It acts as a consent form that must be honored whenever a page is crawled.

- The terms of use of a website must be checked to ensure transparency.

- Many authentication-based websites discourage crawling so that crawlers do not hit their servers too hard.

- Robots.txt may specify the delay that must be observed between consecutive requests. Hitting the server in rapid succession can get your IP address blocked.

- Personal information should not be linked with other databases.
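The robots.txt checks in the list above can be automated with Python's standard `urllib.robotparser` module. The sketch below parses an inline robots.txt instead of downloading one. The file contents, the `ExampleBot` user agent and the `example.test` URLs are invented for illustration. Note that `Crawl-delay` is a non-standard directive that not every site provides and not every crawler honors.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, inlined so the sketch runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Is this user agent allowed to fetch each URL?
allowed_public = parser.can_fetch("ExampleBot", "http://example.test/index.html")
allowed_private = parser.can_fetch("ExampleBot", "http://example.test/private/data.html")

# Number of seconds to wait between requests, or None if not specified.
delay = parser.crawl_delay("ExampleBot")

print(allowed_public, allowed_private, delay)  # True False 5
```

A polite crawler would call `can_fetch` before every request and sleep for at least `delay` seconds between requests to the same host.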

Several companies use web scraping services to stay ahead of the competition. They scrape online social networks for behavioral data, complex financial data and other information to understand market trends and customer behavior. Web scraping extracts data from websites and converts it from HTML into another format, such as XML, that is easier to process.
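As a rough illustration of converting HTML into XML, the sketch below turns a simple HTML table into XML records using only the Python standard library. It assumes a well-formed table whose first row holds single-word column headings. The names `TableRows` and `table_to_xml` and the sample product data are hypothetical.

```python
from html.parser import HTMLParser
import xml.etree.ElementTree as ET


class TableRows(HTMLParser):
    """Collects the cell text of each <tr> as a list of strings."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self.in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th") and self.rows:
            self.in_cell = True
            self.rows[-1].append("")

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self.in_cell = False

    def handle_data(self, data):
        if self.in_cell:
            self.rows[-1][-1] += data.strip()


def table_to_xml(html):
    """Convert a table into <items><item>...</item></items>.

    Assumes the first row contains headings that are valid XML tag names.
    """
    parser = TableRows()
    parser.feed(html)
    header, *body = parser.rows or [[]]
    root = ET.Element("items")
    for row in body:
        item = ET.SubElement(root, "item")
        for name, value in zip(header, row):
            ET.SubElement(item, name.lower()).text = value
    return ET.tostring(root, encoding="unicode")


sample_table = """
<table>
  <tr><th>Name</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""
xml_output = table_to_xml(sample_table)
print(xml_output)
```

Real pages are rarely this regular, so production scrapers usually rely on a more tolerant HTML parser and site-specific extraction rules.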

Some of the worst web scraping headaches arise when competitors crawl a company’s website to gain a strategic advantage. Scraping is usually done by software that extracts valuable data from the website and modifies it according to customers’ preferences. Most web scrapers pay no attention to the fine line between collecting information and stealing it. Almost every website has a copyright statement that legally protects the information on the website. However, it is entirely up to the scraper to read that statement and follow it ethically. For instance, a regional software company had to pay a $20 million fine for illegally scraping the website of a noted database company to obtain strategic information.

There have been several other cases in which data scrapers did serious harm to the people and organizations involved. Some of these illegal scrapers have been penalized, while others continue their illegal activities freely. Today, protecting our information and websites from illegal scraping and crawling is our single biggest challenge.