**TL;DR** A leading used car marketplace came to PromptCloud with a very familiar problem: chaotic, incomplete, and inconsistent auto listings across multiple sources. Between missing specifications, constantly changing prices, and duplicate listings, their internal teams were losing time and accuracy just trying to compile the data they needed.

Enter PromptCloud’s custom web scraping services.

We didn’t just hand over a scraper. We built a crawler designed to cut through the mess, pulling in over 10,000 car listings from multiple sources, cleaning them up, and giving the client structured, reliable data they could act on immediately. No delays. No guesswork. Just a real-time pulse on the market that gave them faster analysis, smarter pricing, and complete visibility into their inventory. This wasn’t just a backend fix; it transformed their entire marketplace strategy.

If you’re a decision-maker evaluating scraping vendors for your car marketplace, this is your chance to see what enterprise-grade web scraping looks like in action.

Why Car Marketplaces Run on Data, Not Just Listings

Let’s call it like it is: data is what powers today’s car marketplaces. Whether you’re listing used cars, brand-new models, or rental fleets, you’re not just in the business of selling vehicles. You’re in the business of serving clean, accurate, up-to-date data, because that’s what buyers trust, and that’s what keeps them coming back.

And that’s where things usually fall apart.

Auto listings come from dozens, sometimes hundreds, of sources. Dealerships, OEM portals, classifieds, and inventory partners each have their own formats, update frequencies, and naming conventions. One source might list “Hyundai Tucson 2.0 CRDi AT GLS,” while another calls it “Tucson GLS Diesel Auto.” You can imagine what that does to your filters, your search results, and your backend logic.

The marketplace that gets it right wins big. The one that doesn’t? Loses traffic, trust, and traction.

That’s the pressure one of our clients, a fast-scaling automotive marketplace focused on used cars, was facing when they came to us. Their internal team was battling messy data feeds, duplicate entries, and pricing inconsistencies across the board. Market analysis was slow. Inventory was hard to normalize. Decisions were reactive, not strategic.

They didn’t need more data. They needed better data.

So, we built them a crawler from the ground up. One that could scrape and analyze over 10,000 auto listings from multiple sources. One that was fast, accurate, compliant, and tailored for the real-world chaos of car marketplaces.

This is the story of how that crawler changed their business.

If you’re evaluating web scraping services for your own auto platform, whether it’s for new cars, used cars, or both, this case study is going to show you what’s possible when you stop struggling with data and start using it as your competitive edge.

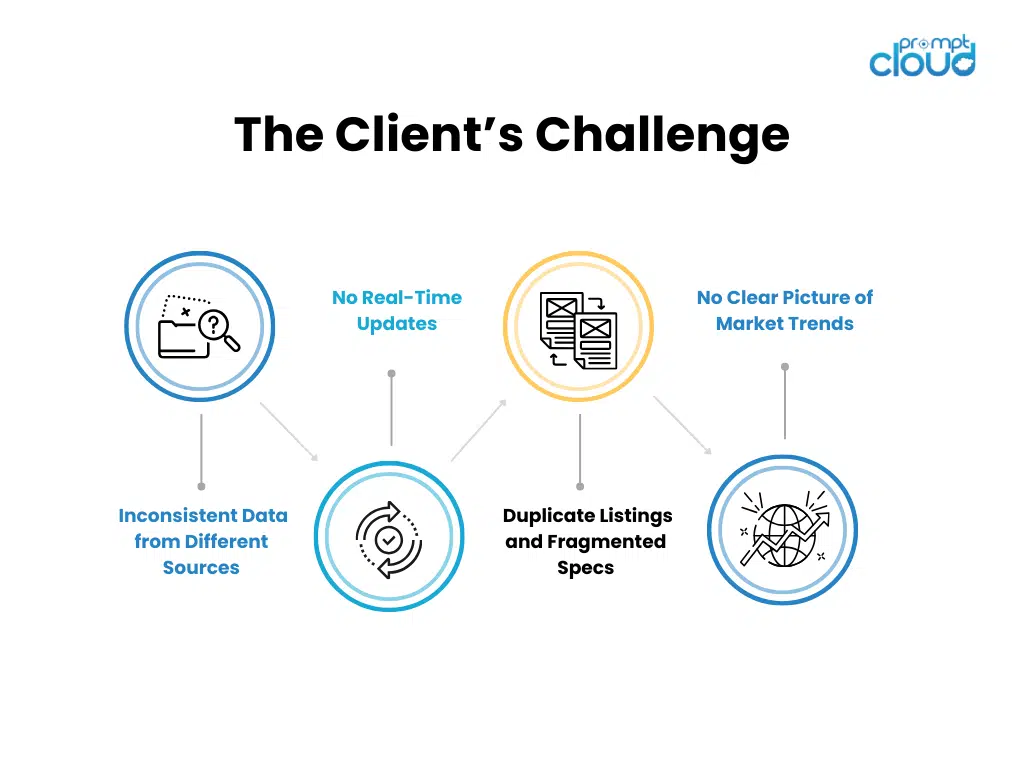

The Client’s Challenge: Data Chaos in Auto Listings

The client, a growing automotive marketplace, wasn’t new to the game. They had dealers onboarded, traffic picking up, and ambitious plans to expand across new cities. But their biggest roadblock wasn’t scaling operations. It was scaling car listings data.

And the issues? They were piling up fast.

1. Inconsistent Data from Different Sources

They were pulling in listings from a variety of dealer websites, classifieds, and aggregator feeds. Each one had its own structure. Some had prices but no mileage. Others had trim details but skipped service history. In some cases, two listings would show the same car, but you’d never know it because the titles, formats, and images didn’t match.

2. No Real-Time Updates

Prices changed daily. Inventory moved fast. But the client’s data update system? It lagged by days. Users would click on a car, ready to book a test drive, only to find out it had been sold days earlier. That’s not just a bad experience. That’s how you lose trust, and eventually, traffic.

3. Duplicate Listings and Fragmented Specs

One Honda Civic could appear six times, each with slightly different specs. Worse, some listings would say “Automatic” in the title but “Manual” in the description. That kind of inconsistency made their search filters practically useless. It also made dealer attribution and analytics impossible to trust.

4. No Clear Picture of Market Trends

With all this mess, the client couldn’t confidently answer simple but critical questions:

What’s the average resale price of a five-year-old SUV in Delhi? Which models are trending this month? Where’s the price drop pressure coming from?

Without structured, unified car data, they couldn’t track inventory movements, measure dealer performance, or refine their pricing engine. They were flying blind, with dozens of spreadsheets and multiple teams manually cleaning listings just to get by.

They didn’t need more engineers. They needed infrastructure. They needed a crawler built for auto marketplaces.

That’s where PromptCloud came in.

If you're evaluating whether to continue scaling DIY infrastructure or move to governed global feeds, this is the conversation to have.

Let’s connect.

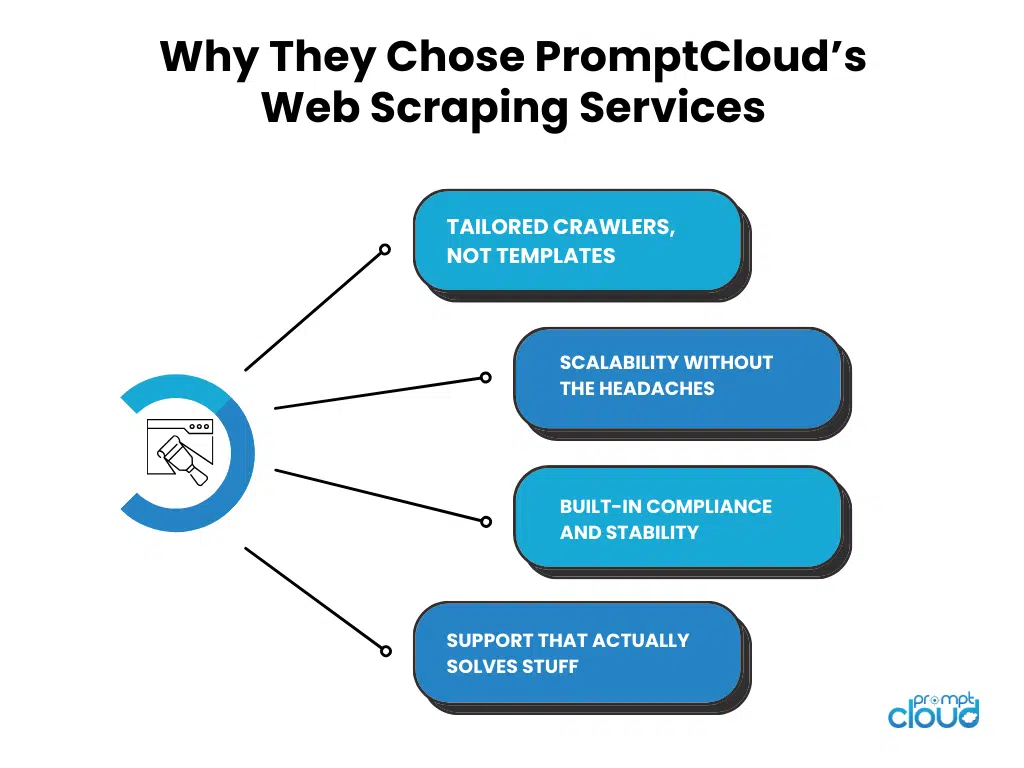

The Turning Point: Why They Chose PromptCloud’s Web Scraping Services

The client didn’t come to us casually. They’d already tried building a few scrapers in-house. They’d tested a low-cost vendor. They’d wrestled with broken crawls, slow delivery, and support tickets that went nowhere. By the time they reached out, their question wasn’t “Should we use web scraping?” It was, “Can anyone actually do this right?”

They needed more than just data delivery; they needed a reliable web scraping partner who understood the complexities of car marketplaces and could scale with them.

Here’s what made PromptCloud the obvious choice:

1. Tailored Crawlers, Not Templates

They weren’t interested in a generic, one-size-fits-all scraper. Their business model demanded precision, down to trim-level specs, real-time price fluctuations, and location-tagged listings. Our approach of building fully custom crawlers meant they could extract the exact fields they needed from each source, no compromises.

We weren’t offering them “scraped data.” We were offering operational control.

2. Scalability Without the Headaches

They were growing fast, and their data pipeline needed to keep up. We showed them how our infrastructure could crawl 10,000+ auto listings daily across multiple websites, without slowing down, crashing, or triggering bot detection systems. Whether they were onboarding 5 new dealerships or 50, our system could scale with a simple config update, not a rebuild.

3. Built-In Compliance and Stability

The client’s legal team was cautious (as they should be). They had questions: “Is this compliant? Are we risking IP bans? What happens if a source changes layout overnight?”

We walked them through our ethical scraping practices: source monitoring, intelligent throttling, IP rotation, fallback systems, and more. We made sure every crawl respected each source’s terms and anti-bot policies while still delivering the data they needed. Our uptime was 99.9%. Our commitment to compliance? 100%.

They didn’t just feel supported; they felt safe.

4. Support That Actually Solves Stuff

They’d been burned by vendors who vanished after onboarding. With PromptCloud, they had direct access to a team that not only understood their use case but actually spoke their language. Weekly check-ins, response SLAs, and rapid crawler tweaks meant no chasing and no delays.

They got a partner, not a helpdesk ticketing system.

The moment they saw their first batch of structured, unified, real-time listings across six major sources was when the relationship clicked.

Because for the first time, their team wasn’t cleaning data. They were using it.

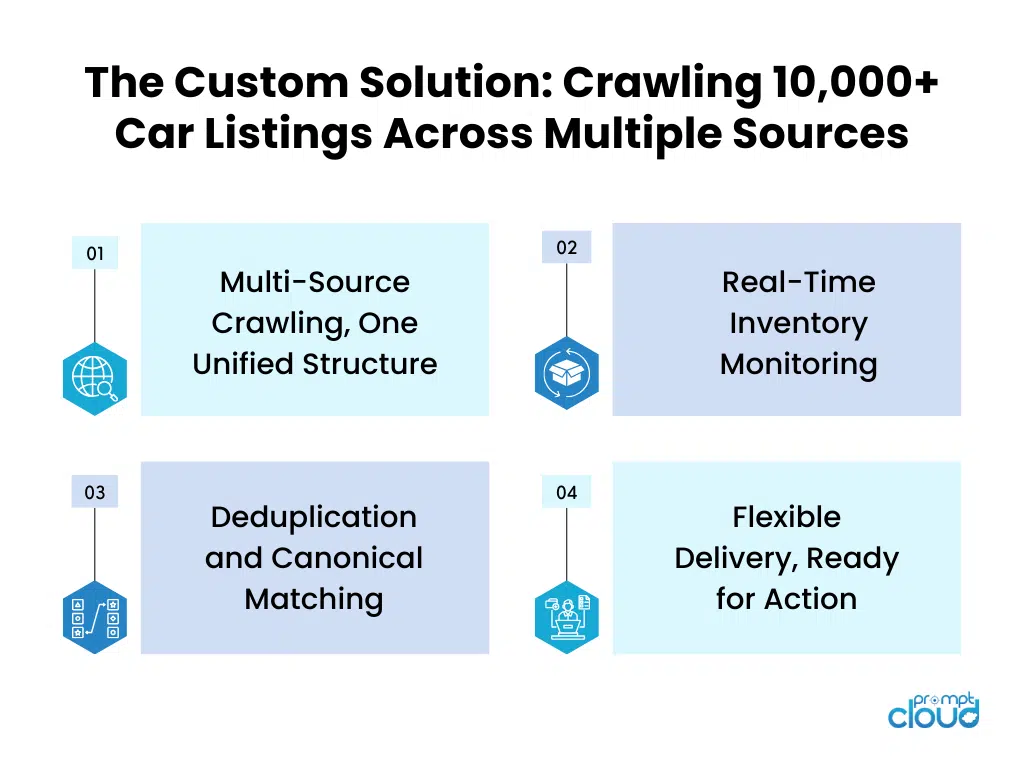

The Custom Solution: Crawling 10,000+ Car Listings Across Multiple Sources

Once the scope was defined, it was time to build.

We didn’t offer the client an off-the-shelf scraper or hand them some open-source scripts. We engineered a custom crawling infrastructure tailored specifically for car marketplace data. It wasn’t just about scraping more listings; it was about scraping smarter.

Here’s what that looked like in practice:

Multi-Source Crawling, One Unified Structure

The client needed data from six key sources: dealership sites, classified portals, and competing aggregators. Each one had a different structure, and each one changed frequently.

We built dedicated crawlers for each domain, using dynamic schema mapping to normalize fields across platforms. Whether one source called it “2.0 Turbo Petrol” and another said “Petrol 2L Turbocharged,” it didn’t matter. The output was clean, consistent, and ready to use: engine type, transmission, model variant, and fuel efficiency landed exactly where they needed to be, every single time. Structured. Searchable.
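To make schema mapping concrete, here is a minimal Python sketch under stated assumptions: the source names (`dealer_site_a`, `classified_b`), their field names, and the unified keys (`model_variant`, `engine_desc`, `transmission`) are all hypothetical, and the engine parser uses a few simple regular expressions where a production mapper would need many more rules. It renames each source's keys to one unified schema and turns free-text engine descriptions into structured fields.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical per-source field maps: source-specific key -> unified schema key.
FIELD_MAPS = {
    "dealer_site_a": {"title": "model_variant", "engine": "engine_desc", "gearbox": "transmission"},
    "classified_b": {"name": "model_variant", "motor": "engine_desc", "trans": "transmission"},
}

# Illustrative fuel synonyms; "crdi" is a common diesel badge on Indian listings.
FUEL_SYNONYMS = {"petrol": "petrol", "gasoline": "petrol", "diesel": "diesel",
                 "crdi": "diesel", "cng": "cng", "electric": "electric", "ev": "electric"}


@dataclass(frozen=True)
class Engine:
    displacement_l: Optional[float]
    fuel: Optional[str]
    turbo: bool


def parse_engine(text: str) -> Engine:
    """Parse a free-text engine description into structured fields."""
    t = text.lower()
    # Single-digit displacement such as "2.0" or "2l"; word boundaries skip years like "2019".
    m = re.search(r"\b(\d(?:\.\d)?)\s*l?\b", t)
    displacement = float(m.group(1)) if m else None
    # First fuel synonym found as a whole word, mapped to its canonical name.
    fuel = next((canon for word, canon in FUEL_SYNONYMS.items()
                 if re.search(rf"\b{word}\b", t)), None)
    # "turbo" also matches "turbocharged".
    return Engine(displacement, fuel, turbo="turbo" in t)


def normalize_listing(source: str, raw: dict) -> dict:
    """Rename a raw record's fields to the unified schema and parse the engine text."""
    mapping = FIELD_MAPS[source]
    out = {mapping[k]: v for k, v in raw.items() if k in mapping}  # unmapped fields are dropped
    if "engine_desc" in out:
        out["engine"] = parse_engine(out.pop("engine_desc"))
    return out
```

Because both “2.0 Turbo Petrol” and “Petrol 2L Turbocharged” parse to the same `Engine` value, downstream filters and deduplication can compare them directly instead of matching strings.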

Real-Time Inventory Monitoring

Inventory in the car business is liquid. One listing might expire within hours. A dealer might drop prices over lunch. So, we set up high-frequency crawls, some running hourly, others in staggered windows based on each source’s behavior and the client’s data priorities.

And this wasn’t brute force scraping. It was intelligent scheduling.
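One way to express staggered, per-source crawl windows is a small priority-queue scheduler. The sketch below assumes each source has a fixed recrawl interval and a hand-picked start offset; the source names and numbers are hypothetical, and a real scheduler would likely tune intervals from each source's observed change rate rather than hard-coding them.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    name: str
    interval_min: int  # how often to recrawl, in minutes
    offset_min: int    # start offset, so sources don't all fire at once


def plan_runs(sources: list[Source], horizon_min: int) -> list[tuple[int, str]]:
    """Return (minute, source_name) crawl slots within [0, horizon_min), in time order."""
    heap = [(s.offset_min, s.name, s.interval_min) for s in sources]
    heapq.heapify(heap)
    runs = []
    while heap and heap[0][0] < horizon_min:
        t, name, interval = heapq.heappop(heap)
        runs.append((t, name))
        # Re-queue the source for its next window.
        heapq.heappush(heap, (t + interval, name, interval))
    return runs


# Hypothetical sources: a fast-moving dealer portal, slower classifieds and aggregators.
SOURCES = [
    Source("dealer_portal", interval_min=60, offset_min=0),
    Source("classifieds", interval_min=180, offset_min=15),
    Source("aggregator", interval_min=360, offset_min=30),
]
```

Over a four-hour horizon, `plan_runs(SOURCES, 240)` schedules the dealer portal four times and the other sources once or twice, with their start times spread apart rather than stacked at minute zero.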

We didn’t just hammer each site with full crawls every time. Each crawler was smart enough to spot what had changed (new listings, sold vehicles, price updates) and pull only what was different. No wasted cycles. No stale data. It saved bandwidth. It respected the source. And it gave the client fresh data without lag.
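A minimal sketch of that change detection, assuming each crawl of a source's index pages yields a mapping of listing ID to current price. It compares the new snapshot with the previous one and tags each changed listing; only new or repriced IDs would then need their detail pages fetched again. Treating a vanished listing as “sold” is a simplifying assumption (a dealer may also withdraw it), and the “price changed” tag for increases is hypothetical.

```python
def diff_snapshots(previous: dict[str, int], current: dict[str, int]) -> dict[str, str]:
    """Compare two {listing_id: price} snapshots and tag what changed."""
    events = {}
    for listing_id, price in current.items():
        old = previous.get(listing_id)
        if old is None:
            events[listing_id] = "new listing"
        elif price < old:
            events[listing_id] = "price dropped"
        elif price > old:
            events[listing_id] = "price changed"  # hypothetical tag for increases
        # Unchanged listings produce no event and are not re-crawled.
    # Listings that disappeared since the last crawl are assumed sold.
    for listing_id in previous.keys() - current.keys():
        events[listing_id] = "sold"
    return events


def ids_to_refetch(events: dict[str, str]) -> set[str]:
    """Only new or repriced listings need their detail pages crawled again."""
    return {lid for lid, tag in events.items() if tag != "sold"}
```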

Deduplication and Canonical Matching

Duplicate listings were one of the client’s biggest headaches. The same vehicle (same VIN, same dealer) could appear multiple times with slightly different metadata.

So, we added a deduplication layer using a combination of fuzzy matching and unique-identifier logic. It grouped near-identical listings, kept the most complete one as the canonical record, and flagged the rest for removal.

That meant no more confusion in the search results. No more price variation for the same car. Just clarity.
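The grouping-and-ranking step might look roughly like the sketch below. It assumes each normalized listing is a dict with hypothetical `vin`, `dealer`, `title`, and `price` fields, treats a shared VIN as an exact match, and otherwise falls back to fuzzy title similarity using Python's standard-library `difflib.SequenceMatcher`, restricted to the same dealer and a similar price. The 0.85 similarity threshold and 2% price tolerance are illustrative, not tuned values, and comparing each listing only against the first member of a group keeps the sketch simple at the cost of some recall.

```python
from difflib import SequenceMatcher


def is_duplicate(a: dict, b: dict, sim_threshold: float = 0.85, price_tol: float = 0.02) -> bool:
    # Unique-identifier logic: when both listings carry a VIN, it settles the question.
    if a.get("vin") and b.get("vin"):
        return a["vin"] == b["vin"]
    # Fuzzy fallback: same dealer, near-identical title, price within tolerance.
    if a.get("dealer") != b.get("dealer"):
        return False
    title_sim = SequenceMatcher(None, a["title"].lower(), b["title"].lower()).ratio()
    price_gap = abs(a["price"] - b["price"]) / max(a["price"], b["price"])
    return title_sim >= sim_threshold and price_gap <= price_tol


def completeness(listing: dict) -> int:
    """Count populated fields; the most complete listing becomes canonical."""
    return sum(1 for v in listing.values() if v not in (None, "", []))


def deduplicate(listings: list[dict]) -> tuple[list[dict], list[dict]]:
    """Greedily group duplicates; return (canonical listings, flagged duplicates)."""
    groups: list[list[dict]] = []
    for listing in listings:
        for group in groups:
            if is_duplicate(group[0], listing):
                group.append(listing)
                break
        else:
            groups.append([listing])
    canonical, flagged = [], []
    for group in groups:
        ranked = sorted(group, key=completeness, reverse=True)
        canonical.append(ranked[0])
        flagged.extend(ranked[1:])
    return canonical, flagged
```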

Flexible Delivery, Ready for Action

The final data was delivered exactly how the client needed it, via a secure API and scheduled JSON data dumps. We added tags for “new listing,” “price dropped,” and “sold,” so their internal systems could trigger actions right away.

Within days, their product team had cleaner search filters. The pricing team got a clearer view of market trends. And the leadership team finally had the one thing they’d been missing: trust in the data.

What we built wasn’t just a crawler; it was a data engine that plugged directly into the way their car marketplace operated.

Handling Real-World Complexities in Cars Data Extraction

Here’s the thing about crawling auto listings at scale: it’s never clean. Anyone can scrape a static page or pull five data points from a clean feed. But real-world car marketplaces don’t work like that.

They’re messy. Dynamic. Designed for human browsing, not machine parsing. And if your web scraping setup isn’t built to deal with that, it breaks, and often.

So, we built our solution with the mess in mind.

1. Dynamic Pages and JavaScript-Loaded Content

Some of the client’s key sources didn’t load listings in the initial HTML page at all. Data came in through background JavaScript calls, so traditional crawlers saw blank pages. We built render-aware crawlers that could wait for AJAX content, parse shadow DOMs, and extract the listing data once the page had fully loaded.

It wasn’t just scraping; it was controlled page simulation, done at scale.

2. Pagination Logic That Actually Works

One platform buried listings across hundreds of paginated results with no standard structure. Some pages loaded via infinite scroll. Others had non-incremental URLs. We reverse-engineered the pagination logic for each site to ensure every listing was captured, no matter how deep it sat.

No cars left behind.
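Pagination schemes differ, but many of them, including infinite scroll (which often calls a JSON endpoint behind the scenes), come down to following a “next” token until none is returned. The sketch below assumes a hypothetical `fetch_page(cursor)` callable that returns one page's listings plus the next cursor, or `None` on the last page. Cursors are treated as opaque, so non-incremental URLs work the same way, and a set of seen cursors stops a site whose pagination loops back on itself from trapping the crawler.

```python
from typing import Callable, Optional

Page = tuple[list[dict], Optional[str]]  # (listings on this page, next cursor or None)


def crawl_all_pages(fetch_page: Callable[[Optional[str]], Page], max_pages: int = 10_000) -> list[dict]:
    """Follow next-page cursors until exhausted, guarding against loops."""
    listings: list[dict] = []
    seen_cursors: set[Optional[str]] = set()
    cursor: Optional[str] = None  # None means "first page"
    for _ in range(max_pages):
        if cursor in seen_cursors:
            break  # the site pointed back to a page we already crawled
        seen_cursors.add(cursor)
        items, cursor = fetch_page(cursor)
        listings.extend(items)
        if cursor is None:
            break  # last page reached
    return listings
```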

3. Location-Based Pricing Variations

A big complexity in car marketplaces is regional pricing. The same model could be listed at different prices across cities due to taxes, local demand, or dealer discounts. We built logic to extract and tag location-specific pricing for each listing, then grouped results by region for downstream analysis.

Now the client could slice data by city, state, or dealer zone, without touching a spreadsheet.
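Once each listing carries location tags, regional comparison becomes a grouping problem. A minimal sketch, assuming normalized listings with hypothetical `model`, `year`, `city`, `state`, and `price` fields. It uses the median so that a single mispriced listing does not skew a region's figure, and the `by` parameter switches between city-level and state-level (or any other tagged zone) rollups.

```python
from collections import defaultdict
from statistics import median


def regional_medians(listings: list[dict], by: str = "city") -> dict[tuple, float]:
    """Median asking price per (model, year, region), where region is the `by` field."""
    buckets: dict[tuple, list[int]] = defaultdict(list)
    for item in listings:
        buckets[(item["model"], item["year"], item[by])].append(item["price"])
    return {key: median(prices) for key, prices in buckets.items()}
```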

4. Image Handling and VIN Extraction

Some sources embedded key details inside image carousels. Others tucked away VINs inside image file names or secondary listing tabs. Our crawler framework was extended to capture and label images with metadata, allowing the client to run image-driven listing validation and VIN tracking downstream.

That meant fewer fakes, fewer misclassified listings, and stronger data confidence.
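Pulling VIN candidates out of image file names or tab text can start with a pattern match. Standard 17-character VINs (ISO 3779) never use the letters I, O, or Q, which the regular expression below excludes. The optional check-digit test applies the position-9 check digit that is mandatory for North American VINs; many other markets do not require it, so a failure there is best treated as a signal for review rather than proof of a fake. The file names in the usage are illustrative.

```python
import re

# 17 characters from the VIN alphabet (digits and letters except I, O, Q),
# not embedded in a longer alphanumeric run.
VIN_RE = re.compile(r"(?<![A-HJ-NPR-Z0-9])[A-HJ-NPR-Z0-9]{17}(?![A-HJ-NPR-Z0-9])")

# Letter-to-number transliteration and position weights for the check-digit calculation.
_TRANSLIT = {**{str(d): d for d in range(10)},
             **dict(zip("ABCDEFGH", range(1, 9))),
             **dict(zip("JKLMN", range(1, 6))), "P": 7, "R": 9,
             **dict(zip("STUVWXYZ", range(2, 10)))}
_WEIGHTS = [8, 7, 6, 5, 4, 3, 2, 10, 0, 9, 8, 7, 6, 5, 4, 3, 2]


def extract_vins(text: str) -> list[str]:
    """Find VIN-shaped tokens in a file name or text snippet (case-insensitive)."""
    return VIN_RE.findall(text.upper())


def has_valid_check_digit(vin: str) -> bool:
    """North American check digit (position 9); expects a VIN from extract_vins()."""
    total = sum(_TRANSLIT[c] * w for c, w in zip(vin, _WEIGHTS))
    expected = "X" if total % 11 == 10 else str(total % 11)
    return vin[8] == expected
```

For example, `extract_vins("img_1HGCM82633A004352_front.jpg")` yields the single VIN in the file name, which can then be checked and attached to the listing's metadata.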

5. Anti-Bot Defenses and Crawl Stability

One of the trickier sources started blocking requests after a few dozen visits. We deployed a rotating IP strategy with distributed crawl nodes and user-agent rotation. When the site upgraded its anti-bot measures, we added randomized delay logic and session-based headers to fly under the radar.

In short: no downtime, no bans, no broken crawls.

These weren’t edge cases. These were the realities of pulling automobile data at scale from the open web. The difference? Our infrastructure didn’t crack under the pressure; it adapted.

The Compliance Edge: How PromptCloud Ensures Safe Scraping

Let’s be honest, web scraping gets a bad rap. And it’s not because it doesn’t work. It’s because most people doing it are either cutting corners or flying blind.

That’s where PromptCloud is different.

For our client, scraping wasn’t just about speed or volume. It was about reliability and reputation. They needed a solution that worked at scale but wouldn’t land them in legal gray zones or get their IPs blacklisted by the very sources they depended on. Their CTO said it best: “We don’t want to play defense every week just to get our data.”

We agreed.

Built-In Compliance from Day One

Every crawler we deploy is designed with compliance as a non-negotiable. Before writing a single line of code, we analyzed the client’s target sources for scraping terms, traffic thresholds, bot rules, and acceptable behavior.

If a source explicitly disallowed crawling, we flagged it. If it allowed it within reason, we designed a plan that respected those limits. This wasn’t about being safe later. It was about starting safe and staying safe.
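Part of that pre-crawl review can be automated. Python's standard-library `urllib.robotparser` reads a site's robots.txt rules and reports whether a given user agent may fetch a URL and what crawl delay, if any, the site requests. The sketch parses an inline robots.txt for illustration (against a live site you would call `set_url()` and `read()` instead); the agent name, domain, and paths are hypothetical, and robots.txt is only one input alongside a site's terms of use.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content; a real review would fetch the source's live file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""


def review_source(robots_txt: str, agent: str, urls: list[str]) -> dict:
    """Split candidate URLs into allowed and flagged, and report the requested crawl delay."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        "allowed": [u for u in urls if parser.can_fetch(agent, u)],
        "flagged": [u for u in urls if not parser.can_fetch(agent, u)],
        "crawl_delay": parser.crawl_delay(agent),  # None if the site sets no delay
    }
```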

IP Rotation and Source-Aware Load Management

To avoid overloading any site or triggering blocks, we implemented intelligent IP rotation across multiple data centers, mimicking distributed human traffic. Our crawlers also adhered to crawl-delay rules, randomized their access patterns, and sent realistic browser headers, all designed to keep the experience frictionless for source websites.

That meant zero downtime and zero escalations during the entire engagement.
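Source-aware load management can start with a per-host gate that enforces a minimum gap between requests plus some random jitter. The sketch below is illustrative only: the delay values are hypothetical, the clock and sleep functions are injectable so the logic can be tested without actually waiting, and it covers request pacing alone, not IP rotation.

```python
import random
import time
from typing import Callable, Optional


class HostThrottle:
    """Enforce a minimum, jittered delay between requests to the same host."""

    def __init__(self, base_delay_s: float, jitter_s: float = 0.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep,
                 rng: Optional[random.Random] = None):
        self.base_delay_s = base_delay_s
        self.jitter_s = jitter_s
        self.clock = clock
        self.sleep = sleep
        self.rng = rng or random.Random()
        self._last: dict[str, float] = {}  # host -> time of the last request

    def wait(self, host: str) -> float:
        """Block until `host` may be hit again; return the seconds slept."""
        gap = self.base_delay_s + self.rng.uniform(0, self.jitter_s)
        last = self._last.get(host)
        slept = 0.0
        if last is not None:
            slept = max(0.0, last + gap - self.clock())
            if slept:
                self.sleep(slept)
        self._last[host] = self.clock()
        return slept
```

A natural choice for `base_delay_s` is the crawl delay a site publishes in its robots.txt, with jitter added on top so requests do not arrive on a fixed beat.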

Bot Detection? Not a Problem

Some sources used sophisticated bot detection: CAPTCHAs, session tokens, and even behavioral fingerprinting. We had answers for each. From running real browsers in headless mode to rotating user agents dynamically, our system was able to access protected content without crossing any ethical or legal boundaries.

The result? Our client got uninterrupted access to crucial auto listings while maintaining good standing with every platform we scraped.

Secure Delivery and Internal Governance

Every dataset delivered went through encrypted channels, with strict access controls on both ends. The client could track who accessed what, when, and how. For an auto platform preparing to scale internationally, that level of visibility and governance mattered.

Because this wasn’t just about compliance with websites. It was about compliance with their own internal data policies and the trust they owed their users.

In a space as fast-moving and competitive as car marketplaces, the last thing you want is a scraping setup that creates new problems while solving old ones. With PromptCloud, they didn’t have to choose between data access and data ethics.

They got both.

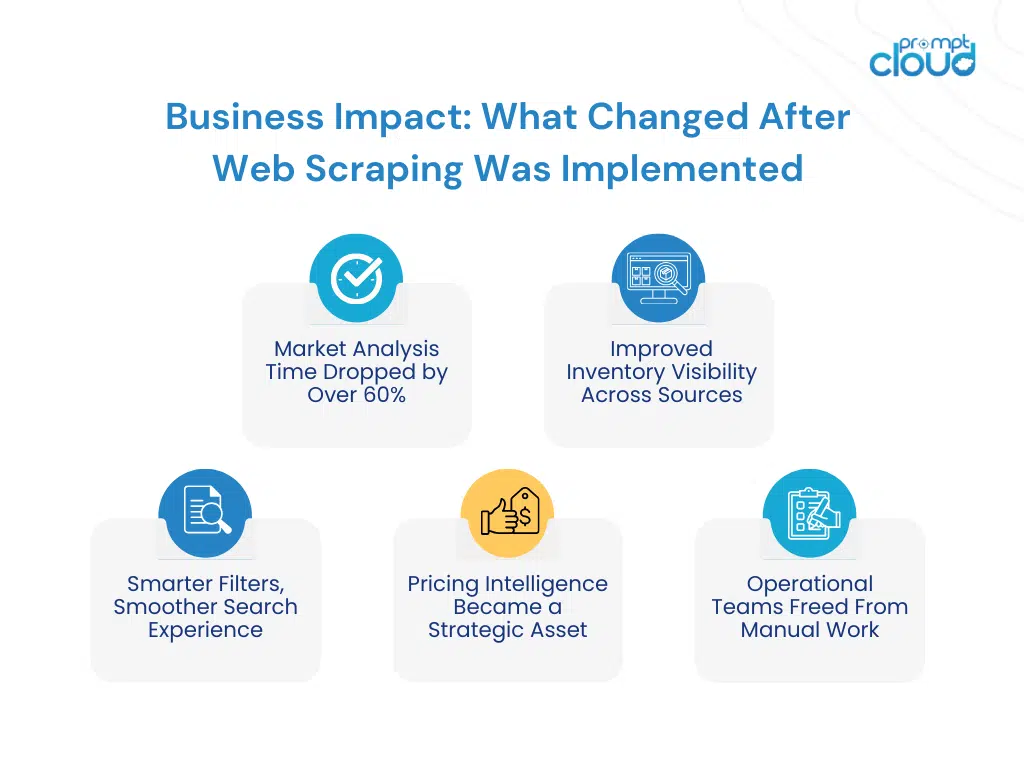

Business Impact: What Changed After Web Scraping Was Implemented

The client didn’t come to us looking for a dashboard. They came to us looking for clarity, the kind that drives better pricing, better search experiences, and smarter decisions across their marketplace.

Once the crawler went live, that clarity showed up fast.

1. Market Analysis Time Dropped by Over 60%

Before PromptCloud, their pricing team needed days, sometimes weeks, to make sense of fluctuating listing trends. Now, they had a near real-time feed of car listings, segmented by city, variant, price, and mileage. Trend reports that used to take hours to build were now generated in minutes, with automobile data they could trust.

That meant faster, sharper pricing strategies and less margin lost to guesswork.

2. Improved Inventory Visibility Across Sources

Previously, their marketplace would show the same vehicle listed twice or, worse, show an expired listing still marked “available.” After implementing our deduplication and real-time update logic, that noise disappeared.

Dealers started receiving cleaner, verified leads. Customers spent more time browsing. Bounce rates on vehicle detail pages dropped by 35%.

Why? Because users finally trusted what they were seeing.

3. Smarter Filters, Smoother Search Experience

Once the client had structured, normalized vehicle specs from across all listings, their product team was able to refine the platform’s filters: engine type, body style, transmission, ownership history, location, and more.

Now, when a customer searched for “diesel SUV under ₹12 lakhs in Bangalore,” they got accurate, relevant results every time. That boosted on-site conversions and made the platform feel more responsive than its competitors.
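With specs normalized, a query like that reduces to plain field comparisons. A minimal sketch, assuming hypothetical normalized fields (`fuel`, `body_style`, `price_inr`, `city`) and treating “under ₹12 lakh” as a price ceiling of ₹1,200,000 (1 lakh is 100,000). Without normalization, the same query would have to guess at free-text spellings such as “CRDi” or “Diesel” in listing titles.

```python
LAKH = 100_000  # 1 lakh = 100,000 rupees


def search(listings: list[dict], *, fuel: str, body_style: str,
           max_price_inr: int, city: str) -> list[dict]:
    """Filter normalized listings on exact field values and a price ceiling."""
    return [
        car for car in listings
        if car["fuel"] == fuel
        and car["body_style"] == body_style
        and car["price_inr"] <= max_price_inr
        and car["city"] == city
    ]
```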

4. Pricing Intelligence Became a Strategic Asset

One of the biggest game-changers? The ability to monitor competitor platforms at scale.

By scraping listings from peer platforms, the client could now see how similar vehicles were priced in real time. They used this to build a dynamic pricing index, adjust promotions, and even help dealers price more competitively, without race-to-the-bottom discounting.

The result? Higher dealer retention, improved negotiation leverage, and a stronger brand position in the marketplace.
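A dynamic pricing index of this kind could start as a simple ratio: a listing's price divided by the median price of comparable competitor listings. The sketch assumes comparables are matched on hypothetical `model`, `year`, and `city` fields and requires at least three comparables before reporting anything (an arbitrary illustrative minimum). A value above 1.0 means the listing is priced above the competitor median; below 1.0, under it.

```python
from statistics import median
from typing import Optional


def comparable_key(listing: dict) -> tuple:
    """Listings with the same model, year, and city are treated as comparable."""
    return (listing["model"], listing["year"], listing["city"])


def price_index(listing: dict, competitor_listings: list[dict],
                min_comparables: int = 3) -> Optional[float]:
    """Listing price divided by the median price of comparable competitor listings."""
    key = comparable_key(listing)
    comps = [c["price"] for c in competitor_listings if comparable_key(c) == key]
    if len(comps) < min_comparables:
        return None  # too few comparables to say anything useful
    return listing["price"] / median(comps)
```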

5. Operational Teams Freed From Manual Work

Before the engagement, three internal team members were manually checking sources, updating spreadsheets, and reconciling mismatched listings. Post-crawler, that entire workflow was automated.

Instead of fighting fires, those same teams were now focused on building new features and expanding into Tier 2 cities. That’s what happens when your data stops being a burden and starts becoming a growth lever.

This wasn’t just a technical upgrade. It was a foundational shift in how the client ran their business. And it all started with the right approach to web scraping services, built specifically for the realities of modern car marketplaces.

Key Takeaways for Decision-Makers in Auto Aggregator Platforms

If you’re in charge of data, product, or pricing at a car marketplace, you know the challenges we’ve just outlined aren’t rare; they’re daily. The question isn’t whether you need data. You already have data. The real question is whether it’s doing any work for you.

Here’s what this project made clear, and what you can apply to your own business today:

1. Structured Data Is a Strategic Advantage

This isn’t just about populating your listings page. When your car data is consistent, real-time, and reliable, everything downstream improves: filters work better, pricing becomes smarter, and customer trust goes up.

The team that owns the cleanest data wins.

2. Custom Scraping Beats Plug-and-Play Tools, Every Time

Generic scraping tools might get you part of the way. But if you’re operating at scale, with multiple sources, dynamic pricing, and complex inventory flows, a custom web scraping service is not a luxury; it’s a necessity.

What worked for eCommerce won’t cut it in car marketplaces.

3. Real-Time Isn’t Optional Anymore

Car inventory moves fast. If your platform shows stale listings, outdated prices, or sold-out stock, customers don’t wait around. They bounce. A crawler that updates every few days isn’t “good enough.” Real-time is the new baseline.

Your users expect it, even if you’re not offering it yet.

4. Compliance and Reliability Are Dealbreakers

Scraping isn’t just about speed. You need to do it right: ethically, legally, and with full transparency. A vendor who can’t answer your legal team’s questions or adapt to anti-bot changes isn’t a vendor you want in your stack.

Stability is just as valuable as scale.

5. The ROI Is Bigger Than You Think

The client didn’t just save time. They improved conversions, dealer retention, and pricing accuracy. They freed up internal teams to focus on growth. In short, the web crawler didn’t just solve a problem; it unlocked performance across the board.

This is what happens when you stop treating data as a backend chore and start using it as a competitive edge.

Why PromptCloud is the Partner of Choice for Automobile Data

There are a lot of data vendors out there. Some sell access to feeds. Others offer generic scraping tools. But when you’re building a car marketplace, something complex, high-volume, and deeply dynamic, you need more than a tool. You need a technical partner who understands the terrain.

That’s what PromptCloud brings to the table.

1. We Build Crawlers for the Real World

We don’t hand you DIY scripts. We build robust, custom scrapers that work at scale, across changing website structures, dynamic pages, anti-bot challenges, and messy, unstructured data. And we keep them running, without your team lifting a finger.

If something breaks, we fix it. If a source changes, we adapt. That’s the job.

2. We Speak Automotive

Over the years, we’ve worked with some of the biggest names in auto listings, fleet intelligence, and vehicle marketplaces. We know how messy this space gets: trim-level inconsistencies, missing VINs, location mismatches, the works. So we don’t just grab automobile data and call it a day. We clean it, structure it, and make sure it means something when your team sits down to use it.

That means less time spent cleaning data, and more time using it.

3. Scale Is Baked In

Whether you’re starting with 5,000 listings or planning to scale up to 500,000, our infrastructure flexes with your needs. Need to add new sources next week? Done. Need to expand into new geos? We’ve already handled internationalized domains, local tax variants, and multilingual marketplaces.

Scale isn’t a stretch; it’s a feature.

4. You Own the Output, We Handle the Heavy Lifting

Your team gets clean, structured car data, delivered where you want it, when you want it, without worrying about crawler maintenance, source downtime, or legal headaches. From compliance to delivery, we manage the complexity so you can stay focused on growth.

No hidden costs. No vendor lock-in. Just data that works.

So, if you’re evaluating web scraping services to power your auto platform, know this: we’ve already built what you’re trying to build. And we’ve made it work for companies that needed it to work right now, not months from now.

When you’re ready to stop fighting your automotive data and start winning with it, PromptCloud is here.

Frequently Asked Questions

1. We’ve tried web scraping tools before. What makes PromptCloud different?

The difference is simple: we don’t hand you a tool and wish you luck. We build a custom crawler that’s engineered for your sources, your data needs, and your scale. No duct-taped scripts, no broken feeds, no generic dashboards. We own the complexity so your team can stay focused on growth.

2. Can PromptCloud handle pricing and listing changes in real time?

Absolutely. We’ve built crawlers that refresh high-priority auto listings multiple times a day, sometimes hourly, depending on how fast your market moves. Whether it’s a dealer changing price tags or a competitor listing new inventory, you see it fast, without having to hunt for it.

3. What about legal concerns? We can’t afford to cut corners.

Neither can we. Every crawler is built with compliance in mind: IP rotation, crawl delays, respect for anti-bot policies, and real-time monitoring are standard. If a source is sensitive, we work within safe boundaries. No scraping chaos. Just clean, ethical data delivery that won’t come back to bite you.

4. Can we start small and scale up later?

Yes, and we designed the system that way. You can begin with a few high-value sources and grow from there. Adding new sources, fields, or delivery methods doesn’t mean rebuilding the whole setup; it’s just a config update on our end.

5. What kind of support do we get after onboarding?

You don’t get a chatbot. You get real people (engineers, account managers, and crawler experts) who stay in the loop with your business. If a site layout changes, we fix it. If your needs change, we adapt. You don’t chase us. We stay ahead of it, so you don’t have to.