Janet Williams

- August 27, 2021

- Blog, Web Scraping

Are you looking for a way to extract data from websites? If so, I have good news for you! This blog post covers the top 9 easiest website ripper copiers. Plenty of tools make this process quick and painless, but only a handful are easy enough for anyone with basic computer skills to use. So, without further ado, here is our list of the top 9 easiest-to-use website ripper copiers!

What Is A Website Ripper?

A website ripper copier extracts data from a website and saves it to an external file. That copy can be kept locally on your computer or uploaded for anyone who wants it, which is incredibly helpful when you need something specific from the web to stay available offline or want a backup of a site. So with that being said, here is our list of the top 9 easiest website rippers:

Top 9 Easy-to-Use Website Ripper Copiers

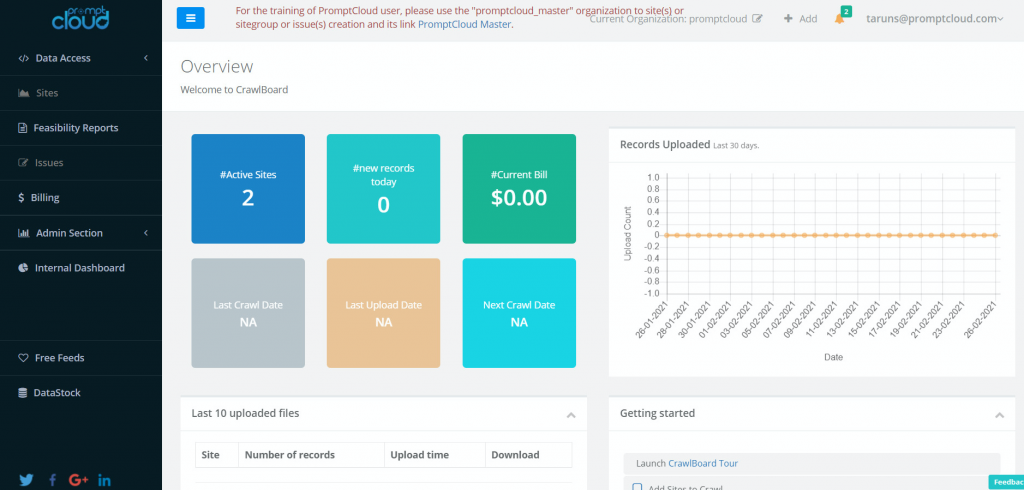

PromptCloud

PromptCloud is an enterprise-grade web crawler with which you can extract data from websites. Just enter a URL and its crawler does the rest: it recognizes the input URL, parses the page into elements, and extracts the relevant data.

HTTrack

This free software is very straightforward and simple to use. Give HTTrack Website Copier a website's address and it downloads the site to a local directory on your computer, recursively fetching the HTML, images, and other files so you can browse the copy offline.

Wget

GNU Wget is a free software package for retrieving files using HTTP, HTTPS, and FTP. It is a non-interactive command-line tool, so it can easily be called from scripts, cron jobs, terminals without X-Windows support, etc. If you are using a Linux terminal, you can use the ‘wget’ command to download the contents of a website to local files.
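Because Wget is non-interactive, it is easy to drive from a script. Here is a minimal sketch that builds a site-mirroring command in Python. The helper name `build_wget_mirror_command`, the example URL, and the `mirror` folder are assumptions made up for illustration; the flags are standard Wget options, but check `wget --help` on your system before relying on them.

```python
import shlex


def build_wget_mirror_command(url, dest_dir="mirror"):
    """Return the argument list for a recursive wget download of `url`.

    Hypothetical helper for illustration; it only builds the command.
    """
    return [
        "wget",
        "--mirror",                         # recursive download with timestamping
        "--convert-links",                  # rewrite links so the copy works offline
        "--page-requisites",                # also fetch images, CSS, and scripts
        "--no-parent",                      # never climb above the starting directory
        f"--directory-prefix={dest_dir}",   # save everything under dest_dir
        url,
    ]


command = build_wget_mirror_command("https://example.com/docs/")

# Print the command as a single shell-safe line for review.
print(shlex.join(command))
```

To actually run it, you could pass `command` to `subprocess.run(command, check=True)` on a machine that has Wget installed. Building the list first makes it easy to review exactly what will be executed.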

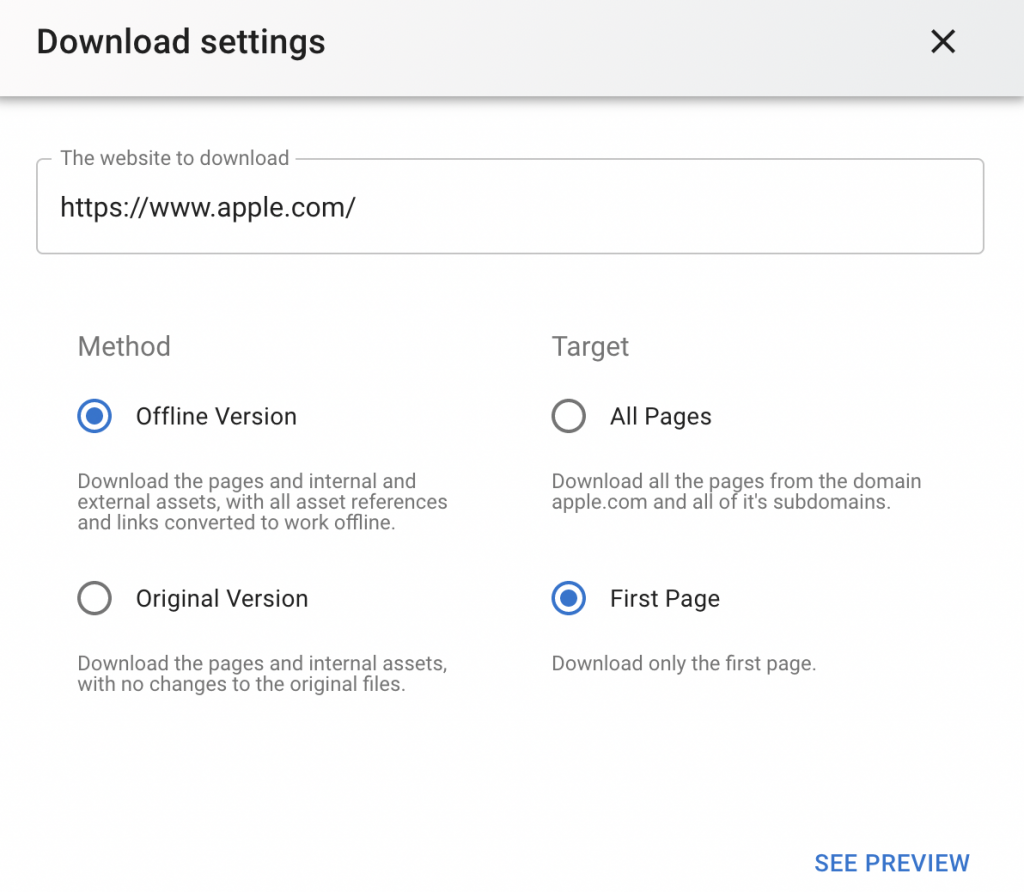

Websitedownloader.io

Websitedownloader.io is a universal web crawler and downloader that extracts data from websites. Although it hasn’t been updated in several years, we’ve included it on this list because many people swear by its ease of use.

Cyotek WebCopy

Cyotek WebCopy is an advanced utility that automatically crawls websites and saves data locally on your PC. Data can be extracted from a single page or multiple pages simultaneously, allowing you to create searchable indexes of websites in a matter of minutes.

Getleft

Getleft is a free, open-source application that automatically extracts links from an input URL and displays them in the app. All you have to do is enter the website’s URL and click “Start”, and Getleft will download the linked pages for offline use later on.
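If you are curious what "extracting links from an input URL" looks like under the hood, here is a rough sketch using only Python's standard library. This is not Getleft's actual code; the `LinkCollector` class, the `extract_links` function, and the sample HTML are all made up for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the absolute URL of every <a href="..."> in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                absolute = urljoin(self.base_url, value)
                if absolute not in self.links:
                    self.links.append(absolute)


def extract_links(html, base_url):
    """Return the unique links found in `html`, in order of appearance."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


# Sample page content (hypothetical), so the sketch runs without a network.
sample_html = (
    '<a href="/about">About</a> '
    '<a href="post.html">Post</a> '
    '<a href="/about">About again</a>'
)
links = extract_links(sample_html, "https://example.com/blog/")
print(links)
```

A real ripper would then download each of these links and repeat the process on every page it fetches, which is where the "recursive" part comes from.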

UnMHT

UnMHT is a Firefox add-on that lets you save any web page as an MHT file. Just right-click on the page you want to copy and choose “Save Page As” from the drop-down menu, then save it with a .mht extension. To view it later, open Mozilla Firefox and go to File > Open File. Enjoy!

grab-site on GitHub

grab-site is a tool designed specifically for backing up websites. Give grab-site a URL and it will recursively crawl the site, writing WARC files along the way. Internally, it uses a fork of wpull to do the crawling.

grab-site gives you:

- A dashboard that shows the URLs being crawled and the number left in the queue.

- The ability to add ignore patterns while a crawl is running, so you can skip junk URLs that would otherwise prevent the crawl from ever finishing.

- An extensively tested default ignore set (global), as well as additional (optional) ignore sets for forums, Reddit, etc.

- Duplicate page detection, which ensures that links on pages with identical content aren’t followed again.
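To make ignore patterns and duplicate page detection a bit more concrete, here is a simplified sketch of both ideas. It is not grab-site's real implementation; the `CrawlFilter` class, its method names, and the sample patterns are assumptions made up for illustration, and hashing the page body is just one simple way to spot identical content.

```python
import hashlib
import re


class CrawlFilter:
    """Decide which URLs to fetch and which pages are repeats."""

    def __init__(self, ignore_patterns=()):
        self.ignore_patterns = [re.compile(p) for p in ignore_patterns]
        self.seen_hashes = set()

    def add_ignore(self, pattern):
        # Patterns can be added at any time, even mid-crawl.
        self.ignore_patterns.append(re.compile(pattern))

    def should_fetch(self, url):
        # Skip the URL if any ignore pattern matches anywhere in it.
        return not any(p.search(url) for p in self.ignore_patterns)

    def is_duplicate(self, body):
        # Two pages with the same bytes produce the same SHA-256 digest.
        digest = hashlib.sha256(body).hexdigest()
        if digest in self.seen_hashes:
            return True
        self.seen_hashes.add(digest)
        return False


crawl_filter = CrawlFilter([r"/calendar/"])
print(crawl_filter.should_fetch("https://example.com/calendar/2021/08"))
print(crawl_filter.should_fetch("https://example.com/post?sort=asc"))

# Add a second pattern after the "crawl" has started.
crawl_filter.add_ignore(r"\?sort=")
print(crawl_filter.should_fetch("https://example.com/post?sort=asc"))
```

In a crawler loop, you would call `should_fetch` before downloading a URL and `is_duplicate` on the downloaded body before following the links on that page.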

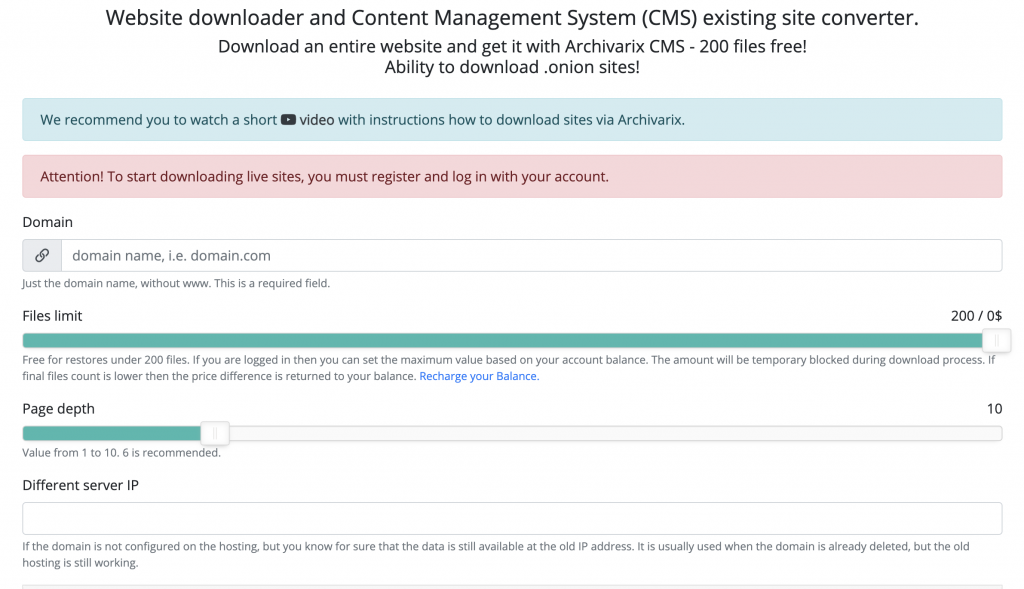

Archivarix

Archivarix is web crawling software that crawls websites and saves their content, including media files such as images and videos, to a local directory. It can also be used as a free online website downloader with an easy-to-use interface.

Conclusion

We hope you’ve found this article informative and that it gives you a better understanding of what website ripper copiers do. If your business needs to quickly copy content from one site onto another, we recommend PromptCloud for its ease of use and affordability. It also offers more features than some other web scraping tools, which is why it sits at the top of our list. Let us know if you have any specific questions about using these types of software or how the process works in general!
