**TL;DR**

Most AI pipelines fail long before the model sees any data. They fail at the point where raw web inputs do not follow a predictable structure. One site calls it “price,” another calls it “current_amount,” a third uses a hidden field that only appears after running JavaScript. Without a schema, nothing lines up. Fields drift. Mapping breaks. Models inherit inconsistencies they cannot detect. This is why AI-ready schemas matter. This blog walks through how to design dependable AI-ready schemas, how metadata taxonomy fits in, and how teams standardize structure at scale for real-world pipelines.

An Introduction to AI-Ready Schema Templates in 2025

When schemas are weak, every downstream step becomes harder. Cleaning takes longer. Validation becomes messy. Training signals become noisy. Small inconsistencies turn into unpredictable behaviour. The goal of this blog is to give you a grounded way to think about schema templates and standardization. Not complicated theory. Just practical structures that make your data easier to maintain, automate, and trust.

Why AI Pipelines Depend on Strong Schemas

AI models are only as reliable as the structure of the data they receive. When fields shift, formats change, or values arrive inconsistently, the model does not fail loudly. It fails quietly. It learns patterns that are not real, misses signals that matter, and struggles to generalize across sources. A strong schema prevents this by giving your entire pipeline a single source of truth.

A schema is more than a field list.

- It defines relationships, formats, expectations, and boundaries.

- It tells the pipeline what should exist and what should never appear.

- It gives the model a stable foundation even when sources keep changing.

Here is why strong schemas matter in every AI-ready workflow.

They remove ambiguity.

A clear schema ensures “price,” “sale_price,” and “discountedValue” do not drift into three unrelated fields. The model gets one meaning instead of three interpretations.

They stabilize transformations.

Mapping, normalization, enrichment, and validation all depend on predictable structure. When the schema is strong, transformations stay consistent across every dataset version.

They improve data quality early.

Most errors surface at ingestion. A schema catches type mismatches, missing fields, extra fields, broken tags, and formatting inconsistencies before they affect training.

They support reproducibility.

A stable schema lets you recreate a dataset, repeat a training run, or roll back a pipeline version without guessing how the structure looked last month.

They make scaling safer.

Adding new sources becomes easier when they must follow the same schema. You avoid the silent drift that usually appears when more sites join the pipeline.

They help models learn context.

Schema structure teaches the model which fields belong together, which attributes describe the same entity, and how similar records should align.

Without a strong schema, an AI pipeline becomes a guessing game. With one, the pipeline becomes predictable, explainable, and easier to maintain. The next section breaks down the components every AI-ready schema should include.

Core Components of an AI-Ready Schema

An AI-ready schema is not just a set of fields. It is a structured agreement between your crawlers, your validation rules, your storage layer, and your models. When each component is defined clearly, the entire pipeline moves with less friction. When one layer is vague, every downstream process becomes harder to trust. To design a schema that holds up in real AI workflows, you need five core components. These components work together to keep structure stable and meaning consistent.

Component one: Field definitions

Every field needs a clear description. Not just “price” but whether it is a numeric value, whether currency is included, and whether it reflects full price or discounted price. The more clarity you give here, the fewer assumptions your pipeline makes later.

Component two: Data types and constraints

A strong schema defines whether a field is numeric, string, boolean, array, or nested object. It also defines constraints such as required, optional, or conditionally required. These constraints catch drift early.

Component three: Relationships and grouping

Fields rarely exist alone. Product data has pricing attributes, content attributes, and metadata attributes. Job data has role fields, skill fields, and compensation fields. Grouping fields gives your pipeline context and teaches the model which parts move together.

Component four: Valid values and controlled vocabularies

Categories, statuses, currencies, and rating scales should come from a closed list. Open lists cause unpredictable variation and inconsistent mapping. When vocabulary is controlled, your downstream tasks remain stable even as sources change.

The next section explains how schema templates help standardize structure across large datasets.

If you're evaluating whether to continue scaling DIY infrastructure or move to govern global feeds, this is the conversation to have.

Why Schema Templates Matter for Web Data at Scale

Schema templates are the practical way teams bring order to web datasets that come from dozens or hundreds of sources. Without templates, every new site introduces fresh variations. Field names shift. Formats change. Metadata is inconsistent. Over time, your pipeline ends up holding multiple versions of the same information with no guarantee of consistency.

A schema template removes that uncertainty. It gives every source the same structure to follow. It creates a stable target that incoming data must match.

Templates matter most when scale increases. Web data is unpredictable, and no two sites describe the same concept the same way. A template standardizes how those variations map into your schema. With one template per entity type, the entire pipeline becomes more manageable.

Here are the reasons templates become essential as datasets grow.

They prevent silent drift.

When a website changes a field name or drops a value, the template catches the mismatch before it passes downstream.

They reduce onboarding time for new sources.

Instead of designing mappings from scratch, you map each new source into the existing template. This shortens development cycles and keeps structure consistent.

They ensure uniform quality checks.

Validation rules, type checks, and field constraints stay the same across every dataset. You do not rewrite validation for each new site.

They simplify transformations and enrichment.

A single template means enrichment logic does not need custom branches for every source. This avoids the complexity that usually slows pipelines down.

They support versioning and reproducibility.

When schema templates evolve in controlled versions, you can trace how datasets changed across months or years.

They reduce model confusion.

Standard structure gives models predictable input formats. The model learns relationships more efficiently when each record follows the same shape.

A strong template is not rigid. It is structured but adaptable. It supports optional fields, conditional fields, and future expansion. The next section walks through how to design templates that work across diverse sources without becoming over engineered.

How to Design Schema Templates That Hold Up Over Time

A good schema template does two things at once. It gives your pipeline a strict, dependable structure, and it stays flexible enough to absorb changes in websites, formats, and new fields. The biggest mistake teams make is treating templates as frozen blueprints. Web data does not behave that way. Templates must be structured but adaptable.

A strong template feels predictable to machines and forgiving to humans. It defines what must exist, what may exist, and what conditions control those boundaries.

The best schema templates evolve through a simple design philosophy:

- Start with the minimum stable core.

- Add optional fields around it.

- Create room for safe expansion.

- Version every change clearly.

This approach prevents both extremes: over engineering and under specifying. Below is a clear breakdown of what makes a schema template durable.

Table 1 — Qualities of a Strong AI-Ready Schema Template

| Quality | What It Means | Why It Matters |

| Stability | Core fields rarely change and act as the backbone | Keeps models grounded and prevents breaking changes |

| Flexibility | Optional and conditional fields absorb variations | Handles unpredictable web structures without redesign |

| Clarity | Each field has a clear meaning and scope | Reduces assumptions across teams and transformations |

| Constraint Control | Types, required flags, and valid values are explicit | Stops silent drift and catches upstream errors early |

| Extensibility | Safe pathways for adding new fields or metadata | Allows long term scaling without fragmenting structure |

| Versioning | Every template update is tracked and documented | Supports reproducibility and lineage over time |

How to build templates aligned with these qualities

Define a mandatory core

Identify the fields that describe the entity in every scenario. For product data this may be title, category, price, currency. For job data it may be role, skills, location, experience.

Add optional and conditional fields

Templates should not reject data simply because a site omits non critical attributes. Optional fields keep the schema practical.

Design for multi source input

Do not tailor your template to one site. Build it around the universal structure of the entity, not the quirks of any specific source.

Apply consistent naming

A template with intuitive and uniform naming conventions reduces confusion, especially as more sources are added.

Include metadata early

Source, timestamp, region, parser version, and crawl conditions belong inside the template. Metadata makes debugging much easier.

Version everything

Schema templates must evolve transparently. Record every change, including added fields, removed fields, or type changes. This ensures downstream models can be linked to the exact template version they used.

A durable schema template is not static. It is stable and adaptable at the same time. The next section explains how data standardization turns these templates into predictable, AI-ready flows.

The Role of Data Standardization in AI-Ready Pipelines

Websites use different naming conventions. Some send numbers as strings. Others hide metadata in script tags or embedded JSON. Standardization turns this chaos into predictable structure. Think of standardization as the enforcement layer. The schema tells you what should exist. Standardization makes sure it actually does.

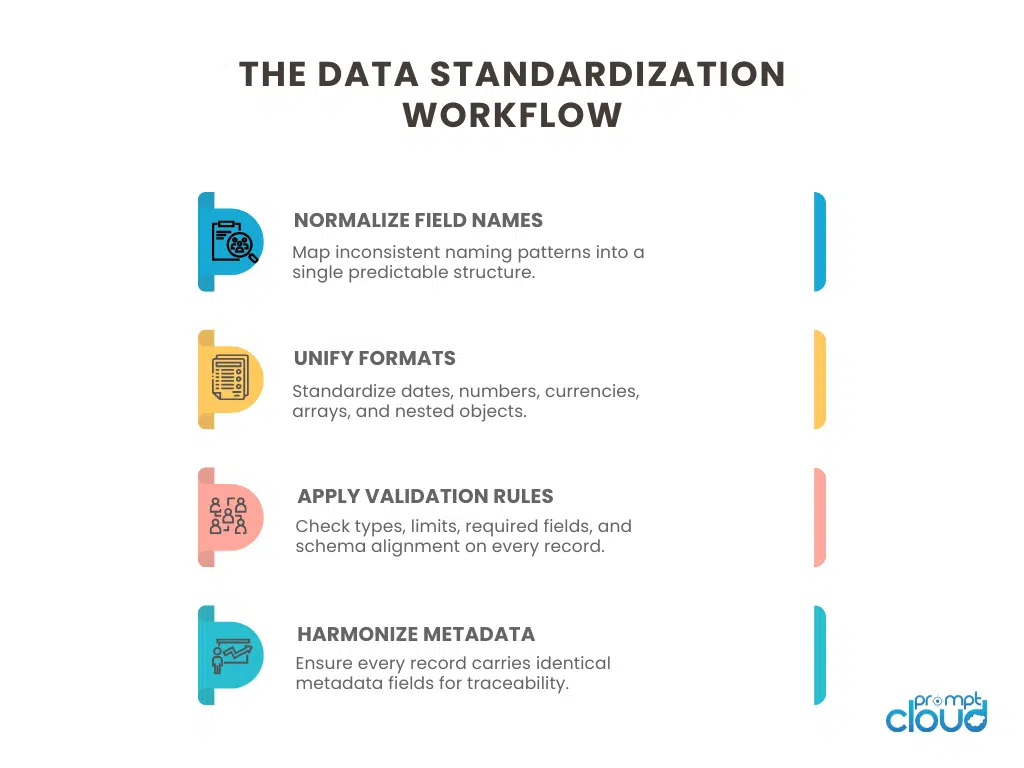

Figure 1. Step-by-step workflow for designing AI-ready schema templates that stay stable over time.

A standardization workflow handles differences that appear naturally across websites and APIs. Rather than rewriting logic for every new source, you normalize everything into one predictable format that the model can rely on. This reduces downstream friction and keeps your training signals clean.

Here is how standardization supports AI-ready pipelines.

It aligns formats across sources.

Dates, numeric fields, currencies, dimensions, and units need consistent formatting. Standardization removes differences that creep in from source variations.

It stabilizes naming and structure.

Fields like “current_price,” “price_value,” and “listingPrice” should always become the same final field. This is what prevents mapping explosions.

It ensures consistent metadata.

This consistency keeps lineage, filtering, and auditing reliable.

It improves model learning

Models learn best when structure does not change. Standardization gives the model clear signals, especially when training on multi-source datasets.

It supports long-term maintenance.

When new sites are added, the same standardization rules apply immediately. This prevents schema fragmentation over time.

Standardization is not a one-time cleanup step. It is an ongoing practice that strengthens every stage of the pipeline. Without it, even the best schemas break under the weight of inconsistent inputs. With it, AI pipelines stay predictable, aligned, and far easier to scale.

Metadata Taxonomy: Giving Structure Meaning

A schema defines the fields in your dataset. Standardization keeps them consistent. Metadata taxonomy adds the meaning behind those fields. Without metadata, your data has structure but no context. With metadata, every record carries information about its origin, environment, version, and conditions of collection. This context is what makes AI pipelines traceable, debuggable, and easier to trust.

Figure 2. Core steps of the data standardization process used to align multi-source web data into one predictable structure.

Metadata taxonomy is simply the structured classification of all the descriptive information you attach to a dataset. It answers the questions your schema cannot answer alone. Where did this data come from? How was it collected? Which parser version processed it. Which region or device produced the content. When each part of the record was last updated. This context makes the dataset operationally useful.

Here is how metadata taxonomy strengthens AI-ready data flows.

It improves dataset traceability.

When every record carries standardized metadata, you can trace errors back to specific sources, dates, or versions without manual investigation.

It provides clarity for downstream consumers.

Analysts, data engineers, and model builders understand exactly what they are working with. They are not guessing which scraper, which region, or which cycle generated a value.

It supports reproducibility.

When training data is tied to specific crawl times, schema versions, and pipeline runs, you can rebuild the exact environment that produced a model, even months later.

It creates structure around change.

Metadata captures the conditions under which data was collected. If a site redesign breaks extraction, the metadata shows when behaviour changed.

It simplifies compliance.

Retention rules, source policies, and collection notes become part of the dataset’s metadata layer. This keeps audits clean and reduces confusion.

A good metadata taxonomy should be simple enough to apply everywhere, but detailed enough to provide real insight. The goal is not to add noise. The goal is to add context the AI pipeline needs to behave predictably. In the next section, we bring everything together into a practical process for building AI-ready schemas that scale.

How to Build AI-Ready Schemas: A Practical 5 Step Framework

Designing schemas is not difficult. Maintaining them is. An AI-ready schema must hold steady across hundreds of sources, unpredictable HTML layouts, partial data, and multiple model versions. The easiest way to achieve that stability is through a clear, repeatable process. This framework keeps structure consistent while giving you enough flexibility to handle real-world variation.

Below is a five step approach teams use to build schemas that last.

Step one: Define the core entity

Start by deciding what the record represents. A product, a job listing, a property, a vehicle, a review, or an article. The core entity decides which fields matter most and which ones are optional. Without this clarity, schemas become too broad and lose meaning.

Step two: Identify required, optional, and conditional fields

Required fields describe the entity. Optional fields enrich it. Conditional fields appear only in some scenarios. This structure helps your pipeline accept variation without losing consistency. It also gives your validation rules a clear map to follow.

Step three: Establish naming, types, and constraints

Keep names intuitive. Keep types strict. Keep constraints explicit. This prevents silent drift and makes downstream transformations predictable. Data types should not change, even if values do.

Step four: Add metadata and taxonomy early

Metadata such as source, region, timestamp, and parser version belongs inside the schema, not outside it. Taxonomy adds meaning by showing how the record fits into categories, hierarchies, or classification systems. This context becomes vital during debugging and reproducibility.

Step five: Version the schema and track every change

A schema without versioning will break your pipeline. Every update needs a version number, a change note, and a migration plan. Even adding one field or updating a type must be tracked. Versioning is what keeps your lineage clear and your training data reproducible.

A schema built with this framework does not stand still. It evolves in controlled increments. It stays stable without becoming rigid, and flexible without losing structure. With this in place, your AI pipeline becomes easier to scale, easier to debug, and far more predictable under changing web conditions.

The Final Cut

AI pipelines break when structure breaks. It is rarely the model that fails first. It is the data underneath it. When fields shift, formats change, or metadata disappears, the pipeline becomes unpredictable in ways that are difficult to trace. AI-ready schemas exist to prevent this. They anchor your data in a stable, well defined structure that holds up even as websites evolve and new sources join the system.

Strong schemas do more than keep fields organized. They reduce noise. They prevent drift. They make validation easier, mapping more reliable, and model training far more consistent. When schema templates, standardization rules, and metadata taxonomy work together, your AI pipeline gains a long term foundation rather than a loose collection of custom mappings.

The real value shows up over time.

- You onboard new sources without reshaping your pipeline.

- You update parsers without breaking downstream steps.

- You handle new fields without rewriting logic.

- You reproduce any dataset version without guessing.

- You keep your models grounded even as the world changes around them.

An AI-ready schema is not a technical luxury. It is an operational requirement. It gives your team confidence, your pipeline clarity, and your models the structure they need to perform at scale. When your dataset has a stable shape, every layer built on top of it becomes easier to trust.

Further Reading From PromptCloud

Here are four related resources that connect closely with this topic:

- Understand safe collection practices that influence clean schema design in How to Read and Respect Robots.txt

- See how market signals can be structured into consistent templates in Market Sentiment Using Web Scraping

- Compare dataset formats and how they align with schema driven pipelines in JSON vs CSV for Web Crawled Data

- Follow a practical extraction workflow that aligns with standardization practices in Google AdWords Competitor Analysis With Web Scraping

A clear, practical look at data standardization and schema driven design is available in W3C’s Data on the Web Best Practices which outlines structured data principles widely used across the industry.

If you're evaluating whether to continue scaling DIY infrastructure or move to govern global feeds, this is the conversation to have.

FAQs

1. Why do AI models perform better with schema aligned data?

Models learn patterns more clearly when structure does not shift across sources. Schema alignment removes inconsistencies that cause confusion, drift, and unreliable outputs.

2. How often should schemas be updated in a web data pipeline?

Schemas evolve slowly. Updates happen only when new fields appear or source behaviour changes. Each update must be versioned so you can reproduce earlier datasets without guessing.

3. What is the difference between a schema and a schema template?

A schema defines the fields and types. A schema template shows how those fields should be applied across all sources. Templates prevent fragmentation and keep structure uniform during ingestion.

4. How does metadata taxonomy support debugging?

Metadata gives context. When you know the source, region, parser version, and timestamp for each record, you can find the exact point where an error or drift began.

5. Is data standardization optional if the schema is well designed?

No. A schema defines what data should look like. Standardization ensures incoming data actually follows that shape. Without standardization, schemas break under real world variation.