**TL;DR**

Synthetic data fills gaps, expands rare patterns, and boosts volume when real examples are limited. Real-world web data gives models grounding, context, and natural variability. The strongest AI training pipelines rely on both: real data for truth, synthetic data for controlled expansion. This blog breaks down how they differ, where each one works well, and how to combine them without weakening model integrity.

The Real-World Web Data Problem

Synthetic data and real-world web data solve different problems. Both come with clear strengths and clear limitations. And both play a role in how stable and accurate an AI system can become.

Real-world web data gives models something synthetic data never fully captures. It carries natural noise, unexpected phrasing, edge-case behaviour, and real patterns humans produce without thinking. This makes it a powerful grounding layer. When a model learns from real data, it understands how information appears in the wild rather than how a generator imagines it.

Synthetic data plays a different role. It adds volume when the dataset is too small. It creates balanced examples when one class dominates. It fills missing scenarios that rarely happen in production.

What Synthetic Data Actually Is

Synthetic data is information generated by algorithms instead of collected from the real world. It can be produced using rule-based systems, probabilistic models, simulators, or modern generative models. The core idea is simple. You create data that behaves like reality without depending on real people, real websites, or real transactions. This makes synthetic data flexible and safe to scale, especially when the goal is to increase coverage or create examples that rarely show up in live environments.

Synthetic data is not just random output. It works only when it follows the structure, logic, and statistical patterns of the domain you care about. Good synthetic datasets borrow the shape of real data. Great ones also mimic its variability, boundary cases, and contextual clues. When used well, synthetic data helps fill missing slices, balance imbalanced classes, and create richer training signals.

Here are the types developers use most often.

Pattern-based synthetic data

Rules, templates, or structured patterns generate predictable samples that match a known schema.

Statistical synthetic data

Models learn the distribution of real data and generate new rows that fit the same statistical shape.

Simulation-driven synthetic data

Synthetic examples come from a controlled environment, such as a pricing simulator or behaviour simulator.

Generative synthetic data

Models such as GANs or LLMs create new examples by learning from real-world samples and producing original variants.

Augmented synthetic data

Developers start with real data and apply controlled modifications, distortions, or expansions to increase coverage.
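To make three of these types concrete, here is a minimal Python sketch of pattern-based, statistical, and augmented generation. It is illustrative only: the price list, the brand and item slot values, and the specific edits are hypothetical, and the statistical generator assumes a normal distribution is a reasonable fit, which real price data often is not. Simulation-driven and generative (GAN or LLM) approaches are not shown because they depend on a simulator or a trained model.

```python
import random
import statistics

# Hypothetical real sample: prices observed on product pages.
REAL_PRICES = [19.99, 24.50, 22.00, 18.75, 27.30, 21.10]


def pattern_based(n, rng):
    """Rule/template generator: fills a fixed schema from hand-written slot values."""
    brands = ["Acme", "Nova", "Zenith"]  # hypothetical slot values
    items = ["kettle", "blender", "toaster"]
    return [
        {"title": f"{rng.choice(brands)} {rng.choice(items)}", "in_stock": rng.random() < 0.8}
        for _ in range(n)
    ]


def statistical(n, real, rng):
    """Fits a normal distribution to real values and samples new ones (floored at 0.01)."""
    mu, sigma = statistics.mean(real), statistics.stdev(real)
    return [round(max(0.01, rng.gauss(mu, sigma)), 2) for _ in range(n)]


def augmented(real_titles, rng):
    """Starts from real titles and applies small controlled edits: an abbreviation, then a casing change."""
    variants = []
    for title in real_titles:
        edited = title.replace(" with ", " w/ ")
        variants.append(edited.lower() if rng.random() < 0.5 else edited.upper())
    return variants


rng = random.Random(42)
print(pattern_based(2, rng))
print(statistical(3, REAL_PRICES, rng))
print(augmented(["Steel Kettle with Timer"], rng))
```

Note that only the augmented generator touches real rows directly, which is why it tends to stay closest to real phrasing.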

Synthetic data becomes useful when it adds clarity or fills a gap. It becomes harmful when it tries to replace the natural complexity of real signals. The next section breaks down what real-world web data offers that synthetic generators still struggle to match.

Turn your scraping pipeline into something you can trust under change with PromptCloud

What Real-World Web Data Provides That Synthetic Data Cannot Replace

Real-world web data gives AI models something synthetic data struggles to reproduce. It carries the messy, unpredictable, organically created signals that come from millions of users, sellers, brands, writers, and platforms. This natural variation is what helps models understand how information appears outside controlled environments. When you train an LLM or classifier on real data, you expose it to a sample of the actual distribution of behaviour, phrasing, layout, and context.

Real data also captures edge cases you never think to create. A product title that mixes three languages. A job listing with an unusual structure. A review that contains sarcasm, complaints, praise, and storytelling in one sentence. These patterns appear naturally online. Synthetic generators rarely produce them unless they are explicitly instructed or fine-tuned to do so.

Another advantage is ground truth value. Real data reflects what actually happened. Prices shown during a sale. Availability during a stock-out. True user sentiment across thousands of reviews. Real distribution shifts over time. You cannot invent this behaviour with a generator. Models trained only on synthetic data will often fail when exposed to true market dynamics.

Here are the qualities real-world web data provides reliably.

Authentic phrasing and tone

Users write in inconsistent, emotional, multilingual ways that synthetic generators rarely mimic with accuracy.

Organic diversity

Web data covers countless products, industries, forums, layouts, and structures without any manual tuning.

Real distribution shifts

Markets change. User behaviour changes. Platforms redesign layouts. These changes become part of real data naturally.

True edge cases

Odd patterns, noisy formatting, and rare events appear only in actual usage, not in rule-based synthetic generation.

Unscripted complexity

Web data contains mistakes, abbreviations, cultural expressions, and structural noise. Models learn resilience from this.

Real-world data is the grounding layer. Synthetic data becomes powerful only when it builds on this foundation. The next section looks at how teams evaluate the strengths of each data type so they can choose the right one at the right stage of training.

Strengths of Synthetic Data vs Strengths of Real-World Web Data

Synthetic and real-world web data solve very different problems. Most teams get better results when they stop comparing them as competitors and look at how each one contributes to the training pipeline. Synthetic data expands what you already have. Real data gives you the grounding and complexity you cannot invent.

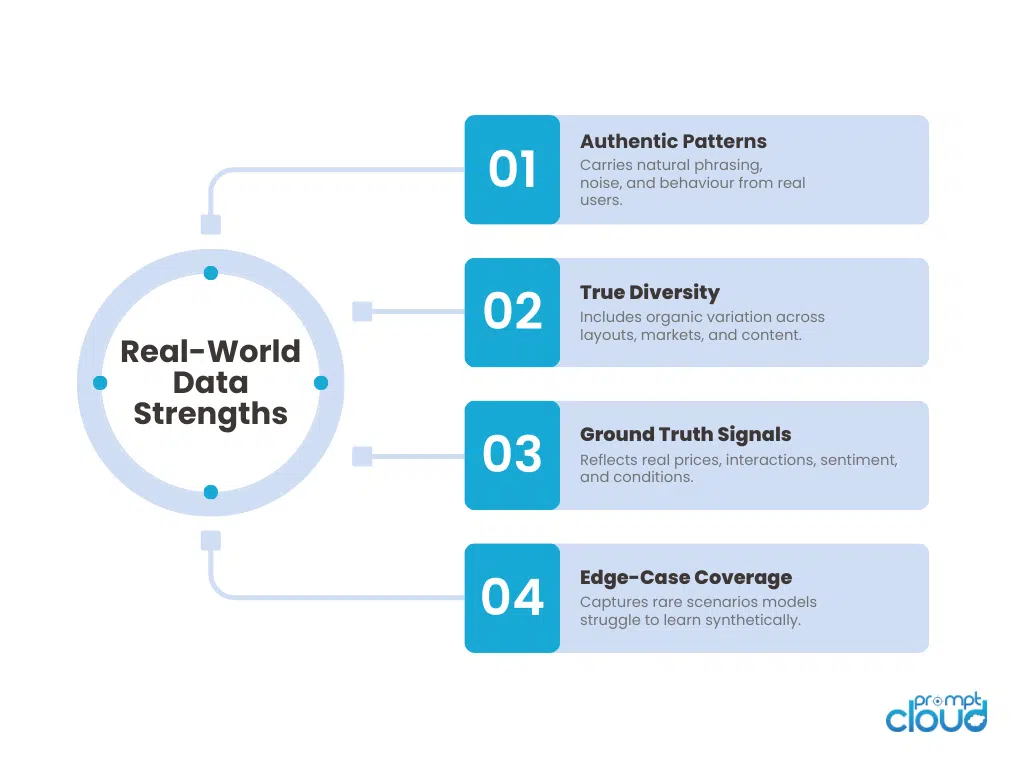

Figure 1. Key strengths of real-world web data that synthetic generation cannot fully replicate.

With synthetic datasets, you can generate balanced classes, increase rare examples, fix coverage gaps, and avoid sensitive information. Synthetic data is also easier to produce at scale because it does not depend on the availability or behaviour of external websites. When the goal is to increase variety or run safe experiments, synthetic data gives you flexibility.

Real-world web data is strongest when you need truth. It carries natural patterns, time-based shifts, unexpected phrasing, and genuine diversity. It is also the only reliable source of ground truth.

Synthetic data excels at:

- Filling missing scenarios developers did not collect

- Creating safe examples without sensitive or restricted content

- Balancing classes when one label dominates

- Controlling structure so the model sees consistent patterns

- Increasing dataset size without extra scraping

Real-world web data excels at:

- Teaching the model how information appears in actual usage

- Capturing natural distribution, noise, and diversity

- Exposing rare edge cases and unexpected patterns

- Reflecting true market behaviour and time-based variation

- Serving as the factual grounding layer for all training

Figure 2. Core advantages of synthetic data for controlled AI training and targeted augmentation.

Synthetic data brings coverage. Real data brings credibility. Together they create a richer, more stable training signal. The next section explains why most high-performing models use a hybrid approach rather than relying on either type alone.

Why Most AI Pipelines Use a Hybrid Model (Real + Synthetic)

Most high-performing AI systems do not choose between synthetic data and real-world web data. They combine both. Real data provides grounding, edge cases, noise, and authenticity. Synthetic data fills blind spots, increases coverage, and helps shape the dataset in ways real data alone cannot. A hybrid model gives you the flexibility of synthetic generation without losing the realism that keeps models stable.

A simple pattern appears in mature AI teams. They start with real-world data to establish truth. Then they inject synthetic data to stretch, balance, or stress test the model. Synthetic data fills small gaps. Real data prevents artificial drift. Synthetic data balances labels. Real data keeps the model aligned with natural distribution.

Here is a clear breakdown of why the two work best together.

Table 1 — How Synthetic and Real Data Complement Each Other

| Training Need | Real-World Web Data | Synthetic Data |
|---|---|---|
| Grounding and truth | Strong. Reflects actual behaviour and market conditions. | Weak. Depends entirely on generated assumptions. |
| Rare patterns | Limited. Rare cases appear unpredictably. | Strong. Can generate controlled variations. |
| Bias correction | Hard. Bias is embedded in real sources. | Strong. Can create balanced distributions. |
| Volume scaling | Medium. Requires crawling at scale. | Very strong. Can generate large volumes rapidly. |
| Complexity and noise | Very strong. Captures natural language and layout diversity. | Moderate. Can imitate but not fully reproduce. |
| Safety and privacy | Variable. Must follow compliance rules. | Strong. Can avoid sensitive fields entirely. |
| Schema consistency | Variable. Websites change formats often. | Strong. Always follows your chosen structure. |

How teams usually combine them

Step one: Start with a real dataset

This becomes the grounding layer. You clean it, structure it, and build your schema from it. The model learns how the domain behaves in the wild.

Step two: Identify gaps or imbalances

Look for missing classes, sparse categories, rare behaviours, or uneven labels. These are the places synthetic data helps most.

Step three: Generate synthetic expansions

Produce additional examples that follow your schema but introduce variety. Done carefully, this increases coverage without distorting the natural distribution.

Step four: Blend both datasets carefully

Keep synthetic data as an additive layer, not the primary source. The ratio depends on the task, but real data should always anchor the distribution.

Step five: Validate with real data only

Synthetic data supports training. Real data determines whether the model can survive real environments.
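One way to enforce "validate with real data only" is to tag every row with its source and draw the validation set exclusively from real rows. The sketch below assumes rows are plain dicts; the `source` field name, the function name, and the 20 percent hold-out are illustrative choices, not a standard.

```python
import random


def split_with_provenance(real_rows, synthetic_rows, val_fraction=0.2, seed=0):
    """Hold out validation rows from real data only; synthetic rows only ever reach training."""
    rng = random.Random(seed)
    real = [dict(r, source="real") for r in real_rows]  # tag provenance (hypothetical field name)
    rng.shuffle(real)
    n_val = max(1, int(len(real) * val_fraction))
    val = real[:n_val]
    train = real[n_val:] + [dict(r, source="synthetic") for r in synthetic_rows]
    rng.shuffle(train)
    return train, val


real = [{"text": f"real review {i}"} for i in range(10)]
synthetic = [{"text": f"generated review {i}"} for i in range(3)]
train, val = split_with_provenance(real, synthetic)
print(len(train), len(val))  # 11 2
```

Keeping the `source` tag on every row also makes later audits and drift checks possible without re-deriving where each example came from.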

When Synthetic Data Hurts Performance (and How to Avoid It)

Developers often learn the limits of synthetic data the hard way: training metrics on synthetic-augmented data look strong, then performance drops during real-world testing.

The problem is not the synthetic data itself. The problem is using it without boundaries. Models learn whatever they see in volume. If the synthetic portion becomes too dominant or too artificial, the model starts fitting to invented behaviours instead of natural ones. Here are the most common failure modes and how to avoid them.

Table 2 — Common Synthetic Data Pitfalls and Safe Practices

| Pitfall | What Goes Wrong | How to Avoid It |
|---|---|---|
| Overuse of synthetic samples | Model learns artificial patterns that do not exist in real data. | Cap the synthetic share of the training set; many teams keep it around 10 to 30 percent. |
| Poor schema alignment | Synthetic examples include fields or formats the real data never uses. | Produce synthetic data only from validated schemas and mappings. |
| Unrealistic variability | Synthetic values or variations fall outside the range the real world actually displays. | Anchor generation to real distribution statistics. |
| Lack of grounding | Synthetic data tries to replace the complexity of real data. | Always train with a real-data foundation before adding synthetic layers. |
| Label drift | Generators create noisy or inconsistent labels over time. | Validate synthetic labels with the same checks used for real data. |
| Ignoring time-based behaviour | Synthetic data misses seasonal or temporal patterns. | Use real-world timelines as the backbone for synthetic expansions. |
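The "anchor generation to real distribution statistics" row can be turned into an automated gate. This minimal sketch compares one numeric field in a synthetic batch against the real data: it measures how many synthetic values fall outside the observed real range (padded by a tolerance) and how much wider the synthetic spread is. The function name and all thresholds are hypothetical defaults you would tune per field.

```python
import statistics


def variability_report(real, synthetic, tolerance=0.1, max_outside_share=0.05, max_stdev_ratio=1.5):
    """Flags synthetic values that are more varied than anything the real data shows."""
    lo, hi = min(real), max(real)
    pad = tolerance * (hi - lo)  # allow a little headroom beyond the observed range
    outside = [v for v in synthetic if v < lo - pad or v > hi + pad]
    outside_share = len(outside) / len(synthetic)
    real_sd = statistics.stdev(real) or 1e-12  # guard against a constant real column
    stdev_ratio = statistics.stdev(synthetic) / real_sd
    return {
        "outside_share": outside_share,
        "stdev_ratio": stdev_ratio,
        "ok": outside_share <= max_outside_share and stdev_ratio <= max_stdev_ratio,
    }


real_prices = [10.0, 12.0, 11.0, 13.0, 9.0]
print(variability_report(real_prices, [10.5, 11.5, 12.0]))  # "ok" is True
print(variability_report(real_prices, [10.0, 11.0, 50.0]))  # "ok" is False
```

A range check like this only covers one column at a time; it will not catch implausible combinations of individually plausible values.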

Signals your synthetic data is hurting the model

Accuracy on a blended validation set rises but real-world performance falls

A sign that the model has learned artificial structure rather than natural behaviour.

The model fails on low-frequency real patterns

Synthetic expansion may have drowned out rare real cases rather than enhancing them.

Outputs feel “too clean” or templated

Synthetic data often lacks the noise, emotion, and clutter seen in web data.

Generalization weakens when new sources are added

Over-reliance on synthetic samples can reduce the model’s resilience to unfamiliar formats.

Safe synthetic workflows teams follow

Use synthetic data as seasoning, not the base.

Real-world data should remain the reference point for distribution, tone, and complexity.

Generate synthetic variants directly from real examples.

This maintains grounding and keeps the generative layer aligned with true inputs.

Regularly test with a real-only validation set.

If synthetic data introduces distortions, the drop will appear immediately.

Version synthetic datasets just like real ones.

This makes drift visible and allows teams to roll back synthetic expansions easily.
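Versioning does not require heavy tooling to start. A minimal sketch, assuming rows are JSON-serializable dicts: hash the canonical content of each dataset and record both hashes, the generator settings, and the synthetic share in a manifest. The manifest fields and the `generator_config` contents are hypothetical.

```python
import hashlib
import json


def dataset_version(rows):
    """Order-independent content hash: identical rows always give the same version id."""
    canonical = sorted(json.dumps(r, sort_keys=True) for r in rows)
    return hashlib.sha256("\n".join(canonical).encode("utf-8")).hexdigest()[:12]


def build_manifest(real_rows, synthetic_rows, generator_config):
    """Records what went into a training run so a synthetic expansion can be diffed or rolled back."""
    total = len(real_rows) + len(synthetic_rows)
    return {
        "real_version": dataset_version(real_rows),
        "synthetic_version": dataset_version(synthetic_rows),
        "generator_config": generator_config,  # hypothetical: whatever settings your generator uses
        "synthetic_share": round(len(synthetic_rows) / total, 3) if total else 0.0,
    }


real = [{"title": "Steel kettle", "label": "in_stock"}]
synthetic = [{"title": "steel kettle w/ timer", "label": "in_stock"}]
print(json.dumps(build_manifest(real, synthetic, {"seed": 7, "target_per_class": 500}), indent=2))
```

Storing one manifest per training run means a regression can be traced to a specific synthetic version and rolled back by retraining with the previous one.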

Building a Combined Real + Synthetic Training Pipeline

A hybrid pipeline is not just a mix of two datasets. It is a structured workflow that defines when real data leads, when synthetic data supplements, and how both flow into the model without distorting distribution. Teams that succeed with hybrid training treat it as a layered process rather than a loose merge.

The goal is simple. Real data provides grounding. Synthetic data provides expansion. The pipeline preserves both.

Here is how most production teams structure the combined workflow.

Step one: Begin with a real-world baseline

Start by collecting and structuring a real dataset that reflects the natural behaviour of users, marketplaces, products, forums, or listings. This becomes the foundation. Clean it, normalize it, and validate schema alignment. The baseline gives you the “truth layer” the model must learn before any synthetic expansion occurs.

Step two: Identify gaps and imbalances

Use simple diagnostics to find missing classes, rare edge cases, uneven label distributions, and sparse categories. These are the signals that guide synthetic generation. Without this analysis, teams often generate synthetic samples that fail to solve any real problem.
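A simple diagnostic of this kind is a per-class share check against the classes you expect the model to handle. The sketch below is a minimal version; the label names and the 10 percent minimum share are hypothetical and should come from your own task.

```python
from collections import Counter


def find_gaps(labels, expected_classes, min_share=0.10):
    """Returns {class: real_count} for expected classes whose share falls below min_share."""
    counts = Counter(labels)
    total = len(labels)
    gaps = {}
    for cls in expected_classes:
        share = counts[cls] / total if total else 0.0
        if share < min_share:
            gaps[cls] = counts[cls]
    return gaps


labels = ["positive"] * 80 + ["negative"] * 15 + ["sarcastic"] * 5
print(find_gaps(labels, ["positive", "negative", "sarcastic", "mixed"]))
# {'sarcastic': 5, 'mixed': 0}
```

A class with zero real examples (here `mixed`) is a signal to collect real data first, since there is nothing to anchor synthetic variants on.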

Step three: Generate synthetic expansions

Produce additional samples only for the gaps identified. Keep them aligned with schema, tone, and distribution statistics. Use controlled variation rather than uncontrolled randomness. The goal is to reinforce weak areas without overpowering natural signals.
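A minimal sketch of targeted expansion, assuming text rows with a `label` field and a list of gap classes found in step two. Each synthetic row is derived from a real example of the same class by one small edit, so generation stays tied to real inputs. The three edit rules and the `seed_text` field are hypothetical placeholders; in practice the variation step might be a paraphrasing model whose outputs are checked the same way.

```python
import random

# Hypothetical label-preserving edits; each produces a small, controlled variation.
EDITS = [
    lambda t: t.lower(),
    lambda t: t.replace(" and ", " & "),
    lambda t: t + "!!",
]


def expand_gaps(rows, gap_classes, target_per_class, seed=0):
    """Tops up each gap class to target_per_class using variants of its real examples."""
    rng = random.Random(seed)
    synthetic = []
    for cls in gap_classes:
        seeds = [r for r in rows if r["label"] == cls]
        if not seeds:
            continue  # nothing real to anchor on: collect real examples instead of inventing them
        for _ in range(max(0, target_per_class - len(seeds))):
            base = rng.choice(seeds)
            synthetic.append({
                "text": rng.choice(EDITS)(base["text"]),
                "label": cls,
                "seed_text": base["text"],  # keeps each variant traceable to its real source
            })
    return synthetic


rows = [
    {"text": "Great kettle and it only took a week to break", "label": "sarcastic"},
    {"text": "Love waiting a month for delivery", "label": "sarcastic"},
    {"text": "Works fine", "label": "positive"},
]
for row in expand_gaps(rows, ["sarcastic", "mixed"], target_per_class=4):
    print(row["text"])
```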

Step four: Blend datasets using a grounded ratio

Many teams use real-to-synthetic ratios such as 70/30 or 80/20, keeping real data as the anchor. The synthetic batch should strengthen patterns, not replace them. The exact ratio depends on the task, but real data always serves as the primary context.
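A minimal sketch of ratio-capped blending. It treats the ratio as a ceiling on the synthetic share of the final training set (so 0.3 corresponds to 70/30) and randomly samples synthetic rows up to that cap. The function name and default are illustrative. The cap follows from requiring s / (r + s) ≤ p for r real rows and s synthetic rows, which rearranges to s ≤ p · r / (1 − p).

```python
import random


def blend(real_rows, synthetic_rows, max_synthetic_share=0.3, seed=0):
    """Caps synthetic rows at max_synthetic_share of the blended set, then shuffles."""
    if not 0 <= max_synthetic_share < 1:
        raise ValueError("max_synthetic_share must be in [0, 1)")
    # s / (r + s) <= p  rearranges to  s <= p * r / (1 - p); the epsilon guards float round-off.
    cap = int(max_synthetic_share * len(real_rows) / (1 - max_synthetic_share) + 1e-9)
    rng = random.Random(seed)
    chosen = rng.sample(synthetic_rows, min(cap, len(synthetic_rows)))
    mixed = list(real_rows) + chosen
    rng.shuffle(mixed)
    return mixed


real = [("real", i) for i in range(700)]
synthetic = [("synthetic", i) for i in range(1000)]
mixed = blend(real, synthetic, max_synthetic_share=0.3)
print(len(mixed), sum(src == "synthetic" for src, _ in mixed))  # 1000 300
```

Sampling rather than taking the first N synthetic rows avoids silently favouring whichever gap the generator happened to fill first.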

Step five: Apply unified validation across both sources

Schema checks, type checks, distribution analysis, and label audits must apply to both real and synthetic samples. This prevents the synthetic layer from introducing silent drift.
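A minimal sketch of one validation function applied to every row regardless of source, with failures reported per source so a synthetic batch cannot slip through on different rules. The schema, allowed labels, and the `source` tag are hypothetical examples.

```python
# Hypothetical schema and label set for a product-availability task.
SCHEMA = {"title": str, "price": float, "label": str}
ALLOWED_LABELS = {"in_stock", "out_of_stock"}


def row_errors(row):
    """Schema, type, and label checks; identical for real and synthetic rows."""
    errors = []
    for field, expected_type in SCHEMA.items():
        if field not in row:
            errors.append(f"missing field: {field}")
        elif not isinstance(row[field], expected_type):
            errors.append(f"{field} should be {expected_type.__name__}")
    extra = set(row) - set(SCHEMA) - {"source"}
    if extra:
        errors.append(f"unexpected fields: {sorted(extra)}")
    if "label" in row and row["label"] not in ALLOWED_LABELS:
        errors.append(f"unknown label: {row['label']}")
    return errors


def audit(rows):
    """Counts checked and failed rows per source tag."""
    report = {}
    for row in rows:
        stats = report.setdefault(row.get("source", "unknown"), {"checked": 0, "failed": 0})
        stats["checked"] += 1
        stats["failed"] += 1 if row_errors(row) else 0
    return report


rows = [
    {"title": "Steel kettle", "price": 24.5, "label": "in_stock", "source": "real"},
    {"title": "steel kettle w/ timer", "price": 26.0, "label": "in_stock", "source": "synthetic"},
    {"title": "Kettle", "price": "cheap", "label": "maybe", "discount": 5, "source": "synthetic"},
]
print(audit(rows))
# {'real': {'checked': 1, 'failed': 0}, 'synthetic': {'checked': 2, 'failed': 1}}
```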

Step six: Train models in stages

Stage one: Train on real data only.

Stage two: Fine-tune with a blended dataset.

Stage three: Evaluate strictly on real-world validation sets.

This layered training keeps the model grounded before expanding its flexibility.
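The three stages can be illustrated with a deliberately tiny model. This sketch uses a hand-written one-feature logistic regression trained with SGD (a stand-in for whatever model you actually train) and hypothetical toy data: stage one trains on real rows, stage two continues from those weights on the blended set with a smaller learning rate, and stage three scores only the real validation set.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sgd_train(data, w=0.0, b=0.0, lr=0.1, epochs=50, seed=0):
    """SGD on 1-D logistic regression; passing in w and b continues training (warm start)."""
    rng = random.Random(seed)
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            grad = sigmoid(w * x + b) - y  # derivative of log loss with respect to the logit
            w -= lr * grad * x
            b -= lr * grad
    return w, b


def accuracy(data, w, b):
    return sum((w * x + b > 0) == (y == 1) for x, y in data) / len(data)


# Hypothetical toy data: label is 1 when the feature is positive.
real_train = [(x / 10, int(x > 0)) for x in range(-20, 21) if x != 0]
synthetic = [(x + 0.03, y) for x, y in real_train[::4]]  # jittered variants of real rows
real_val = [(v, int(v > 0)) for v in (x / 10 + 0.05 for x in range(-10, 10))]

w, b = sgd_train(real_train)                                        # stage one: real only
w, b = sgd_train(real_train + synthetic, w, b, lr=0.02, epochs=10)  # stage two: blended fine-tune
print(f"real-only validation accuracy: {accuracy(real_val, w, b):.2f}")  # stage three
```

The smaller learning rate in stage two is one way to let synthetic rows adjust the model without overwriting what it learned from real data.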

Step seven: Monitor for synthetic drift

Track metrics such as real-world test accuracy, variance, and edge-case performance. If the model improves on synthetic slices but weakens on real slices, reduce the synthetic ratio or tighten generation rules.
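A minimal sketch of that rule, assuming you log per-example correctness tagged with a slice name after each training run. Slice names starting with `real` are treated as real-world slices; the naming convention, function names, and the one-point tolerance are hypothetical.

```python
def slice_accuracy(records):
    """records: iterable of (slice_name, correct) pairs -> accuracy per slice."""
    totals, hits = {}, {}
    for name, correct in records:
        totals[name] = totals.get(name, 0) + 1
        hits[name] = hits.get(name, 0) + int(correct)
    return {name: hits[name] / totals[name] for name in totals}


def synthetic_drift_alert(before, after, tolerance=0.01):
    """Warns when the synthetic slice improves while any real slice gets worse."""
    synthetic_gain = after.get("synthetic", 0.0) - before.get("synthetic", 0.0)
    real_drops = sorted(
        name for name in after
        if name.startswith("real") and name in before and after[name] < before[name] - tolerance
    )
    if synthetic_gain > tolerance and real_drops:
        return f"reduce synthetic ratio or tighten generation rules; declined: {real_drops}"
    return None


before = {"real": 0.91, "real_edge_cases": 0.74, "synthetic": 0.82}
after = {"real": 0.91, "real_edge_cases": 0.66, "synthetic": 0.95}
print(synthetic_drift_alert(before, after))  # flags real_edge_cases
```

Tracking an edge-case slice separately matters because an overall real-world score can hold steady while rare real patterns quietly degrade.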

A combined pipeline works best when synthetic data supports real signals instead of shaping them. The next section brings everything together into a practical, decision-focused framework.

Decision Framework: When to Use Synthetic Data, Real Data, or Both

Choosing between synthetic and real-world web data is not a philosophical debate. It is a practical decision about what the model needs at a specific moment. Some situations demand the authenticity of real data. Others need the flexibility of synthetic generation. Most production systems need both.

Think of this section as a simple decision map. Not rigid rules. Just a clear guide for choosing the right data at the right stage.

Use real-world web data when:

You need truth.

Real data captures authentic behaviour, natural phrasing, and unpredictable patterns that generators rarely recreate well.

You want to understand market conditions.

Pricing shifts, sentiment swings, competitive changes, and seasonality appear only in real sources.

The domain is noisy or unstructured.

Product titles, job descriptions, property listings, reviews, and forum posts contain subtle context synthetic data often smooths out.

You need grounding for model reliability.

Without a real-data anchor, even small shifts between the synthetic and real distributions can cause models to behave unrealistically.

Use synthetic data when:

Your dataset is too small or unbalanced.

Synthetic data fills sparse classes, rare events, and missing labels at scale.

You must avoid sensitive or restricted content.

Synthetic variants allow you to create safe training examples without collecting personal or regulated data.

You need controlled scenarios.

If you want the model to learn a pattern that appears rarely in the real world, synthetic examples give you direct control.

You need safe experimentation.

Testing new features or model behaviours becomes easier when you can generate additional variations without touching production data.

Use both when:

You want accuracy and resilience at the same time.

Real data gives you grounding. Synthetic data adds flexibility.

Your domain changes frequently.

Web data evolves quickly. Synthetic expansion helps you explore new scenarios without waiting for natural examples to appear.

You have complex or sparse labels.

Some labels are expensive to obtain at scale. Synthetic reinforcement reduces cost while real data maintains semantic integrity.

You are building models for multiple markets or contexts.

Real data gives you natural diversity. Synthetic data fills geographical or linguistic gaps.

Synthetic and real data are not rivals. They are complementary tools. The strongest pipelines use real data to anchor the model in truth, then use synthetic data to refine, expand, and stabilize learning. With the right balance, you avoid the weaknesses of each type and combine their strengths into one training strategy.

What’s Next in 2025?

Synthetic data and real-world web data are often presented as two competing choices, but in practice they serve different purposes. Real data gives your model grounding. It reflects how people actually write, search, review, compare, and behave online. It carries natural noise, complex phrasing, and the subtle signals that come only from real interactions. No generator fully replaces this. Real data keeps the model honest.

Synthetic data steps in where real data struggles. It adds coverage when classes are uneven. It strengthens rare patterns that appear too infrequently to train on. It gives teams safe ways to experiment without touching sensitive or rate-limited sources. It also makes it possible to scale faster than external websites allow. Synthetic data fills the empty spaces without disrupting the core distribution.

The strongest AI systems combine both. They start with a real foundation. They learn natural patterns first. Then they extend that training with carefully generated synthetic examples that fill gaps without distorting reality. This blended approach produces a model that understands the real world yet handles rare events with confidence.

As AI adoption grows, teams will rely more on hybrid data strategies. Relying on real data alone limits growth. Relying on synthetic data alone weakens grounding. But when you connect the two through clear schemas, validation rules, and tight control of proportions, the pipeline becomes more stable and more predictable. Models behave more consistently. Errors become easier to diagnose. And your dataset becomes a flexible asset instead of a fixed constraint.

Synthetic and real-world data are not opposites. They are two sides of a strong training strategy. When you understand how to balance them, you build AI systems that are not only accurate, but resilient to the unpredictable patterns of real-world usage.

Further Reading From PromptCloud

Here are four articles that connect well with this topic:

- Understand how pricing patterns influence machine learning decisions in Dynamic Pricing Strategy: Types, Benefits and Challenges.

- Learn what makes a dataset trustworthy and reusable in What Is a Data Set? Explained.

- Compare different extraction methods and how they shape data quality in Data Scraping vs Data Crawling.

- Follow a hands-on workflow for structured extraction in How to Scrape Data With Web Scraper Chrome.

For a deeper look at how synthetic data is evaluated in enterprise AI settings, see this overview from MIT:

Synthetic Data for AI and Machine Learning.


FAQs

1. Is synthetic data enough to train a reliable model on its own?

Not usually. Synthetic data helps fill gaps, but it cannot replace real-world complexity. Models trained only on synthetic samples often perform well in controlled tests and then break when exposed to natural language, noisy formatting, or unpredictable patterns.

2. When should I use synthetic data during training?

Use it after you have a stable real-world baseline. Synthetic data works best as an expansion layer that strengthens weak classes, rare behaviours, or missing contexts. It should never be the first or only source the model sees.

3. How much synthetic data is safe to use?

Many teams keep synthetic data between ten and thirty percent of the training set. This keeps the model grounded in reality while giving it enough variation to learn from additional scenarios. The exact ratio depends on the task, but real data must always dominate.

4. Can synthetic data help reduce bias?

Yes, but only when used carefully. You can generate balanced examples to correct skewed class distributions. However, if the generator itself learns from biased data, synthetic outputs may repeat those patterns. Validation is essential.

5. Does synthetic data work for text-heavy domains like reviews or job listings?

It can help, but output quality varies. Simple rule-based generators struggle with tone and nuance. LLM-based generators work better but can still lack the diversity of real text. In text-heavy domains, synthetic samples should support the real set rather than replace it.