**TL;DR**

Robots.txt scraping is not about blindly following allow and disallow rules. For developers, it is about correctly interpreting robots policy, understanding ethical crawling boundaries, and aligning crawlers with consent protocols that reflect real-world expectations.

What Do We Mean by Robots.txt Interpretation?

Most developers meet robots.txt early. You build a crawler. You see a text file sitting at the root of a domain. A few lines of rules. Disallow this. Allow that. It feels simple. Almost too simple.

And that is where trouble starts. In practice, robots.txt scraping is one of the most misunderstood parts of web data collection. Some teams treat it as a strict legal contract. Others ignore it entirely. Both approaches create risk, just in different ways.

Robots.txt was never designed to be a legal enforcement mechanism. It was designed as a communication layer. A way for site owners to express preferences about how automated agents interact with their infrastructure. Over time, those preferences started carrying ethical, operational, and sometimes legal weight.

Today, when developers talk about ethical crawling, robots policy interpretation sits right at the center. Not because robots.txt solves consent, but because it signals intent. The complexity increases when crawlers move beyond indexing. Scraping for analytics, ecommerce intelligence, recruitment data, or AI pipelines introduces new responsibilities. Suddenly, it is not just about whether a URL is disallowed. It is about rate limits, user-agent identification, downstream use, and whether consent protocols are implicitly violated even when rules are technically followed.

PromptCloud helps build structured, enterprise-grade data solutions that integrate acquisition, validation, normalization, and governance into one scalable system.

What Robots.txt Was Actually Designed to Do (And What It Wasn’t)

What robots.txt was meant to do

At its core, robots.txt is a voluntary signaling mechanism. It allows a website to publish a simple set of rules that automated agents can read before crawling. Those rules express preferences. Not permissions. Not enforcement. Preferences.

The file answers three basic questions:

Which user agents does this apply to?

Which paths should they avoid, and which remain open?

Are there crawl pacing hints, like crawl-delay?

For developers building crawlers, robots policy is essentially an early handshake. A site is telling you how it would like to be treated by automated traffic.

Following that signal is the foundation of ethical crawling.
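Here is a minimal sketch that makes those three questions concrete, using Python's standard-library `urllib.robotparser` against a made-up robots.txt. The file contents, the `example.com` URLs, and the `MyCrawlerBot` name are illustrative assumptions.

```python
import urllib.robotparser

# A hypothetical robots.txt with a single group for every crawler ("*").
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 10
"""

rp = urllib.robotparser.RobotFileParser()
rp.parse(SAMPLE_ROBOTS_TXT.splitlines())

agent = "MyCrawlerBot"  # no dedicated group exists, so the "*" group applies
print(rp.can_fetch(agent, "https://example.com/products/42"))    # True: path not disallowed
print(rp.can_fetch(agent, "https://example.com/checkout/cart"))  # False: under /checkout/
print(rp.crawl_delay(agent))                                      # 10: pacing hint in seconds
```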

What robots.txt was never designed to be

This is where confusion creeps in.

Robots.txt is not a legal contract. It is not an access control system. It does not grant consent in the privacy sense. It does not authenticate or authorize crawlers. Anyone can fetch robots.txt. Anyone can ignore it. The protocol relies entirely on crawler behavior, not enforcement. That means robots.txt scraping decisions are ethical and technical choices made by the crawler developer, not guarantees provided by the site owner.

Why this gap matters today

Modern scraping use cases go far beyond indexing pages for search. You might technically respect all disallow rules and still violate reasonable expectations around load, frequency, or downstream use. For example, a crawler can stay entirely within allowed paths and still request them fast enough to overwhelm a site. Ethical crawling lives in that gray space between what robots.txt says and what responsible behavior requires.

How Robots.txt Rules Are Parsed and Interpreted by Crawlers

This is where things stop being philosophical and start being very technical. On the surface, robots.txt looks simple. A few lines of text. Some paths. A user-agent or two. But the way those lines are parsed and interpreted can change crawler behavior in meaningful ways. And this is where many robots.txt scraping mistakes happen.

User-agent matching is not as straightforward as it looks

Robots.txt rules are grouped by user-agent. That part is obvious.

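What is less obvious is how matching works. A crawler looks for the group whose User-agent line matches its own name (its product token), compared case-insensitively, and falls back to the generic * group only when no such group exists. If a group is written specifically for your crawler’s user-agent, it replaces the * rules entirely; the two are not merged.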
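Here is a minimal sketch of that precedence with Python's `urllib.robotparser` and a hypothetical file. Because `MyCrawlerBot` has its own group, the `Disallow: /private/` line written for `*` no longer applies to it. Any other crawler still falls back to the `*` group.

```python
import urllib.robotparser

# Hypothetical robots.txt: a generic group plus one group for a named crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: MyCrawlerBot
Disallow: /tmp/
"""

rp = urllib.robotparser.RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

# MyCrawlerBot follows only its own group; the "*" rules are not inherited.
print(rp.can_fetch("MyCrawlerBot", "https://example.com/private/report"))  # True
print(rp.can_fetch("MyCrawlerBot", "https://example.com/tmp/cache"))       # False
# A crawler without a dedicated group falls back to "*".
print(rp.can_fetch("SomeOtherBot", "https://example.com/private/report"))  # False
```

If a site wants your crawler to obey both sets of rules, it has to repeat them inside your group. Python's parser also compares names case-insensitively but treats a group's name as a substring of yours. A group for `Bot` would therefore also catch `MyCrawlerBot`, which is looser than RFC 9309's match on the product token itself.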

Allow and disallow are evaluated together

A common misunderstanding is that Disallow always wins.

It does not.

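When both Allow and Disallow rules match a URL, most modern crawlers apply the rule with the longest matching path, which is also what RFC 9309 specifies. That means a more specific allow can override a broader disallow. When an Allow and a Disallow rule are equally specific, the RFC says the Allow should win.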

For developers, this matters when sites selectively expose certain subpaths while blocking others. Incorrect path matching logic can either block too much or crawl things that were clearly meant to stay off-limits. Robots.txt scraping engines that do not implement proper precedence logic tend to behave unpredictably.
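Python's standard-library `urllib.robotparser` does not implement this precedence. It returns the first matching line in file order. The sketch below shows longest-match evaluation on its own. It assumes the rules for the applicable user-agent group have already been extracted as `(directive, pattern)` pairs. The function names are illustrative, `*` and `$` follow the RFC 9309 wildcard syntax, and specificity is measured by the pattern's length in bytes.

```python
import re
from typing import List, Tuple

Rule = Tuple[str, str]  # ("allow" or "disallow", path pattern) from one user-agent group

def _to_regex(pattern: str) -> re.Pattern:
    # "*" matches any run of characters; a trailing "$" anchors the end of the path.
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))

def is_allowed(path: str, rules: List[Rule]) -> bool:
    """Longest-match precedence: the most specific matching rule decides."""
    best_len = -1
    best_allow = True  # no matching rule means the path is allowed
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty rule value matches nothing
        if _to_regex(pattern).match(path):  # match() anchors at the start of the path
            allow = directive.lower() == "allow"
            length = len(pattern.encode("utf-8"))  # specificity = pattern length in bytes
            # Longer pattern wins; on a tie, Allow wins.
            if length > best_len or (length == best_len and allow):
                best_len, best_allow = length, allow
    return best_allow
```

Consider `Disallow: /shop/` followed by `Allow: /shop/public/`. Here `is_allowed("/shop/public/item", rules)` returns `True`. `urllib.robotparser` would return `False` for the same file, because the Disallow line comes first.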

Crawl-delay is advisory, not universal

Some robots policies include a Crawl-delay directive. Others do not. The directive is not part of RFC 9309, and support varies: Google’s crawlers ignore it, while others, such as Bing’s, honor it.

There is no universal enforcement here.

From an ethical crawling standpoint, crawl-delay should be treated as intent. A signal that the site is sensitive to request volume. Even if your crawler does not formally support the directive, ignoring the message behind it is a bad idea. Rate limiting should be proactive, not reactive.
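One way to treat Crawl-delay as intent is to use it as a floor that can only make the crawler slower. Here is a minimal sketch. `crawl_delay()` is a real `RobotFileParser` method (Python 3.6+) that returns `None` when no directive applies. The 5-second house minimum and the `effective_delay` name are assumptions.

```python
import urllib.robotparser

HOUSE_MIN_DELAY = 5.0  # hypothetical in-house floor, in seconds

def effective_delay(rp: urllib.robotparser.RobotFileParser, agent: str,
                    house_min: float = HOUSE_MIN_DELAY) -> float:
    """Seconds to wait between requests to one host.

    Uses the site's Crawl-delay when it is stricter than our own floor and
    never drops below the floor when the directive is missing.
    """
    declared = rp.crawl_delay(agent)  # None when no Crawl-delay applies to this agent
    if declared is None:
        return house_min
    return max(house_min, float(declared))
```

Python's parser only accepts whole-number Crawl-delay values. A line such as `Crawl-delay: 0.5` is silently ignored, and the house floor then applies.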

Case sensitivity and encoding matter

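Path values in robots.txt are case-sensitive, so Disallow: /Private/ does not block /private/. User-agent names, by contrast, are matched case-insensitively. Encoding needs the same care: /café/ and /caf%C3%A9/ are the same path written two ways, so both sides should be normalized before comparison. This sounds trivial until you realize how many crawling issues come from edge cases like this. A crawler that lowercases everything may accidentally crawl paths that were meant to be blocked. Details matter here more than people expect.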
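Here is a minimal normalization sketch, loosely following RFC 9309's guidance:

- Non-ASCII characters are percent-encoded as UTF-8.
- Escapes for unreserved characters are decoded.
- Remaining escapes get uppercase hex.
- Letter case in the path is left untouched.

The `normalize_path` helper is hypothetical and skips some edge cases. For example, raw reserved characters are left as they are.

```python
import re
from urllib.parse import quote

# RFC 3986 unreserved characters never need percent-encoding.
UNRESERVED = set("ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789-._~")
# Printable ASCII (including "%") is kept as-is, so existing escapes survive quote().
PRINTABLE_ASCII = "".join(chr(c) for c in range(0x21, 0x7F))

def _normalize_escape(match: re.Match) -> str:
    char = chr(int(match.group(1), 16))
    # Decode escapes of unreserved characters (%7E -> ~); keep others, uppercased.
    return char if char in UNRESERVED else "%" + match.group(1).upper()

def normalize_path(path: str) -> str:
    """Normalize percent-encoding for comparison without changing letter case."""
    encoded = quote(path, safe=PRINTABLE_ASCII)  # only non-ASCII characters and spaces get encoded
    return re.sub(r"%([0-9A-Fa-f]{2})", _normalize_escape, encoded)

rule = normalize_path("/café/")
print(normalize_path("/caf%c3%a9/menu").startswith(rule))                    # True: same path, different encoding
print(normalize_path("/private/x").startswith(normalize_path("/Private/")))  # False: case differs
```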

Why interpretation errors escalate quickly

A single misinterpreted rule rarely causes immediate harm. But at scale, small parsing errors multiply. Suddenly, a crawler hits endpoints it should not. Load increases. IPs get blocked. Legal questions start appearing. All from a file that was “read,” but not fully understood. This is why robots.txt scraping should be treated as a parsing problem, not a string-matching one.

Ethical Crawling Beyond Robots.txt: Where Developers Need to Go Further

If robots.txt were enough, this section would not exist. But every experienced developer knows the uncomfortable truth. You can follow robots.txt perfectly and still behave badly. That is why ethical crawling cannot stop at robots policy interpretation.

Robots.txt signals preference, not consent

Robots.txt tells you what a site prefers crawlers to do. It does not tell you whether the data use that follows is acceptable.

For example, a product listing page might be fully allowed. Crawling it once for indexing is very different from crawling it every few minutes, storing historical changes, and reselling insights downstream. From an ethical crawling perspective, frequency and intent matter just as much as path access. Consent protocols live above robots.txt. They show up in privacy policies, terms of service, API offerings, and sometimes explicit data access programs. Developers who ignore these signals often claim technical compliance while missing ethical alignment entirely.

Rate limiting is a responsibility, not an optimization

Many crawlers treat rate limiting as a performance tweak. Something to adjust only when blocks start appearing. That mindset is backwards. Ethical crawling assumes you limit request volume before a site asks you to. Crawl-delay, server response times, and error rates should inform how aggressively your crawler behaves.
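Here is a minimal sketch of proactive, per-host pacing:

- The delay starts at a floor, for example the larger of the site's Crawl-delay and an in-house minimum.
- It doubles on stress signals: HTTP 429, 5xx responses, or slow responses.
- It honors a server's Retry-After hint.
- It recovers only gradually.

The class name and every threshold are hypothetical choices, not recommendations.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class HostThrottle:
    """Per-host delay that backs off quickly and recovers slowly (illustrative thresholds)."""
    base_delay: float = 5.0     # floor in seconds, e.g. max(Crawl-delay, house minimum)
    max_delay: float = 300.0    # cap so a bad spell does not stall the host forever
    slow_after: float = 2.0     # responses slower than this count as a stress signal
    delay: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        self.delay = self.base_delay

    def record(self, status: int, elapsed: float,
               retry_after: Optional[float] = None) -> float:
        """Update the delay after one response and return the next wait in seconds."""
        stressed = status == 429 or status >= 500 or elapsed > self.slow_after
        if stressed:
            self.delay = min(self.delay * 2, self.max_delay)     # multiplicative back-off
        else:
            self.delay = max(self.base_delay, self.delay * 0.9)  # gentle recovery
        if retry_after is not None:
            self.delay = max(self.delay, min(float(retry_after), self.max_delay))
        return self.delay
```

The caller sleeps for the returned value before its next request to that host. Because the delay backs off multiplicatively and recovers slowly, a struggling site sees traffic drop quickly and come back only gradually.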

Clear identification builds trust

Anonymous crawlers raise suspicion. A responsible crawler identifies itself clearly using a stable user-agent string. Ideally, it includes a contact URL or email. This gives site owners a way to understand who is accessing their infrastructure and why. This matters more than many developers expect. Transparent identification often prevents blocks, misunderstandings, and escalation. Ethical crawling is easier when you are not hiding.

Why this matters in production systems

Crawlers that respect robots policy, rate limits, and consent signals tend to last longer. They break less. They attract fewer complaints. They survive scrutiny from legal, security, and compliance teams. In other words, ethics is operational stability wearing a different hat.

Common Robots.txt Scraping Mistakes Developers Still Make

This is the part where most developers nod slowly. Not because they are careless, but because these mistakes are easy to make and hard to notice until something breaks. Let’s go through the most common ones, why they matter, and what better behavior looks like in practice.

The usual mistakes, side by side

| Mistake | Why it happens | Why it’s a problem |
| --- | --- | --- |
| Treating robots.txt as optional | “It’s not legally binding” thinking | Leads to blocks, complaints, and reputation damage |
| Parsing rules with simple string matching | Robots.txt looks trivial | Causes incorrect allow or disallow behavior |
| Ignoring crawl-delay | Many crawlers don’t support it | Results in unnecessary load and faster blocking |
| Using generic or rotating user-agents | Easier to blend in | Breaks trust and rule targeting |
| Crawling allowed paths too aggressively | Allowed means free access | Violates ethical crawling expectations |
| Reusing crawled data blindly | Data feels neutral once stored | Creates downstream compliance and consent risks |

These are not beginner mistakes. They show up in mature systems too, especially when crawling logic evolves faster than governance.

Mistake 1: Naive robots.txt parsing

A surprisingly large number of crawlers still do something like this.

```python
# ❌ Naive and incorrect
if "/private" in url:
    skip()
```

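This ignores user-agent scope, rule precedence, and path specificity. It treats robots.txt like a keyword filter, and it even skips unrelated URLs such as /blog/private-equity-guide that merely contain the string. A more responsible approach uses a proper parser.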

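```python
# ✅ Proper robots.txt handling
import urllib.robotparser as robotparser

USER_AGENT = "MyCrawlerBot"  # the product token your robots.txt groups refer to

rp = robotparser.RobotFileParser()
rp.set_url("https://example.com/robots.txt")
rp.read()  # download and parse the file (network call)

url = "https://example.com/products/42"  # example URL
if rp.can_fetch(USER_AGENT, url):
    fetch(url)  # fetch() stands in for your own HTTP client
```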

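This respects user-agent groups and evaluates rules instead of matching substrings. It is not fancy, but it is far closer to correct. One caveat: Python’s standard-library parser checks Allow and Disallow lines in file order and stops at the first match. It also does not support the * and $ wildcards, so it does not fully implement the longest-match precedence described in RFC 9309. Sites that rely on those features call for a parser that follows the RFC.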

Mistake 2: Ignoring intent behind crawl-delay

Some developers read crawl-delay and shrug. Instead, treat it as a pacing signal.

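```python
# ✅ Adaptive crawl pacing
import time

BASE_DELAY = 5  # seconds; keep this at or above the site's Crawl-delay

# get_recent_error_rate() is a placeholder for your own per-domain metrics lookup
error_rate = get_recent_error_rate(domain)

if error_rate > 0.05:
    time.sleep(BASE_DELAY * 2)  # more than 5% recent errors: slow down
else:
    time.sleep(BASE_DELAY)
```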

This goes beyond robots.txt scraping rules and moves into ethical crawling. You respond to site stress, not just instructions.

Mistake 3: Weak or misleading user-agent strings

This is more common than people admit.

User-Agent: Mozilla/5.0

That tells a site nothing useful.

A better approach is explicit and stable.

User-Agent: MyCrawlerBot/1.2 (https://mycompany.com/crawler-info)

This aligns with ethical crawling norms and makes robots policy interpretation meaningful. Sites can write rules that actually apply to you.
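Here is a minimal sketch of keeping that identity consistent with Python's standard library. The full string goes in the `User-Agent` header. The product token, the part before the slash, is what robots.txt groups name and what you pass to `can_fetch()`. `MyCrawlerBot`, the version, and the info URL are the placeholders from the example above, and the helper names are hypothetical.

```python
import urllib.request
import urllib.robotparser

PRODUCT_TOKEN = "MyCrawlerBot"  # placeholder crawler name
USER_AGENT = f"{PRODUCT_TOKEN}/1.2 (https://mycompany.com/crawler-info)"

def build_request(url: str) -> urllib.request.Request:
    # Send the same explicit, stable identity string on every request.
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})

def is_permitted(rp: urllib.robotparser.RobotFileParser, url: str) -> bool:
    # robots.txt groups are written against the product token, so check with it.
    return rp.can_fetch(PRODUCT_TOKEN, url)
```

One detail worth knowing: `RobotFileParser.read()` downloads robots.txt with urllib's default user-agent, not yours. A crawler that wants to identify itself consistently can fetch the file with `build_request()` and pass its lines to `rp.parse()` instead.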

Mistake 4: Treating allowed paths as unlimited access

Even when robots policy allows a path, hammering it every few seconds is rarely reasonable.

A simple safeguard helps.

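```python
# ✅ Per-path request throttling
import time
from collections import defaultdict

last_access = defaultdict(float)  # URL -> time of the last request (0.0 if never fetched)
MIN_INTERVAL = 60  # seconds between requests to the same URL

def fetch_with_spacing(url):
    elapsed = time.time() - last_access[url]
    if elapsed < MIN_INTERVAL:
        time.sleep(MIN_INTERVAL - elapsed)  # wait out the rest of the interval
    last_access[url] = time.time()
    fetch(url)  # fetch() stands in for your own HTTP client
```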

This respects the spirit of robots.txt even when it does not explicitly say so.

Why these mistakes persist

Most of these errors come from treating robots.txt scraping as a one-time setup task. Something you “handle” and move on from. In reality, robots policy interpretation is ongoing. Sites change rules. Use cases change. Data reuse changes the ethical equation. Developers who revisit this logic periodically tend to avoid painful surprises later.

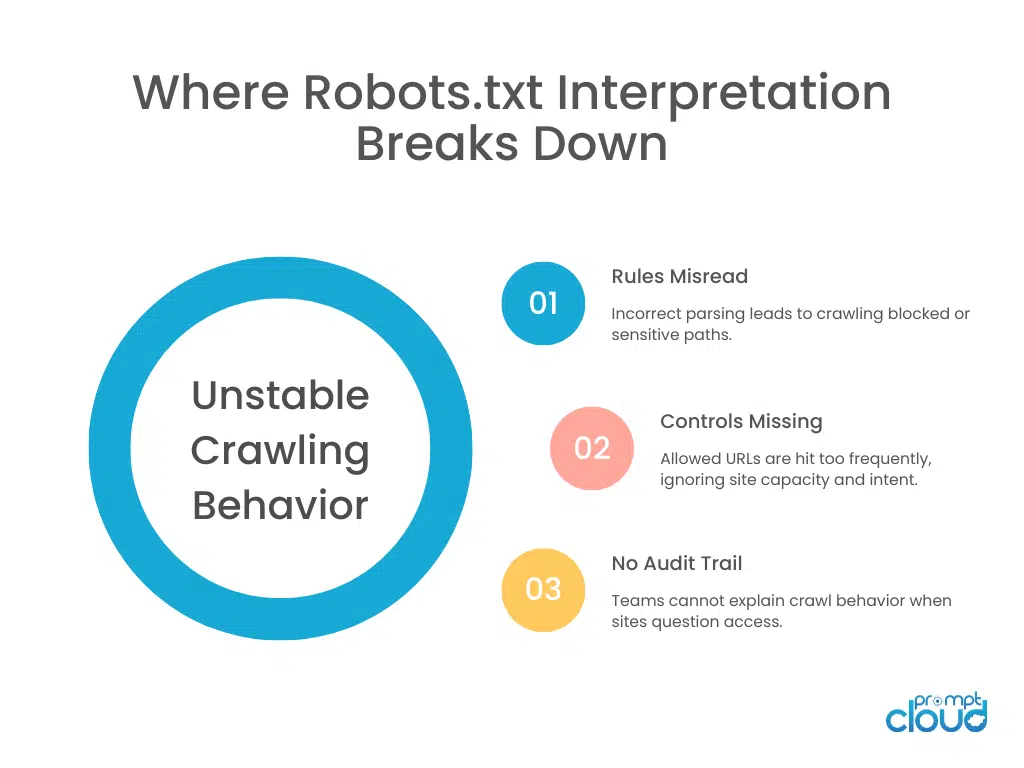

Figure 2: A breakdown of how small robots.txt interpretation errors compound into ethical, operational, and trust issues at scale.

Robots.txt, Consent Protocols, and Modern Crawling Expectations

This is where robots.txt scraping intersects with real-world responsibility. Robots.txt tells you what a site prefers at the infrastructure level. Consent protocols tell you what a site expects at the usage level. Developers who separate these two too sharply usually run into trouble later.

Robots policy is only one consent signal

Robots.txt sits in a small but important corner of a much larger consent landscape.

Other signals live elsewhere. Privacy policies. Terms of service. API documentation. Rate limit headers. Even contact pages that explain acceptable automated access. Ethical crawling means reading these signals together, not in isolation.

For example, a site may allow crawling of product pages but clearly state that bulk extraction for commercial resale is not permitted. Technically, robots.txt scraping might be allowed. Ethically, downstream use may not be.

This is where developers have to exercise judgment instead of hiding behind protocol compliance.
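Of the signals listed above, rate-limit headers are the most machine-readable. The standard one is `Retry-After`, defined in RFC 9110. Servers often send it with 429 or 503 responses, and it carries either a number of seconds or an HTTP date. Here is a minimal parser; the function name is an illustrative assumption.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional

def retry_after_seconds(value: Optional[str],
                        now: Optional[datetime] = None) -> Optional[float]:
    """Convert a Retry-After header value to seconds, or None if absent or invalid."""
    if not value:
        return None
    value = value.strip()
    if value.isdigit():                     # delay-seconds form, e.g. "120"
        return float(value)
    try:                                    # HTTP-date form, e.g. "Wed, 21 Oct 2015 07:28:00 GMT"
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):         # TypeError before Python 3.10, ValueError from 3.10
        return None
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)  # HTTP dates are expressed in GMT
    now = now or datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())  # a date in the past means "retry now"
```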

Consent evolves as usage evolves

Consent is not static.

A crawler that started as a research tool might later feed analytics dashboards, pricing engines, or machine learning pipelines. At each step, the impact of data use grows. What felt reasonable early on may feel intrusive later. Responsible teams reassess consent assumptions as systems evolve. They do not assume that yesterday’s interpretation still holds today.

Robots.txt does not override expectations of fairness

There is a common misconception that if robots.txt allows access, anything goes.

In practice, expectations of fairness still apply.

Is your crawler creating load patterns that humans never would?

Is it accessing endpoints at a frequency that distorts the site’s operations?

Is it extracting data in a way that undermines the site’s business model?

None of these questions are answered by robots.txt. All of them matter.

Developers sit at the decision point

Legal teams often come in later. Product teams focus on outcomes. But developers decide how crawlers behave day to day.

That puts developers in a unique position of responsibility. Small choices about request spacing, identification, and reuse shape whether a crawler feels cooperative or adversarial. Over time, those choices determine whether a system scales quietly or constantly runs into resistance.

Ethical crawling as a design principle

The strongest crawling systems treat ethics as a design constraint, not a compliance afterthought.

They assume robots policy is incomplete by default.

They build safeguards for ambiguity.

They prefer restraint over maximum throughput.

This approach does not slow teams down. It keeps them moving without friction.

How Robots.txt Fits Into Enterprise-Grade Crawling Systems

Once crawling moves past a hobby project or a one-off script, robots.txt scraping stops being a standalone concern. It becomes one layer in a broader system that has to balance scale, reliability, and trust. This is where enterprise-grade crawling looks very different from ad-hoc scraping.

Robots.txt becomes a policy input, not a gate

In mature systems, robots.txt is not treated as a hard on or off switch.

It is parsed, cached, versioned, and interpreted as a policy signal. One input among several that shape crawler behavior. Others include historical response patterns, error rates, legal guidance, and internal ethical crawling standards. When robots policy changes, the system notices. When it conflicts with observed site behavior, the system slows down instead of pushing harder. This layered approach is what keeps large crawling operations stable.
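Here is a minimal sketch of "parsed, cached, versioned". Parsed policies are cached per host. A copy is refreshed once it is older than a maximum age; RFC 9309 says crawlers should not use a cached copy for more than 24 hours. A policy gets a new version number only when the file's contents actually change. The `fetch_text` callable and the class names are hypothetical, and error handling for unreachable files is left out.

```python
import hashlib
import time
import urllib.robotparser
from dataclasses import dataclass
from typing import Callable, Dict, Optional

MAX_AGE_SECONDS = 24 * 60 * 60  # RFC 9309: avoid relying on a cached copy for more than 24 hours

@dataclass
class CachedPolicy:
    parser: urllib.robotparser.RobotFileParser
    digest: str        # SHA-256 of the raw file, used to detect changes
    fetched_at: float  # when the file was last fetched and compared
    version: int       # increments only when the contents change

class RobotsCache:
    """Per-host cache of parsed robots.txt policies (illustrative sketch).

    `fetch_text(host)` is a hypothetical callable returning the robots.txt body.
    """

    def __init__(self, fetch_text: Callable[[str], str],
                 max_age: float = MAX_AGE_SECONDS) -> None:
        self._fetch_text = fetch_text
        self._max_age = max_age
        self._policies: Dict[str, CachedPolicy] = {}

    def get(self, host: str, now: Optional[float] = None) -> CachedPolicy:
        now = time.time() if now is None else now
        cached = self._policies.get(host)
        if cached is not None and now - cached.fetched_at < self._max_age:
            return cached  # still fresh: reuse without refetching

        text = self._fetch_text(host)
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if cached is not None and cached.digest == digest:
            cached.fetched_at = now  # unchanged file: just extend freshness
            return cached

        parser = urllib.robotparser.RobotFileParser()
        parser.parse(text.splitlines())
        version = cached.version + 1 if cached is not None else 1
        policy = CachedPolicy(parser, digest, now, version)
        self._policies[host] = policy  # a version bump is the natural place to log a policy change
        return policy
```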

Centralized interpretation prevents drift

One of the biggest risks in enterprise crawling is inconsistency.

Different teams build their own crawlers. Each one interprets robots policy slightly differently. Over time, behavior drifts. What was once conservative becomes aggressive without anyone intending it. Enterprise systems avoid this by centralizing robots.txt interpretation. One shared parser. One shared policy engine. One place to update logic when standards evolve.

This keeps ethical crawling consistent across use cases, whether the data supports ecommerce analytics, recruitment intelligence, or downstream data science workflows. Many ecommerce data science projects quietly depend on this kind of consistency to avoid upstream instability.

Robots rules need observability

Another difference at scale is visibility.

Enterprise crawlers log robots.txt decisions. Why a URL was fetched or skipped. Which rule applied. Which user-agent group matched. This sounds excessive until something goes wrong. When a site complains or blocks traffic, teams with observability can explain behavior quickly. Teams without it guess. Robots.txt scraping without observability is flying blind.
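Here is a minimal sketch of a decision that explains itself. Each check returns, and logs as one JSON line, the URL, the outcome, the user-agent group that applied, and the rule that decided it. To keep it short, this sketch uses exact case-insensitive group names and plain prefix rules without wildcards. The logger name, `Decision`, and `decide` are all hypothetical.

```python
import json
import logging
from dataclasses import asdict, dataclass
from typing import Dict, List, Optional, Tuple
from urllib.parse import urlsplit

log = logging.getLogger("robots.decisions")  # hypothetical logger name

Rule = Tuple[str, str]  # ("allow" or "disallow", path prefix)

@dataclass
class Decision:
    url: str
    allowed: bool
    group: Optional[str]  # user-agent group that applied, or None if none did
    rule: Optional[str]   # e.g. "disallow /private/", or None if no rule matched

def decide(url: str, product_token: str, groups: Dict[str, List[Rule]]) -> Decision:
    """Decide whether to fetch `url` and log which group and rule drove the decision."""
    # Exact, case-insensitive group match on the product token, else fall back to "*".
    group = next((name for name in groups if name.lower() == product_token.lower()), None)
    if group is None and "*" in groups:
        group = "*"
    parts = urlsplit(url)
    path = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")

    best: Optional[Rule] = None
    for directive, prefix in groups.get(group, []):
        if prefix and path.startswith(prefix):
            # Longest prefix wins; on a tie, allow wins.
            if best is None or len(prefix) > len(best[1]) or (
                    len(prefix) == len(best[1]) and directive == "allow"):
                best = (directive, prefix)

    decision = Decision(
        url=url,
        allowed=best is None or best[0] == "allow",
        group=group,
        rule=f"{best[0]} {best[1]}" if best else None,
    )
    log.info(json.dumps(asdict(decision)))  # one structured line per decision
    return decision
```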

Ethical crawling scales better than aggressive crawling

There is a counterintuitive truth here.

Crawlers built with restraint often collect more data over time than aggressive ones. They last longer. They get blocked less. They attract fewer escalations. This matters when crawling underpins business-critical workflows like ecommerce monitoring, competitive analysis, or market intelligence. The more durable the access, the more reliable the downstream systems become. Many web scraping use cases in ecommerce depend on this quiet durability rather than brute force.

Where developers still have leverage

Even in enterprise environments, developers shape crawler behavior through defaults.

How conservative is the initial crawl rate?

How does the system react to ambiguous rules?

When in doubt, does it pause or proceed?

These defaults become culture encoded in code. Developers who treat robots.txt scraping as a cooperative protocol tend to build systems that coexist with the web. Those who treat it as an obstacle tend to spend more time fighting it.
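One concrete answer to "pause or proceed" is the rule RFC 9309 gives for robots.txt fetch outcomes:

- On success, follow the file.
- On a 4xx response, treat the file as unavailable, meaning no rules were published.
- When server or network errors make the file unreachable, assume complete disallow.

Here is a minimal sketch. The enum and function names are hypothetical, and treating unexpected statuses as disallow is a conservative assumption in this sketch, not a requirement.

```python
from enum import Enum
from typing import Optional

class RobotsAccess(Enum):
    FOLLOW_FILE = "follow the parsed rules"
    ALLOW_ALL = "no rules published; crawl politely"
    DISALLOW_ALL = "pause: treat the whole host as off-limits"

def access_for_fetch(status: Optional[int]) -> RobotsAccess:
    """Default behavior for a robots.txt fetch outcome.

    `status` is the final HTTP status after redirects, or None if the request
    never completed (DNS failure, timeout, connection reset).
    """
    if status is None:
        return RobotsAccess.DISALLOW_ALL  # unreachable: assume complete disallow
    if 200 <= status < 300:
        return RobotsAccess.FOLLOW_FILE
    if 400 <= status < 500:
        return RobotsAccess.ALLOW_ALL     # "unavailable" per RFC 9309
    return RobotsAccess.DISALLOW_ALL      # 5xx and anything unexpected: pause
```

Python's `urllib.robotparser` is stricter than this for 401 and 403, which it treats as disallow-all. Either choice is defensible, as long as the default is deliberate rather than accidental.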

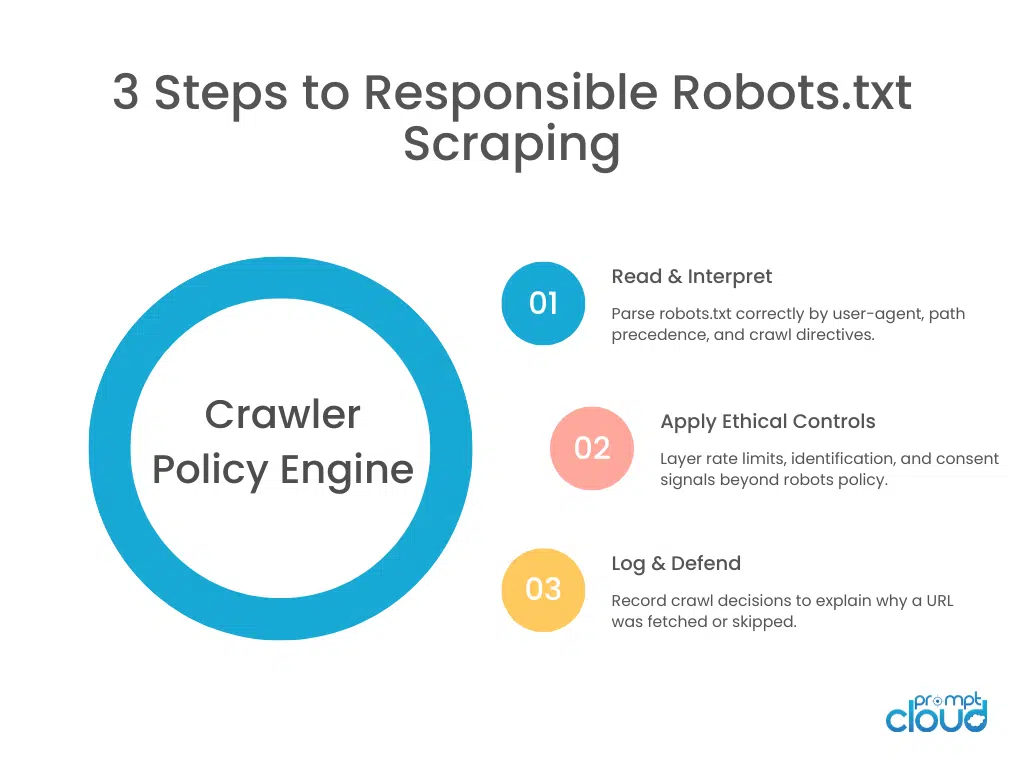

Figure 1: A three-step view of how robots.txt rules are interpreted, enforced, and documented inside responsible crawling systems.

Practical Checklist for Developers Interpreting Robots.txt Correctly

Before wrapping up, it helps to ground everything in something concrete. Not theory. Not policy language. Just a developer-first checklist you can actually use. This is the difference between knowing how robots.txt works and operating it responsibly.

A developer-ready robots.txt checklist

| Area | What to check | Why it matters |
| --- | --- | --- |
| User-agent identity | Is your crawler using a clear, stable user-agent string? | Ensures correct rule matching and builds trust |
| Parser behavior | Are you using a proper robots.txt parser, not string checks? | Prevents incorrect allow or disallow decisions |
| Rule precedence | Do you correctly apply longest-path matching for allow vs disallow? | Avoids crawling unintended paths |
| Rule caching | Are robots.txt files cached and refreshed periodically? | Prevents stale policy interpretation |
| Crawl pacing | Do you rate-limit even when crawl-delay is absent? | Supports ethical crawling and site stability |
| Error response | Does your crawler slow down on spikes in errors or timeouts? | Respects infrastructure stress signals |
| Scope control | Are allowed paths still crawled conservatively? | Allowed does not mean unlimited |
| Consent signals | Do you review privacy policies and terms alongside robots.txt? | Robots policy alone is incomplete |
| Downstream use | Is scraped data reused only for defined purposes? | Ethical crawling extends beyond collection |
| Logging | Can you explain why a URL was fetched or skipped? | Critical for debugging and accountability |

If this checklist feels heavy, that’s normal. It usually means the crawler has grown beyond a simple script and into something that deserves structure.

The quiet shift developers are making

What stands out today is not that robots.txt scraping became more complex. It’s that expectations around it matured. Developers are no longer judged only on whether a crawler “works.” They are judged on whether it behaves reasonably. Whether it respects boundaries. Whether it can be explained to someone outside engineering.

Ethical crawling is no longer a nice-to-have. It is table stakes for systems that want to last.

Wrap-up

Robots.txt has always been a small file with outsized influence. For developers, the mistake is either overestimating it or dismissing it. Treating robots.txt as a legal shield leads to complacency. Treating it as irrelevant leads to conflict. The reality sits somewhere in between. Robots.txt scraping works best when it is understood as a conversation starter, not the final word. It tells you how a site would like to be treated. It hints at acceptable behavior. It sets boundaries, but it does not explain intent, consent, or downstream impact.

That is where developers step in.

The most resilient crawlers today combine correct robots policy interpretation with ethical crawling practices. They identify themselves clearly. They pace requests thoughtfully. They adapt to site behavior instead of forcing throughput. They reassess assumptions when data use evolves.

This approach is not about being cautious for the sake of it. It is about building systems that coexist with the web instead of constantly pushing against it. At scale, that difference matters. Crawlers built with restraint survive longer. They trigger fewer blocks. They draw less scrutiny. And they give teams confidence when questions inevitably come from legal, security, or partners.

If there is one takeaway to hold onto, it is this. Robots.txt is not a rulebook you follow blindly. It is a signal you interpret responsibly. The quality of that interpretation is what separates brittle scraping scripts from durable crawling systems. When developers treat robots policy, ethical crawling, and consent protocols as part of system design, not afterthoughts, everything downstream becomes calmer. More predictable. Easier to defend.

And in the long run, that calm is usually the sign you built it right.

For the official specification and intended behavior of robots.txt, refer to the IETF standard, the Robots Exclusion Protocol (RFC 9309). This is the canonical source developers should rely on when interpreting robots.txt rules, including parsing behavior and limitations.


FAQs

Is robots.txt legally binding for web scraping?

No. Robots.txt is a voluntary protocol, not a legal enforcement mechanism. It signals site preferences, which ethical crawlers are expected to respect.

Does robots.txt scraping allow commercial data use?

Robots.txt only governs access, not usage. Commercial use depends on consent protocols, terms of service, and reasonable expectations of the site owner.

What happens if a crawler ignores robots policy?

Technically, nothing may happen at first, but sites often respond with blocks, legal notices, or infrastructure defenses. Long-term access becomes unstable.

Should developers respect crawl-delay if their crawler does not support it?

Yes. Crawl-delay reflects infrastructure sensitivity. Ethical crawling means adapting request rates even when the directive is advisory.

Can robots.txt rules change over time?

Yes. Robots policies can change at any time. Crawlers should refresh and re-evaluate robots.txt periodically to avoid stale interpretations; RFC 9309 recommends not using a cached copy for more than 24 hours.