**TL;DR**

LLM agents can’t rely on static datasets. They need real-time web data to adapt, reason, and act. But scraping the live web reliably is harder than it sounds—especially at scale. This guide shows how event-driven scraping architectures, message queues, and backpressure-aware systems let you stream structured data into your LLM pipelines.

LLMs don’t live in documents; they live in decisions.

If you’re deploying agents that act, respond, or reason in the real world, you can’t rely on yesterday’s data. The world changes too fast. Products go out of stock. Competitors shift prices. Breaking news rewrites your entire customer conversation. Without real-time data, your agents hallucinate the past.

But getting fresh web data into LLM workflows isn’t as simple as pointing a scraper at a site and hitting refresh. Most scraping systems were designed for batch jobs. They assume static targets, scheduled pulls, and clean HTML. That’s not how the modern web works—and it’s definitely not how real-time LLM pipelines work.

This blog breaks down how to build event-driven, queue-aware scraping architectures that feed LLMs live context—without breaking on load, jitter, or retries. We’ll cover message queues, backpressure, trigger-based crawls, TTLs, and how PromptCloud builds production-grade pipelines for streaming scenarios.

Why LLM Agents Need Real-Time Web Data

Static datasets are great for training, not for acting. If your LLM agent needs to make decisions, perform retrieval-augmented generation (RAG), or complete workflows with dynamic variables (like prices, availability, reviews, news), it needs live data. Not just recent, but current as of this hour. And not just fast, but relevant and complete.

Use case examples:

- AI pricing bot: Can’t adjust your store’s pricing strategy correctly if it only sees last week’s product listings

- Job application agent: Misses new roles that popped up since its last update

- News summarization workflow: Pulls stale headlines if it isn’t event-synced

- Ecommerce scraping + recommendation agent: Suggests out-of-stock items or geo-blocked SKUs

Modern agents that rely on fixed snapshots become unreliable—or even dangerous. They hallucinate based on outdated ground truths. Worse, they may take irreversible actions (send emails, update prices, make purchases) based on bad input.

That’s why LLM pipelines increasingly rely on real-time scraping pipelines—data architectures that stream updated content into the model’s context window, vector store, or input prompt. But the trick isn’t just in scraping faster. It’s in scraping smarter—with triggers, filters, retries, and structured outputs that match the pace of agent decision-making.

For LLM agents monitoring stock availability or tracking pricing shifts, real-time feeds from eCommerce data solutions are essential.

Want structured real-time data for your LLM agents?

If you're evaluating whether to keep scaling DIY infrastructure or move to governed global feeds, this is the conversation to have.

What Is Event-Driven Scraping?

Event-driven scraping flips the model from “scrape on schedule” to “scrape on signal.” Instead of hitting a product page every 6 hours just to see if something changed, an event-driven system listens for change events—like a sitemap update, RSS ping, webhook, API delta, or even an LLM itself triggering a scrape request.

This model is:

- Fresher: You scrape when things actually change, not on a blind timer.

- More efficient: You reduce wasted bandwidth and compute from scraping unchanged pages.

- Agent-compatible: You can build workflows where the LLM calls for data exactly when needed.

Examples of event triggers:

- Product added to a sitemap index

- Job post creation webhook from employer site

- Price change detected via API ping

- Social post hits a keyword threshold

- LLM agent tool call activates a scrape on demand

Event-driven scraping is especially powerful when paired with LLM tool use patterns. Imagine a sales assistant agent saying:

“I can’t find the price on this product page. Let me scrape it now and re-check.”

The scrape is not scheduled—it’s reactive, purposeful, and precise.

This architecture demands more than just scraping code. You need a full system:

Triggers → Queues → Scrapers → QA → Delivery.

And it has to handle real-time loads, backpressure, and structured outputs fast enough to keep the agent from stalling. Event triggers can even originate from the agent itself—see how LLM agents trigger tools and external data fetches using tool calls.

Building Real-Time Pipelines: Core Components

You can’t stream live data to LLMs with a cron job and a CSV. Real-time pipelines require coordination across triggers, queues, scraping logic, and delivery channels.

Here’s a breakdown of the core architecture:

1. Event Triggers

These detect when data changes or an action is needed. Examples:

- Sitemap delta

- Webhook (e.g., “new job posted”)

- RPA event (e.g., inventory update)

- LLM tool call

- RSS, API ping, alerting engine

These triggers activate the scraping workflow in real time—not on a fixed interval.
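A sitemap delta is the easiest of these triggers to illustrate. The sketch below is a minimal, stdlib-only example that assumes you keep the previous sitemap snapshot around. It diffs `<loc>`/`<lastmod>` pairs and returns the URLs worth scraping. The function names are hypothetical, and a production trigger would also need to handle sitemap indexes, gzipped sitemaps, and sites that omit `lastmod`.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text: str) -> dict[str, str]:
    """Map each <loc> URL to its <lastmod> value ("" if the tag is absent)."""
    root = ET.fromstring(xml_text)
    entries = {}
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", default="", namespaces=NS).strip()
        lastmod = url.findtext("sm:lastmod", default="", namespaces=NS).strip()
        if loc:
            entries[loc] = lastmod
    return entries


def sitemap_delta(previous_xml: str, current_xml: str) -> list[str]:
    """URLs that are new, or whose lastmod changed, since the previous snapshot."""
    old = parse_sitemap(previous_xml)
    new = parse_sitemap(current_xml)
    return sorted(loc for loc, mod in new.items() if old.get(loc) != mod)
```

Each returned URL would become one scrape job on the queue. URLs that disappear from the sitemap are not reported here, so delistings would need their own signal.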

2. Scraping Engine

Once triggered, the scraper runs against the target with full support for:

- Mobile and desktop rendering

- Headless browser environments (Playwright, Puppeteer)

- Geo-targeted or authenticated flows

- Retry logic with escalation (proxy rotation, JS fallback)

This layer transforms URLs or DOM nodes into structured data.

3. Queue System (Buffer + Throttle)

Triggers don’t launch scrapes directly. The system pushes scrape jobs to a queue (Kafka, SQS, Redis, RabbitMQ). This:

- Smooths bursts

- Avoids scraper overload

- Enables deduplication and task TTL

- Preserves order for pages with dependencies
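To make the queue’s role concrete, here is an in-memory stand-in. It is not a client for Kafka, SQS, Redis, or RabbitMQ. It assumes a single process and shows three of the properties above: a capacity cap that pushes back instead of growing, dedupe keys, and a per-job TTL checked at dequeue time. `ScrapeQueue` and its methods are hypothetical names, and ordering for dependent pages is not modeled.

```python
from __future__ import annotations

import time
from collections import deque
from dataclasses import dataclass


@dataclass
class ScrapeJob:
    url: str
    dedupe_key: str
    ttl_s: float
    enqueued_at: float


class ScrapeQueue:
    """Hypothetical in-memory FIFO with a capacity cap, dedupe keys, and per-job TTL."""

    def __init__(self, capacity: int, clock=time.monotonic):
        self.capacity = capacity
        self.clock = clock
        self._jobs: deque[ScrapeJob] = deque()
        self._pending_keys: set[str] = set()

    def enqueue(self, url: str, dedupe_key: str, ttl_s: float) -> bool:
        """Return False if the job duplicates a pending one or the queue is full (pushback)."""
        if dedupe_key in self._pending_keys or len(self._jobs) >= self.capacity:
            return False
        self._jobs.append(ScrapeJob(url, dedupe_key, ttl_s, self.clock()))
        self._pending_keys.add(dedupe_key)
        return True

    def dequeue(self) -> ScrapeJob | None:
        """Pop the oldest unexpired job; expired jobs are silently dropped."""
        while self._jobs:
            job = self._jobs.popleft()
            self._pending_keys.discard(job.dedupe_key)
            if self.clock() - job.enqueued_at <= job.ttl_s:
                return job
        return None
```

Returning `False` from `enqueue` is the hook for pushing back to the trigger layer, and dropping expired jobs at dequeue time keeps stale work from ever reaching a scraper.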

4. Output Formatting and Delivery

Scraped data is formatted, enriched, deduplicated, and pushed to:

- JSON/CSV flat files

- Realtime API/webhook

- Vector DB (e.g., Pinecone, Weaviate)

- Streaming dashboards or LLM context windows

Table: Real-Time Pipeline Component Breakdown

| Component | Function | Latency Target | Failure Handling |
|---|---|---|---|
| Trigger Layer | Detects change or intent to scrape | <1s (event fire) | Re-queue or suppress duplicate |
| Queue | Buffer requests, throttle load | <100ms enqueue | Retry with TTL + dedupe key |
| Scraper Engine | Extracts structured fields | <5s per page | Auto-retry, fallback proxies |
| QA & Validation | Checks field presence/structure | ~1s | Reject or reprocess |
| Delivery | Routes to API/DB/vector store | <1s | Retry or dead-letter log |
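The QA & Validation row is the easiest stage to under-build. Below is a minimal sketch of field-level checks, assuming a hypothetical product schema of required/optional fields with expected types, plus one range rule. A record that returns errors would be rejected or sent back for reprocessing rather than delivered.

```python
# Hypothetical per-field rules for a product record: name -> (required, expected type).
PRODUCT_SCHEMA: dict[str, tuple[bool, type]] = {
    "url": (True, str),
    "title": (True, str),
    "price": (True, float),
    "currency": (True, str),
    "in_stock": (True, bool),
    "rating": (False, float),
}


def validate(record: dict) -> list[str]:
    """Return QA error codes; an empty list means the record can be delivered."""
    errors = []
    for name, (required, expected) in PRODUCT_SCHEMA.items():
        value = record.get(name)
        if value is None:
            if required:
                errors.append(f"missing:{name}")
        elif not isinstance(value, expected):
            errors.append(f"type:{name}")
    # Range rule: a non-positive price usually means a parse error, not a real price.
    price = record.get("price")
    if isinstance(price, float) and price <= 0:
        errors.append("range:price")
    return errors
```

Returning codes instead of raising lets the pipeline attach them to a dead-letter entry or a reprocessing job.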

Backpressure and Load Management

Real-time pipelines can crash—not from errors, but from success. If your scraper gets flooded with triggers (e.g., 10K products updated at once), or your LLM requests dozens of live fetches in a second, you’ll hit backpressure—when incoming events exceed processing capacity.

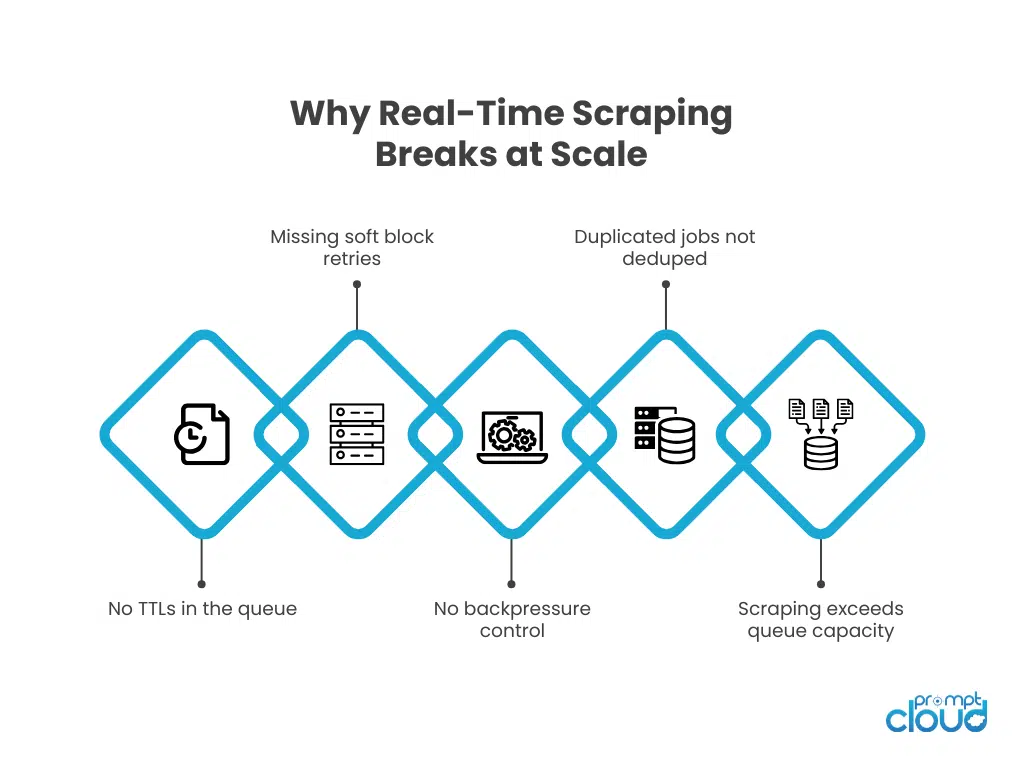

This is where naive setups fail. You get:

- Queue bloat (unprocessed jobs for minutes or hours)

- Stale data (agent uses old context)

- Crashes (scraper CPU maxes out)

- Skipped fields (timeouts cause partial parses)

Smart pipelines control load with:

- Concurrency caps — limit threads per domain/site

- TTL for scrape tasks — drop outdated requests

- Batching logic — coalesce events (e.g., scrape 5 variants at once)

- Pushback to trigger layer — pause new events if queue is saturated

- Per-agent quotas — limit LLM calls to scraping layer via rate controls
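The first of these controls, a per-domain concurrency cap, can be sketched with one `asyncio.Semaphore` per host. This is a single-process illustration under stated assumptions: `DomainLimiter` is a hypothetical name, the fetch is a placeholder `asyncio.sleep`, and a multi-worker deployment would need a shared (e.g. Redis-backed) limiter instead.

```python
import asyncio
from collections import defaultdict
from urllib.parse import urlsplit


class DomainLimiter:
    """Caps in-flight fetches per host using one asyncio.Semaphore per host."""

    def __init__(self, per_domain: int):
        # Semaphores are created lazily, inside the running event loop.
        self._sems = defaultdict(lambda: asyncio.Semaphore(per_domain))
        self.in_flight = defaultdict(int)
        self.peak = defaultdict(int)  # highest concurrency observed per host

    async def fetch(self, url: str) -> str:
        host = urlsplit(url).hostname or ""
        async with self._sems[host]:
            self.in_flight[host] += 1
            self.peak[host] = max(self.peak[host], self.in_flight[host])
            try:
                await asyncio.sleep(0.01)  # placeholder for the real HTTP or headless fetch
                return url
            finally:
                self.in_flight[host] -= 1


async def run_burst(per_domain: int = 2):
    """Simulate a burst: 10 product pages on one host, 3 articles on another."""
    limiter = DomainLimiter(per_domain)
    urls = [f"https://shop.example/p/{i}" for i in range(10)]
    urls += [f"https://news.example/a/{i}" for i in range(3)]
    results = await asyncio.gather(*(limiter.fetch(u) for u in urls))
    return limiter, results
```

All 13 jobs are accepted at once, but each host never sees more than two concurrent requests; the rest wait their turn instead of overloading the scraper or the target site.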

Many of the most common scraping mistakes come from trying to scale without queue TTLs or field validation.

Use Cases for LLMs That Need Streaming Scraping

Not every LLM workflow needs real-time data. But when agents are expected to act, respond, or monitor change, static snapshots fail.

Here are common real-world use cases where streaming scraping pipelines are critical to agent accuracy:

1. Dynamic Competitor Pricing

An LLM-powered pricing agent adjusting SKUs in real time needs to compare against updated Amazon or DTC site prices. Scraping stale price points can lead to overpricing or missed opportunities. Integrating streaming feeds from an Amazon scraper built for market-trend and pricing analysis enables smarter price moves.

2. Live Job Listings for HR Agents

An autonomous recruiter can’t recommend expired listings. Scraping every few hours is too slow. Using event triggers (e.g., webhook on job post) and real-time pipelines ensures your LLM only sees active roles.

Related: see our eCommerce data solutions, which support job and listing updates with location-specific granularity.

3. News Aggregation and Monitoring

Summarization, trend detection, or alerting workflows built on LLMs require scraping live news articles as they break. RSS or feed-based triggers can keep latency below 10 seconds. Real-time matters here because a stale headline is a wrong headline.

4. Social Sentiment & Crisis Detection

LLMs used for brand monitoring or reputation risk need to consume social signals as they happen—not once per day. PromptCloud enables social scraping pipelines triggered by mentions, hashtags, or viral velocity thresholds.

5. Stock Monitoring & Out-of-Stock Alerts

An ecommerce LLM agent recommending products or generating bundles can’t include out-of-stock (OOS) SKUs. Event-driven scrapes triggered by changes in product-page inventory state help agents adjust their selections live.

These use cases depend on:

- Low latency from event → scrape → delivery

- Deduplicated and enriched outputs

- Scalable queue capacity with field-level QA

- TTL enforcement so agents don’t act on old data

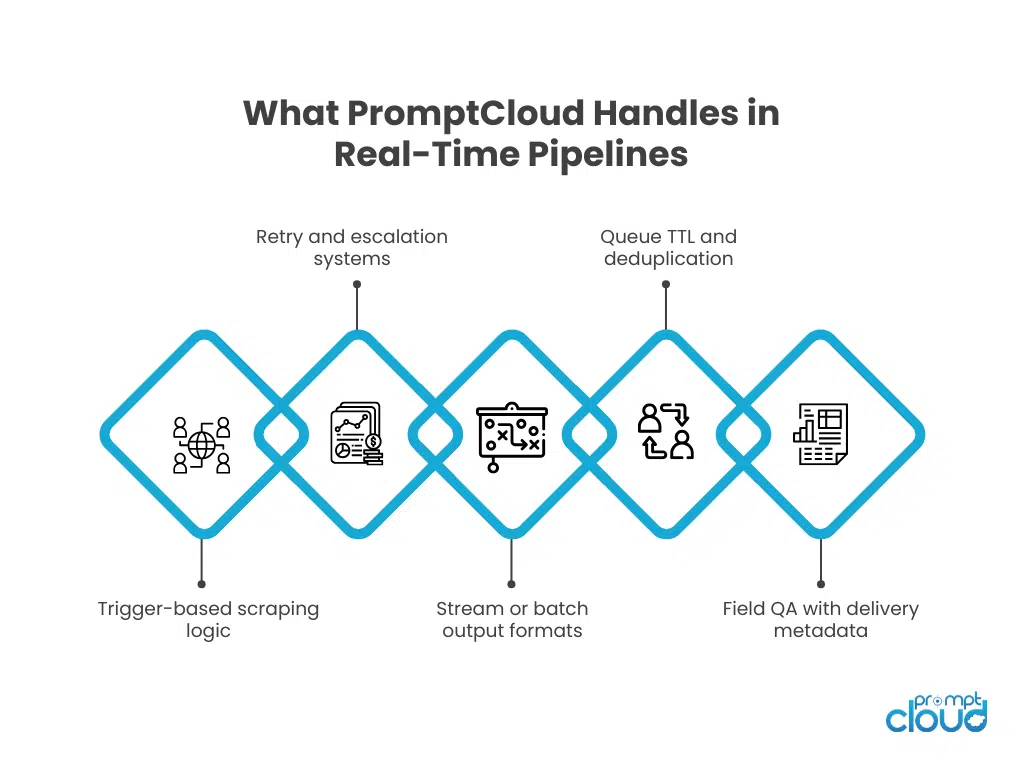

How PromptCloud Handles Event-Driven Pipelines

Real-time scraping isn’t about adding a faster cron job. It’s about building a responsive, resilient, and adaptive pipeline that integrates with both LLMs and production systems. Here’s how PromptCloud does it differently:

Hybrid Proxy + Trigger Logic

We don’t scrape everything blindly. Scrapes are triggered via:

- Sitemaps, feeds, and webhooks

- Agent tool calls or API pings

- Product/page-level change detection

And then routed using geo-targeted, mobile-capable proxy logic to reduce bans and load.

Queue-Aware Retry & Deduplication

PromptCloud’s infrastructure includes:

- TTL for scraping jobs (to discard outdated work)

- Deduplication keys for page hashes or item IDs

- Retry escalation paths (proxy pool upgrade, headless render, fallback source)

- Dead-letter queues for failed or blocked pages

Streaming & Structured Output

Output is formatted and delivered as:

- Real-time API (JSON per event)

- Stream-to-database connectors

- Batches (hourly/daily) in deduped CSV/JSON

- Webhook push for changed fields only
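“Changed fields only” means diffing each new record against the last delivered version. Here is a minimal sketch of that diff and a webhook body built from it. The payload shape (`item_id`, `changed`) is a hypothetical example, not PromptCloud’s actual delivery format.

```python
from __future__ import annotations

from typing import Any

_MISSING = object()  # sentinel so a stored value of None still counts as "present"


def changed_fields(previous: dict[str, Any], current: dict[str, Any]) -> dict[str, Any]:
    """Fields that are new or changed in `current`; fields removed from it map to None."""
    delta = {k: v for k, v in current.items() if previous.get(k, _MISSING) != v}
    for k in previous.keys() - current.keys():
        delta[k] = None
    return delta


def webhook_payload(item_id: str, previous: dict[str, Any], current: dict[str, Any]) -> dict[str, Any] | None:
    """Hypothetical webhook body, or None when nothing changed (so nothing is pushed)."""
    delta = changed_fields(previous, current)
    if not delta:
        return None
    return {"item_id": item_id, "changed": delta}
```

Suppressing empty diffs is what keeps a high-frequency stream from spamming downstream consumers with no-op events.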

Table: PromptCloud vs Other Approaches

| Feature | Cron-Scraper | API Vendor | PromptCloud Event-Driven |
|---|---|---|---|
| Trigger on change | ❌ | ❌ | ✅ |
| Queue with TTL/dedupe | ❌ | Partial | ✅ |
| Real-time retry escalation | ❌ | ❌ | ✅ |
| Field-level QA & enrichment | ❌ | Partial | ✅ |
| Geo/device proxy routing | ❌ | Partial | ✅ |
| Output: API/stream/batch | ❌ | API only | ✅ (multi-mode) |
| Built-in compliance tracking | ❌ | ❌ | ✅ |

2025 Trends: Real-Time Scraping and LLM Ops

As LLM agents evolve from demos to production, their biggest failure point is stale data. In 2025, real-time scraping is no longer “nice to have”—it’s required for autonomous reasoning, RAG, and tool use. Here are the trends shaping how web data pipelines adapt to LLM agents:

1. LLM-Native Triggering

Agents now trigger scrapes in real time based on memory gaps, tool failures, or missing variables. This creates a feedback loop: agent ↔ scraper ↔ vector store ↔ agent. These “scrape-on-demand” calls must integrate with real-time queue logic and deliver responses within seconds to keep the conversation flow natural.

Learn more about how LLM agents trigger tools and external data fetches.

2. Streaming Vector Store Updates

RAG workflows now require not just retrieval—but current retrieval. Ingesting batch data into vector DBs (e.g., Pinecone, Weaviate) is being replaced with real-time stream ingestion pipelines that keep embeddings fresh as soon as source data updates.
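One practical detail of stream ingestion is avoiding needless re-embedding: a page that was re-scraped but did not change should not trigger a new embedding call. The sketch below gates upserts on a content hash. `StreamingIndex` is an in-memory stand-in for a vector DB, and `fake_embed` is a hypothetical placeholder for a real embedding model; neither reflects the Pinecone or Weaviate client APIs.

```python
import hashlib


def fake_embed(text: str) -> list[float]:
    """Hypothetical stand-in for a real embedding model call."""
    digest = hashlib.sha256(text.encode()).digest()
    return [b / 255 for b in digest[:8]]


class StreamingIndex:
    """In-memory stand-in for a vector DB that re-embeds only when content changes."""

    def __init__(self):
        self.vectors: dict[str, list[float]] = {}
        self.hashes: dict[str, str] = {}
        self.embed_calls = 0

    def on_scrape_event(self, doc_id: str, text: str) -> bool:
        """Upsert the document if its content hash changed; return True if re-embedded."""
        content_hash = hashlib.sha256(text.encode()).hexdigest()
        if self.hashes.get(doc_id) == content_hash:
            return False  # unchanged page: keep the existing embedding
        self.vectors[doc_id] = fake_embed(text)
        self.hashes[doc_id] = content_hash
        self.embed_calls += 1
        return True
```

In a real pipeline `on_scrape_event` would be the consumer of the scrape stream, and the upsert line would call your vector store’s SDK.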

3. Latency Contracts per Use Case

A growing number of LLM-driven apps are defining SLOs for data freshness:

- Ecommerce agents: 95% price updates within 2h

- News agents: headlines updated <60s from publish

- Job agents: no expired link stays in listings longer than 6h

These time guarantees require low-latency event-driven scraping with queue prioritization and TTL.
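Checking an SLO like “95% of price updates within 2h” reduces to measuring the lag between a source change and its delivery. Here is a minimal sketch, assuming you can observe when the source changed (e.g. from `lastmod` or a publish timestamp), which is not always possible. Function names are hypothetical.

```python
from datetime import datetime, timedelta


def slo_attainment(events: list[tuple[datetime, datetime]], max_lag: timedelta) -> float:
    """Share of (source_changed_at, delivered_at) pairs delivered within max_lag."""
    if not events:
        return 1.0
    on_time = sum(1 for changed, delivered in events if delivered - changed <= max_lag)
    return on_time / len(events)


def meets_slo(events: list[tuple[datetime, datetime]], max_lag: timedelta, target: float) -> bool:
    """True if at least `target` (e.g. 0.95) of events met the freshness bound."""
    return slo_attainment(events, max_lag) >= target
```

With five updates delivered after 10, 30, 90, 119, and 125 minutes, attainment against a 2h bound is 0.8, which misses a 95% target and should raise the priority of that source in the queue.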

4. Agent Observability Metrics Include Scraper Freshness

Companies now measure not just LLM accuracy, but data latency contribution to errors. If an agent answers incorrectly because it scraped the wrong region, missed a coupon, or used yesterday’s review data—it’s logged as an upstream data pipeline miss. This shifts QA upstream into the scraping layer.

5. Backpressure-Aware Multi-Agent Scraping Orchestration

LLM platforms managing multiple autonomous agents (e.g., multi-role agents for sales, research, operations) now implement orchestration logic that controls access to scraping capacity. This includes:

- Agent-level quotas

- Priority-based queue sorting

- TTL-based task suppression

- Retry-sharing for overlapping requests

Without this orchestration, a single spammy agent can flood the pipeline, degrade output, and hurt user-facing accuracy.
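Two of these controls, agent-level quotas and priority-based queue sorting, fit in a short sketch: a token bucket per agent gates admission, and a heap orders admitted jobs by priority. The class names, the rates, and the rule that a lower number means more urgent are all assumptions for illustration.

```python
import heapq
import itertools
import time


class TokenBucket:
    """Per-agent quota: `rate` requests per second, bursting up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = capacity
        self.updated = clock()

    def try_take(self) -> bool:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Orchestrator:
    """Admits agent scrape requests under quota, then serves them by priority (lower = sooner)."""

    def __init__(self, quotas: dict[str, TokenBucket]):
        self.quotas = quotas
        self._heap = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order within a priority

    def submit(self, agent: str, url: str, priority: int) -> bool:
        if not self.quotas[agent].try_take():
            return False  # over quota: the agent should back off or use cached data
        heapq.heappush(self._heap, (priority, next(self._seq), agent, url))
        return True

    def next_job(self):
        """Return (agent, url) for the most urgent admitted job, or None."""
        return heapq.heappop(self._heap)[2:] if self._heap else None
```

A “spammy” agent exhausts only its own bucket, so another agent’s urgent request is still admitted and served first.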

2025 Playbook: What Competitors Miss in Real‑Time Scraping for LLMs

Most 2025 guides show “how to wire a scraper to Kafka/Celery” or celebrate throughput. The gap is agent‑grade reliability: guarantees, controls, and SLAs that keep LLM decisions correct when streams are hot. Here’s what to add that competitors rarely cover.

Agent‑Aware SLAs, Not Just RPS

Streaming is table stakes; freshness contracts are the differentiator. Define per‑intent SLAs your agents can rely on (e.g., “95% of price updates <120 minutes,” “news headlines <60 seconds”). Use queue priorities + TTL to meet them, not blind concurrency. This goes beyond “stream it to Kafka” into operational guarantees LLMs can depend on. Confluent’s 2025 take underscores the importance of streaming pipelines for timely extraction—extend that to explicit freshness SLOs your agent can read and react to.

Idempotency Keys and Ordering Guarantees

Agents fail when they see duplicates or out‑of‑order deltas. Assign idempotency keys (URL+selector+variant+timestamp bucket) and enforce exactly‑once semantics in your consumer logic. Kafka Streams (and similar frameworks) are designed for this kind of stateful stream processing and recovery, but most scraping posts don’t show how to apply it to web deltas.
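Here is a minimal consumer-side sketch of the idea, using the key recipe above (URL + selector + variant + timestamp bucket, with an assumed 5-minute bucket). It only illustrates idempotent application and stale-delta rejection in memory. It is not Kafka’s exactly-once machinery, which additionally requires committing state and offsets atomically. Names are hypothetical.

```python
import hashlib


def idempotency_key(url: str, selector: str, variant: str, observed_at: float, bucket_s: int = 300) -> str:
    """SHA-256 of URL + selector + variant + timestamp bucket (5-minute buckets assumed)."""
    bucket = int(observed_at // bucket_s)
    return hashlib.sha256(f"{url}|{selector}|{variant}|{bucket}".encode()).hexdigest()


class IdempotentConsumer:
    """Applies each delta at most once and drops deltas older than the last one applied."""

    def __init__(self):
        self.seen: set[str] = set()  # unbounded here; expire old keys in practice
        self.latest: dict[tuple[str, str, str], float] = {}
        self.state: dict[tuple[str, str, str], str] = {}

    def apply(self, url: str, selector: str, variant: str, observed_at: float, value: str) -> bool:
        key = idempotency_key(url, selector, variant, observed_at)
        entity = (url, selector, variant)
        if key in self.seen:
            return False  # redelivery of an event already applied
        if observed_at < self.latest.get(entity, float("-inf")):
            return False  # out-of-order delta: a newer value is already applied
        self.seen.add(key)
        self.latest[entity] = observed_at
        self.state[entity] = value
        return True
```

One trade-off of bucketed keys: two genuinely different values observed within the same bucket collapse to the first. If that matters for a field, add a hash of the value to the key.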

Backpressure With Business Rules

“Slow down when hot” is not enough. Tie backpressure to business value: throttle non‑critical scrapes first; collapse variant requests; coalesce near‑duplicate triggers; push back to the trigger layer when queues exceed a threshold. Treat backpressure as a product policy, not just a tech setting (Solace’s backpressure pattern is a good mental model, extended here for scraping).
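Treating backpressure as policy can be as simple as an admission function that looks at queue depth and business priority, plus a coalescing step for variant triggers. The thresholds, the three priority levels, and the rule that critical jobs bypass the hard limit are hypothetical choices for illustration.

```python
from enum import IntEnum


class Priority(IntEnum):
    CRITICAL = 0  # e.g. an agent is blocked waiting on this field
    NORMAL = 1
    LOW = 2       # e.g. background refresh of long-tail SKUs


def admit(priority: Priority, queue_depth: int, soft_limit: int, hard_limit: int) -> str:
    """Hypothetical policy returning 'accept', 'shed', or 'pushback' (pause the trigger layer)."""
    if queue_depth >= hard_limit:
        return "accept" if priority is Priority.CRITICAL else "pushback"
    if queue_depth >= soft_limit and priority is Priority.LOW:
        return "shed"  # throttle non-critical work first
    return "accept"


def coalesce(triggers: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Collapse (url, variant) triggers into one job per product URL listing its variants."""
    jobs: dict[str, list[str]] = {}
    for url, variant in triggers:
        variants = jobs.setdefault(url, [])
        if variant not in variants:
            variants.append(variant)
    return jobs
```

With `soft_limit=100` and `hard_limit=500`, low-priority refreshes are shed first, normal work is pushed back only past 500, and critical agent requests still get through.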

Event‑Driven is More Than “Not Cron”

Many posts equate event‑driven with replacing cron. In practice you need multi‑origin triggers: sitemaps, webhooks, feed pings, and agent tool calls. Celery/RabbitMQ write-ups touch on dynamic scheduling; take it further with a trigger arbiter that dedupes and prioritizes jobs by change criticality.
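A trigger arbiter can be sketched as a priority heap keyed by URL: duplicate triggers from different origins merge into one job, and a more critical change type upgrades an already-queued URL. The criticality table, origin names, and class names are assumptions for illustration.

```python
import heapq
from dataclasses import dataclass

# Assumed criticality per change type: lower number = scrape sooner.
CRITICALITY = {"price": 0, "stock": 0, "new_item": 1, "content": 2}


@dataclass(frozen=True)
class Trigger:
    url: str
    origin: str       # "sitemap", "webhook", "feed", "agent_tool_call", ...
    change_type: str  # key into CRITICALITY; unknown types rank last


class TriggerArbiter:
    """Merges triggers from many origins into one de-duplicated, prioritized job stream."""

    def __init__(self):
        self._best: dict[str, int] = {}  # url -> most urgent rank currently queued
        self._origins: dict[str, set[str]] = {}
        self._heap: list[tuple[int, int, str]] = []
        self._seq = 0

    def submit(self, t: Trigger) -> None:
        rank = CRITICALITY.get(t.change_type, 3)
        self._origins.setdefault(t.url, set()).add(t.origin)
        if t.url in self._best and self._best[t.url] <= rank:
            return  # already queued at equal or higher urgency
        self._best[t.url] = rank
        heapq.heappush(self._heap, (rank, self._seq, t.url))
        self._seq += 1

    def pop(self):
        """Return (url, sorted origins) for the most urgent pending URL, or None."""
        while self._heap:
            rank, _, url = heapq.heappop(self._heap)
            if self._best.get(url) == rank:  # skip entries superseded by an upgrade
                del self._best[url]
                return url, sorted(self._origins.pop(url))
        return None
```

Recording every origin on the merged job keeps the dedupe auditable: you can see that a sitemap ping and an agent tool call both asked for the same page.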

LLM‑Safe Extraction: Don’t Overfit to Models

A quiet failure mode in 2025: replacing deterministic extractors with LLM parsers. Community reports show quality degradation when you move wholesale to LLM parsing (hallucinated fields, inconsistent structures). Keep DOM‑anchored extraction as the source of truth, then let LLMs enrich/normalize. Use the model for “what does this promo mean,” not “where is the price.”
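Here is a minimal sketch of DOM-anchored extraction with the standard library’s `html.parser`. Each field is tied to an explicit (tag, CSS class) anchor, so the price comes from a known element rather than a model’s guess. The anchors and class names are hypothetical, and the LLM enrichment step that would interpret promo text is not shown.

```python
from __future__ import annotations

from html.parser import HTMLParser


class FieldExtractor(HTMLParser):
    """Deterministic extractor: captures the text of elements matching (tag, class) anchors."""

    def __init__(self, anchors: dict[str, tuple[str, str]]):
        super().__init__()
        self.anchors = anchors  # field name -> (tag, css class)
        self.fields: dict[str, str] = {}
        self._active: str | None = None

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for name, (want_tag, want_class) in self.anchors.items():
            if tag == want_tag and want_class in classes and name not in self.fields:
                self._active = name

    def handle_data(self, data):
        if self._active and data.strip():
            self.fields[self._active] = data.strip()
            self._active = None

    def handle_endtag(self, tag):
        # An anchored element that closes without text yields no field.
        if self._active and tag == self.anchors[self._active][0]:
            self._active = None


def extract(html: str) -> dict:
    parser = FieldExtractor({"price": ("span", "price"), "title": ("h1", "product-title")})
    parser.feed(html)
    parser.close()
    record: dict = dict(parser.fields)
    if "price" in record:
        record["price"] = float(record["price"].replace("$", "").replace(",", ""))
    return record
```

In this split, text such as “Save 10%” would be handed to the model for interpretation, while the price field itself is never overwritten by model output.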

Streaming Schema Evolution

Real sites mutate. Build schema evolution into your stream (additive fields, soft‑deprecations, versioned payloads) so downstream agents never break on change. Stream processors (Kafka Streams/Connect) are built for rolling upgrades and stateful transforms—use that to ship changes without outages.
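On the consumer side, schema evolution usually means a “tolerant reader”: it accepts old and new payload versions, fills additive fields with defaults, maps soft-deprecated fields forward, and ignores unknown fields. The sketch below assumes a hypothetical `schema_version` field and a v1 `availability` string that v2 replaced with an `in_stock` boolean.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ProductV2:
    url: str
    price: float
    in_stock: bool
    currency: str = "USD"            # added in v2 with a default (additive change)
    sale_price: float | None = None  # added in v2, optional


def read_product(payload: dict) -> ProductV2:
    """Tolerant reader: accepts v1 and v2 payloads and ignores fields it does not know."""
    version = payload.get("schema_version", 1)
    if version == 1:
        # v1 used an "availability" string; map the soft-deprecated field onto the v2 boolean.
        in_stock = payload.get("availability") == "in_stock"
    else:
        in_stock = bool(payload["in_stock"])
    return ProductV2(
        url=payload["url"],
        price=float(payload["price"]),
        in_stock=in_stock,
        currency=payload.get("currency", "USD"),
        sale_price=payload.get("sale_price"),
    )
```

Because unknown fields (like a new `seller_rank`) are ignored rather than rejected, the producer can ship additive changes before every consumer has upgraded.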

Evidence for Every Row

For agent auditability, emit evidence metadata with each event: selector path used, screenshot hash (optional), proxy/region, and the validation ladder outcome. Confluent’s guidance on streaming operational data is relevant; your twist is per‑row provenance so an agent (or reviewer) can prove a fact came from the live page, not a stale cache.
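Per-row provenance can travel as a small, typed block next to the data. The sketch below serializes one event with the evidence fields listed above and stores only a hash of the optional screenshot. The `Evidence` field names and the event envelope are assumptions, not a documented format.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass, replace
from datetime import datetime, timezone


@dataclass(frozen=True)
class Evidence:
    selector_path: str
    proxy_region: str
    validation_outcome: str               # e.g. "passed", "passed_after_retry"
    screenshot_sha256: str | None = None  # optional


def emit_event(fields: dict, evidence: Evidence, screenshot_png: bytes | None = None) -> str:
    """Serialize one scraped record together with its provenance metadata."""
    if screenshot_png is not None:
        # Keep only a hash in the event; the image itself can live in object storage.
        evidence = replace(evidence, screenshot_sha256=hashlib.sha256(screenshot_png).hexdigest())
    event = {
        "data": fields,
        "evidence": asdict(evidence),
        "scraped_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(event, sort_keys=True)
```

A reviewer can later re-hash the stored screenshot and compare it with the event to confirm which capture backed a given value.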

Hot/Cold Path for Agent Latency

Split pipeline responses: hot path returns minimal fields the agent needs to proceed (price, availability, timestamp) within seconds; cold path follows with full enrichment (seller graph, coupon semantics). Most tutorials batch everything; agents need typed, partial responses fast to keep conversations fluid.
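The hot/cold split maps naturally onto asyncio: await the fast extraction, return it to the agent, and let enrichment finish in a background task that reports through a callback. Both fetch functions below are placeholders with hard-coded values, and `scrape_for_agent` is a hypothetical name.

```python
import asyncio
from typing import Callable


async def fetch_hot(url: str) -> dict:
    """Placeholder: fast, minimal extraction of the fields an agent needs to proceed."""
    await asyncio.sleep(0.01)
    return {"url": url, "price": 19.99, "in_stock": True, "observed_at": "2025-01-01T12:00:00Z"}


async def enrich_cold(hot: dict) -> dict:
    """Placeholder: slower enrichment such as seller graph and coupon semantics."""
    await asyncio.sleep(0.05)
    return {**hot, "seller": "example-seller", "coupon": None}


async def scrape_for_agent(url: str, on_cold: Callable[[dict], None]):
    """Return hot fields as soon as they exist; deliver the enriched record later via on_cold."""
    hot = await fetch_hot(url)

    async def cold_path() -> None:
        on_cold(await enrich_cold(hot))

    # Keep a reference to the task so it is not garbage-collected before it finishes.
    cold_task = asyncio.create_task(cold_path())
    return hot, cold_task


async def demo():
    enriched: list[dict] = []
    hot, cold_task = await scrape_for_agent("https://shop.example/p/1", enriched.append)
    proceeded_before_enrichment = not enriched  # the agent can act on `hot` right away
    await cold_task
    return hot, proceeded_before_enrichment, enriched
```

The agent’s latency is bounded by the hot path alone; the enriched record can update the vector store or a follow-up message when it lands.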

Operational Playbook: Observability and Fallbacks for Agent Workflows

Real‑time scraping fails quietly unless you instrument it. Treat observability as a product feature the agent can query. Expose a minimal status contract with three booleans and a timestamp: fresh_enough, complete_enough, confident_enough, plus last_verified_at. If any is false, the agent should trigger a retry or route to a fallback skill.
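The status contract above can be computed per record and handed to the agent with the data. In this sketch the required-field list, the maximum age, and the confidence score (assumed to come from your validation ladder) are inputs you would define per use case. The retry-versus-fallback rule in `next_action` is a hypothetical policy.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Status:
    fresh_enough: bool
    complete_enough: bool
    confident_enough: bool
    last_verified_at: datetime


def status_for(record: dict, required: list[str], last_verified_at: datetime,
               max_age: timedelta, confidence: float, min_confidence: float = 0.9,
               now: datetime | None = None) -> Status:
    """Build the three-boolean status contract for one scraped record."""
    now = now or datetime.now(timezone.utc)
    return Status(
        fresh_enough=now - last_verified_at <= max_age,
        complete_enough=all(record.get(f) is not None for f in required),
        confident_enough=confidence >= min_confidence,
        last_verified_at=last_verified_at,
    )


def next_action(s: Status) -> str:
    """Hypothetical policy: proceed if all checks pass; re-scrape stale or incomplete data;
    route low-confidence data to a fallback skill."""
    if s.fresh_enough and s.complete_enough and s.confident_enough:
        return "proceed"
    return "retry" if s.confident_enough else "fallback"
```

Keeping the contract this small makes it cheap to expose as a tool result the agent can branch on.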

Strengthen reliability with four controls:

- Canary Triggers: Run a tiny sentinel set per source and category every few minutes. If selector coverage drops or latency spikes, gate new jobs and flip the source into maintenance mode.

- Shadow Mode Releases: Ship extractor changes behind flags. For 24–48 hours, run old and new extractors in parallel and compare field‑level diffs before fully switching.

- Escalation Trees: Define an ordered sequence (lightweight fetch → headless render → mobile proxy → authenticated flow). Cap the total attempts per URL and record which rung produced the final event.

- Cost Guardrails: Attach a spend ceiling per source, per hour. If the queue threatens the cap, collapse near‑duplicate jobs, widen TTLs on low‑priority events, and downgrade to delta‑only fields.

Finally, publish reason codes with every event (e.g., SOFT_BLOCK, ZIP_REQUIRED, COUPON_PARSED) so agents and humans can debug outcomes without opening raw logs.
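The escalation tree and reason codes combine into one small loop: try each rung in order, stop at the first success, cap the attempts, and keep a reason code per rung on the resulting event. The rung functions below are hypothetical stubs. `SOFT_BLOCK` and `ZIP_REQUIRED` come from the examples above, and `OK` is an assumed success code.

```python
from typing import Callable, Optional

# Each rung takes a URL and returns (payload, reason_code); payload is None on failure.
Rung = Callable[[str], tuple[Optional[dict], str]]


def escalate(url: str, rungs: list[tuple[str, Rung]], max_attempts: int = 4) -> dict:
    """Try rungs in order, cap total attempts, and record which rung produced the event."""
    reasons = []
    for attempt, (name, rung) in enumerate(rungs[:max_attempts], start=1):
        payload, reason = rung(url)
        reasons.append(f"{name}:{reason}")
        if payload is not None:
            return {"url": url, "data": payload, "rung": name, "attempts": attempt, "reason_codes": reasons}
    return {"url": url, "data": None, "rung": None, "attempts": len(reasons), "reason_codes": reasons}


# Hypothetical stubs standing in for real fetch strategies.
def lightweight_fetch(url): return None, "SOFT_BLOCK"
def headless_render(url): return None, "ZIP_REQUIRED"
def mobile_proxy(url): return {"price": 19.99}, "OK"
def authenticated_flow(url): return {"price": 19.99}, "OK"


LADDER = [
    ("lightweight", lightweight_fetch),
    ("headless", headless_render),
    ("mobile_proxy", mobile_proxy),
    ("authenticated", authenticated_flow),
]
```

Here `escalate("https://e.com/p/1", LADDER)` succeeds on the third rung and its event carries `["lightweight:SOFT_BLOCK", "headless:ZIP_REQUIRED", "mobile_proxy:OK"]`, so anyone reading it can see why the cheaper rungs were skipped.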


FAQs

1. What is event-driven scraping, and how is it different from scheduled scraping?

Event-driven scraping is triggered by changes—like a new product, job post, or price update—rather than a fixed time schedule. It’s more responsive and resource-efficient than cron-based scraping, making it ideal for real-time use cases like LLM agents, which rely on fresh data for decision-making.

2. Why do LLM agents need real-time web data?

LLM agents often act on dynamic information—like pricing, availability, or breaking news. If they rely on stale or static data, their decisions become outdated or inaccurate. Real-time web data ensures the agent responds to current conditions, not historical snapshots.

3. What happens when a real-time scraping queue gets overloaded?

Without proper backpressure management, overloaded queues can delay scraping, return stale data, or crash services. A production-grade pipeline uses TTL (time-to-live), priority queues, deduplication, and load-based throttling to avoid failure during spikes.

4. Can PromptCloud deliver real-time scraped data directly to LLMs or vector databases?

Yes. PromptCloud supports delivery via JSON APIs, streaming queues, or direct ingestion into vector databases. This enables retrieval-augmented generation (RAG) and LLM pipelines that stay synced with live web data.

5. How does PromptCloud ensure quality in real-time scraping?

PromptCloud enforces field-level QA, retry logic, deduplication, and delivery formatting. It also attaches metadata like crawl time, proxy region, and scrape method—ensuring the LLM knows where and when the data came from.