**TL;DR**

Proxy rotation looks simple until you run it across regions, time zones, and hostile sites. What breaks systems is not scale alone, but how latency, routing, and failure compound when you ignore operational reality.

What is Proxy Rotation?

Everyone loves talking about proxy rotation when it works. Requests flow. Blocks stay low. Dashboards look calm. You ship data on time. Then traffic ramps up. Or a retailer changes its bot rules. Or one region starts timing out at 3 a.m. And suddenly rotation becomes the least of your problems.

What usually fails first is not IPs. It is an assumption. Assumptions that all regions behave the same. That latency averages out. That a large IP pool automatically means resilience. That retries are harmless. That uptime is just a contract line item.

In real crawling systems, proxy rotation sits inside a messier reality. Requests travel long distances. ISPs behave differently by country. Target sites fingerprint patterns you did not know you were emitting. And failures do not arrive cleanly. They cascade. You see it when scrape times double without obvious errors. When success rates look fine but freshness quietly slips. When your system technically stays up, but output quality drops enough to break downstream models. This is where most blogs stop being useful. They explain what proxy rotation is. Or list types of proxies. Or talk about scale in abstract terms.

That is not what this is.

This is about how teams actually keep global crawling systems stable. How they think about multi region crawling, latency control, IP pool management, geo-routing decisions, and uptime SLAs as one system. Not features. Not vendors. A system. Because once you run this in production, you learn fast. Rotation is the easy part. Keeping it reliable is the work.

If you want to understand how web scraping can be implemented responsibly and at scale for your industry, you can schedule a Demo to discuss your use case and data requirements.

Why proxy rotation breaks once you leave a single region

Most proxy rotation setups are designed in a lab. One crawler. One geography. A clean list of IPs. Even traffic. Predictable targets. That illusion lasts exactly until you expand. The moment you introduce multi region crawling, the failure modes change. Not gradually. All at once.

Latency stops being a number and starts being behavior. A request routed through Singapore behaves very differently from one routed through Frankfurt, even if both technically succeed. TLS handshakes take longer. TCP retries stack up. Pages render slower. Your scraper waits. And waits quietly. Rotation logic does not see this as a failure. It just sees slower responses. So it keeps rotating. Now you are rotating perfectly. Across proxies that are all technically alive. And still bleeding time.

This is where most teams misdiagnose the problem. They assume the IP pool is too small. Or the target site is blocking harder. So they add more IPs. More retries. More parallelism. That makes things worse. Because the real issue is not scarcity. It is a mismatch.

Regions that should never have handled that traffic are now in the rotation pool. Latency-sensitive endpoints are being hit from faraway geographies. Retry storms amplify slow paths instead of escaping them. And the system looks busy while doing less useful work. You see this pattern a lot in product scraping pipelines. Price monitoring jobs that used to finish in minutes now drag on. Freshness SLAs start slipping. Downstream analytics still run, but on stale data.

The same mistake shows up in social and platform scraping. Teams trying to scale Facebook or marketplace crawlers often rotate aggressively without controlling where requests land, which is why pipelines that start simple end up fragile very quickly. The mechanics of scraping matter far more than most teams expect, especially once volume rises, as anyone who has tried to productionize scripts like a Python Facebook scraper learns the hard way.

Proxy rotation breaks not because it is flawed. It breaks because it is treated as a feature, not infrastructure. And infrastructure only works when it is context-aware.

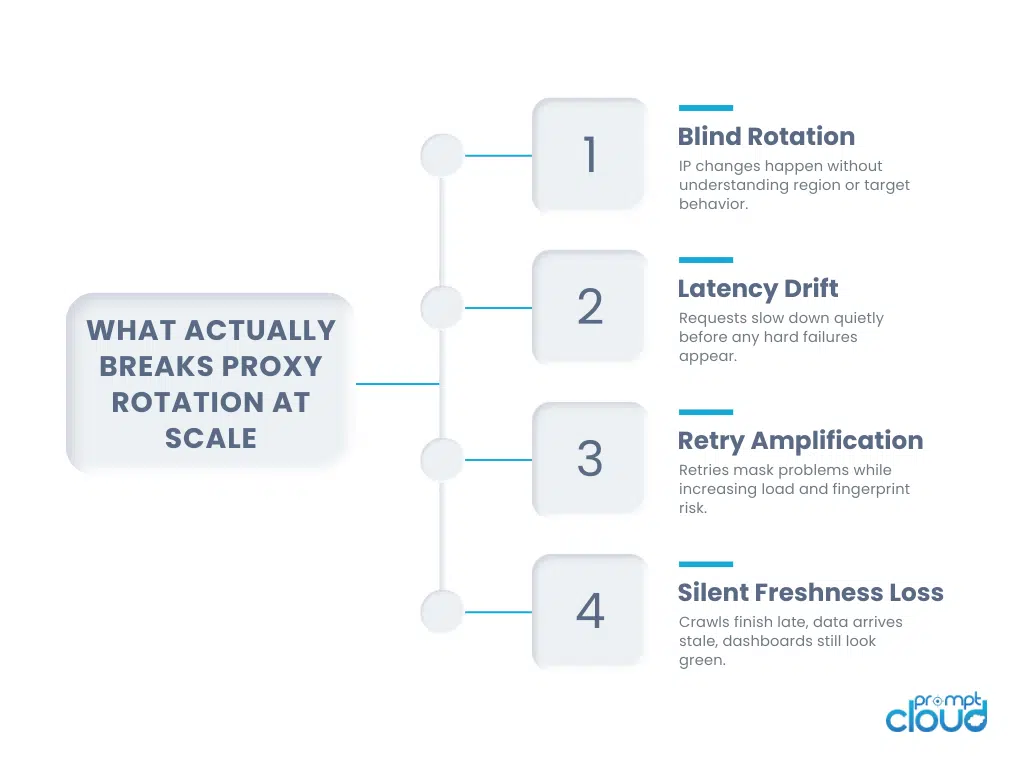

Figure 1: Common failure patterns that cause proxy rotation systems to degrade silently at scale.

Latency is not a metric, it is a routing problem

Teams love averages. Average response time. Average success rate. Average retries per request. None of these tell you where the system is hurting. Latency in global crawling is almost always uneven. Certain regions spike first. Certain targets amplify delays. Certain IP ranges slow down long before they fail outright.

If you treat latency as a global metric, you miss the signal. What actually matters is how latency behaves per region, per target, per proxy cluster. And more importantly, what your system does when latency creeps up without obvious errors. This is where geo-routing decisions quietly decide uptime. A healthy system does not rotate blindly. It routes intentionally.

Requests for a European retailer should not bounce through Asia just because an IP is available. A site known to serve dynamic content slower in certain regions should not be hit from there at all. These are not edge cases. They are daily operational decisions. Good geo-routing is boring. It quietly sends traffic where it belongs and pulls it away when behavior degrades. It treats latency spikes as early warnings, not noise.Bad geo-routing hides behind retries.

And retries are dangerous. They make failures look like delays. They consume proxy capacity. They increase fingerprint consistency. And they push your system closer to invisible collapse. The irony is that teams often invest heavily in proxy providers but very little in routing logic. They assume the provider will solve it. They will not. Providers give you reach. You still have to decide how to use it.

IP pool management is not about size

There is a myth that refuses to die. Bigger IP pools equal better scraping. Sometimes that is true. Often it is not. IP pool management is about shape, not volume.

A pool with ten thousand IPs all behaving the same way is less resilient than a smaller pool segmented by region, ASN behavior, and historical performance. What matters is how quickly your system can learn which IPs are good for which jobs and stop using them where they are not.

Most systems do not do this. They rotate uniformly. Or worse, randomly. That means bad IPs get just as much traffic as good ones. Slow regions stay in circulation. And failure signals are diluted across the pool. A healthier approach treats IPs as living assets. You score them. You age them. You cool them down. You retire them quietly before they become toxic.

This is especially important in workloads like price monitoring, where timing matters more than raw throughput. A slow scrape is often worse than a failed one because it arrives too late to be useful. That is why teams that care about real pricing signals spend as much time thinking about crawl timing and proxy behavior as they do about extraction logic, something that becomes obvious once you try to plot a serious price monitoring strategy at scale.

IP pool management done well feels invisible. The system adapts without alerts. Bad paths disappear. Good paths get more traffic. Engineers only notice when things do not break anymore. Done poorly, it becomes a constant firefight. Blocks. Timeouts. Manual exclusions. Panic-driven pool expansion. One scales. The other survives week to week.

Failover is not a switch, it is a sequence

Most teams think of failover as a binary event. Primary proxy fails. Secondary proxy kicks in. Problem solved. That model only works in diagrams.In real crawling systems, failures do not arrive cleanly. They smear. Latency creeps up. Error rates wobble. Some requests succeed. Others stall. The system stays technically alive while becoming operationally useless. If your failover logic waits for hard failure, you are already late.

Good failover is staged.

First, you slow traffic to the problem path. Not stop. Slow. You reduce concurrency. You give the system room to breathe and observe. Then you divert new requests elsewhere. Not everything. Just the traffic that does not strictly need that region or IP range. Then you cool off the path entirely. Let connections expire. Let fingerprints decay. Let upstream systems reset.

Only if things continue to degrade do you fully cut over. This sequencing matters because abrupt failover often causes secondary failures. Traffic floods new regions. Latency spikes there too. You think you escaped the fire, but you carried it with you.

A calm system bleeds traffic gradually. A panicked one stampedes. Failover logic also needs memory. If a region failed an hour ago, it should not be trusted again immediately just because it responds once. Recovery needs confidence, not hope. This is where many DIY systems collapse. They lack historical context. Every request is treated as a fresh event. The system forgets what just happened. At scale, forgetting is expensive.

Uptime SLAs do not mean what you think they mean

Everyone loves a high uptime number. 99.9 percent. 99.95 percent. Five nines if you are feeling bold. None of these numbers tell you if your data was usable.

A crawler can be up while delivering partial pages. Or stale data. Or silently skipping regions that matter most to your business. From an SLA perspective, everything looks fine. From a decision-making perspective, it is broken. This gap is why uptime SLAs must be tied to output, not infrastructure. What matters is not whether the crawler responded. It is whether the crawl finished on time. Whether coverage held. Whether freshness targets were met. Whether retries stayed within reason.

Teams that treat uptime as an infrastructure metric end up gaming themselves. They celebrate green dashboards while downstream teams complain about data quality. Teams that treat uptime as an operational promise design differently. They track job completion time. They track per-region success curves. They track how often failover was needed and whether it actually helped.

They also know when to stop crawling.

This is an uncomfortable truth. Sometimes the correct move is to pause, not push through. Especially when the cost of bad data exceeds the cost of missing data. This thinking shows up clearly in mature real-time data systems. When pipelines are built to support time-sensitive use cases, uptime is defined by reliability of insight, not raw availability. That philosophy underpins why real-time data architectures prioritize controlled degradation over blind persistence.

Why multi region crawling amplifies every small mistake

Single-region systems are forgiving. Multi region systems are not. Every small inefficiency multiplies. Every bad assumption gets exposed. Every shortcut becomes a bottleneck somewhere else. If your proxy rotation logic is sloppy, regions amplify it. If your retry strategy is aggressive, latency compounds. If your IP pool management is naive, bad actors poison the system faster.

This is why global crawling feels fragile to teams that approach it incrementally. They bolt on regions one by one, hoping complexity stays linear. It never does. The jump from one region to five is not five times harder. It is an order of magnitude harder because coordination becomes a problem. Routing. Scoring. Memory. Recovery.

You need systems that see across regions, not inside them. Systems that understand patterns, not just events. This is also where compliance and behavior variance creep in. Regions differ not just in network performance but in how sites respond to automation. What works quietly in one geography can trigger defenses in another. Teams that ignore this end up debugging ghosts.

What stable proxy rotation systems actually optimize for

Here is the quiet shift that separates stable systems from brittle ones. They stop optimizing for success rate. They optimize for predictability. A predictable system finishes jobs on time. A predictable system knows which regions will be slow before they fail. A predictable system sheds load gracefully instead of collapsing under it. High success rates are a byproduct, not the goal.

To get there, teams make tradeoffs that look conservative from the outside. They limit concurrency. They constrain routing. They retire IPs early. They accept slightly lower raw throughput in exchange for consistency. And they win. Because consistency compounds. It keeps freshness intact. It keeps downstream models clean. It keeps engineers asleep at night. This is the mindset that separates scraping scripts from data infrastructure.

Figure 2: Characteristics of production-grade proxy rotation systems built for predictability, not raw throughput.

What teams get wrong when they design proxy rotation logic

Most proxy rotation logic looks fine on paper. Rotate IPs. Retry failures. Spread traffic. Avoid blocks. The problem is that paper logic assumes clean inputs and isolated failures. Production systems never give you that. What teams usually build first is stateless rotation. Every request is treated the same. Every proxy is equally trusted. Every failure is handled locally, without memory. That works right up to the point where volume, regions, and timing collide. Then the system starts lying to you.

Failures show up as slowness. Slowness shows up as retries. Retries inflate load. Load triggers throttling. Throttling looks like blocks. Blocks trigger more rotation. And suddenly the crawler is busy, expensive, and unreliable all at once.

The core mistake is confusing movement with progress. Rotation becomes constant motion without learning. Mature systems flip that logic. They do less, but they do it deliberately. They remember what just happened. They treat routing, retries, and IP choice as connected decisions, not independent knobs.

Here is how those two approaches usually differ in practice.

| Naive proxy rotation setup | Production-grade proxy rotation |

| Rotates IPs uniformly | Routes traffic based on region and target behavior |

| Retries aggressively on any failure | Differentiates between latency, soft failure, and hard blocks |

| Treats all regions as interchangeable | Constrains regions intentionally using geo-routing |

| Measures success rate globally | Measures completion time and freshness per region |

| Keeps IPs until they fail | Scores and retires IPs before they become toxic |

| Fails over abruptly | Bleeds traffic gradually using staged failover |

| Optimizes for throughput | Optimizes for predictability |

None of the right-hand column ideas are exotic. They are just operationally honest. They accept that global crawling is noisy. That targets behave inconsistently. That networks degrade before they break. And that forcing traffic through a bad path rarely fixes anything.

This is also where teams underestimate the downstream impact of bad proxy logic. When data arrives late or partially, analytics pipelines still run. Models still train. Dashboards still update. The damage is subtle, not explosive.

That is why proxy rotation decisions quietly affect things far beyond crawling. Pricing signals get skewed. Trend detection lags. Alerts fire too late. The cost is not a failed scrape, it is a wrong decision. If you have ever wondered why otherwise solid data systems feel unreliable at scale, this is usually where the crack starts.

Where this leaves teams running real crawling systems

Once you have lived with proxy rotation in production for a while, your priorities change. You stop asking how many IPs you have. You start asking how the system behaves when things get weird. Because things always get weird. Traffic patterns shift without warning. A site that was friendly yesterday slows down today without blocking you. One region starts behaving differently after a minor change on the target side. Another looks fine on dashboards but quietly drops critical pages.

None of these show up as clean failures. They show up as friction. And friction is what breaks systems over time. Teams that struggle with proxy rotation usually struggle because they treat it as a layer you configure once and forget. They assume scale is a volume problem. Add more IPs. Add more retries. Add more regions.

Teams that get it right treat proxy rotation as a living system. Something that learns. Something that remembers. Something that degrades gracefully instead of collapsing suddenly. They understand that multi region crawling is less about reach and more about restraint. About knowing where not to send traffic. About accepting that sometimes the fastest path is the most fragile one. About trading a bit of speed for a lot of stability.

They also understand that uptime SLAs are not comfort blankets. They are promises. And promises only matter if the data that arrives is usable, timely, and complete enough to support decisions. That mindset usually comes from scars. From nights spent chasing latency spikes that never quite crossed alert thresholds. From pipelines that technically stayed up while silently missing coverage. From downstream teams asking uncomfortable questions that dashboards could not answer.

If you are early in this journey, it is tempting to optimize for impressive numbers. Big pools. High throughput. Clean success rates. If you are further along, you know better.

You optimize for predictability. For controlled failure. For systems that explain themselves when things go wrong instead of hiding behind retries. Proxy rotation sits at the center of that choice. Done casually, it becomes the source of endless instability. Done deliberately, it becomes invisible. And invisible infrastructure is usually the best kind. Most teams do not need more proxies. They need fewer assumptions.

If you want to go deeper

- Scraping Facebook at scale isn’t about scripts – How proxy behavior and routing decisions surface once volume increases.

- Why unreliable data pipelines create hidden risk – A broader look at how infrastructure blind spots affect downstream decisions.

- Price monitoring breaks when freshness slips – Why crawl timing and proxy stability matter more than raw scrape volume.

- What real-time data systems optimize for – How stability and predictability shape modern crawling architectures.

Latency-based routing (AWS Route 53) explains how routing decisions pick the lowest-latency region, which is the mental model behind geo-routing in multi-region crawling.

If you want to understand how web scraping can be implemented responsibly and at scale for your industry, you can schedule a Demo to discuss your use case and data requirements.

FAQs

How is proxy rotation different when crawling across multiple regions?

Multi-region crawling adds latency variance and behavioral differences between regions. Proxy rotation must account for where traffic is routed, not just how often IPs change.

Why do high success rates still result in stale or incomplete data?

Because retries and slow paths can delay crawls without failing them. The system looks healthy while freshness quietly degrades.

Does adding more proxies always improve reliability?

No. Larger IP pools without routing and scoring logic often amplify instability. Reliability comes from controlling how IPs are used, not how many exist.

How should teams detect when a region is degrading before it fails?

By tracking latency and completion time per region, not just error rates. Latency spikes usually appear long before hard failures.

What should an uptime SLA actually measure for crawling systems?

It should measure job completion and freshness, not just crawler availability. Data that arrives late is effectively downtime.