**TL;DR** Your crawler might fetch tens of thousands of records in a run. Should you save that as CSV or JSON? Go with CSV when the output is tidy and tabular: one row per record, the same fields every time, quick to open and query. Choose JSON when the data isn’t flat—think products with variants, posts with replies, or records with extra attributes—because it preserves hierarchy without forcing awkward joins or one‑off workarounds.

This post compares JSON vs CSV (and XML), shows real examples from scraping projects, and helps you choose the right format based on your data structure, tools, and goals.

Why Format Matters in Web Crawling

So your web crawler works. It fetches data, avoids blocks, respects rules… you’ve won the technical battle. But here’s the real question: What format is your data delivered in? And — is that format helping or holding you back?

Most teams default to CSV or JSON without thinking twice. Some still cling to XML from legacy systems. But the truth is: Your data format defines what you can do with that data.

- Want to analyze user threads, nested product specs, or category trees? → CSV will flatten and frustrate you.

- Need to bulk load clean, uniform rows into a spreadsheet or database? → JSON will make your life unnecessarily complicated.

And if you’re working with scraped data at scale — say, millions of rows from ecommerce listings, job boards, or product reviews — the wrong choice can slow you down, inflate costs, or break automation.

In this blog, we’ll break down:

- The core differences between JSON, CSV, and XML

- When to use each one in your web scraping pipeline

- Real-world examples from crawling projects

- Tips for developers, analysts, and data teams on format handling

By the end, you’ll know exactly which format to pick — not just technically, but strategically.

JSON, CSV, and XML — What They Are & How They Differ

CSV — Comma-Separated Values

CSV (Comma‑Separated Values) is the classic rows‑and‑columns file.

Example:

```csv
product_name,price,stock
T-shirt,19.99,In Stock
Jeans,49.99,Out of Stock
```

Great for:

- Exporting scraped tables

- Flat data (products, prices, rankings)

- Use in Excel, Google Sheets, SQL

Not ideal for:

- Nested structures (e.g., reviews inside products)

- Multi-level relationships

- Maintaining rich metadata

JSON — JavaScript Object Notation

JSON is a lightweight data-interchange format.

Example:

```json
{
  "product_name": "T-shirt",
  "price": 19.99,
  "stock": "In Stock",
  "variants": [
    { "color": "Blackish Green", "size": "Medium" },
    { "color": "Whitish Grey", "size": "Large" }
  ]
}
```

Great for:

- Crawling sites with nested data (like ecommerce variants, user reviews, specs)

- APIs, NoSQL, and modern web integrations

- Feeding data into applications or machine learning models

Not ideal for:

- Excel or relational databases (requires flattening)

- Quick human review (harder to scan visually)
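To make "preserves hierarchy" concrete, here is a minimal Python sketch that parses the T-shirt record above (with straight quotes, as valid JSON requires) using the standard-library `json` module and walks its `variants` array. Nothing beyond the example record is assumed.

```python
import json

# The T-shirt record from the example above, as a JSON string.
raw = """
{
  "product_name": "T-shirt",
  "price": 19.99,
  "stock": "In Stock",
  "variants": [
    {"color": "Blackish Green", "size": "Medium"},
    {"color": "Whitish Grey", "size": "Large"}
  ]
}
"""

product = json.loads(raw)

# Types survive parsing: price is a float, variants is a list of dicts.
print(type(product["price"]).__name__)  # float

# The one-to-many relationship is navigated directly, with no join.
for variant in product["variants"]:
    print(product["product_name"], variant["color"], variant["size"])
```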

XML — eXtensible Markup Language

XML was widely used in enterprise systems and early web apps.

Example:

```xml
<product>
  <name>T-shirt</name>
  <price>19.99</price>
  <stock>In Stock</stock>
</product>
```

Great for:

- Legacy integration

- Data feeds in publishing, finance, legal

- Systems that still rely on SOAP or WSDL

Not ideal for:

- Modern web crawling

- Developer-friendliness (more code, more parsing)
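"More code, more parsing" in practice: a sketch using Python's standard-library `xml.etree.ElementTree` on the `<product>` example above. Unlike `json.loads`, you map elements to fields yourself, and every value arrives as text, so numeric fields need explicit conversion.

```python
import xml.etree.ElementTree as ET

# The <product> example from above.
raw = """
<product>
  <name>T-shirt</name>
  <price>19.99</price>
  <stock>In Stock</stock>
</product>
"""

root = ET.fromstring(raw.strip())

# Each child element becomes a key; element text is always a string.
record = {child.tag: child.text for child in root}

# Types must be restored by hand, based on knowledge the XML doesn't carry.
record["price"] = float(record["price"])
print(record)  # {'name': 'T-shirt', 'price': 19.99, 'stock': 'In Stock'}
```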

Quick Summary:

| Format | Best For | Weak At |
|---|---|---|
| CSV | Flat, spreadsheet-friendly data | Complex or nested relationships |
| JSON | Structured, nested data | Human readability, spreadsheets |
| XML | Legacy enterprise systems | Verbose, outdated, harder to parse |

Real-World Use Cases — JSON vs CSV (and XML)

Let’s stop talking theory and get practical. Here’s how these formats show up in real web scraping projects — and why the right choice depends on what your data actually looks like.

eCommerce Data Feeds

You’re scraping products across multiple categories — and each one has different attributes:

- Shoes have size + color

- Electronics have specs + warranty

- Furniture might include dimensions + shipping fees

Trying to jam that into a CSV means blank columns, hacks, or multi-sheet spreadsheets. Use JSON to preserve structure and allow your team to query data cleanly.
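A small sketch of where the blank columns come from. The three product records are hypothetical, one per category above. A CSV needs a single fixed header, so it has to be the union of every attribute seen; each row then pads the columns it doesn't use.

```python
import csv
import io
import json

# Hypothetical scraped records: each category carries different attributes.
products = [
    {"name": "Runner 2", "category": "shoes", "size": "42", "color": "Blue"},
    {"name": "Soundbar X", "category": "electronics", "specs": "2.1 channel", "warranty": "2 years"},
    {"name": "Oak Desk", "category": "furniture", "dimensions": "120x60x75 cm", "shipping_fee": "35.00"},
]

# CSV: build one header from every key seen, in first-seen order.
header = []
for p in products:
    for key in p:
        if key not in header:
            header.append(key)

buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=header, restval="")  # missing keys become blank cells
writer.writeheader()
writer.writerows(products)

blank_cells = sum(1 for p in products for col in header if col not in p)
print(len(header), "columns,", blank_cells, "blank cells")  # 8 columns, 12 blank cells

# JSON: each record stays exactly as scraped, with no padding.
print(json.dumps(products[2]))
```

With three categories the waste is modest; with hundreds of categories the header grows with every new attribute, which is the point at which JSON becomes the easier format to query.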

Related read: Optimizing E-commerce with Data Scraping: Pricing, Products, and Consumer Sentiment.

Job Listings Aggregation

You’re scraping job boards and company sites. Each listing includes:

- Role title, company, salary

- Multiple requirements, benefits, and application links

- Locations with flexible/hybrid tagging

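Flat CSVs struggle with list fields (there's no native way to store several requirements or links in one cell) and with multi-line descriptions, which break any tool that doesn't handle quoted newlines. JSON keeps the data intact and works better with matching algorithms.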
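A sketch of what a CSV export of one listing actually needs, assuming a hypothetical job record. The list is joined with a delimiter (`|` here, an arbitrary convention every consumer must know), and the description is quoted by the `csv` module because it contains a newline and a comma. Getting the record back requires a real CSV parser plus a re-split.

```python
import csv
import io

# Hypothetical job listing with a list field and a multi-line description.
job = {
    "title": "Data Engineer",
    "company": "Acme",
    "requirements": ["Python", "SQL", "Airflow"],
    "description": "Build pipelines.\nWork with analysts, data scientists.",
}

# CSV has no list type, so join the list with an agreed delimiter.
row = dict(job, requirements="|".join(job["requirements"]))

buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=list(row))
writer.writeheader()
writer.writerow(row)  # the description gets quoted: it contains a newline and a comma

# Reading back works only with a CSV-aware parser, and the list must be re-split.
buf.seek(0)
parsed = next(csv.DictReader(buf))
parsed["requirements"] = parsed["requirements"].split("|")
print(parsed == job)  # True
```

The round trip succeeds here, but only because both sides agreed on the delimiter and used a quoting-aware parser. A requirement that itself contains `|` would silently split in two.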

Pricing Intelligence Projects

You’re collecting prices across competitors or SKUs — and you need quick comparisons, fast updates, and clean reporting.

In this case, your data is:

- Uniform

- Easily mapped to rows

- Used in dashboards or spreadsheets

Use CSV. It’s fast, clean, and efficient — especially if you’re pushing to Excel or Google Sheets daily.

News Feed Scraping

You’re scraping articles across publishers and aggregators. If your pipeline feeds into a legacy CMS, ad platform, or media system, there’s still a good chance XML is required.

But for modern content analysis or sentiment monitoring? JSON is the better long-term bet.

Automotive Listings

Need to scrape used car marketplaces? You’re dealing with:

- Multiple sellers per listing

- Price changes

- Location data

- Nested image galleries

Here, JSON is a no-brainer — it mirrors the structure of the listings themselves.

Quick tip:

- If the pages you're scraping are deeply nested (variants, reviews, or specs inside a single listing), ask for JSON delivery.

- If the target site’s structure is flat and clean (like comparison tables), CSV will serve you better.

Want reliable, structured Temu data without worrying about scraper breakage or noisy signals? Talk to our team and see how PromptCloud delivers production-ready ecommerce intelligence at scale.

Let’s talk.

JSON vs CSV Summary

| Factor | CSV | JSON |
|---|---|---|
| Structure | Flat, tabular rows and columns | Nested objects and arrays |
| Human-readability | Easy (open in Excel, Sheets) | Moderate (requires viewer or parser) |
| Nested Data Support | Poor (requires flattening) | Excellent |
| File Size | Lightweight | Heavier (but compressible) |
| Usability in Python | Easy with pandas or the csv module | Easy with the json module and flexible parsers |
| Compatibility | High (databases, spreadsheets, reports) | High (APIs, NoSQL, app pipelines) |
| Error Sensitivity | High (breaks on unescaped commas, quotes, newlines) | Moderate (more resilient to data structure shifts) |
| Data Mapping | One-to-one fields only | One-to-one and one-to-many (nested groups) |
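The "breaks on commas" row mostly bites hand-rolled parsing. A minimal illustration with a hypothetical row: a conforming CSV parser handles the quoted comma, while a naive `split(",")` shifts every later column.

```python
import csv
import io

# One CSV row whose product name contains a comma, so it is quoted.
line = '"Shirt, slim fit",19.99,In Stock\r\n'

naive = line.strip().split(",")               # splits inside the quoted name
proper = next(csv.reader(io.StringIO(line)))  # respects CSV quoting rules

print(len(naive), naive)    # 4 ['"Shirt', ' slim fit"', '19.99', 'In Stock']
print(len(proper), proper)  # 3 ['Shirt, slim fit', '19.99', 'In Stock']
```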

Related read: Structured Data Extraction for Better Analytics Outcomes.

What this means for your crawler

If you’re scraping something like:

- A job board

- A real estate listing

- A complex product page

- A forum thread with replies

→ JSON is your friend. It’s built to reflect real-world hierarchy.

If you’re scraping:

- A comparison table

- A price tracker

- A stock screener

- Basic, clean listings

→ CSV is cleaner and easier to plug into spreadsheets and dashboards.

How Output Format Impacts Storage, Analysis & Delivery

Your web crawler is only as useful as the data it feeds into your systems. And your choice between JSON or CSV doesn’t just affect file size or parsing — it impacts how fast you can analyze data, where you can send it, and what tools can consume it downstream.

Not all data formats are created equal — and your choice shapes what’s possible with your pipeline. For a general overview, here’s how file formats work across computing systems.

Storage Considerations

- CSV files are lightweight and compress well.

- JSON files are bulkier, since every record repeats its field names, but they retain more structure.
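A quick way to check the size difference on your own data: serialize the same records both ways and compare raw and gzipped byte counts. The 10,000 records here are hypothetical and uniform; real ratios depend on field names and values, so treat this as a benchmark template rather than a result.

```python
import csv
import gzip
import io
import json

# Hypothetical uniform records, e.g. one price observation per SKU.
rows = [{"sku": f"SKU-{i:05d}", "price": f"{i % 100 + 0.99:.2f}", "stock": "In Stock"}
        for i in range(10_000)]

# CSV writes the field names once, in the header row.
buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=["sku", "price", "stock"])
writer.writeheader()
writer.writerows(rows)
csv_bytes = buf.getvalue().encode("utf-8")

# JSON repeats every key inside every record.
json_bytes = json.dumps(rows).encode("utf-8")

for label, data in [("csv", csv_bytes), ("json", json_bytes)]:
    print(f"{label}: {len(data):,} bytes raw, {len(gzip.compress(data)):,} bytes gzipped")
```

Because repeated keys compress very well, the gap between the two formats typically narrows a lot after gzip.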

Key notes:

- If you’re sending scraped data to analysts for slicing/dicing in spreadsheets — CSV is lightweight and faster.

- If you’re feeding it to a NoSQL database or an app — JSON is more powerful.

Analysis & Reporting

- CSV plugs easily into BI dashboards, Excel, or even Google Sheets.

- JSON requires pre-processing or flattening for relational tools — but works great for document-level analysis and nested data mining.
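One common way to flatten nested JSON for a relational tool is to "explode" the array: one row per variant, with the parent fields repeated on each row. A sketch using the T-shirt record from earlier in this post; `explode_variants` is a hypothetical helper name.

```python
# The nested T-shirt record from earlier in this post.
product = {
    "product_name": "T-shirt",
    "price": 19.99,
    "stock": "In Stock",
    "variants": [
        {"color": "Blackish Green", "size": "Medium"},
        {"color": "Whitish Grey", "size": "Large"},
    ],
}

def explode_variants(record):
    """Return one flat row per variant, repeating the parent fields on each row.

    A record with no variants still yields one row, so products aren't dropped.
    """
    parent = {k: v for k, v in record.items() if k != "variants"}
    return [{**parent, **{f"variant_{k}": v for k, v in variant.items()}}
            for variant in record.get("variants") or [{}]]

for row in explode_variants(product):
    print(row)
```

The trade-off is duplication: the product-level price and stock now appear once per variant, so any aggregate over products has to de-duplicate first.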

Use case tip: If you're scraping user reviews with sub-ratings (e.g., product → multiple reviews → per-aspect ratings), JSON keeps those relationships intact. CSV would require separate tables linked by IDs and a messy join to reassemble them.
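What "a messy join table" looks like in practice: a sketch that normalizes one hypothetical nested product into flat tables linked by foreign keys. Each level of nesting becomes its own table, and downstream consumers must join them back together.

```python
# Hypothetical nested review data: product -> reviews -> per-aspect sub-ratings.
product = {
    "product_id": "P-100",
    "name": "Trail Jacket",
    "reviews": [
        {"review_id": "R-1", "text": "Warm, runs small.", "ratings": {"quality": 5, "fit": 3}},
        {"review_id": "R-2", "text": "Great value.", "ratings": {"quality": 4, "fit": 4}},
    ],
}

# Flat equivalent: one table per nesting level, linked by IDs.
products_table = [{"product_id": product["product_id"], "name": product["name"]}]
reviews_table = []
ratings_table = []
for review in product["reviews"]:
    reviews_table.append({"review_id": review["review_id"],
                          "product_id": product["product_id"],  # foreign key to products
                          "text": review["text"]})
    for aspect, score in review["ratings"].items():
        ratings_table.append({"review_id": review["review_id"],  # foreign key to reviews
                              "aspect": aspect, "score": score})

print(len(products_table), len(reviews_table), len(ratings_table))  # 1 2 4
```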

Related read: From Web Scraping to Dashboard: Building a Data Pipeline That Works.

Delivery & Integration

- Need to feed a 3rd-party system (ERP, ML model, search engine)? → JSON is almost always preferred.

- Need to deliver simple daily product feeds to retailers or channel partners? → CSV is the standard (and usually required).

Common Mistakes When Choosing Format (and How to Avoid Them)

Mistake #1: Defaulting to CSV for Everything

CSV is familiar. But when your crawler pulls nested data — like product reviews with replies, job posts with locations, or real estate listings with multiple agents — trying to fit it all into flat rows gets messy fast.

Fix: If your data has layers, relationships, or optional fields → use JSON.

Mistake #2: Using JSON When You Only Need a Table

If your output is a clean list of SKUs, prices, or rankings — and it’s going straight into Excel — JSON just adds friction.

Fix: Don’t overcomplicate it. For flat, one-to-one fields → CSV is faster, lighter, easier.

Mistake #3: Ignoring What Your Destination Needs

Too many teams format for the crawler, not the consumer of the data.

Fix:

- If the end user is a BI analyst → CSV wins.

- If it’s an ML model or backend system → JSON fits better.

Mistake #4: Not Considering File Size and Frequency

A daily crawl of 100,000 rows in JSON format? That adds up — fast.

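Fix: Benchmark both formats on a real crawl. Compress JSON (e.g., gzip) if size becomes a problem. Split delivery when files outgrow what the consumer's tool can open (e.g., an Excel worksheet caps at 1,048,576 rows; CSV itself has no row limit).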
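Splitting a large CSV delivery so each part opens in Excel can be a few lines. A sketch, assuming rows arrive as lists and parts are returned as in-memory strings (a real pipeline would write each part to its own file). The header is repeated in every part, so the data budget per part is one row less than the limit.

```python
import csv
import io

EXCEL_MAX_ROWS = 1_048_576  # rows per Excel worksheet, header row included

def split_csv(header, rows, max_rows=EXCEL_MAX_ROWS):
    """Split rows into CSV strings that each fit within max_rows, header included."""
    per_part = max_rows - 1  # leave room for the header row in each part
    parts = []
    for start in range(0, len(rows), per_part):
        buf = io.StringIO()
        writer = csv.writer(buf)
        writer.writerow(header)
        writer.writerows(rows[start:start + per_part])
        parts.append(buf.getvalue())
    return parts

# Small demo: 10 data rows with a tiny limit of 4 rows per file (3 data rows each).
demo_rows = [[f"SKU-{i}", 9.99] for i in range(10)]
parts = split_csv(["sku", "price"], demo_rows, max_rows=4)
print(len(parts))  # 4
```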

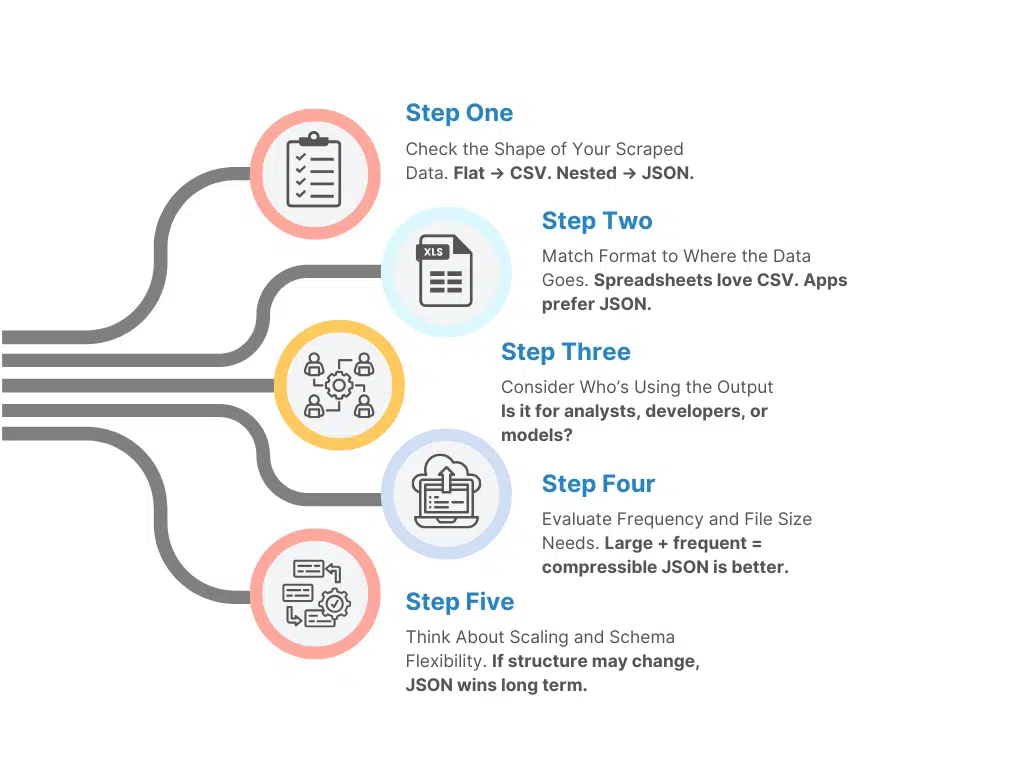

How to Choose the Right Format for Your Web Scraped Data?

Trends in Web Scraping Data Formats — What’s Changing?

If you’re still thinking of CSV and JSON as “just output formats,” you’re missing how much the expectations around scraped data delivery are evolving.

In 2025, it’s not just about getting data — it’s about getting it in a format that:

- Works instantly with your systems

- Minimizes preprocessing

- Feeds directly into real-time analysis or automation

- Complies with security, privacy, and data governance standards

Let’s look at what’s shifting — and why it matters.

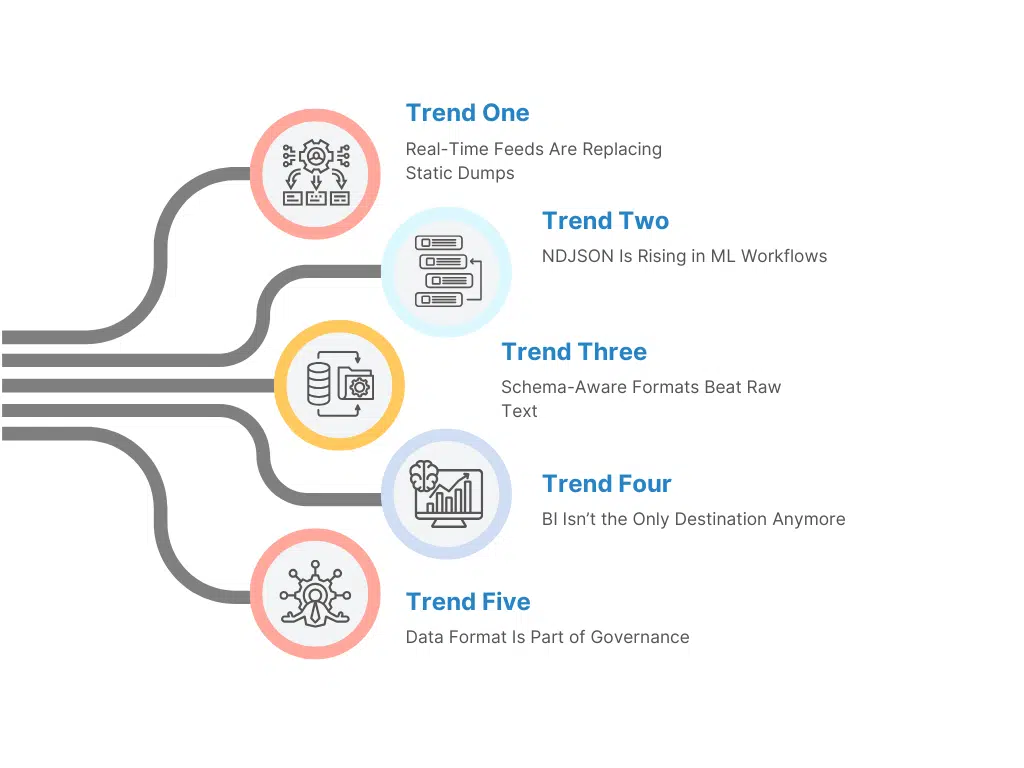

Trend 1: Structured Streaming Over Static Dumps

Gone are the days when teams were okay with downloading a CSV once a week and “figuring it out.” Now, more clients want real-time or near-real-time streaming of data — delivered via:

- REST APIs

- Webhooks

- Kafka or pub/sub streams

In this world, CSV doesn't hold up well. JSON, or newline-delimited JSON (NDJSON: one JSON object per line), is the preferred format: lightweight, flexible, and easy to push and parse one record at a time.
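A minimal NDJSON sketch using only the standard library. The price events are hypothetical, and an in-memory buffer stands in for whatever transport you use (webhook body, topic message, file); no specific streaming client is assumed. Each line is a complete JSON document, so a consumer can parse records as they arrive instead of waiting for a closing `]`.

```python
import io
import json

# Hypothetical price events; the second carries an extra optional field.
events = [
    {"sku": "SKU-1", "price": 19.99, "ts": "2025-01-01T10:00:00Z"},
    {"sku": "SKU-2", "price": 49.99, "ts": "2025-01-01T10:00:05Z", "promo": True},
]

# Write: one compact JSON object per line, no enclosing array.
stream = io.StringIO()
for event in events:
    stream.write(json.dumps(event, separators=(",", ":")) + "\n")

# Read: every line parses on its own, so records can be processed incrementally.
stream.seek(0)
for line in stream:
    if line.strip():
        record = json.loads(line)
        print(record["sku"], record["price"], record.get("promo", False))
```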

If you’re building anything “live” — market monitors, price trackers, sentiment dashboards — streaming + JSON is the new normal.

Trend 2: Flat Files Are Being Replaced by Schema-Aware Formats

CSV is schema-less. That’s its blessing and curse.

While it’s fast to create, it’s fragile:

- Column order matters

- Missing or extra fields break imports

- Escaping and encoding issues (stray commas, quotes, newlines, mismatched character sets) still ruin pipelines
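Some of that fragility can be reduced on the consuming side. A sketch, assuming a hypothetical set of required columns: read CSV by header name rather than position, so a reordered export still works, and fail loudly when a column disappears instead of silently shifting values.

```python
import csv
import io

REQUIRED = ("sku", "price", "stock")  # hypothetical expected columns

def read_by_header(text):
    """Read CSV by column name and raise if a required column is missing."""
    reader = csv.DictReader(io.StringIO(text))
    present = reader.fieldnames or []
    missing = [col for col in REQUIRED if col not in present]
    if missing:
        raise ValueError(f"missing columns: {missing}")
    return [{col: row[col] for col in REQUIRED} for row in reader]

# Same data with columns in a different order: reading by name still works.
print(read_by_header("price,sku,stock\n19.99,SKU-1,In Stock\n"))

# A dropped column is caught at the header instead of corrupting rows.
try:
    read_by_header("sku,price\nSKU-1,19.99\n")
except ValueError as err:
    print(err)  # missing columns: ['stock']
```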

Newer clients — especially enterprise buyers — want their crawled data to come with embedded schema validation or schema versioning.

Solutions like:

- JSON Schema

- Avro

- Protobuf

…are being adopted to validate format integrity, reduce bugs, and future-proof integrations. This trend leans heavily toward JSON and structured binary formats — not CSV.
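To show the idea behind schema validation without pulling in a library, here is a deliberately small, hand-rolled check. It is not JSON Schema, Avro, or Protobuf; the schema format (field → expected types, required flag) and field names are hypothetical. It reports missing required fields, wrong types, and unexpected fields.

```python
# Hypothetical, hand-rolled schema: field name -> (expected type(s), required?).
# Production pipelines would typically use JSON Schema, Avro, or Protobuf tooling.
SCHEMA = {
    "sku": (str, True),
    "price": ((int, float), True),
    "currency": (str, True),
    "discount_pct": ((int, float), False),
}

def validate(record):
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    for field, (types, required) in SCHEMA.items():
        if field not in record:
            if required:
                problems.append(f"missing required field '{field}'")
        elif not isinstance(record[field], types):
            problems.append(f"'{field}' has type {type(record[field]).__name__}")
    for field in sorted(record.keys() - SCHEMA.keys()):
        problems.append(f"unexpected field '{field}'")
    return problems

print(validate({"sku": "SKU-1", "price": 19.99, "currency": "USD"}))  # []
print(validate({"sku": "SKU-2", "price": "19.99", "colour": "red"}))
```

The second record fails three ways: a string price, a missing currency, and an unexpected field. Catching that at delivery time is much cheaper than finding it in a broken dashboard.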

Trend 3: Unified Data Feeds Across Sources

As scraping scales, teams often gather data from:

- Product listings

- Reviews

- Pricing

- Competitor sites

- News aggregators

- Social forums

But they don’t want five separate files.

They want a unified data model delivered consistently — with optional customizations — so every new data feed plugs into the same architecture.

This is harder to do with CSV (unless every source is rigidly flattened). JSON’s flexibility allows you to merge, extend, and update data feeds without breaking things downstream.
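One way to get a unified model across sources is a shared envelope: a few common keys every record carries, plus a source-specific `payload` that is free to differ. A sketch; the envelope field names (`source`, `record_type`, `schema_version`, `scraped_at`, `payload`) and the example sources are hypothetical conventions, not a standard.

```python
from datetime import datetime, timezone

def to_envelope(source, record_type, payload, schema_version="1.0"):
    """Wrap a source-specific payload in a shared envelope (hypothetical field names)."""
    return {
        "source": source,
        "record_type": record_type,
        "schema_version": schema_version,
        "scraped_at": datetime.now(timezone.utc).isoformat(),
        "payload": payload,  # source-specific fields live here and may differ freely
    }

feed = [
    to_envelope("shop-a.example", "product", {"sku": "A-1", "price": 19.99}),
    to_envelope("shop-a.example", "review", {"sku": "A-1", "rating": 4, "text": "Fits well"}),
    to_envelope("news.example", "article", {"headline": "Prices rise", "tags": ["retail"]}),
]

# Consumers route on the shared keys without knowing every payload shape up front.
for item in feed:
    print(item["source"], item["record_type"], sorted(item["payload"]))
```

Adding a new source then means adding a new `record_type`, not a new file layout.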

Trend 4: Machine Learning Is Now a Key Consumer

A growing percentage of scraped data is going straight into ML pipelines — for:

- Recommendation systems

- Competitor intelligence

- Sentiment analysis

- Predictive pricing models

- LLM fine-tuning

ML teams don’t want spreadsheet-friendly CSVs. They want:

- Token-ready, structured JSON

- NDJSON for large-scale ingestion

- Parquet for large, columnar sets (especially on cloud platforms)

If your output format still assumes “some analyst will open this in Excel,” you’re already behind.

Bottom Line

JSON is no longer just a developer-friendly format. It’s becoming the default for:

- Scale

- Flexibility

- Streaming

- Automation

- ML-readiness

- Data quality enforcement

CSV is still useful — but no longer the default. It’s ideal for narrow, tabular tasks — but fragile for anything complex, nested, or evolving.

5 Emerging Trends in Scraped Data Delivery Formats

What’s Next for Crawler Output (2025+)

1. Data Contracts for Scraped Feeds

Expect to hear “data contracts” far more often. In plain English: you define the shape of your crawler’s output (fields, types, optional vs required) and version it—just like an API. When something changes on the source site, your team doesn’t learn about it from a broken dashboard; they see a schema version bump and a short changelog. JSON plays well here (JSON Schema, Avro). CSV can fit too, but you’ll need discipline around column order and null handling.
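The "schema version bump and a short changelog" can be generated mechanically. A sketch with two hypothetical contract versions, where each field has a type name and a required flag; the contract format and the change markers (`+`, `-`, `~`) are illustrative choices.

```python
# Two hypothetical versions of a data contract.
contract_v1 = {"version": "1.0", "fields": {
    "sku": {"type": "string", "required": True},
    "price": {"type": "number", "required": True},
    "rating": {"type": "number", "required": False},
}}
contract_v2 = {"version": "2.0", "fields": {
    "sku": {"type": "string", "required": True},
    "price": {"type": "string", "required": True},  # e.g. the source now shows "$19.99"
    "seller": {"type": "string", "required": False},
}}

def changelog(old, new):
    """List field-level changes between two contract versions."""
    old_f, new_f = old["fields"], new["fields"]
    lines = [f"{old['version']} -> {new['version']}"]
    for name in sorted(new_f.keys() - old_f.keys()):
        lines.append(f"+ added '{name}' ({new_f[name]['type']})")
    for name in sorted(old_f.keys() - new_f.keys()):
        lines.append(f"- removed '{name}'")
    for name in sorted(old_f.keys() & new_f.keys()):
        if old_f[name] != new_f[name]:
            lines.append(f"~ changed '{name}': {old_f[name]} -> {new_f[name]}")
    return lines

print("\n".join(changelog(contract_v1, contract_v2)))
```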

2. Delta-Friendly Delivery

Full refreshes are expensive. Many teams are moving to delta delivery: send only what changed since the last run—new rows, updates, deletes—with a small event type field. It lowers storage, speeds ingestion, and makes “what changed?” questions easy to answer. JSON (or NDJSON) is a natural fit because it can carry a little more context with each record.
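A minimal delta sketch: compare two snapshots keyed by a record ID and emit insert, update, and delete events, ready to be written as NDJSON. The snapshots and the `id` key are hypothetical; a real pipeline would also need a stable ID from the source site.

```python
import json

def compute_delta(previous, current, key="id"):
    """Compare two snapshots (lists of dicts) and emit insert/update/delete events."""
    prev = {r[key]: r for r in previous}
    curr = {r[key]: r for r in current}
    events = []
    for k, rec in curr.items():
        if k not in prev:
            events.append({"event": "insert", **rec})
        elif rec != prev[k]:
            events.append({"event": "update", **rec})
    for k in prev:  # iterate in the previous snapshot's order for stable output
        if k not in curr:
            events.append({"event": "delete", key: k})
    return events

yesterday = [{"id": "SKU-1", "price": 19.99}, {"id": "SKU-2", "price": 49.99}, {"id": "SKU-3", "price": 5.00}]
today = [{"id": "SKU-1", "price": 19.99}, {"id": "SKU-2", "price": 44.99}, {"id": "SKU-4", "price": 12.50}]

# Unchanged SKU-1 produces no event; only what changed is shipped.
for event in compute_delta(yesterday, today):
    print(json.dumps(event))
```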

3. Privacy by Construction

Privacy isn’t just legalese; it’s design. Pipelines are increasingly shipping hashed IDs, masked emails, and redacted handles by default. You keep the signal (e.g., the same reviewer returns with a new complaint) without moving sensitive strings around. CSV can carry these fields, sure—but JSON lets you attach privacy metadata (how it was hashed, what was removed) right next to the value.
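A sketch of pseudonymizing a reviewer handle while keeping the "same reviewer returns" signal. It uses a keyed hash (HMAC-SHA256 from the standard library); that choice, the secret key, and the `_privacy` metadata layout are all assumptions for illustration. A plain unkeyed hash of a handle can often be reversed by hashing a list of likely handles; the key prevents that as long as it stays secret.

```python
import hashlib
import hmac

# Hypothetical secret; in practice load it from a secrets manager, never from code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

def pseudonymize(value):
    """Keyed hash: the same input always maps to the same token, and without the
    key the token can't be reversed by hashing a list of guesses."""
    return hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def redact_review(review):
    return {
        "reviewer_id": pseudonymize(review["reviewer_handle"]),
        "rating": review["rating"],
        "text": review["text"],
        # Privacy metadata travels next to the value it describes (hypothetical layout).
        "_privacy": {"reviewer_id": {"method": "hmac-sha256", "removed": ["reviewer_handle"]}},
    }

a = redact_review({"reviewer_handle": "@jane_doe", "rating": 2, "text": "Broke again."})
b = redact_review({"reviewer_handle": "@jane_doe", "rating": 1, "text": "Still broken."})
print(a["reviewer_id"] == b["reviewer_id"])  # True: same reviewer, no handle shipped
```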

4. Parquet for the Lake, JSON for the Pipe

A practical pattern we’re seeing: JSON or NDJSON for ingestion, Parquet for storage/analytics. You capture rich, nested signals during collection (JSON), then convert to Parquet in your lake (S3/Delta/BigQuery) for cheap queries and long-term retention. CSV still shines for the last mile—quick analyst slices, one-off exports, partner handoffs—but the lake prefers columnar.

5. Model-First Consumers

More scrapes go straight into models—recommendation systems, anomaly alerts, LLM retrieval, you name it. These consumers favor consistent keys and minimal surprises. JSON with a published schema is easier to trust. You may still emit a weekly CSV for the business team, but your “source of truth” will feel more like a contracted stream than a spreadsheet.

Bonus Section: One Format Doesn’t Always Fit All

Here’s something we don’t talk about enough: you don’t have to pick just one format.

A growing number of teams now run dual or multi-format delivery pipelines; not because they’re indecisive, but because different consumers have different needs.

For example:

- Analysts want a CSV file they can open in Excel today.

- Developers want JSON to feed into dashboards or microservices.

- Data science teams want NDJSON or JSONL to push directly into ML models or labelers.

Rather than force everyone to adapt to one format, modern scraping pipelines often deliver:

- CSV for business reporting

- JSON for structured data apps

- NDJSON for scalable ingestion

- Parquet or Feather for long-term archival or analytics

This is easier than it sounds — especially if the crawler outputs JSON by default. From there, clean conversion scripts (or built-in support from providers like PromptCloud) can generate alternate formats on a schedule.
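A sketch of such a conversion script, assuming the crawler emits a list of JSON records. It writes NDJSON as-is and produces a CSV by flattening nested objects into dotted column names. Serializing lists as JSON strings inside a cell is one choice among several; another common option is one row per list item.

```python
import csv
import io
import json

def flatten(record, prefix=""):
    """Flatten nested dicts into dotted keys; lists are serialized as JSON strings."""
    flat = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        elif isinstance(value, list):
            flat[name] = json.dumps(value)
        else:
            flat[name] = value
    return flat

def to_ndjson(records):
    return "".join(json.dumps(r) + "\n" for r in records)

def to_csv(records):
    rows = [flatten(r) for r in records]
    header = list(dict.fromkeys(k for row in rows for k in row))  # union, first-seen order
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=header, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

# Hypothetical crawler output.
records = [
    {"sku": "A-1", "price": {"amount": 19.99, "currency": "USD"}, "tags": ["sale"]},
    {"sku": "B-2", "price": {"amount": 49.99, "currency": "USD"}},
]
print(to_csv(records))
print(to_ndjson(records))
```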

Bonus use case: LLM-Ready Datasets

As teams begin fine-tuning large language models (LLMs) or training smaller domain models, the way data is formatted matters more than ever.

- Well-structured JSON makes it easy to align examples, metadata, and output labels

- CSV might be used to store instruction/output pairs or curated evaluation sets

- NDJSON is often used in fine-tuning pipelines that stream examples line by line

If LLMs are part of your future roadmap, building your scraper to deliver format-ready datasets today gives you a head start tomorrow.


FAQs

1. Can I get both JSON and CSV formats from my web scraper?

Yes. PromptCloud delivers data in both formats, depending on what you need. Many clients start with JSON and request a parallel CSV version for internal tools.

2. Is JSON always better for nested data?

Pretty much. JSON is designed to represent nested relationships naturally (think: reviews, product variants, metadata). It keeps those relationships intact—CSV flattens them awkwardly.

3. Will Excel handle large JSON files?

Not easily. JSON is best parsed with programming tools (Python, JavaScript, etc.). For Excel users, CSV is simpler and faster to open, scan, and pivot.

4. Can I convert between CSV and JSON?

Technically yes, but context matters. CSV → JSON is mechanically easy, but it can't restore nesting that was flattened away when the CSV was produced, and every value comes back as text until you convert types. JSON → CSV can get messy if you don't flatten nested fields carefully.
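A short illustration of the CSV → JSON caveat, using a hypothetical one-row file: the conversion runs fine, but CSV carries no types, so numbers and booleans arrive as strings until you restore them with knowledge the file doesn't contain.

```python
import csv
import io
import json

csv_text = "product_name,price,in_stock\nT-shirt,19.99,true\n"

rows = list(csv.DictReader(io.StringIO(csv_text)))
print(json.dumps(rows))
# [{"product_name": "T-shirt", "price": "19.99", "in_stock": "true"}]

# Types have to be restored explicitly, per column.
typed = [{**r, "price": float(r["price"]), "in_stock": r["in_stock"] == "true"} for r in rows]
print(json.dumps(typed))
# [{"product_name": "T-shirt", "price": 19.99, "in_stock": true}]
```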

5. What’s the best format for long-term storage?

For structured, clean tabular data: compressed CSV works great. For dynamic, schema-flexible, or machine-readable archives: JSON wins for portability and flexibility.