With tremendous advances in computer hardware and the abundant availability of big data, artificial intelligence is rapidly moving from the world of science fiction into our everyday lives. AI is not a new topic at all: the first AI program, Logic Theorist, was created as early as 1955. However, early computer systems lacked the capability to run advanced AI algorithms. Processing power is becoming less of a concern now, as modern computers have come a long way in this regard.

Tech giants like Google have built complex AI algorithms into handheld devices such as smartphones. So far, these technologies have helped us save time and improve our productivity by leaps and bounds.

Lately, however, artificial intelligence has taken on increasingly negative connotations, especially after Elon Musk and Mark Zuckerberg publicly clashed over whether or not AI is going to kill us all.

Musk, the chief of SpaceX and Tesla, has long worried about the dangers of an AI-driven future. In 2014, he even went so far as to call AI the “biggest existential threat” to humanity.

In a recent Facebook Live broadcast, Zuckerberg criticized such doomsday scenarios as negative and said he found them “pretty irresponsible.” Musk responded with a tweet: “I’ve talked to Mark about this. His understanding of the subject is limited.”

The two tech leaders clearly disagree about what artificial intelligence means for humanity, and the debate may never be settled, since both sides make valid points. Their public statements may also be, in part, attempts to protect their personal brands. Still, the question remains: is AI really a threat to humanity?

What exactly is AI?

While science fiction often portrays AI as robots with humanlike physical characteristics, artificial intelligence can encompass anything from IBM’s Watson to Google’s search algorithms to autonomous weapons. Its applications can be beneficial, harmful, or outright destructive, which is one reason industry experts disagree so sharply about AI.

Today’s artificial intelligence is known as narrow AI because it can perform only specific tasks, such as voice or facial recognition or driving a car, and cannot match the general intelligence of humans. As a result, these systems remain under human control and are no more dangerous than the people who control them.

However, researchers are still working on creating artificial general intelligence (AGI, or strong AI). While narrow AI may beat humans at whatever specific task it was designed for, AGI would outperform humans at most things, including learning.

This is where things get a little blurry. Will an AI that’s as intellectually capable as humans side with us or turn against us? If such a machine had access to all the data it wanted, the consequences would be even harder to predict.

How dangerous is AGI?

Even a superintelligent AI is unlikely to show human emotions such as love or hate; these require more than intelligence, and we haven’t yet figured out how humans develop them. So there is little reason to expect an AI to go rogue and wreak havoc out of malice. When anticipating the dangers of AI, we can typically consider two scenarios:

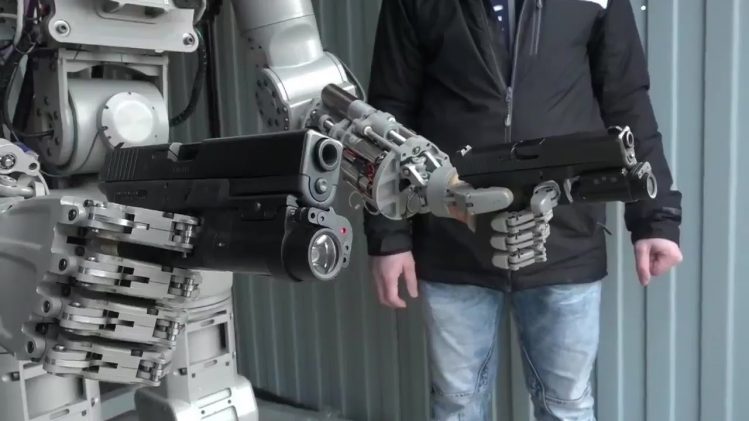

- AI is designed to do something destructive

- AI is designed to do something constructive, but it chooses a destructive method to achieve its goal

AI is designed to do something destructive

War is an evil that humans aren’t likely to give up, at least in the near future. As with most technologies, AI will almost certainly be used to design more effective weapons. An autonomous weapon is an AI system programmed to destroy and kill. If such systems fell into the wrong hands, they could cause mass destruction. A weapon like this would likely be built so that it cannot simply be turned off like your TV, to keep an enemy from disabling it, which also makes it harder for its own operators to stop. That would make such weapons extremely dangerous, capable of causing mass casualties. Systems of this kind are achievable even with today’s narrow AI, and the risk will grow as the technology improves.

AI is designed to do something constructive, but it chooses a destructive route to achieve its goal

This situation is plausible if the AI’s goals aren’t perfectly aligned with ours, in which case it may choose a route that isn’t favorable to us. For example, if we entrust a superintelligent AI with building a house, it may do a great job, but not necessarily in a way that’s best for the environment. It will pursue the task by whatever methods it judges most effective, and it could treat any attempt to stop it as a threat to be dealt with. Here, the AI does its job well, but in a very literal manner and with undesirable side effects.

Aligning the goals is the key

The real concern about artificial intelligence is not malice but competence. A powerful AI system will be extraordinarily good at achieving its goals, by whatever means. If those goals aren’t exactly aligned with ours, we have a problem. Aligning an AI’s goals with those of humans is therefore the biggest challenge in this regard; without that alignment, the very power of the AI could easily become a threat. If, on the other hand, intelligent machines care about the same things we do, their superintelligence could augment the human race.