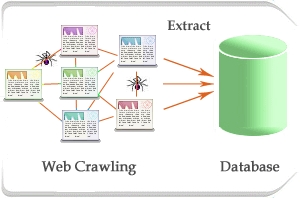

Web Crawlers came into existence in the early 90’s and since then they have been helping almost everyone directly or indirectly, making sense of the massive piles of unrelated data accumulated over the years on the internet. There are numerous web crawlers available to us today with varying degrees of usability, and we can pick and choose according to whichever crawler matches our criteria for data requirement the best. No wonder there is a huge market of data crawling with newer web crawlers popping up every day, but only a few makes a mark in the data industry. Reason?

Because however easy web crawling might sound in theory (crawling a webpage, following page links from one page to another and crawling the next page and so forth), creating an efficient web site crawler is an equally difficult job. With ever-expanding data divided in different formats, multiple codes and languages and various categories, interconnected in no particular order, webcrawler development is an ever-evolving process. Here are a few pointers on qualities of a good web crawler to know in order to get ahead in the game of qualitative web crawling solutions.

1. Architecture

Speed and efficiency are two basic requirements in any data crawler before it is let out on the internet. The architectural design of the webpage crawler programs or auto bots comes into the picture. Like any fully functional organization to work smoothly, a hierarchy or smooth architecture is required between different job roles, similarly web crawlers require a well-defined architecture to function flawlessly. Web crawlers should thus follow the gearman model with supervisor sub crawlers and multiple worker crawlers.

Each supervisor crawler would manage the worker crawlers working on different levels of the same link and thus help speed up the data crawling process per link. Apart from the speed, a safe and reliable web crawling system is also desirable to prevent any loss of data retrieved by the supervisor crawlers. Therefore, it becomes mandatory to create a backup storage support system for all supervisor crawlers and not depend on a single point of data management and crawl the web efficiently in a reliable manner.

2. Intelligent Recrawling

Web crawling has multiple uses and various clients who are looking for relevant data. Let’s say a book retail client needs the titles, author names and prices of each book as and when it gets published. Since different genres and book categories get updated on different websites and by different publishers, all these websites might have a different frequency of updating their lists. Sending a crawler to these sites relentlessly for data scraping will prove to be a wastage of time and resources. Thus it is important to develop intelligent crawlers that can analyze the frequency with which the pages get updated on the targeted websites.

3. Thorough and Efficient Algorithms

Mostly data crawlers follow a Last in First out (LIFO) or First in First out (FIFO) methodology to traverse the data present on the interconnected pages and websites. It works well theoretically but the problem arises when the data to be traversed per crawl sprawls larger and deeper than anticipated. Hence arises another requirement for optimized crawling to be incorporated in the data crawlers.

This can be accomplished by assigning priority or to the crawled pages on the basis of popular demands and features such as page rank, frequency of updates, reviews, etc. and identifying the time it takes to crawl them all. You can thus enhance your web crawling system by analyzing the crawling time of such pages and dividing the tasks among all data crawlers equally so there are no vacant resources (read data crawlers) and no bottlenecks are created either.

4. Scalability

Taking a more futuristic view of data cloud present on the web, based on the escalating number of data files and document uploads, it is necessary to test out the scalability of your data crawling system before you launch it. Keeping that in mind, the two key features you need to incorporate in your data crawling system are Storage and Extensibility. On average, each page has over 100 links and about 10-100 kb of textual data. Fetching that data from each page takes just about 350kb of space.

So make sure that your crawler compresses the data before fetching it or uses a bounded amount of storage for storage-related scalability. Also, a modular architectural design of the web page crawler helps, so the crawler can be modified easily to accommodate any changes in the big data crawling requirements of the client.

5. Language Independent

With the rising demand for data acquisition, it is important for a website crawler to be language-neutral and extract data in all languages as requested across the globe. Though English is still the most used language over the web, but other languages and their data are slowly catching up and capture a large chunk of the Internet data available to crawl for business insights and analytical conclusions.

6. Politeness

Sending a badly timed or poorly structured data crawler to crawl the web could mean unleashing a DoS attack on the internet. To help prevent this, some web pages have crawling restrictions mentioned on their page, to help regulate their crawling process. So avoid sending over-eager crawlers to a website to avoid overcrowding its server resulting in a site crash. For more polite and ethical practices refer to the blog The Ethics of Data Scraping.

Do consider these points while building the next crawling system for an improved performance and better results. Till then, Crawl Away!