The proliferation of the internet and smart devices has brought about a massive change in the way information is generated and consumed. Earlier, only a finite amount of information was available on any given topic, and far less processing was needed to make sense of it in a larger context. With the advent of big data, many standardized practices and processes have been transformed so that they can extract the insight today's world demands.

How have businesses adapted?

While the amount of data generated daily has grown enormously, many business roles have not changed in essence. They do, however, need to adapt their workings to the present-day context, its technological advances and its idiosyncrasies. Many have embraced the change and made the most of it; others are still adjusting and are likely to find their way sooner or later.

It is this recipe for change that gave birth to the discipline now known as data journalism. The term data journalism, or data-driven journalism, might be confusing, since journalists have always needed data to craft their stories and flesh them out. In an era when Big Data has taken the world by storm, the term means something more specific: journalism built on data drawn from the massive pool of information that Big Data represents. It is journalism with a modern twist.

What is data journalism?

Data journalism is best described as the use of large data sets, together with operations such as filtering, processing and analysis, to create news or supply readers with specific information. Journalism is a field full of meticulous nuances, and this is its next step as times change. The rudiments of data-driven journalism can be found in the older practice of computer-assisted reporting (CAR), which predates it. It also draws heavily on other journalistic philosophies, particularly those that apply social science techniques to journalism.

The scope and spread of data journalism have widened over time. The main driver is the instant, unencumbered availability of huge volumes of data, open to everyone on the internet. Data-driven journalism promises a new level of service to the public and to business interests, with better information, stories and content that can inform smart decision-making.

The Evolution of data journalism

From the old days of CAR to the present concept of data journalism, there has been quite an evolution.

The process is essentially a linear workflow. It starts with collecting data from hundreds or thousands of sources on the internet using techniques such as web scraping. Further processing then cleans the data, eliminating duplicates and irrelevant information and structuring it so that it is easy to comprehend and analyze. The data is then filtered against a few specific information requirements or criteria, leaving the journalist with a large volume of high-quality data that can be used to visualize and construct the story.
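The cleaning and filtering steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: the record fields (`title`, `url`, `topic`), the sample data and the function names are all hypothetical, and treating URLs that differ only by a trailing slash as duplicates is a simplification.

```python
def clean_records(records):
    """Normalize whitespace, drop incomplete records and remove duplicates."""
    seen_urls = set()
    cleaned = []
    for rec in records:
        # Collapse runs of whitespace in the title.
        title = " ".join((rec.get("title") or "").split())
        # Assumption: URLs differing only by a trailing slash are the same page.
        url = (rec.get("url") or "").strip().rstrip("/")
        topic = (rec.get("topic") or "").strip().lower()
        if not title or not url:
            continue  # irrelevant or incomplete record
        if url in seen_urls:
            continue  # duplicate of a record already kept
        seen_urls.add(url)
        cleaned.append({"title": title, "url": url, "topic": topic})
    return cleaned


def filter_by_topic(records, topic):
    """Keep only the records that match the journalist's topic of interest."""
    return [r for r in records if r["topic"] == topic.lower()]


# Hypothetical scraped records, including a duplicate and an incomplete row.
raw = [
    {"title": "  City budget   2024 ", "url": "https://example.org/a", "topic": "Budget"},
    {"title": "City budget 2024", "url": "https://example.org/a/", "topic": "budget"},
    {"title": "", "url": "https://example.org/b", "topic": "budget"},
    {"title": "Transit ridership", "url": "https://example.org/c", "topic": "transport"},
]

cleaned = clean_records(raw)
budget_stories = filter_by_topic(cleaned, "budget")
```

Here `cleaned` keeps two of the four records, and `budget_stories` narrows those down to the single record tagged with the budget topic.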

The main rules and nuances of journalism are still intact, but this approach has added several new layers to the overall notion of journalism. The main goal of data journalism is to tell stories grounded in data. Findings that arise from the collected data can be written up in journalistic language, and visualization can be used astutely to add new dimensions and make the story easier to comprehend. Other elements of communication and storytelling can also be used to make the story more relatable and interesting.

The Workflow

The workflow usually associated with data-driven journalism is split into multiple steps, all of which use data in one way or another. The steps are also well suited to modern technologies. From start to end, the workflow consists of the following steps:

- Finding the required data through multiple approaches involving searching and web scraping

- Cleaning the stored data by filtering and removing redundancies and irrelevancies

- Visualizing the main story pattern

- Integrating the visuals into one solid plot and attaching the relevant data bits to make the stories more believable

- Distribution and tracking
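As a rough illustration of the visualization step in the list above, the sketch below counts records per category and renders the counts as a plain-text bar chart. The `district` field, the sample records and the function names are hypothetical; a real project would more likely use a charting library, but the idea of surfacing the dominant pattern is the same.

```python
from collections import Counter


def count_by_category(records, key):
    """Count how many records fall into each value of the given field."""
    return Counter(r[key] for r in records)


def text_bar_chart(counts, width=20):
    """Render counts as text bars, with the largest count scaled to `width`."""
    if not counts:
        return []
    top = max(counts.values())
    lines = []
    for label, n in counts.most_common():
        bar = "#" * max(1, round(n / top * width))
        lines.append(f"{label:<10} {bar} {n}")
    return lines


# Hypothetical cleaned records, one per reported incident.
records = [
    {"district": "north"},
    {"district": "north"},
    {"district": "south"},
    {"district": "north"},
    {"district": "east"},
    {"district": "south"},
]

counts = count_by_category(records, "district")
chart = text_bar_chart(counts)
print("\n".join(chart))
```

The most frequent category appears first with the longest bar, which is often the starting point for deciding what the story is about.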

This mode of operation is used by major corporations around the world to create rich, immersive digital stories that make an impact. To succeed in this field, it is necessary to understand the importance of each of these steps and its contribution to the finished product. That understanding begins with the first and most important step: finding and collecting the data.

Web Scraping – An Essential Tool for Data Journalists

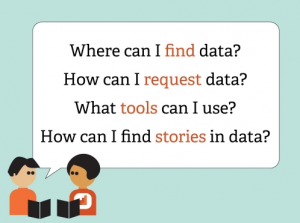

The first and most important part of any data journalism effort is collecting the relevant data needed for the project. The internet is filled with large volumes of information, most of which is irrelevant to any specific topic. Extracting the particular data you need from this large pool of unrelated, unstructured data takes time and effort, and has a great impact on the finished product.

This is the point where web scraping becomes an irreplaceable component of the data journalism process. Web scraping is a targeted, strategic information-gathering process carried out with a professional-quality scraper program. The journalist starts the scraper, lets it do the hard work, and is rewarded with a starting set of relevant data conveniently stored in a database.
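To make the scrape-then-store idea concrete, here is a minimal sketch that uses only the Python standard library. It parses an inline sample page rather than fetching a live site, and the page markup, the `headline` class and the `headlines` table are all assumptions made for illustration; a real scraper would also need to handle fetching, errors and the target site's terms of use.

```python
import sqlite3
from html.parser import HTMLParser

# Hypothetical page markup standing in for a downloaded web page.
SAMPLE_HTML = """
<html><body>
  <h2 class="headline"><a href="/stories/budget">City budget grows</a></h2>
  <h2 class="headline"><a href="/stories/transit">Transit ridership falls</a></h2>
  <p>Unrelated footer text</p>
</body></html>
"""


class HeadlineParser(HTMLParser):
    """Collect (title, href) pairs from <h2 class="headline"> elements."""

    def __init__(self):
        super().__init__()
        self.in_headline = False
        self.current_href = None
        self.current_text = []
        self.rows = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h2" and attrs.get("class") == "headline":
            self.in_headline = True
            self.current_href = None
            self.current_text = []
        elif tag == "a" and self.in_headline:
            self.current_href = attrs.get("href")

    def handle_data(self, data):
        if self.in_headline:
            self.current_text.append(data)

    def handle_endtag(self, tag):
        if tag == "h2" and self.in_headline:
            title = "".join(self.current_text).strip()
            self.rows.append((title, self.current_href))
            self.in_headline = False


def scrape_to_db(html, conn):
    """Extract headlines from the HTML and store them in a SQLite table."""
    parser = HeadlineParser()
    parser.feed(html)
    conn.execute("CREATE TABLE IF NOT EXISTS headlines (title TEXT, href TEXT)")
    conn.executemany("INSERT INTO headlines VALUES (?, ?)", parser.rows)
    conn.commit()
    return len(parser.rows)


conn = sqlite3.connect(":memory:")  # in-memory database for the example
stored = scrape_to_db(SAMPLE_HTML, conn)
rows = conn.execute("SELECT title, href FROM headlines ORDER BY title").fetchall()
```

The unrelated footer paragraph is ignored, and only the two headline rows end up in the database, ready for the cleaning and filtering steps that follow.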

How does data journalism depend on web scraping?

The collected data shapes the rest of the steps in the process and ultimately decides the quality and success of the resulting story. Data journalists must hold their information to a very high standard of accuracy, and web scraping supplies the data that lets them ply their trade successfully. Custom web scraping tools have advanced in leaps and bounds from their rudimentary beginnings, and the latest scrapers can return accurate results in a matter of minutes, even seconds.

In journalistic stories, any error can have a lasting impact and may even end a journalist's career. It damages the journalist's reputation and can bring other kinds of backlash. This is why data journalists need to carefully examine every bit of information they collect and verify its accuracy before proceeding with the rest of their projects. As the digital world and the media have turned from attention-grabbing content to content that builds trust, preserving data quality and accuracy is extremely important. Web scraping tools make this much easier to achieve by collecting the important data for a particular project quickly and accurately.

Can web scraping be bypassed in effective data journalism?

Although there are several ethical issues connected with web scraping, it is undeniably the backbone of the time-tested process that brings data journalists and data reporters their daily bread. Companies have already developed a number of specialized web scraping tools built to address the needs of data reporters. Many data journalists also create their own scraping tools, or take advantage of open source tools that are easy to configure to their tastes and requirements. This way, they can exercise more control over data precision, reach their desired results faster and have better-quality information to work with.

To sign off

Overall, web scraping is an integral part of data-driven journalism. Advancements in web scraping technology have played a significant part in the evolution of data-driven journalism itself. For a field that is still clearly in an early stage of development, web scraping and its benefits are likely to remain a prime driving force. It can pave the way towards new, more interesting possibilities and help data journalists create stories that are important, relatable and engaging. Data journalism owes much to web scraping and will continue to do so.
