**TL;DR**

Reddit isn’t just memes and cat pictures. It’s a living, breathing archive of what real people actually think, feel, and fight about, across millions of niche communities you won’t find anywhere else. But here’s the ugly truth: getting that data cleanly is brutal. API limits choke your access. Threads are a tangle of nested chaos. Comments vanish before you can grab them. And building your own scraper? Get ready to pour weeks (and a chunk of your budget) into coding, debugging, and constant maintenance, only to end up with a noisy mess that still needs cleaning.

Meanwhile, your competitors? They’re already feeding on structured, ready-to-use Reddit datasets, running sentiment models, tracking market shifts, and spotting product feedback before it hits mainstream. They’re not wrestling with broken scrapers. They’ve outsourced the grind.

This guide is your map to getting there without burning time or sanity. We’ll break down why Reddit data is a goldmine for analysts and researchers, the hidden landmines in DIY scraping, and how professional web scraping services (like PromptCloud) hand you clean, structured, analysis-ready Reddit data, historical or real-time, without you lifting a finger. If you want the insights without the headache, read on.

Why Analysts and Researchers Rely on Reddit Data

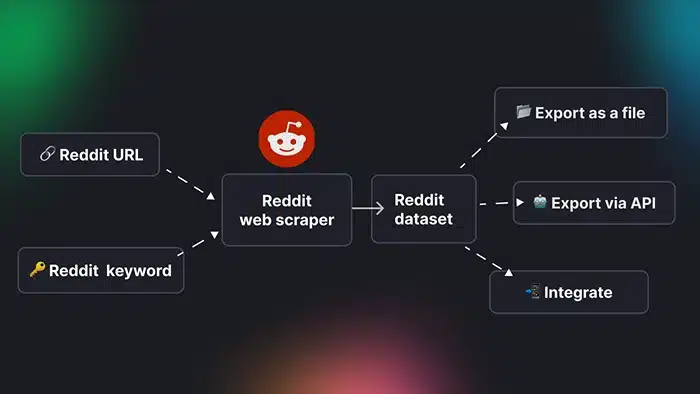

Image Source: Apify

If you’re in the business of understanding people, customers, competitors, and markets, Reddit is the unfiltered focus group you didn’t have to pay for. Five hundred million monthly users. Hundreds of thousands of active communities. Millions of conversations every single day.

For data analysts, that’s priceless. Forget polished survey answers or brand-friendly feedback forms. On Reddit, people spill their real frustrations with a product. They hype what they love. They tear apart what’s broken. They argue over trends, share niche hacks, and reveal buying intentions long before they show up in sales reports.

Researchers don’t just sit around crunching numbers; they hang out where the conversations happen. On Reddit, that might mean spotting a sudden spike in posts about some new supplement over on r/fitness, or noticing a quiet but steady buzz around a tech gadget that hasn’t even hit the big blogs yet. Product managers do the same thing, only their radar is tuned for trouble; a customer gripe that pops up in a thread today can be dealt with fast, before it blows up into a pile of angry reviews next month. Growth teams monitor subreddits to find hidden audiences they didn’t even know existed.

And here’s the kicker, Reddit isn’t just global; it’s hyper-segmented. Want to know what urban gardeners in Chicago think about a new soil brand? There’s a subreddit for that. Need real-world chatter from crypto traders, medical device users, or indie gamers? Reddit’s got it. It’s market segmentation in its most natural form; you just have to capture it before it’s gone.

That’s why Reddit data isn’t “nice to have” anymore. For many analysts and researchers, it’s the competitive edge. But only if you can actually get it, and that’s where the trouble starts.

Why Scraping Reddit Yourself Sounds Easier Than It Is

On paper, scraping Reddit sounds straightforward. Spin up a script, hit the API, pull some posts, done. In reality? It’s a rabbit hole with teeth.

First, the API. Reddit’s official API looks friendly until you hit the wall. Strict rate limits mean you’re sipping through a straw when you need a firehose. Want historical data? Forget it. The API only gives you so much before the trail goes cold. And if the post or comment you need gets deleted or edited before you grab it? Gone.

Then there’s the messy structure. Reddit threads aren’t neat little rows in a spreadsheet. They’re a sprawling tree of nested comments, each with its own quirks. Some are replies to replies to replies, five layers deep. Good luck making sense of that in Excel without weeks of cleanup.

Don’t forget moderation delays. A post might not even appear until a mod approves it, by then, the conversation’s already moved on. If your goal is real-time monitoring, that lag can kill your insights.

And yes, you can build your scraper. But here’s the real cost:

- Development time to write, test, and debug your scripts.

- Infrastructure to run them without getting blocked.

- Ongoing maintenance is required every time Reddit changes its layout or rules.

- Hours spent cleaning raw data so it’s usable.

You start thinking you’re saving money by doing it in-house, but between dev hours, server costs, and the opportunity cost of not analyzing while you’re firefighting scraper issues, it’s a loss.

This is exactly where most teams start looking at professional web scraping services. Not because they can’t code, but because they’re tired of wasting cycles wrestling with the plumbing when what they need is the water.

The Hands-Off Way to Get the Reddit Data You Need

Here’s the thing, scraping Reddit isn’t rocket science, but doing it well, at scale, and without babysitting scripts 24/7? That’s where the grind lives. And it’s why smart teams skip the DIY pain and go straight to a professional web scraping service.

A good web scraping service doesn’t just “grab posts.” It acts like your always-on data ops team, crawling Reddit at the scale you need, pulling exactly the subreddits, keywords, and timeframes you care about. No more wrestling with rate limits or wondering if you missed something important because the API cut you off.

They also handle the dirty work:

- Cleaning: stripping out junk, spam, and irrelevant chatter so you only get the good stuff.

- Structuring: turning messy threads into neatly formatted datasets you can sort, filter, and run analysis on instantly.

- Enriching: adding metadata like timestamps, user activity, and engagement scores so your insights have context.

And because they’re built for scale, you can get real-time streams for live monitoring or historical datasets for deep trend analysis, without worrying about storage, speed, or compliance.

The result? You’re not staring at a wall of unstructured text. You’re holding a clean, analysis-ready Reddit dataset in CSV, JSON, or Excel, whatever plugs straight into your workflows.

It’s the difference between buying a plot of land and having to build the whole house yourself, or walking into a fully furnished home with the lights already on.

PromptCloud helps build structured, enterprise-grade data solutions that integrate acquisition, validation, normalization, and governance into one scalable system.

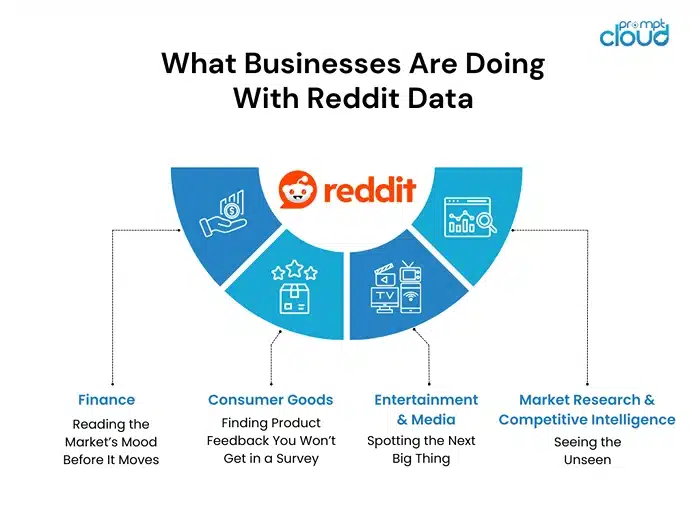

What Businesses Are Doing With Reddit Data

Reddit data isn’t just “interesting.” It’s actionable intelligence, the kind that shapes product roadmaps, drives marketing campaigns, and even moves markets. Here’s how different industries are already putting it to work.

Finance: Reading the Market’s Mood Before It Moves

Remember when WallStreetBets turned GameStop into a headline stock? That wasn’t a fluke. Finance teams now mine finance and crypto subreddits for sentiment signals, tracking keywords, post volumes, and emotional tone to anticipate stock surges or dips. By the time mainstream analysts catch on, Reddit’s already been talking about it for days.

Consumer Goods: Finding Product Feedback You Won’t Get in a Survey

If you sell physical products, Reddit is the customer support line you didn’t set up. From r/Coffee to r/SkincareAddiction, people don’t hold back. They’ll rave about what they love, tear apart what they hate, and get into the weeds on things like weird packaging quirks or a tiny ingredient swap they noticed. Brands that are paying attention scrape those threads, not just to catch defects early, but to hear the “wish list” ideas customers toss around and to see exactly how their products stack up against the competition.

Entertainment & Media: Spotting the Next Big Thing

Studios and streaming platforms quietly scrape Reddit to track which shows, genres, or games are catching fire. Fan theory threads, cosplay discussions, and character debates are signals of engagement depth, the kind of chatter that can inform renewal decisions, merch lines, or spin-off projects.

Market Research & Competitive Intelligence: Seeing the Unseen

Agencies use Reddit to uncover emerging market segments that don’t show up in traditional reports. A niche subreddit exploding in membership? That’s a signal. Heated debates around a competitor’s launch? That’s free competitive analysis.

Here’s the common thread: these teams aren’t wading through raw posts by hand. They’re plugging clean, structured Reddit datasets straight into sentiment analysis models, BI dashboards, and market trend tools. The insights are fresh, relevant, and ready to act on.

That’s the difference between knowing what happened and seeing it coming.

Structured Reddit Datasets: Why They Save You More Than Just Time

Raw Reddit data is like a thrift store; buried somewhere in the clutter is gold, but you’ll spend forever digging, cleaning, and figuring out what’s worth keeping. Structured Reddit datasets flip that. They hand you the gold already sorted, polished, and labeled.

When you start with clean, structured data, you skip the grunt work entirely. No hours wasted untangling comment threads. No de-duplicating reposts. No filtering out bot spam. Just usable insights from the first minute you open the file.

Here’s what that can look like:

Imagine you’re tracking consumer sentiment for a new electric car launch. Instead of pulling random posts from r/electricvehicles and r/cars, you get a dataset where:

- Every row is a unique post or comment.

- Columns include subreddit name, post title, full text, timestamp, author ID (anonymized), comment depth, upvotes, and sentiment score.

- Threads are already grouped by topic, so you can spot patterns fast.

That’s the difference between spending days getting your data into shape and spending those same days analyzing it.

| subreddit | post_title | post_text | timestamp | upvotes | sentiment_score |

| r/electricvehicles | First impressions of the new Tesla Model X refresh | Picked up the new Model X last week. The yoke steering is… interesting. | 2025-07-01 14:32:00 | 420 | 0.75 |

| r/cars | EV vs Hybrid: Which one should I buy? | Looking for advice: Should I go for a full EV or a hybrid for my daily commute? | 2025-07-02 09:15:00 | 312 | 0.20 |

| r/electricvehicles | Charging speed comparison between top EVs | We tested 5 EVs for charging speed, here’s the data from fastest to slowest. | 2025-07-03 19:45:00 | 198 | 0.60 |

| r/technology | A breakthrough in battery technology was announced | Researchers claim a 50% increase in battery density. It could change EVs forever. | 2025-07-04 11:10:00 | 560 | 0.85 |

| r/crypto | Bitcoin discussion: market outlook for 2025 | What’s everyone’s prediction for Bitcoin prices over the next year? | 2025-07-05 22:05:00 | 689 | 0.40 |

And this is where PromptCloud comes in. Our ready-to-use Reddit datasets are built exactly for this. Whether you need:

- Historical datasets covering months or years of subreddit activity for trend analysis.

- Real-time streams of posts and comments so you can react to market shifts as they happen.

- Topic-specific datasets curated to your niche, from skincare to gaming to fintech.

You get it all in your preferred format (CSV, JSON, Excel), fully cleaned, structured, and ready to plug into your analytics tools. No missed posts. No dirty data. No wasted hours.

It’s not just about saving time, it’s about hitting the ground running while everyone else is still cleaning up their scrape.

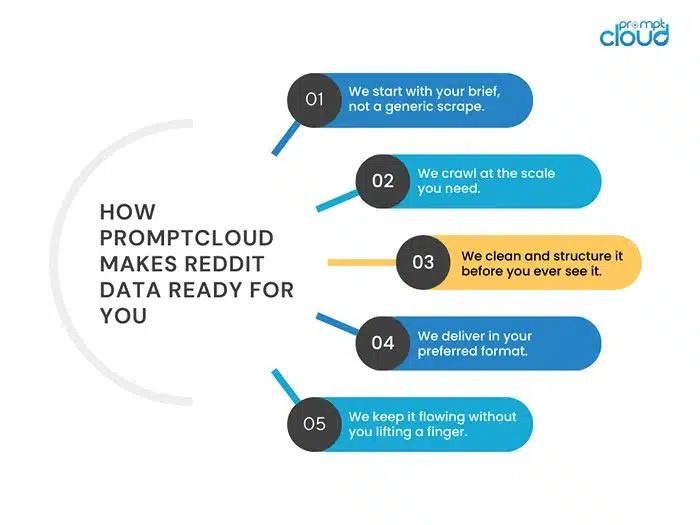

How PromptCloud Makes Reddit Data Ready for You

When you work with PromptCloud, you’re not just “ordering some scraped posts.” You’re plugging into a system built to deliver Reddit data that’s already clean, structured, and tailored to your exact needs. No babysitting scripts. No, wondering if you missed something important.

Here’s what the process looks like:

1. We start with your brief, not a generic scrape.

You tell us which subreddits, keywords, timeframes, and data points matter. Need only posts from verified subreddits? Only top-level comments? Posts with a certain upvote threshold? We bake that into the crawl from day one.

2. We crawl at the scale you need.

Whether it’s historical data stretching back years or real-time streams updated by the minute, our crawlers pull exactly what you need, without tripping API limits or getting throttled. You set the frequency, and we deliver.

3. We clean and structure it before you ever see it.

Spam? Gone. Deleted or duplicate posts? Removed. Thread structures? Flattened into clear, sortable datasets. Every record comes with consistent fields like subreddit name, post title, text, timestamp, author ID (anonymized), engagement metrics, and, if you want, sentiment scoring.

4. We deliver in your preferred format.

CSV for Excel? JSON for your data pipeline? XLSX for sharing with a non-technical team? We send it exactly how you need it, ready to drop into your analysis tools.

5. We keep it flowing without you lifting a finger.

For ongoing needs, we automate the delivery so fresh data lands in your inbox, S3 bucket, or API endpoint like clockwork. No follow-ups. No missed pulls.

It’s not only about avoiding the hassle of building your scraper. The real win is having Reddit data you can use the moment you get it, no extra cleanup, no patching scripts. That way, your hours go into the stuff that matters: digging into the numbers, spotting patterns, making calls.

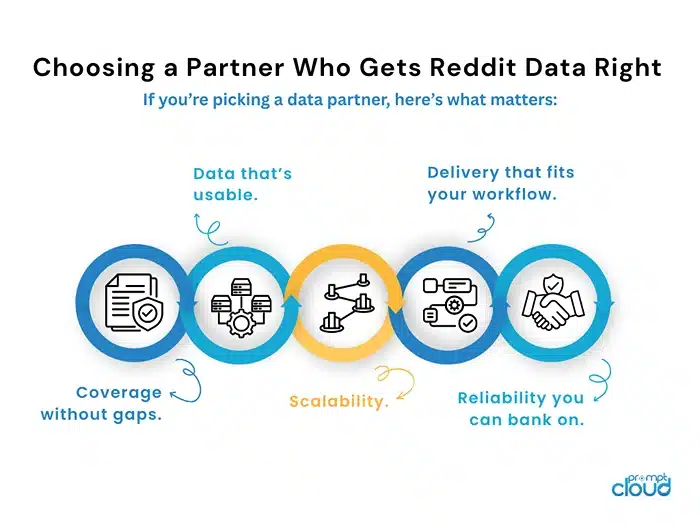

Choosing a Partner Who Gets Reddit Data Right

Not all “data providers” are built the same. Some will hand you a raw dump of posts and call it a day. Others scrape until they hit a block, then quietly skip chunks of your request. The difference between a vendor who gets Reddit data and one who just “does scraping” is night and day.

If you’re picking a data partner, here’s what matters:

Coverage without gaps.

You need a provider that can pull from every relevant subreddit, post type, and timeframe you care about, without hitting API ceilings or missing data during peak activity.

Data that’s usable.

Raw text is cheap. Clean, structured datasets with consistent formatting, deduplication, spam filtering, and relevant metadata are what save you weeks of prep time.

Scalability.

If you start with one subreddit today and scale to 100 tomorrow, your provider should handle it without breaking stride. You shouldn’t have to renegotiate or rebuild your data pipeline every time you grow.

Delivery that fits your workflow.

Getting your dataset should be as simple as downloading a file or calling an API endpoint, in the format you need, when you need it. No manual conversions. No one-off “special cases” that eat up your time.

Reliability you can bank on.

Downtime, missed updates, or incomplete pulls can kill your analysis. You need a partner who treats consistency like oxygen.

PromptCloud was built for exactly these needs. From custom crawl logic to ongoing delivery automation, we’ve refined our process so you get exactly the Reddit data you want, at the quality you expect, on the schedule you set.

Skip the DIY Struggle and Go Straight to Insights

You’ve seen how finance teams use it to catch market sentiment before it hits Wall Street, how product teams pull unfiltered feedback to fix issues before they explode, and how researchers mine niche conversations for trends the rest of the world hasn’t noticed yet.

What you don’t need is another side project building and babysitting a scraper that chews up weeks of your time, only to spit out messy data you can’t use without another round of cleanup. That’s not “agile.” That’s a distraction.

The teams winning with Reddit data aren’t winning because they scrape harder, they’re winning because they scrape smarter. They outsource the plumbing to people who do it at scale, every day, for clients across industries. That way, their analysts spend their hours running models, spotting patterns, and making moves… not debugging code at 2 a.m.

PromptCloud exists to make that your reality. Clean, structured Reddit datasets. Historical or real-time. Delivered exactly how you need them. No gaps. No guesswork. No grind.

If you’re ready to cut through the noise and get Reddit data you can trust from day one, let’s talk. Request your sample Reddit dataset now, and start analyzing, not scraping.

FAQs

1. How do I get Reddit data without building my own scraper?

Honestly, unless you’ve got a developer with time to burn, building one from scratch is a pain. The quick way? Work with a service that already does it, they’ll pull the subreddits and topics you care about, clean it up, and hand it to you in a usable format.

2. Can I just buy a ready-to-use Reddit dataset?

Yep. You can get datasets that are already filtered by subreddit, keyword, or even post type. For example, PromptCloud delivers them in CSV, JSON, or Excel so you can plug them straight into your analysis without touching a single line of code.

3. How far back can you go with historical Reddit data?

Depends on what you’re after. We’ve pulled data going back years for some projects, while others only need a few months. The point is, if it’s public, we can usually get it.

4. Is it legal to scrape Reddit?

If you’re talking about public content, yes, but you’ve got to stick to platform rules and privacy laws. This is why a lot of teams go through a vendor; it keeps things compliant and worry-free.

5. Is scraped Reddit data more complete than the API?

Most of the time, yes. The API has strict limits and can miss posts if you’re pulling at the wrong moment. Scraping done right can catch more, cover longer timeframes, and give you the context the API doesn’t always include.