**TL;DR** Most teams look at Google’s built-in tools and call it analysis. But if you scrape the SERP, follow the click, and mine the web around it (URLs, review sites, forums, pricing pages, job listings), you’ll start to see more: more signals, more patterns, and more of the reasons behind the changes. Put it all into a dashboard, update it on a schedule, and you have a repeatable workflow that makes every campaign smarter, faster, and harder to beat.

Google Ads is one of the most competitive platforms in digital marketing today — and while Google provides basic data through its Keyword Planner and Auction Insights, that’s barely scratching the surface.

If you’re serious about Google Ads (formerly AdWords) competitor analysis, you need better answers to questions like:

- What keywords are your rivals bidding on?

- How much are they spending?

- What ad copy is working for them?

- How do they switch strategies over time?

This is where web scraping changes the game. With the right scraping stack, you can capture near-real-time insights that go far beyond manual research or basic dashboards. In this post, we’ll break down eight smart ways to use web scraping for competitor analysis in Google Ads, and how brands are using these methods to win big.

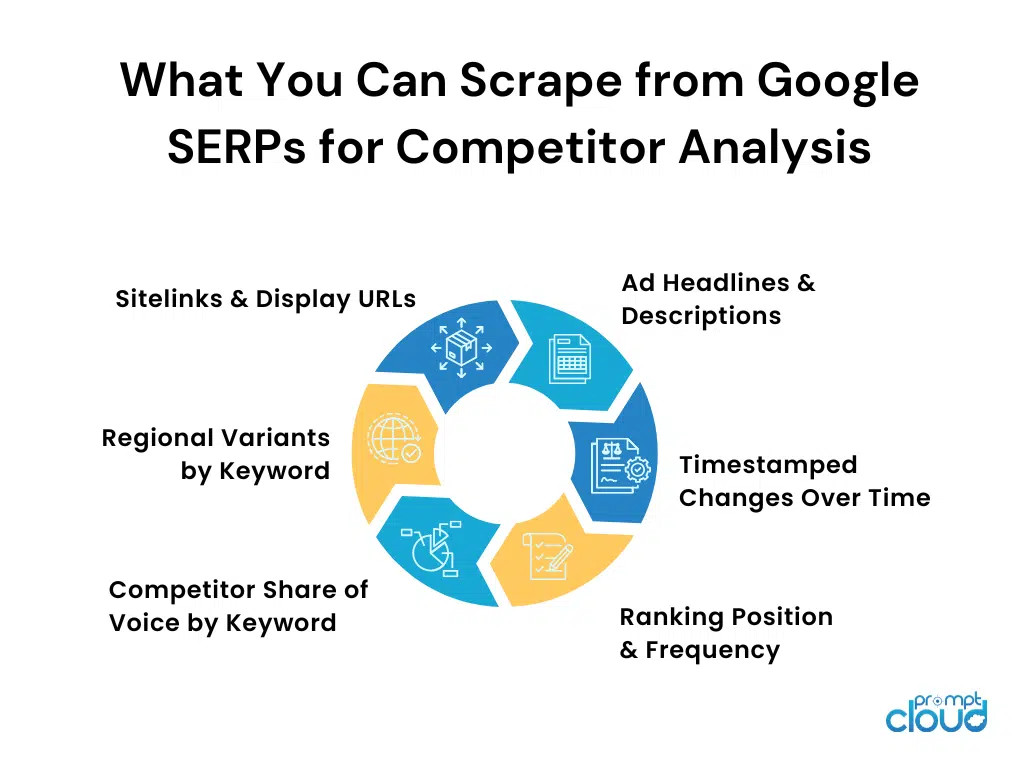

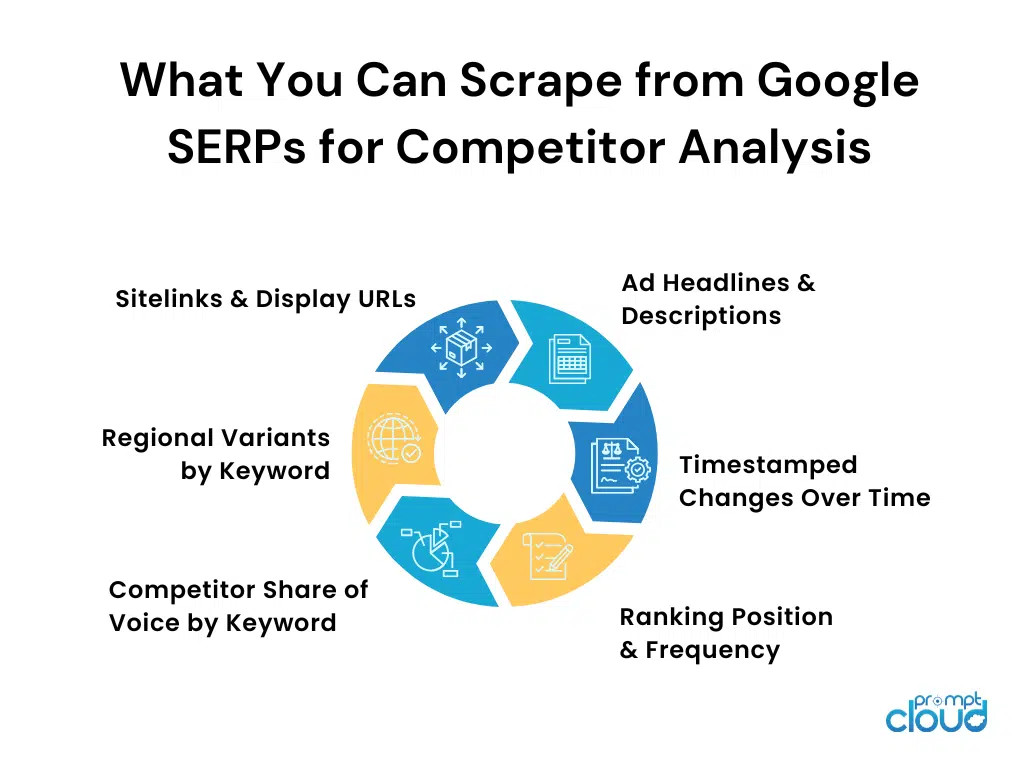

#1. Scraping Competitor PPC Ad Copies from SERPs

The most direct way to spy on your competitors’ Google Ads strategy? Search their target keywords and scrape the sponsored listings. Every SERP is a goldmine of insights — from the headline and description to the landing page URL and ad extensions.

Why this matters:

- You see exactly how competitors position their offers.

- You discover which USPs and CTAs they’re betting on.

- You track changes in copy and messaging over time.

What to scrape:

| Element | Insight |
| --- | --- |
| Headline | Value props competitors are pushing |
| Description | Messaging tone, urgency, and benefits |
| Display URL | Target landing pages |
| Sitelinks | Product categories or additional hooks |
| Ad labels | Variants like “Sponsored”, “Top Ads”, etc. |

Understanding how different industries use web scraping to decode sentiment gives more depth to ad analysis. Here’s how it works across sectors.

Example in action:

Let’s say you’re a SaaS company running CRM tools. A search for “best CRM software” returns 4–5 paid listings. Using a headless browser like Playwright or Puppeteer, you can:

- Rotate through multiple keywords

- Scrape ad copy elements per keyword

- Store them for comparison over time

- Detect shifts in seasonal messaging (e.g., “Limited Time Offer” during Q4)

You can even set alerts for copy changes in your top 5 competitors — an early signal that they’re A/B testing or launching a new offer.

Pro Tip:

Add timestamps to your SERP scrapes. Over time, you’ll be able to build a timeline of every headline, hook, or CTA your competitors have ever tested.
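The timestamping idea can be sketched in a few lines of Python. This is a minimal illustration, not a production scraper: the `AdSnapshot` fields, the `copy_changes` helper, and the sample advertiser are all hypothetical, and it assumes your scraper has already extracted the ad text from the SERP.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AdSnapshot:
    """One sponsored listing as seen on one scrape (field names are illustrative)."""
    keyword: str
    advertiser: str
    headline: str
    description: str
    scraped_at: datetime


def copy_changes(history):
    """Return (advertiser, keyword, old_headline, new_headline, when) tuples
    for every point where an advertiser's headline changed for a keyword."""
    last_seen = {}
    changes = []
    for snap in sorted(history, key=lambda s: s.scraped_at):
        key = (snap.advertiser, snap.keyword)
        previous = last_seen.get(key)
        if previous is not None and previous.headline != snap.headline:
            changes.append((snap.advertiser, snap.keyword,
                            previous.headline, snap.headline, snap.scraped_at))
        last_seen[key] = snap
    return changes


# Made-up sample data standing in for three scrapes of the same keyword.
history = [
    AdSnapshot("best crm software", "example-crm.com", "CRM for Growing Teams",
               "Try it free.", datetime(2025, 10, 1, tzinfo=timezone.utc)),
    AdSnapshot("best crm software", "example-crm.com", "CRM for Growing Teams",
               "Try it free.", datetime(2025, 10, 8, tzinfo=timezone.utc)),
    AdSnapshot("best crm software", "example-crm.com", "Limited Time Offer: 30% Off CRM",
               "Try it free.", datetime(2025, 11, 20, tzinfo=timezone.utc)),
]

for change in copy_changes(history):
    print(change)
```

Because every record carries its own timestamp, the same list doubles as the timeline: sort it by `scraped_at` and you can replay each headline a competitor has run.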

For a quick primer on how Google’s ad auction system works, Google’s official resource is here.

If you're evaluating whether to continue scaling DIY infrastructure or move to govern global feeds, this is the conversation to have.

#2. Scraping Keyword Auctions & Ad Rankings Across Regions

Competitors don’t run the same ads in every region — and if you’re only tracking from one IP, you’re missing the bigger picture. Web scraping enables multi-region tracking of keyword auctions, giving you a real sense of where and how your rivals are prioritizing their spend.

Why this matters:

- Google Ads rankings vary drastically by geo-location

- You can detect localized campaigns that don’t appear in global searches

- It reveals which markets your competitors are aggressively targeting

How it works:

With rotating residential proxies and region-based headers, you can emulate users from multiple cities, states, or countries. For each region, your scraper pulls:

| Data Point | Value |
| --- | --- |
| Top 3–4 sponsored results | Ad dominance in each market |
| Ad rank position | Competitor share of voice |
| Apparent keyword match type | Intent targeting (broad, phrase, exact), inferred from which query variants trigger the ad |
| Location-specific copy | Localized offers or CTAs |

This approach is especially useful if you’re in:

- eCommerce: Are they running different offers in Tier 2 cities?

- SaaS: Is there geo-based targeting for SMBs vs enterprises?

- Travel or Finance: Are regional trends affecting keyword competition?

Choosing the right format to store multi-region SERP data efficiently can affect speed and structure. This guide breaks down JSON vs CSV for crawlers.

Example in action:

Let’s say you’re monitoring the keyword “business accounting software.” In the US, your competitor might promote QuickBooks integrations. But in the UK, the same brand could be highlighting MTD (Making Tax Digital) compliance.

Scraping Google SERPs from London, New York, and Singapore gives you a clear view of which markets your competitors are localizing for — and where they’re not.

Pro Tip:

Build heatmaps to visualize ad presence by region. You’ll immediately spot expansion attempts or under-tapped zones.
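Before reaching for a charting library, the data behind such a heatmap is just a region-by-advertiser count. Here is a rough sketch, assuming your multi-region scraper emits `(region, advertiser, position)` rows; the domains and function names are invented for illustration.

```python
from collections import defaultdict

# Hypothetical observations: (region, advertiser, ad position) per scrape.
observations = [
    ("London", "acme-books.com", 1),
    ("London", "ledgerly.io", 2),
    ("New York", "acme-books.com", 2),
    ("New York", "acme-books.com", 1),
    ("Singapore", "ledgerly.io", 1),
]


def presence_matrix(rows):
    """Count how often each advertiser appeared in each region."""
    matrix = defaultdict(lambda: defaultdict(int))
    for region, advertiser, _position in rows:
        matrix[advertiser][region] += 1
    return matrix


def render(matrix, regions):
    """Return a plain-text grid: one row per advertiser, one column per region."""
    lines = ["advertiser".ljust(18) + "".join(r.ljust(12) for r in regions)]
    for advertiser in sorted(matrix):
        counts = "".join(str(matrix[advertiser].get(r, 0)).ljust(12) for r in regions)
        lines.append(advertiser.ljust(18) + counts)
    return "\n".join(lines)


matrix = presence_matrix(observations)
print(render(matrix, ["London", "New York", "Singapore"]))
```

Zeros in the grid are the interesting cells: they mark regions where a competitor is absent, which may be an under-tapped zone or simply a market they have not entered yet.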

#3. Monitoring Landing Page Content Changes for Ad-Funnel Mapping

Scraping the ad copy is step one — but the real gold is in where the ad takes the user. By regularly scraping and monitoring landing pages linked from Google Ads, you can reverse-engineer your competitors’ funnel strategies.

Why this matters:

- You understand the full customer journey your competitor is designing

- You can track changes in product messaging, CTAs, forms, and pricing

- You get ahead of new launches, feature pushes, or trial offers

What to track on landing pages:

| Element | Insight |
| --- | --- |
| Headlines & subheadlines | Value prop framing — what’s emphasized? |
| Hero visuals | Persona targeting clues |
| CTAs & button copy | Funnel depth: trial, demo, pricing, contact? |
| Forms | Lead capture strategy (long form vs. single field) |
| Dynamic elements | Pop-ups, countdowns, chatbot activation, etc. |
| URL variations | UTM codes can reveal campaign segmentation |
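For the URL variations row, pulling UTM parameters out of a captured destination URL takes only the standard library. A small sketch follows; the URL and the `utm_params` helper are made up for illustration, and it assumes the landing URL still carries its tracking parameters when your scraper records it.

```python
from urllib.parse import urlsplit, parse_qs


def utm_params(url):
    """Return the utm_* query parameters of a URL as a flat dict."""
    query = parse_qs(urlsplit(url).query)
    return {k: v[0] for k, v in query.items() if k.startswith("utm_")}


# Illustrative URL; a real ad click may append other tracking parameters too.
url = ("https://example.com/email-marketing?utm_source=google"
       "&utm_medium=cpc&utm_campaign=smb_free_trial&gclid=abc123")
print(utm_params(url))
```

Grouping scraped URLs by `utm_campaign` is one quick way to see how a competitor names and segments their campaigns.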

Landing page tracking is just one use case — explore more high-impact scraping applications for business insights.

Example in action:

Let’s say a competitor is running an ad with the copy:

“All-in-One Email Marketing for SMBs — Try Free for 30 Days.”

When you scrape their landing page today, it may lead to a free trial form. But next month, that same ad might direct to a product comparison page — or switch focus to enterprise demos. Tracking these changes over time gives you early warning on what part of their funnel they’re optimizing — and whether they’re shifting targeting.

Pro Tip:

Capture screenshots in parallel with raw HTML when scraping landing pages. It helps non-technical teams visualize content shifts and layout tests.
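On the raw HTML side, a simple way to track shifts is to extract only the elements you care about and compare them between snapshots. The sketch below uses Python’s built-in `html.parser`; the tracked tags, the class name, and the sample HTML are assumptions, and real landing pages will usually need more robust selectors.

```python
from html.parser import HTMLParser


class LandingPageParser(HTMLParser):
    """Collect the text of <h1>, <h2>, and <button> elements."""
    TRACKED = {"h1", "h2", "button"}

    def __init__(self):
        super().__init__()
        self.elements = []      # list of (tag, text) in document order
        self._current = None    # tag currently being captured, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in self.TRACKED and self._current is None:
            self._current, self._buffer = tag, []

    def handle_data(self, data):
        if self._current is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            text = " ".join("".join(self._buffer).split())
            self.elements.append((tag, text))
            self._current = None


def extract(html):
    parser = LandingPageParser()
    parser.feed(html)
    return parser.elements


def changed_elements(old_html, new_html):
    """Return elements present in the new snapshot but not in the old one."""
    old = set(extract(old_html))
    return [item for item in extract(new_html) if item not in old]


# Two made-up snapshots of the same landing page, a month apart.
october = "<h1>All-in-One Email Marketing for SMBs</h1><button>Start Free Trial</button>"
november = "<h1>All-in-One Email Marketing for SMBs</h1><button>Book an Enterprise Demo</button>"
print(changed_elements(october, november))
```

Here the headline is unchanged but the button copy moved from a trial to an enterprise demo, which is the kind of funnel shift described above.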

#4. Extracting Keyword Gaps via Competitor Sitemap and URL Scraping

Sometimes the easiest way to understand what your competitors are targeting is to scrape what they’ve already published — especially their landing pages and product URLs. These pages often hint at unadvertised but SEO-primed keywords — giving you keyword research fuel for both organic and paid strategy.

Why this matters:

- You uncover long-tail or niche keywords your competitors are banking on

- Identify gaps in your own PPC strategy

- See how SEO and Google Ads strategies complement each other

What to scrape:

- /sitemap.xml (or variations like page-sitemap.xml, post-sitemap.xml)

- Ad destination URLs from SERPs

- Navigation menus, footer links, and breadcrumb trails

- Blog categories and tags

- Meta titles, descriptions, and H1s from landing pages

| Scraped Source | Keyword Insight |
| --- | --- |
| Sitemap URLs | Full list of product/feature/geo pages |
| Page titles + meta desc | Keyword clusters competitors are targeting |
| Ad destination URLs | Which Google Ads keywords lead to which page |
| Blog topics | Complementary content targeting lower-funnel terms |

Example in action:

Suppose your competitor runs ads for “cloud ERP software.” Their sitemap includes landing pages titled:

- /cloud-erp-for-manufacturing

- /erp-for-smbs

- /erp-vs-accounting-software

These aren’t always actively advertised — but they’re highly targetable in Google Ads. Scraping and analyzing this structure lets you plug gaps in your own campaigns — or discover untapped keyword opportunities.
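The sitemap example above can be turned into a first-pass gap list with the standard library alone. This is a sketch under assumptions: the sitemap follows the sitemaps.org `urlset` format, URL slugs are hyphen-separated, and `own_keywords` is whatever list you already bid on. The function names and the sample domain are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> URL from a standard sitemap document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def slug_to_phrase(url):
    """Turn the last path segment of a URL into a candidate keyword phrase."""
    slug = urlsplit(url).path.rstrip("/").rsplit("/", 1)[-1]
    return slug.replace("-", " ").replace("_", " ").lower()


def keyword_gaps(urls, own_keywords):
    """Candidate phrases from competitor URLs that you do not already target."""
    own = {k.lower() for k in own_keywords}
    return [p for p in (slug_to_phrase(u) for u in urls) if p and p not in own]


# Made-up sitemap mirroring the example pages above.
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://competitor.example/cloud-erp-for-manufacturing</loc></url>
  <url><loc>https://competitor.example/erp-for-smbs</loc></url>
  <url><loc>https://competitor.example/erp-vs-accounting-software</loc></url>
</urlset>"""

print(keyword_gaps(sitemap_urls(sample), own_keywords=["erp for smbs"]))
```

Slug-derived phrases are only candidates; check them against search volume before adding them to a campaign.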

Pro Tip:

Run your scraped URLs through a keyword density and on-page analyzer. It’s a fast way to predict which search terms those pages are optimized for, and how you can match or outperform them.

#5. Using Review & Forum Scraping to Predict Ad Messaging Shifts

Customer reviews and forums are where future ad copy gets tested—whether brands plan it or not. Scraping that feedback lets you see the angles your competitors are likely to lean into next.

Why this matters:

- Ad copy often echoes real customer language — scraping reviews gives you a preview

- You can identify features or frustrations that competitors might highlight soon

- Helps you align your own messaging to counter their strengths or exploit their gaps

What to scrape:

| Source | Insight Unlocked |
| --- | --- |
| Product reviews | Common pros/cons that may turn into ad hooks |
| Reddit threads | Authentic use cases, feature requests, pain points |
| Q&A sites (e.g., Quora) | Emerging keyword trends via real-world problems |
| YouTube comments | Sentiment around feature updates or industry shifts |

Sentiment data isn’t just for ad predictions — it powers smarter pricing and positioning. See how scraping transforms e-commerce performance.

Example in action:

Let’s say your competitor’s CRM software gets repeated praise in G2 reviews for “easy integration with Slack” — but customers also mention poor mobile UX. Weeks later, you see their ad copy evolve to: “Collaborate seamlessly — CRM + Slack built-in.”

You could’ve predicted that shift with smart review scraping. Now imagine tracking such mentions across 5 review platforms and 3 Reddit threads, all enriched with sentiment tagging — that’s strategic ad readiness.

Pro Tip:

Tag reviews with NLP-based sentiment scores and extract recurring phrases (like “love the dashboard” or “slow syncing”). Then compare this with ad copy evolution over time.
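As a rough illustration of that tagging step, the sketch below counts two-word phrases that recur across reviews and assigns a crude sentiment score. The tiny word lists are a deliberately simple stand-in for a real NLP sentiment model, and the reviews are invented.

```python
import re
from collections import Counter

# Toy lexicons: an assumption for illustration, not a real sentiment model.
POSITIVE = {"love", "easy", "great", "seamless", "fast"}
NEGATIVE = {"slow", "poor", "clunky", "confusing", "broken"}


def tokens(text):
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Positive-word count minus negative-word count."""
    words = tokens(text)
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def recurring_bigrams(reviews, min_count=2):
    """Two-word phrases that appear in at least `min_count` different reviews."""
    counts = Counter()
    for review in reviews:
        words = tokens(review)
        counts.update(set(zip(words, words[1:])))  # count each bigram once per review
    return [(" ".join(b), n) for b, n in counts.most_common() if n >= min_count]


reviews = [
    "Love the dashboard, and the Slack integration was easy.",
    "Slack integration is great but slow syncing on mobile.",
    "Slow syncing again. Poor mobile UX.",
]

print(recurring_bigrams(reviews))
print([sentiment(r) for r in reviews])
```

Phrases that recur with positive scores are candidates for a competitor’s next ad hook; recurring negative ones are gaps you can message against.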

#6. Tracking Ad Frequency + Rotation with Periodic SERP Snapshots

Seeing a competitor’s ad is useful; seeing how often it appears and when it changes is powerful. Schedule recurring SERP scrapes to build a timeline of ad frequency and copy rotations, revealing budget, testing, and strategy shifts.

Why this matters:

- Identify top-performing ad variants (based on persistence)

- Detect A/B testing patterns (headline, CTA, format)

- Track seasonal campaigns and their durations

- Reveal how aggressively competitors are bidding on key terms

How to do it:

- Select 10–20 high-intent keywords

- Run SERP scraping every 6 hours or daily using tools like Puppeteer or Playwright

- Log and compare all sponsored placements

- Track timestamped changes across headline, CTA, extensions, and rankings

| Metric | Strategic Insight |
| --- | --- |
| Ad presence frequency | Budget allocation and dominance |
| Ad copy changes (by week) | A/B testing and new campaign rollout |
| Ranking shifts | Bid adjustments or competition level |
| Entry/exit of new players | New market entrants or campaign pauses |

Example in action:

If a competitor’s ad for “automated invoicing tool” appears twice daily for three weeks and then suddenly disappears, something changed: budget exhaustion, poor performance, or a pivot. If a new variant appears immediately after, the more likely explanation is a copy test.

Pro Tip:

Use lightweight DOM diffing tools to compare HTML snapshots over time. You’ll catch even subtle copy tweaks and test variants.
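If you have already extracted the ad text, you may not need a full DOM diff. The sketch below uses Python’s built-in `difflib` on the ad copy itself, a simpler stand-in for DOM diffing, and adds a presence-rate helper for the frequency metric. The function names and sample data are hypothetical.

```python
import difflib


def word_diff(old, new):
    """Return (removed_words, added_words) between two versions of ad copy."""
    matcher = difflib.SequenceMatcher(a=old.split(), b=new.split())
    removed, added = [], []
    for op, a0, a1, b0, b1 in matcher.get_opcodes():
        if op in ("replace", "delete"):
            removed.extend(matcher.a[a0:a1])
        if op in ("replace", "insert"):
            added.extend(matcher.b[b0:b1])
    return removed, added


def presence_rate(snapshots, advertiser):
    """Share of snapshots in which the advertiser had a sponsored placement."""
    if not snapshots:
        return 0.0
    return sum(advertiser in s for s in snapshots) / len(snapshots)


# Each snapshot is the set of advertisers seen on one scheduled scrape (made up).
snapshots = [
    {"invoicely.example", "billfast.example"},
    {"invoicely.example"},
    {"billfast.example"},
    {"invoicely.example"},
]

print(word_diff("Automated Invoicing Tool: Try Free",
                "Automated Invoicing Tool: Get 50% Off"))
print(presence_rate(snapshots, "invoicely.example"))
```

Word-level diffs keep the output readable for non-technical teammates, who mostly want to know which words in the headline changed.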

#7. Building a Competitor Intelligence Dashboard with Scraped Ad Data

Raw data isn’t strategy. But once you layer in time, geography, and format—and map it visually—you get a dashboard that shows not just what your competitors are doing, but how often, where, and why.

Why this matters:

- Centralizes insights from SERPs, landing pages, reviews, and forums

- Empowers real-time decision-making for your Google Ads team

- Helps justify spend, react to competitor moves, and plan faster

What to include in your dashboard:

| Data Feed | Dashboard Element |
| --- | --- |
| SERP ad data (text + rank) | Heatmap by keyword and region |
| Ad copy versioning | Timeline of changes with variant tagging |
| Landing page changes | Visual diffs with CTA shifts and pricing |
| Review/forum mentions | Word clouds, sentiment score trends |
| Competitor presence | Share of voice per keyword per week |

Example:

Imagine your dashboard shows that Competitor A increased ad frequency for “AI resume screening” in Q1, and reviews also show rising mentions of “fast filtering” features. Within weeks, their landing page copy shifts to highlight “20x faster screening” — and their Google Ads headline now leads with it.
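One of the feeds above, share of voice per keyword per week, reduces to a small aggregation. The sketch below assumes each scrape yields `(day, keyword, advertiser)` rows and defines share of voice as an advertiser’s fraction of all sponsored placements observed that week; both the row shape and that definition are assumptions, and the data is invented.

```python
from collections import Counter, defaultdict
from datetime import date


def share_of_voice(rows):
    """rows: (day, keyword, advertiser).
    Returns {(iso_year, iso_week, keyword): {advertiser: share}}."""
    counts = defaultdict(Counter)
    for day, keyword, advertiser in rows:
        iso = day.isocalendar()
        counts[(iso[0], iso[1], keyword)][advertiser] += 1
    return {
        bucket: {adv: n / sum(c.values()) for adv, n in c.items()}
        for bucket, c in counts.items()
    }


# Made-up placements observed during one ISO week.
rows = [
    (date(2025, 1, 6), "ai resume screening", "competitor-a.example"),
    (date(2025, 1, 7), "ai resume screening", "competitor-a.example"),
    (date(2025, 1, 8), "ai resume screening", "competitor-b.example"),
    (date(2025, 1, 9), "ai resume screening", "competitor-a.example"),
]

for bucket, shares in share_of_voice(rows).items():
    print(bucket, shares)
```

The resulting dictionary maps directly onto a weekly chart: one series per advertiser, one point per week.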

#8. Detecting Ad Budget Shifts & Strategy Changes via Web Signal Aggregation

SERP data is just one layer. When you connect it with scraped insights from job boards, pricing pages, keyword trends, and third-party ad networks, you can tell whether a competitor is ramping up, testing something new, or quietly cutting back.

Why this matters:

- You get early signals of budget reallocation

- You spot changes in target audience or funnel strategy

- You understand why a campaign is succeeding — not just what it says

What to scrape (beyond SERPs):

| Source | Data Type | Signal Example |
| --- | --- | --- |
| Job boards (e.g., Indeed) | Hiring patterns | Competitor hiring more performance marketers = budget boost |
| Third-party display networks | Retargeting ads | Seeing a surge in programmatic display = funnel expansion |
| Product listing ads (PLAs) | eComm catalog coverage | New product categories added = new keyword spend |
| Press release wires | Campaign launches, funding news | Funding round = likely ad scale-up |
| G2/Capterra profiles | Paid vs organic traffic trend | Ad share increases vs SEO = channel shift |

Real-world use case:

Suppose you notice that your competitor’s Google Ads presence has dropped for two key terms over the last 10 days. Most teams would assume budget cuts or strategy changes.

But if your scraper also picks up that they:

- Launched new product bundles via scraped Shopify feeds

- Increased programmatic ads seen on ad networks like Outbrain or Taboola

- Posted 3 open roles for “B2B Paid Search Manager”

You now know: it’s not a pullback. It’s a repositioning — likely towards a new campaign, audience, or funnel model. Your team can react faster and better, based on real-world signal triangulation — powered entirely by web scraping.
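The triangulation logic in this use case can be written down as a simple rule. The sketch below is illustrative only: the 20% drop threshold, the "two corroborating signals" rule, and the signal names are arbitrary assumptions that you would tune against your own data.

```python
def interpret(serp_presence_change, other_signals):
    """Classify a change in SERP ad presence using corroborating signals.

    serp_presence_change: fractional change in ad presence (-0.4 means a 40% drop)
    other_signals: dict of signal name -> bool (True if the signal was observed)
    """
    corroborating = sum(1 for active in other_signals.values() if active)
    if serp_presence_change >= -0.2:  # threshold is an arbitrary assumption
        return "steady"
    if corroborating >= 2:            # so is the two-signal rule
        return "likely repositioning"
    return "possible pullback"


# Signals mirroring the use case above (values are made up).
signals = {
    "new_product_bundles": True,
    "programmatic_display_up": True,
    "paid_search_roles_open": True,
}

print(interpret(-0.5, signals))
print(interpret(-0.5, {"new_product_bundles": False}))
```

Even a crude rule like this makes the team’s reasoning explicit, so it can be reviewed and adjusted instead of living in someone’s head.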

Pro Tip:

Use a change detection engine like Diffbot, ContentKing, or custom-built webhooks to monitor updates to pages like “Plans,” “Pricing,” or “Case Studies” on competitor domains.

Trend Watch: The Future of Adwords Competitor Analysis with Web Scraping

Web scraping isn’t static — and neither is the competitive landscape on Google Ads. As brands adopt more automation and AI in paid marketing, scraping practices are also evolving. Here are the key trends shaping how teams extract and act on Adwords intelligence in 2025 and beyond.

1. From Snapshots to Streams

Brands are moving from one-off SERP scrapes to live monitoring systems that stream ad data every few hours. This shift allows marketers to:

- React to competitor copy changes in near real time

- Spot daily shifts in ad rank or extension types

- Automate bid adjustments based on competitor presence

2. NLP-Powered Competitor Mapping

Instead of just storing scraped copy, teams are now applying natural language processing (NLP) to:

- Classify competitors by tone (price-led, feature-led, risk-reduction)

- Tag value props by frequency

- Detect new offer patterns (e.g., “only today,” “unlimited seats,” “integrates with X”)
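As a rough illustration of tone classification, the sketch below uses keyword matching as a simple stand-in for a trained NLP model. The term lists and the `classify_tone` function are assumptions, and plain substring matching will misfire on some copy (for example, "free" also matches "freedom").

```python
# Toy term lists per tone: illustrative assumptions, not a trained classifier.
TONE_TERMS = {
    "price-led": ["free", "discount", "save", "% off", "per month"],
    "feature-led": ["integrates", "built-in", "automation", "unlimited", "dashboard"],
    "risk-reduction": ["no credit card", "cancel anytime", "money-back", "guarantee"],
}


def classify_tone(ad_text):
    """Return the tone whose terms appear most often, or 'unclassified'."""
    text = ad_text.lower()
    scores = {tone: sum(term in text for term in terms)
              for tone, terms in TONE_TERMS.items()}
    best = max(scores, key=scores.get)  # ties resolve to the first tone listed
    return best if scores[best] > 0 else "unclassified"


ads = [
    "Save 30% Off Annual Plans",
    "CRM + Slack built-in, unlimited seats",
    "Cancel anytime. No credit card required.",
]

for ad in ads:
    print(ad, "->", classify_tone(ad))
```

Counting these labels per competitor per month gives a quick view of whether their messaging is drifting from features toward price, or the reverse.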

3. Multi-Locale Precision Targeting

Thanks to improved proxy routing and headless browser accuracy, scraping ad data from hyperlocal SERPs (e.g., searches from Mumbai, Dallas, or Berlin) is finally reliable. This lets brands:

- Track regional rollouts by competitors

- Adjust local messaging and CTAs

- Detect early international expansion moves

4. Connecting Ads with Org Signals

Ad analysis is being paired with scraped data from:

- Job boards (marketing headcount = budget increases)

- Press releases (product launch = ad spike)

- G2 reviews (sentiment spike = messaging pivot)

This multi-source triangulation is becoming standard for forward-leaning performance marketers.

5. AI-Generated Ad Mockups (Based on Scraped Trends)

Some brands now use scraped ad copy as training data to generate predictive ad variants using GenAI — helping teams:

- Forecast competitor direction

- Pre-empt their messaging with counter-ads

- A/B test headlines before the competition does


FAQs

1. What is Google Adwords competitor analysis?

It’s the process of monitoring and analyzing your competitors’ Google Ads campaigns — including their keywords, ad copy, landing pages, and bidding behavior — to gain strategic insights and optimize your own campaigns.

2. Can I track competitor ads without violating Google’s policies?

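It depends on how you do it. Collecting publicly visible SERP data and landing pages is widely practiced, but legality varies by jurisdiction, and Google’s terms of service restrict automated querying of its search results. Avoid personal data, respect robots.txt where applicable, and review the terms of each site you scrape.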

3. How does web scraping help with keyword research in Google Ads?

Scraping lets you discover real-time keyword usage in competitor ads, identify long-tail opportunities in their URLs or metadata, and map regional differences in keyword targeting — all beyond what keyword planners show.

4. What tools are best for scraping Google Ads data?

Popular tools include Puppeteer, Playwright, Selenium (for headless browsing), rotating proxy services for geo-targeting, and custom scrapers built on Python frameworks. Many companies use PromptCloud to handle this as a managed solution.

5. How do I know if a competitor is increasing their ad spend?

You can detect spend surges by monitoring ad frequency across keywords, new campaign rollouts, hiring spikes in marketing roles, or sudden increases in display ads or press activity — all of which can be scraped and triangulated.