**TL;DR**

Web scraping doesn’t end at extraction. For scraped data to drive decisions, it needs to meet clear quality thresholds; freshness, accuracy, schema validity, and coverage. This playbook shows how to apply layered QA checks, track SLAs, and involve human review when automation falls short. It includes validation logic, sampling strategies, GX expectations, and what metrics to expose downstream.

Why Scraped Data Needs a Dedicated QA Stack

Web scraping is brittle by design. Sites change layouts, fields disappear, proxies fail, anti-bot systems block requests, and JavaScript loaders silently break page rendering. Without a dedicated QA layer, errors like these make their way into the dataset—and into decisions.

Most teams validate scraped data informally, if at all. They spot-check a few rows, re-run failed jobs, or trust that “no errors in the logs” means the data is fine. That doesn’t hold up in production. If your data powers dashboards, training sets, pricing logic, or ML models, QA isn’t optional—it’s infrastructure.

Why It’s Not Just a Scraper Problem

Data quality issues rarely originate from the scraper alone. They come from:

- Schema drift: The field price_usd becomes price, or disappears from the DOM

- Silent failures: Pages render blank, load alternate HTML, or return fallback prices

- Partial coverage: Only 65% of SKUs were collected, but the report assumes 100%

- Outdated snapshots: The dataset includes listings that went out of stock 12 hours ago

- Overwrites: Failed crawls overwrite clean data from earlier successful runs

These aren’t one-off bugs. They’re recurring patterns—especially at scale. QA makes them visible, traceable, and fixable before the data is handed off.

Why Web Data QA Is Different

Web data isn’t static. It changes by the minute. You’re not validating a single source—you’re validating tens of thousands of semi-structured pages, often with inconsistent fields, formats, or delivery times. Your QA stack must handle:

- Dynamic HTML and JSON formats

- Frequent layout updates

- Variable field presence and formats

- Region-specific content changes

- Different tolerances per client or use case

Traditional ETL QA won’t cut it. You need validation logic built for scraped data’s unique challenges.

The Cost of Ignoring QA

Without structured QA:

- Your pricing engine may react to wrong competitor data

- ML models may learn from mislabeled, outdated, or partial inputs

- Analysts may waste hours cleaning what should have been filtered upstream

- Clients may lose trust in your data and not come back

That’s why scraped data needs its own QA infrastructure; purpose-built for real-world web extraction.

Need reliable data that meets your quality thresholds?

Your data collection shouldn’t stop at the browser. If your scrapers are hitting limits or you’re tired of rebuilding after every site change, PromptCloud can automate and scale it for you.

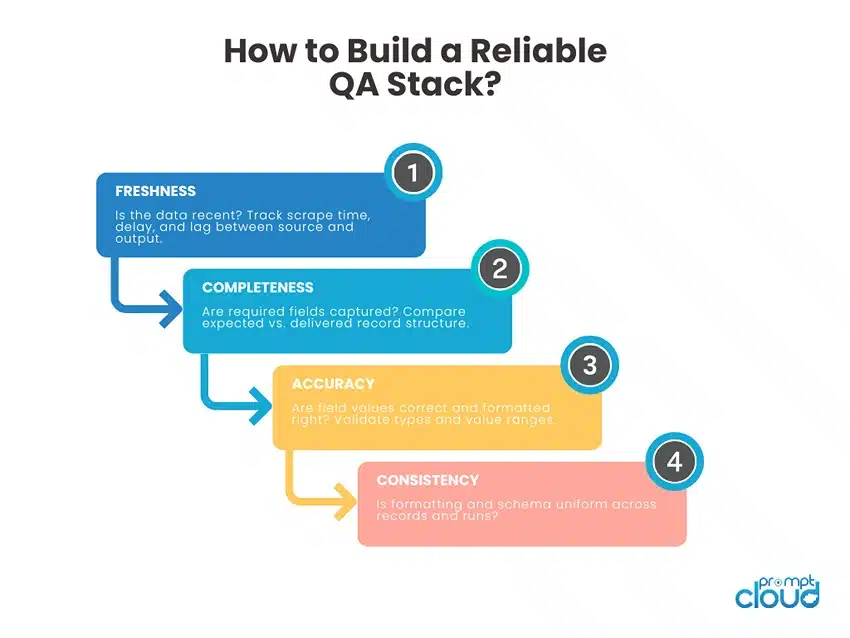

The Layers of Data Quality for Scraping Projects

Not all data issues are equal. Some break your pipeline. Others quietly distort analysis. To manage them, scraped data QA must be structured into layers. Each layer focuses on a specific dimension of quality. Below are the five core layers every scraping pipeline should track.

Freshness

Is the data recent enough to reflect the current state of the web?

- Was the product in stock when scraped, or 18 hours earlier?

- Are prices still valid, or have promotions expired?

- Did the job actually complete, or did it pick up stale cache data?

What to track:

- Timestamps per record (scrape_ts, last_seen)

- Data lag between detection and ingestion

- Coverage gaps from failed or delayed runs

Freshness SLAs often vary by industry. For ecommerce, 95 percent of updates should land within 15 minutes. For real estate, hourly or daily may be acceptable.

Completeness

Did you extract everything you expected?

- Were all required fields captured?

- Did the scraper skip a product tab or missing section?

- Were partial pages mistakenly marked as successful?

What to validate:

- Mandatory field presence checks

- Null ratios per field

- Comparison against known counts (e.g. 250 SKUs expected, only 194 scraped)

Accuracy

Is the data correct and formatted properly?

- Did a $1,199.00 price get parsed as 1199000?

- Did availability flip from “In stock” to “0” due to HTML changes?

- Are product specs matched to the correct variant?

How to catch it:

- Field-level validations (regex, numeric ranges, currency symbols)

- Field type coercion (e.g. price must be float, availability must be enum)

- GX expectations (covered in next section)

Consistency

Does the data align across runs and within the dataset?

- Are units and formats consistent across records?

- Are seller names or SKUs following the same patterns?

- Does the schema drift between pages or over time?

QA checks:

- Key format validation (e.g. all SKUs follow SKU-12345)

- Date format enforcement

- Field alignment across batches

Coverage

Are you scraping what you said you would?

- Did all product categories, city listings, or zip codes get included?

- Did 15 percent of URLs time out silently?

- Are some subdomains blocked by the target site?

Metrics to track:

- Total intended targets vs. successful scrape count

- Geo, category, or tag-based coverage

- Historical trend of scrape failures by segment

Each layer tells you something different. Freshness alerts you to crawl lags. Accuracy catches field-level issues. Coverage shows systemic gaps. Together, they give you a full picture of data quality.

To see QA in action, this automotive dataset page outlines how coverage and accuracy enable price benchmarking and part availability tracking.

Schema Validation, Field QA, and GX Expectations

Scraped data doesn’t come with guarantees. The schema can shift overnight. Fields can vanish or change formats silently. Without automated schema checks, you’re trusting every record blindly. Schema validation is the first QA filter your data should hit. It prevents malformed or incomplete rows from moving downstream.

What Schema Validation Should Cover

- Field presence: Every record should have required fields like url, sku, price, and availability.

- Field type enforcement:

- price should be a float, not a string or empty

- availability should be one of: in_stock, out_of_stock, low_stock

- last_seen should be a valid ISO 8601 datetime

- Value formatting:

- currency should match known codes (USD, INR)

- price should not contain symbols like $ or commas

- Regex validation:

- URLs must start with https://

- SKUs follow a specific alphanumeric format (e.g., SKU-XXXXX)

- Cross-field logic:

- If price_discounted exists, price_original must also exist

- If availability = in_stock, then price must not be null

Note: Scraped data powers decisions; this data validation breakdown covers why broken schemas and unmonitored fields hurt accuracy.

Introducing GX (Great Expectations) for Web Data

Great Expectations is an open-source framework that lets you define “expectations” for your data. While it’s often used in ETL, it’s also effective for scraped datasets.

Example: Define expectations for a price field.

expect_column_values_to_be_between:

column: price

min_value: 0.5

max_value: 100000

expect_column_values_to_match_regex:

column: currency

regex: “^[A-Z]{3}$”

These can run automatically after each scrape job. Failures can trigger alerts, retries, or fallback pipelines.

For field validation and rule-based schema checks, Great Expectations offers a powerful framework that works well with scraped datasets.

Sample Field QA Result (JSON)

{

“record_id”: “SKU-1289”,

“validation_errors”: [

{

“field”: “price”,

“error”: “Non-numeric value”,

“value”: “FREE”

},

{

“field”: “availability”,

“error”: “Unrecognized status”,

“value”: “maybe_later”

}

],

“last_validated”: “2025-09-24T14:20:00Z”

}

This level of field-level QA lets you track issues at scale, debug fast, and prevent downstream damage.

Real-Time Observability: What to Track and Why

Scraping isn’t reliable unless it’s observable. Logging success or failure per job isn’t enough. You need visibility into what’s being scraped, when, how often, and how well. Observability is what connects your raw data pipeline to the QA, reliability, and business outcomes that depend on it. Without it, you’re blind to problems until users report them or worse, act on bad data.

What Should Be Observable in a Scraping System?

- Job-level Metrics

- Start time, end time, duration

- Total URLs attempted, completed, failed

- Proxy usage, retries, headless fallback rate

- Page-level Health

- Time to first byte (TTFB)

- DOM render duration (for headless jobs)

- 200/404/429/500 response distributions

- Response size anomalies

- Field-level QA Stats

- Field null rate per batch

- Regex or type validation failures

- Rate of unexpected field values (e.g. price: null with availability: in_stock)

- Diff & Drift Tracking

- Schema drift detection (new, missing, renamed fields)

- Distribution shifts (e.g. 90% of prices suddenly zero)

- Variance in field structure across domain/subdomain

- Freshness Monitoring

- Last successful scrape per domain

- Time lag between event_ts and scrape_ts

- Job staleness by category or feed

- Coverage & SLA Gaps

- Missed targets vs scheduled targets

- Recovery time from job failures

- SLA compliance rates (95% on-time, 99% complete, etc.)

If you care about pipeline reliability, this guide to real-time scraping architectures explains how QA fits into streaming data pipelines.

Sample Observability Dashboard Widgets

| Metric | Threshold | Alert Type |

| Field validation error % | > 2% | Slack/Email Alert |

| Missing SKUs (weekly) | > 5% gap | Retry + Escalation |

| Median scrape delay | > 20 min | Dashboard Only |

| Proxy failure rate | > 8% | Rotate Pool Trigger |

| Schema drift detected | Yes | Annotator Review |

Real-time observability isn’t just about catching outages. It lets you detect quality regressions, root out silent failures, and prioritize human QA when automation misses the signal.

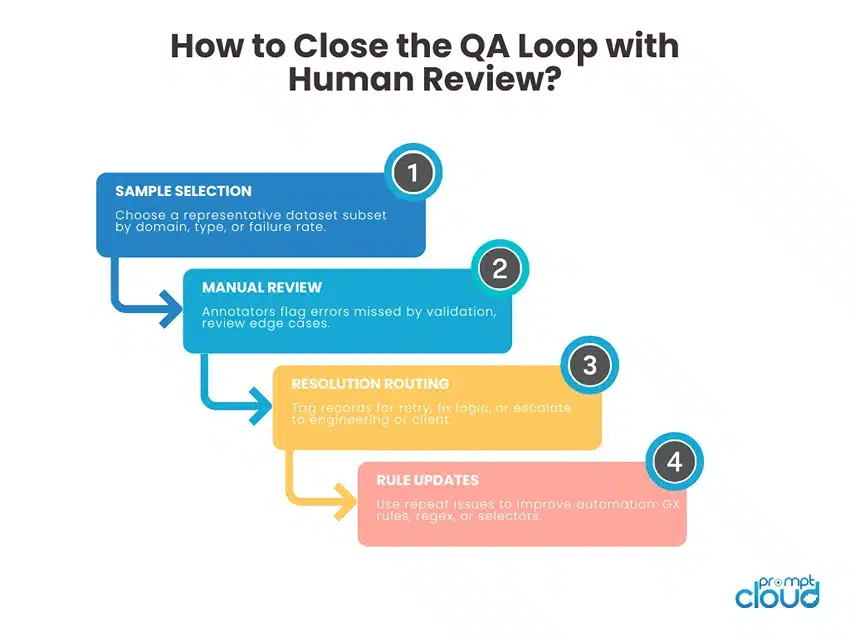

QA Sampling at Scale: When and How to Add Human Review

Automation catches a lot. But not everything. No matter how many schema checks or GX expectations you write, some issues slip through—especially issues related to language, context, or visual layout.

This is where human-in-the-loop QA comes in. Sampling a small percentage of output for manual review helps you catch what machines miss and gives you feedback loops that improve both the scraper and validation logic.

When to Trigger Manual QA Sampling

- After Schema Drift

- New or renamed fields detected

- Significant increase in validation errors

- New or renamed fields detected

- On Business-Critical Fields

- Price, availability, seller names, ratings

- Fields that directly impact dashboards or model input

- When Confidence Is Low

- High proxy failure rate

- Large drop in scrape volume

- Increased fallback to headless rendering

- For New or Changed Targets

- New domains or page templates added to the crawl set

- Recently updated JavaScript-rendered sections

- Periodically (for baseline)

- Weekly sampling for each pipeline

- Rolling review for long-term clients or datasets

Recommended Sampling Strategy

| Dataset Size | Sampling Rate | Sample Size |

| < 10,000 | 5% | 500 |

| 10K – 100K | 2% | 2,000 |

| 100K+ | 0.5% – 1% | 1,000 – 5,000 |

Choose a stratified sample across domains, categories, or record types. Random sampling is fine for general QA, but not enough when some categories carry more risk than others.

Also, for ecommerce signals, this sentiment analysis playbook shows how quality review data supports better trend prediction.

Sample QA Review Form (for Annotators)

| Field | Value | Issue? | Notes |

| SKU | SKU-4893 | No | |

| Price | 899.00 | Yes | Missing currency symbol |

| Availability | in_stock | No | |

| Image URL | [✓] Present | Yes | Broken link |

| Product Title | N/A | Yes | Field missing on live page |

Use this format to track errors per annotator, per sample, and per failure type.

Feedback Loop

- Push human-flagged issues back to the scraper team

- Convert repeat issues into automated GX expectations

- Add temporary overrides for problematic sites until resolved

- Track resolution rates and time-to-fix per issue type

Human QA doesn’t replace automation. It strengthens it. It helps you close the gap between synthetic validation and real-world correctness.

Annotation Layers: What Should Be Labeled, and by Whom?

Annotation is not just for training data. In web scraping, labeled outputs help QA teams, engineers, and clients understand what went wrong and why. When automated validation can’t resolve an issue, annotated metadata flags it for review, resolution, or exclusion.

But not all fields need annotation. And not all annotations should be manual. The key is to define which parts of the dataset require structured, explainable labeling and who is responsible for producing it.

When to Add Annotation

- When auto-validation fails repeatedly

Flag records that consistently fail schema or regex checks despite retries. - During partial page recovery

If a scraper renders only part of a product page (e.g. no reviews, no pricing), annotate which sections were missing. - For business-critical fields

Fields like price, stock_status, or rating that impact downstream revenue, analytics, or compliance need clearer QA tagging. - When escalation is needed

Annotate records that require human re-verification or need to be excluded from a scheduled delivery.

Types of Annotations in Scraping QA

| Annotation Type | Example | Generated By |

| Field-level error | price: non-numeric | Automated validation |

| Recovery action | retried_headless, fallback_ok | Scraper runtime |

| QA review tag | needs_review, ok, drop | Human reviewer |

| Confidence score | title_score: 0.78 | Model or heuristic |

| Reason code | promo_missing, img_broken | QA rule engine |

Example: Annotated Record (JSON)

{

“sku”: “SKU-4958”,

“price”: “N/A”,

“availability”: “in_stock”,

“annotations”: {

“price_error”: “non-numeric”,

“retry_attempted”: true,

“manual_review”: true,

“qa_tag”: “needs_review”

}

}

This annotated output lets your downstream pipeline filter, flag, or request resolution without guessing.

Who Should Annotate What?

- Scraper engine: Flags retry attempts, fallback use, and structural anomalies.

- Validation layer: Labels field errors, failed expectations, and schema mismatches.

- QA analyst: Tags reviewed records, flags issues for escalation, and overrides auto-labels when wrong.

- Client reviewer (optional): In high-stakes use cases (e.g. regulatory), clients may verify random samples via an annotation dashboard.

Annotation isn’t overhead. It’s how you turn a noisy, brittle extraction process into a traceable, auditable pipeline with explainable outcomes.

SLAs for Scraped Data: What’s Reasonable and What’s Not

SLAs tell teams what “good” looks like. They set shared expectations across engineering, QA, and stakeholders. For scraped data, SLAs should reflect how often targets change, how critical the fields are, and how the data is consumed downstream.

Core SLA Dimensions

- Freshness – Defines how quickly updates appear in your dataset after they change on source pages. Typical targets:

- Ecommerce prices and stock: 90–95 percent within 15–30 minutes, 99 percent within 60 minutes

- Travel and real estate: 95 percent within 2–4 hours

- Reviews and UGC: daily or twice daily

- Completeness – Measures whether you delivered the intended scope. Typical targets:

- URLs or SKUs covered: 98 percent per run

- Required fields present per record: 99 percent

- Accuracy – Covers correctness of parsed values and field formats. Typical targets:

- Critical fields (price, availability, title): 99.5 percent valid

- Noncritical fields (breadcrumbs, badges): 97–99 percent valid

- Consistency – Ensures uniform formats, units, and enumerations across the dataset. Typical targets:

- Standardized currency and units across 100 percent of records

- Enumerated statuses mapped correctly across 99.5 percent of records

- Coverage Verifies that geography, categories, or segments match the agreed scope. Typical targets:

- Category or geo coverage: 98 percent of planned segments per cycle

- Missed segments resolved in the next cycle

SLA Table: Targets and Impact

| Metric | Typical Target | What It Impacts | Notes for Buyers and Teams |

| Freshness | 95% ≤ 30 min for price and stock | Repricing, buy box, stock alerts | Align crawl cadence with event triggers |

| Completeness | 98% URLs, 99% required fields | Analytics, dashboards | Track nulls per field, per domain |

| Accuracy | 99.5% for price and availability | Finance, merchandising | Validate types, ranges, and enums |

| Consistency | 100% currency and unit standardization | Modeling, joins, multi-source merge | Enforce mapping dictionaries |

| Coverage | 98% categories or regions | Market share, benchmarking | Monitor gaps and re-run missed segments |

What Is Not Reasonable

- One hundred percent accuracy on dynamic sites at scale

- Instantaneous freshness for every target without event triggers or APIs

- Guaranteed coverage when targets rate-limit or rotate layouts aggressively without notice

How to Operationalize SLAs

- Publish targets and measurement methods in your runbook

- Track SLA compliance per run and per client in your observability dashboard

- Tie retries and escalation rules to SLA breaches

- Share monthly QA summaries with failure types, root causes, and fixes

Clear SLAs prevent surprises. They help teams negotiate tradeoffs and keep data consumers confident in what they receive.

Building the Feedback Loop: QA → Retrain → Retry → Replace

Catching errors isn’t enough. A mature data quality system doesn’t just log what went wrong—it fixes it, learns from it, and prevents it from happening again. This is where the QA loop closes. The goal is to turn every failure into a signal: for retraining models, improving field logic, updating selectors, or alerting clients with transparency.

What a Functional QA Feedback Loop Looks Like

- Detection

- Field-level failures are logged (e.g., price out of range, image missing)

- Schema drifts or null spikes are flagged in observability

- Routing

- Issues are automatically routed by type: scraper team, annotators, validation layer

- Critical issues (e.g., broken price fields) are escalated instantly

- Resolution Paths

- Auto-retry: If scraper failed due to timeout, rotate proxies and reattempt

- Fallback: Use cached version, alternate DOM selector, or structured feed if available

- Manual Review: Send to human annotator for correction or confirmation

- Learning from Errors

- Update GX expectations or schema rules based on failure patterns

- Retrain regexes or field extractors using corrected samples

- Refactor page-specific extractors or reclassify domain templates

- Client Transparency

- Include flags or tags in the dataset when a record was corrected, retried, or inferred

- Provide audit logs and sampling reports where needed

- Share root cause summaries and action taken in monthly QA briefings

What This Loop Prevents

- Silent data corruption from layout changes

- Repeated human review for fixable automation gaps

- Loss of trust from downstream consumers

- Wasted cycles chasing bugs already resolved in other pipelines

High-quality scraped data doesn’t come from one perfect run. It comes from a repeatable system that catches, resolves, learns, and improves with every cycle.

Need reliable data that meets your quality thresholds?

Your data collection shouldn’t stop at the browser. If your scrapers are hitting limits or you’re tired of rebuilding after every site change, PromptCloud can automate and scale it for you.

FAQs

1. What is data quality in web scraping?

It refers to how accurate, complete, timely, and usable your scraped data is. Quality means you can trust the data to make decisions, train models, or power automation without cleanup.

2. How do you validate scraped data fields?

Use field-level checks like type enforcement, regex rules, required field presence, and value ranges. Add schema validation and track nulls, mismatches, and failed expectations.

3. Why is human QA still needed with automation?

Some errors—like missing context, layout drift, or mislabeled content—are hard to catch with rules. Human sampling catches what automation misses and improves the system over time.

4. What are typical SLAs for scraped data delivery?

Freshness: 90–95% of updates in 30 minutes. Completeness: 98–99% of fields filled. Accuracy: 99.5% for core fields. SLAs vary by domain and use case.

5. Can I customize data QA thresholds per project?

Yes. High-risk projects (like pricing) may require tighter thresholds. Others may accept looser SLAs. Good QA systems allow per-client or per-domain customization.