**TL;DR**

Training foundation models used to mean massive labeled datasets, manual annotation, and endless cleaning. Today, by combining synthetic datasets scraping with web-collected data, enterprises can feed models without traditional labels. You scrape, transform, and augment into high-volume synthetic data that supports fine-tuning and retrieval workflows.

What you’ll learn in this article:

- How scraping web data feeds AI datasets through synthetic generation

- What pipelines look like when you skip manual annotation entirely

- How you balance scale, quality and bias when using synthetic scraped data

- Real use cases across AI training, sentiment systems and foundation-model fine tuning

Takeaways:

- Web scraping becomes training data generation, not just monitoring

- Synthetic scraping unlocks scale without labeling bottlenecks

- Quality controls and bias mitigation matter more than ever

Here is how the story begins: you need to fine-tune a large language model. You know you need millions of examples. But you don’t want to wait months for annotation teams. Instead you tap into the web. You scrape reviews, forums, comment threads, product listings – the raw material of inference. Then you feed that into a synthetic generation pipeline. The model uses those scraped inputs to generate new examples, label them, and expand them. Suddenly you have a full training set.

That is the promise of synthetic datasets scraping. Scraping no longer stops at data collection. It becomes the first stage in dataset creation: acquisition, cleaning, augmentation, and synthetic generation. In this article we’ll walk you through:

- Why scraped web data becomes synthetic dataset fuel

- Pipeline architecture for converting scraped web data into synthetic training sets

- Bias, quality and governance considerations when you skip labels

- Use cases across foundation model training, review generation, and AI datasets

- Best practices, tooling, and what “unlabeled” training really means

Ready? Let’s flip the switch on dataset creation.

Why Scraped Web Data Is the New Synthetic Dataset Fuel

Every large AI model needs data, and not just a little. Billions of tokens, millions of examples, and endless context diversity. For years, that meant one thing: labeled datasets built by hand. But manual labeling doesn’t scale. It’s slow, expensive, and biased toward what annotators understand.

That is where scraped web data comes in. The open web is already the largest, most diverse data lake in existence. It contains opinions, structures, instructions, and narratives in every format imaginable. When collected responsibly, this raw material can be transformed into synthetic datasets that rival, and sometimes outperform traditional labeled corpora.

What Makes Web Data So Powerful

- Diversity of expression

Scraped data captures natural variation. One person says “five stars,” another writes “worth every penny.” That linguistic spread trains models to handle nuance. - Context depth

Product listings, reviews, FAQs and more help models understand relationships between attributes, sentiment, and facts (structured and unstructured). - Constant refresh

The web changes every second. Scraping gives you a living dataset, perfect for continuous fine-tuning rather than one-off training. - Label-free potential

You can derive pseudo-labels automatically. A review with “terrible service” becomes a negative sample. A post with “great quality” becomes a positive one. No human tag required.

Why Synthetic Generation Fits

Synthetic dataset creation takes scraped data and amplifies it. You start with a few examples, then use generative models to produce more in the same style or tone. The synthetic layer fills gaps in missing languages, rare classes, under-represented categories; while preserving statistical realism.

In essence, scraping gives you the seed data, and synthetic generation grows the forest.

Want to know more? Learn about this approach described in car marketplaces web scraping case study.

Want to generate synthetic datasets directly from your scraped feeds?

See how our managed data pipelines deliver clean, structured inputs ready for model training and fine-tuning.

How Synthetic Datasets Are Built from Scraped Sources

Turning scraped data into a usable synthetic dataset is not a single command. It’s a careful pipeline where raw, messy text becomes structured, balanced, and model-ready. The process starts with scraping but ends with simulation.

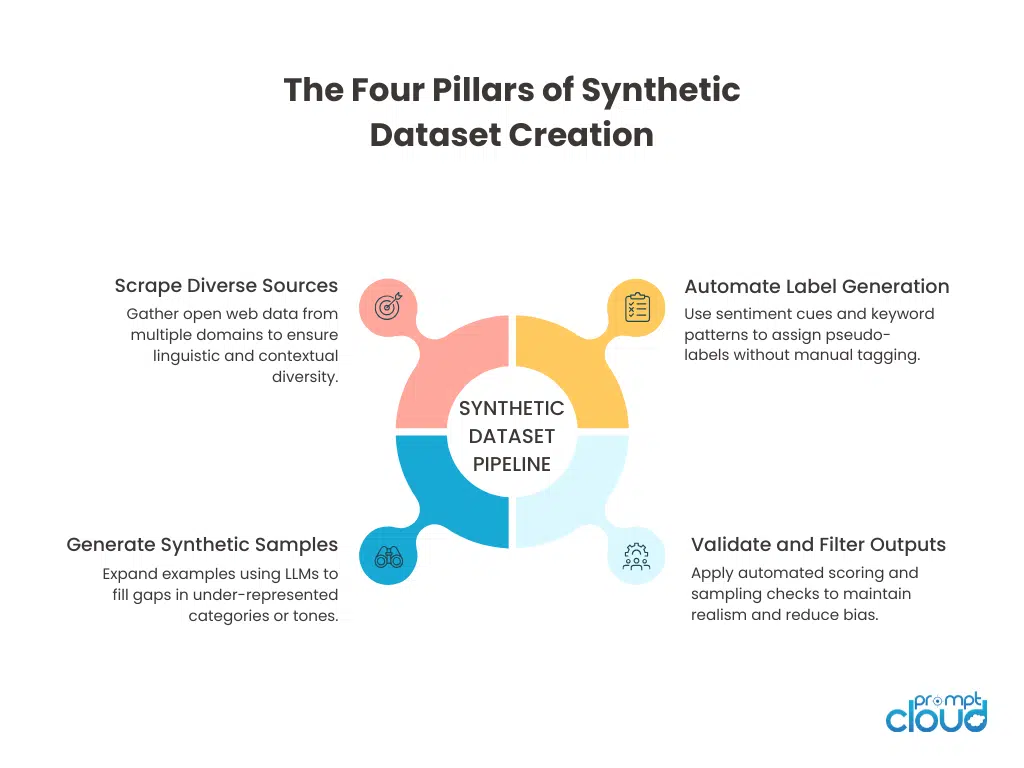

Step 1: Collect plus Clean

Scraping is the foundation. Teams gather data from multiple open sources — product listings, social posts, reviews, or support forums. The cleaning phase removes spam, duplicates, boilerplate text, and off-topic noise. It’s not about volume alone; quality at this stage determines whether the synthetic expansion phase works.

Step 2: Structure and Annotate Automatically

Once you have clean text, you add structure. This includes basic parsing (titles, ratings, timestamps) and automatic pseudo-labeling. For instance, sentences with “amazing,” “excellent,” or “would buy again” become positive examples, while “poor quality” or “never again” become negative.

Step 3: Generate the Synthetic Data

Using generative AI models, teams expand the dataset by prompting them to create similar examples. A review like “Battery life is short” might spawn ten new variants expressing the same idea differently.

This is where “synthetic datasets scraping” becomes literal; scraped input seeds with endless variations that give the model broader generalization power.

Step 4: Validate and Filter

Synthetic doesn’t mean unchecked. Validation filters out duplicates, contradictions, or extreme bias. Models like GPT-4 or Claude can score each record for relevance and coherence. The remaining high-quality set becomes your synthetic corpus.

Step 5: Feed and Fine-Tune

The finished dataset moves into the training pipeline. Some companies use it for fine-tuning existing foundation models; others blend it with labeled data to improve performance in low-resource categories.

Figure 1: The four essential stages that turn scraped data into high-quality synthetic datasets ready for model training.

The Synthetic Dataset Creation Pipeline

| Stage | Input Source | Process | Output |

| Collection | Web pages, reviews, listings, articles | Scrape, clean, deduplicate | Raw text corpus |

| Auto-Annotation | Cleaned text | Keyword and sentiment detection | Pseudo-labeled data |

| Synthetic Generation | Labeled samples | AI model expands examples | Large synthetic dataset |

| Validation | Synthetic outputs | Scoring, deduplication, bias checks | Filtered, coherent data |

| Training | Validated dataset | Fine-tune or augment models | Improved performance |

This loop can run continuously. As new data is scraped, the system regenerates synthetic samples, updates the fine-tuned model, and closes the feedback cycle.

It’s the same adaptive loop that drives many real-time scraping frameworks, like the dynamic processes used in social media scraping for sentiment analysis. The only difference here is that the output isn’t a dashboard, it’s an AI-ready dataset.

Real-World Use Cases of Synthetic Datasets from Scraping

Let’s look at how enterprises are already putting this to work.

1. Sentiment and Opinion Modeling

Customer reviews, comments, and social media posts are the perfect raw material for synthetic sentiment datasets. AI models can analyze scraped reviews, label tone automatically, and generate new examples that express the same emotion in different words.

That expansion helps fine-tune LLMs to detect nuance, a big leap from binary positive or negative scoring. For instance, a team scraping Twitter-like posts can use them to train models for emotion detection, then synthetically expand the dataset to include sarcasm, mild frustration, or exaggerated praise.

2. Product and Review Generation

E-commerce platforms use synthetic review datasets to fine-tune recommendation or summarization models. Scraped product data provides structure (price, category, brand), while scraped user reviews provide tone and detail. Synthetic generation fills in missing classes, for example creating balanced samples of both praise and complaints.

A beauty retailer might scrape thousands of product listings and generate synthetic reviews that reflect typical customer sentiment. The resulting dataset trains the company’s LLM to summarize or predict buyer satisfaction without needing manual tags.

3. Market Intelligence and Forecasting

Synthetic datasets are also valuable for industries that need predictive intelligence but lack labeled training data. Take the automotive sector. By scraping car listings, feature descriptions, and pricing patterns, teams can generate synthetic data to simulate demand scenarios or detect feature-price correlations.

4. News and Event Stream Training

Scraped news data gives models a timeline of public events, opinions, and policy changes. Synthetic augmentation creates additional phrasing and related event contexts, improving how models summarize or classify breaking stories.

This mirrors workflows in how to scrape news aggregators, except the target here is not journalists but machine readers, foundation models that learn to identify patterns in narrative tone, political framing, or topic clustering.

5. Electric Vehicle and Energy Market Models

EV companies and analysts scrape vehicle specifications, charging data, and market announcements to build synthetic datasets for performance and pricing predictions. Synthetic generation expands these datasets with new combinations of attributes such as mileage, charging capacity, and region, helping LLMs predict adoption trends or optimize fleet configurations.

That is the same raw data captured in real-time EV market data scraping, only now it’s used for machine learning rather than monitoring. Each of these examples highlights the same pattern: scrape, synthesize, retrain, repeat. Synthetic datasets from scraping are not a shortcut; they are a smarter route to model maturity.

Quality, Bias, and Validation in Synthetic Scraped Data

Synthetic datasets only work if they reflect the real world accurately. The danger with web-sourced data is that while it’s rich, it’s also noisy, inconsistent, and opinion-heavy. When you amplify that through synthetic generation, any flaw multiplies.

That’s why quality control and bias mitigation are not side steps; they are the spine of the process.

Why Bias Appears in Synthetic Datasets

Bias often creeps in through source imbalance. If most scraped content comes from a narrow demographic or region, the model’s synthetic outputs will mirror those same gaps. Another issue is sentiment skew: online platforms often attract stronger opinions, which can distort tone distribution in training data.

Synthetic generation can also introduce new bias by over-representing certain patterns the model finds “safe.” For instance, when prompted to create neutral reviews, it might default to repetitive phrasing like “good quality and decent price.” Without oversight, the dataset becomes bland and predictable.

Quality Validation Techniques

- Sampling and manual review

Even in automated pipelines, humans remain critical. Reviewing a small random slice of synthetic output reveals tone drift, bias amplification, and hallucination. - Cross-source balancing

Mix data from different platforms and formats, product reviews, news text, support forums, and Q&A sites. The wider the base, the more balanced the generated dataset. - Automated scoring

Use LLMs themselves to rate coherence, diversity, and bias indicators. A scoring script can flag samples that look repetitive or lack sentiment balance. - Distribution monitoring

Track word frequency and sentiment distribution across iterations. A sudden spike in positivity or uniform phrasing often signals synthetic feedback loops. - Benchmark comparison

Validate model performance on downstream tasks before and after using synthetic scraped data. If results improve in recall but drop in nuance, it’s a sign of over-smoothing.

Why Validation Never Ends

Quality isn’t a single checkpoint. Each retraining cycle needs another audit because scraped sources evolve. A shift in platform language, new product categories, or seasonal reviews can quietly alter dataset tone. Continuous validation ensures your synthetic corpus grows with the market, not away from it.

This emphasis on traceability and evaluation is what separates experimental pipelines from production-grade AI data operations. The more synthetic your data gets, the more human your validation should be.

Tools and Frameworks Powering Synthetic Dataset Generation from Scraping

Building synthetic datasets from scraped data isn’t just about collection, it’s about orchestration. The tools you choose decide how well your system scales, how fast it validates, and how safely it learns.

A recent analysis from Dataversity explores how generative AI and synthetic data pipelines are reshaping model training. The article highlights that scraping, augmentation, and synthetic generation are merging into one workflow, making it possible for enterprises to build high-volume datasets without touching a single label.

That vision is already taking shape through modern scraping and generation frameworks.

1. ScraperGPT and LangChain Extensions

ScraperGPT and LangChain-based scrapers allow users to move from text prompts to fully functional scraping workflows. Combined with synthetic data prompts, they can collect, label, and generate variations automatically. Each loop enriches the dataset further without new manual input.

2. Data Augmentation APIs

APIs like OpenAI’s fine-tuning endpoints or Cohere’s dataset expansion modules can take scraped data as input and output balanced, bias-reduced synthetic text. They help fill gaps in low-frequency examples, such as niche product categories or underrepresented languages.

3. Managed Web Data Platforms

Enterprise platforms such as PromptCloud provide the foundation — scalable, structured, and compliant data feeds ready for AI training. Once the web data is extracted, teams plug it directly into their synthetic generation stack. This eliminates the bottleneck of data engineering and ensures all scraped data follows privacy and consent boundaries before model ingestion.

4. Synthetic Validation Frameworks

Frameworks like Cleanlab, Snorkel, and Giskard bring auditability. They detect labeling errors, monitor bias propagation, and score dataset diversity. These tools are becoming essential when synthetic data volume overtakes labeled data volume.

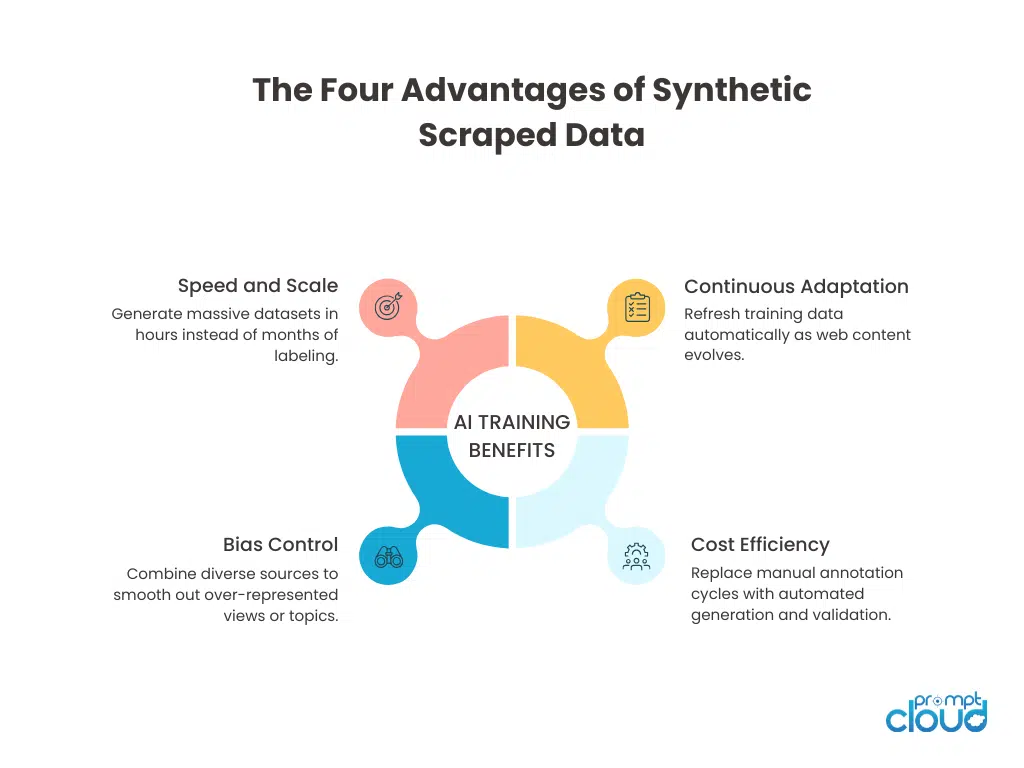

Figure 2: Key advantages that make synthetic datasets from scraping the fastest and most flexible data source for modern AI models.

What Each Tool Solves

| Tool Type | Core Function | Primary Benefit |

| ScraperGPT / LangChain | Automatic scraper and prompt generation | Converts raw web data into synthetic-ready structure |

| Augmentation APIs | Data balancing and gap filling | Expands low-frequency samples |

| Managed Platforms (PromptCloud) | Reliable, compliant data feeds | Ensures legality and structure before training |

| Validation Frameworks | Quality and bias monitoring | Keeps synthetic data realistic and explainable |

These systems together create a self-sustaining ecosystem. The scraper collects, the generator expands, the validator refines, and the managed platform governs. Synthetic datasets no longer feel artificial; they behave like living, learning organisms that evolve with every scrape.

The Future of Scraped Synthetic Data – What It Means for AI Training in 2025

Scraped data was once seen as a shortcut to something quick, messy, and good enough for exploratory analysis. That perception has flipped. Today, it is becoming the backbone of scalable synthetic data generation. The reason is simple: it’s not just abundant; it’s alive.

Foundation models need context, tone, and diversity to stay relevant. The web provides that constantly, and synthetic generation makes it usable without human labeling. This partnership between scraping and synthesis is now a strategic advantage.

In the coming years, we’ll see models trained almost entirely on synthetic scraped data that never touched a manual annotation pipeline. Each cycle of scraping and generation will sharpen the model’s understanding of natural language and edge cases.

Enterprises that build their own scraping-to-synthesis ecosystems will gain three things:

- Speed: faster dataset generation and retraining

- Adaptability: continuous updates as web data evolves

- Control: the ability to fine-tune model behavior without external dependencies

Synthetic datasets will also improve the ethical side of AI training. When built correctly, they minimize exposure to private user data and allow teams to simulate diversity without scraping sensitive content. It’s a way to balance scale with responsibility.

The most interesting shift, however, will be in how teams think about ownership. Instead of paying for labeled datasets, companies will own their data generation engines with pipelines that never run dry. The result is a world where every scrape can become a new dataset, every dataset can evolve into a better model, and every model feeds the next wave of synthetic creation.

That is how AI begins to sustain itself.

Ready to explore synthetic dataset generation from your own web data?

See how our managed data pipelines deliver clean, structured inputs ready for model training and fine-tuning.

FAQs

Synthetic datasets from scraping are AI training corpora built by collecting raw data from public web sources, cleaning it, and using generative models to expand it into labeled or semi-labeled examples. The scraped data serves as the seed, while AI generates variations to create scale and diversity.

Scraped data provides real-world structure and linguistic diversity. It’s cost-effective and constantly updating. When combined with generative models, it becomes the perfect foundation for training LLMs and other AI systems without depending on manual annotation or static datasets.

It accelerates data availability. Teams can fine-tune models more often, test edge cases, and reduce bias by generating balanced examples. Because the data pipeline is continuous, models stay aligned with live trends, tone, and language evolution.

Yes, if done ethically and within compliance limits. Only publicly available, non-sensitive, and non-restricted content should be collected. Platforms like PromptCloud ensure that every scrape adheres to robots rules, data privacy laws, and source permissions before it becomes training material.

When labeled data is scarce, scraped data provides the context and format needed to generate synthetic samples. For instance, a few examples of customer reviews can be expanded into thousands of similar entries that help a model learn sentiment, tone, and writing style.

The biggest challenges are bias, data noise, and validation. Poor-quality sources can distort results, and overgeneration can create repetitive or unrealistic samples. Continuous evaluation, filtering, and source diversity are essential to maintain data reliability.

Not entirely. They complement rather than replace them. Synthetic data fills gaps, improves coverage, and accelerates training, but real labeled datasets are still necessary for ground-truth benchmarking and bias correction.

Frameworks such as LangChain, ScraperGPT, Snorkel, and Cleanlab are popular choices. They automate scraping, labeling, validation, and augmentation in one flow, turning raw web data into fine-tuning material for foundation models.

![Export Website To CSV A Practical Guide for Developers and Data Teams [2025 Edition]](https://www.promptcloud.com/wp-content/uploads/2025/10/Export-Website-To-CSV-A-Practical-Guide-for-Developers-and-Data-Teams-2025-Edition-100x80.webp)