**TL;DR**

Imagine you didn’t have to write a new scraper every time a site changed. Instead, AI web scraping agents (call them ScrapeChain, CrawlGPT, or autonomous crawlers) detect change, rewire themselves, and spin up mini-pipelines on demand.

In this article, you’ll learn how these next-gen agents:

- Build multi-step scraping workflows automatically

- Handle layout drift, anti-bot defenses, and path routing

- Scale without human intervention once configured

- Balance autonomy with governance, QA, and compliance

Takeaways:

- Agentic scraping is shifting scraping from code maintenance to orchestration

- These agents layer LLMs, graph planning, and logic chaining

- Governance, monitoring, and fallback modes are still critical

Let me paint a picture. You’re a data ops lead. A new competitor launches a site with dozens of product pages. You need to get specs, prices, and images, fast. Usually that’d mean spinning up a manual scraper, testing selectors, and fixing breaks.

But with AI web scraping agents, the game changes. These agents examine a URL, infer data structure, deploy themselves, adapt when layouts shift, and even trigger new pipeline branches when new entity types appear. They act like little dev teams living inside your scraping stack.

The name “ScrapeChain” comes from combining “scrape” and “pipeline chain”: agents that self-orchestrate a chain of tasks such as crawling, extraction, validation, redirection, and cleanup. Coupled with tools like CrawlGPT, AutoScraper, and emergent agent architectures, scraping is no longer a collection of fragile scripts; it becomes a modular, agentic ecosystem.

In this article, we’ll walk through:

- What AI web scraping agents really are (beyond buzz)

- How they build pipelines — architecture, planning, fallback paths

- How they handle anti-bot defenses and layout drift

- Where governance, QA, and compliance fit in

- Real use case scenarios and future directions

Ready? Let’s dig in.

What Are AI Web Scraping Agents (and Why They Matter)

Let’s get one thing straight: these aren’t your traditional crawlers with smarter branding. AI web scraping agents are self-directed programs that can interpret, plan, and act, all within your data collection ecosystem.

Traditional crawlers follow instructions. Agents write them.

Where a typical scraper waits for selectors, an AI agent infers the pattern of a page, identifies what’s valuable, and decides how to extract it. It doesn’t just scrape; it thinks about how to scrape. That’s the key shift.

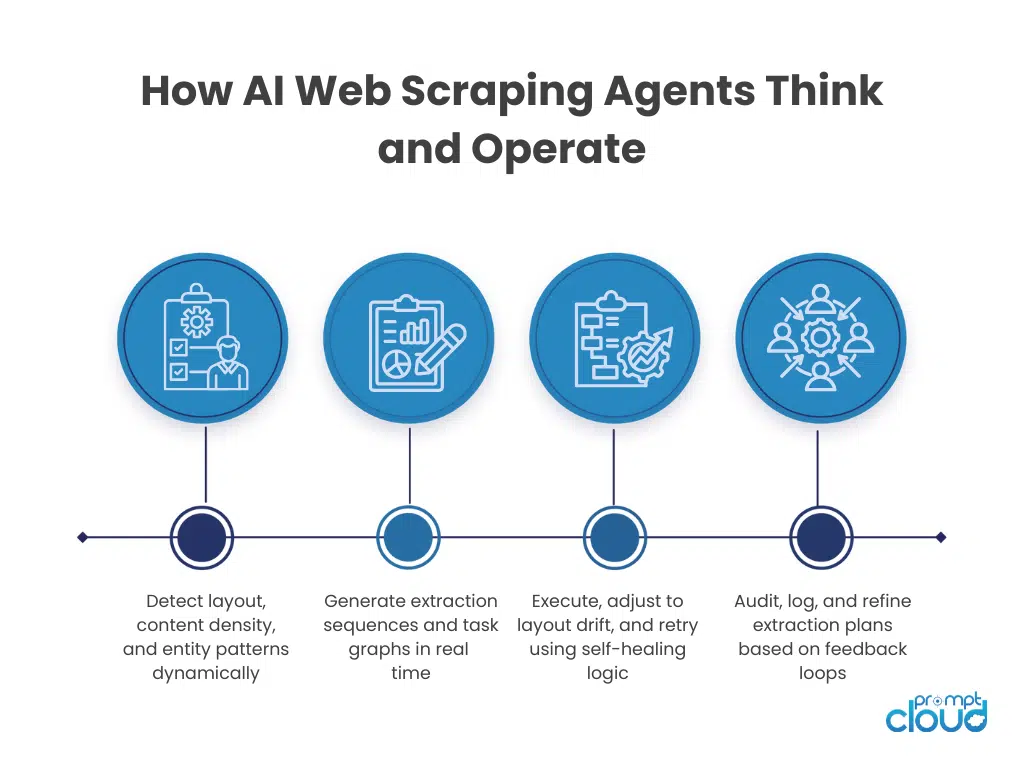

Figure 1: How AI web scraping agents reason and adapt through an observe–plan–act loop that keeps pipelines running even when sites change.

How They Work at a High Level

Each agent combines three key layers:

- Observation Layer: It scans the DOM, identifies structure, content density, and data hierarchies. Example: On an eCommerce page, it can tell that “price,” “availability,” and “title” belong to one product card, even if there’s no schema markup.

- Planning Layer: The agent then generates a workflow graph. It decides which pages to visit, how often, and which links represent new entities versus repetitive noise.

- Action Layer: It executes the crawl autonomously, adapting to JavaScript, retries, and anti-bot mechanisms on the fly.

This reasoning loop mirrors how human engineers think: observe, plan, act, then review the result and repeat.
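To make the loop concrete, here is a minimal sketch in Python. It is an illustration under simplifying assumptions, not how any particular product implements it: the "page" is a plain dict rather than a real DOM, and `AgentState`, `observe`, `plan`, `act`, and `run_once` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """Hypothetical state carried between loop iterations."""
    known_fields: set = field(default_factory=set)
    history: list = field(default_factory=list)


def observe(page: dict) -> set:
    # Observation layer: report which labelled fields are present on the page.
    return set(page.keys())


def plan(state: AgentState, seen: set) -> list:
    # Planning layer: schedule extraction only for fields not yet captured.
    return sorted(seen - state.known_fields)


def act(page: dict, steps: list) -> dict:
    # Action layer: pull each planned field from the page.
    return {name: page[name] for name in steps}


def run_once(state: AgentState, page: dict) -> dict:
    seen = observe(page)
    steps = plan(state, seen)
    record = act(page, steps)
    # Review: remember what was extracted so the next pass skips it.
    state.known_fields |= set(record)
    state.history.append(steps)
    return record


state = AgentState()
first = run_once(state, {"title": "Desk lamp", "price": "19.99"})
second = run_once(
    state,
    {"title": "Desk lamp", "price": "19.99", "availability": "in stock"},
)
```

On the second pass the page has gained an `availability` field, and the plan contains only that new field. That is the toy version of an agent noticing that a page has changed and adjusting its work accordingly.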

Why This Matters for Enterprises

Enterprises don’t just want data; they want continuity. Most scraper breakages happen because of layout drift — small HTML or structure changes that invalidate old selectors. AI agents overcome this by learning patterns, not rules.

Instead of relying on brittle CSS paths, they look for semantic signals. So when a site relabels “Price” to “Amount” or moves “Reviews” into a tab, the agent still finds it.
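A rough sketch of what matching on meaning instead of position can look like is below. The synonym table and function names are assumptions made for illustration; a real agent would more likely lean on an LLM or embedding similarity than on a hand-written list.

```python
import re

# Hypothetical synonym table; a production agent would more likely use an
# LLM or embedding similarity instead of a hand-written list.
FIELD_SYNONYMS = {
    "price": {"price", "amount", "cost"},
    "reviews": {"reviews", "ratings", "customer reviews"},
}


def normalize(label: str) -> str:
    # Lowercase and strip punctuation such as a trailing colon.
    return re.sub(r"[^a-z ]", "", label.lower()).strip()


def map_labels(labelled_values: dict) -> dict:
    """Map site-specific labels onto canonical schema fields."""
    record = {}
    for label, value in labelled_values.items():
        key = normalize(label)
        for canonical, synonyms in FIELD_SYNONYMS.items():
            if key in synonyms:
                record[canonical] = value
    return record


# The same product before and after the site relabels its fields.
before = map_labels({"Price:": "$20", "Reviews": "112"})
after = map_labels({"Amount": "$20", "Customer Reviews": "112"})
```

Both calls produce the same canonical record, so downstream consumers never see the relabeling.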

Another major advantage is parallelization. A single ScrapeChain deployment can spawn hundreds of smaller task-specific agents, each focused on one subdomain, category, or dataset, all feeding into a shared schema.

It’s automation layered on top of automation.

The Strategic Payoff

For data teams, this means fewer firefights. Less time patching scripts, more time refining datasets.

For product teams, it means getting web data that’s always fresh, not just recent. And for compliance and governance? It’s a step closer to traceable automation: every action logged, every schema versioned.

Agentic scraping is not hype; it’s the inevitable evolution of scale. When you can delegate crawling logic itself, the bottleneck shifts from development to orchestration.

Want to see how autonomous agents can manage scraping at enterprise scale?

Your data collection shouldn’t stop at the browser. If your scrapers are hitting limits or you’re tired of rebuilding after every site change, PromptCloud can automate and scale it for you.

Inside the ScrapeChain: How AI Agents Build Autonomous Pipelines

A ScrapeChain isn’t a single crawler; it’s a network of agents that build, adjust, and maintain pipelines without human supervision. Think of it as a living organism inside your data infrastructure, constantly adapting to the web’s shifting structure.

The Anatomy of a ScrapeChain

Every ScrapeChain pipeline operates as a chain of specialized agents, each trained for one job. Together, they replace the manual tasks that once took developers days to coordinate.

- Discovery Agent: This one scouts the terrain. It crawls a given domain, identifies recurring URL patterns, and maps all the unique entity types it finds — products, reviews, listings, or posts.

- Extraction Agent: Once the structure is known, this agent identifies data-rich zones and learns how to extract them without being told exact selectors. It adapts when content shifts from HTML to JSON or when layouts are dynamically rendered.

- Validation Agent: Every dataset it collects passes through a validation step that checks schema integrity, duplicates, and content consistency. If the data fails, the agent triggers a self-corrective feedback loop to adjust extraction logic automatically.

- Governance Agent: This layer keeps logs, validates ethical collection, respects robots.txt, and checks rate limits to ensure responsible behavior.

The result is a chain of self-correcting tasks where one agent’s output becomes another’s input, forming a continuous cycle of discovery, extraction, and refinement.
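One simple way to picture "one agent’s output becomes another’s input" is a list of functions that each take and return a shared context. The sketch below assumes that shape. The four functions, the `/product/` URL pattern, and the canned `pages` store are all hypothetical stand-ins for real crawling and extraction.

```python
from typing import Callable

Context = dict
Agent = Callable[[Context], Context]


def discovery(ctx: Context) -> Context:
    # Keep only URLs that look like entity pages (hypothetical "/product/" pattern).
    ctx["targets"] = [u for u in ctx["urls"] if "/product/" in u]
    return ctx


def extraction(ctx: Context) -> Context:
    # Stand-in for real extraction: look each target up in a canned page store.
    ctx["records"] = [ctx["pages"][u] for u in ctx["targets"]]
    return ctx


def validation(ctx: Context) -> Context:
    # A record is valid only if every required field is present.
    required = {"title", "price"}
    ctx["valid"] = [r for r in ctx["records"] if required.issubset(r)]
    ctx["rejected"] = len(ctx["records"]) - len(ctx["valid"])
    return ctx


def governance(ctx: Context) -> Context:
    # Append an audit entry describing what this run touched.
    ctx.setdefault("audit", []).append(
        {"targets": len(ctx["targets"]), "rejected": ctx["rejected"]}
    )
    return ctx


def run_chain(ctx: Context, agents: list) -> Context:
    # Each agent receives the context produced by the previous one.
    for agent in agents:
        ctx = agent(ctx)
    return ctx


result = run_chain(
    {
        "urls": ["/product/1", "/about", "/product/2"],
        "pages": {
            "/product/1": {"title": "Lamp", "price": "19.99"},
            "/product/2": {"title": "Chair"},
        },
    },
    [discovery, extraction, validation, governance],
)
```

Here the second product is missing a price, so validation rejects it and the governance step records that rejection in the audit trail. In a fuller system that rejection count is the signal that would feed the corrective loop.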

Figure 2: The four-agent ScrapeChain framework showing how discovery, extraction, validation, and governance layers collaborate to build autonomous data pipelines.

Planning, Chaining, and Reuse

What makes a ScrapeChain powerful isn’t that it scrapes autonomously. It’s that it learns from itself.

When an agent completes a crawl, it logs the workflow: what worked, what failed, and which strategies avoided blocks. The next agent uses that data as a blueprint for its own run.

Over time, the system develops a shared knowledge graph: a network of crawl logic that gets richer with every scrape. That’s what allows AI scraping frameworks to scale horizontally across industries without retraining from scratch.

Multi-Step Workflows in Action

Picture this: A ScrapeChain is tasked with monitoring a car rental site.

- The Discovery Agent maps city-level listing URLs.

- The Extraction Agent scrapes details like availability, pricing, and vehicle type.

- The Validation Agent catches a spike in missing data for one region and triggers a feedback loop.

- A Governance Agent pauses scraping on that section until validation clears.

In human terms, that’s the equivalent of a full data engineering team working overnight, except it happens automatically.
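The validation and pause steps in that scenario boil down to a per-region missing-data rate and a threshold. A minimal sketch, assuming records are dicts with a `region` key and that a 50% threshold is the pause policy (both are illustrative choices, not values from a real deployment):

```python
from collections import defaultdict


def missing_rate_by_region(records: list, field: str) -> dict:
    """Share of records per region where `field` is absent or None."""
    totals = defaultdict(int)
    missing = defaultdict(int)
    for rec in records:
        totals[rec["region"]] += 1
        if rec.get(field) is None:
            missing[rec["region"]] += 1
    return {region: missing[region] / totals[region] for region in totals}


def regions_to_pause(records: list, field: str, threshold: float = 0.5) -> set:
    # Hypothetical policy: pause any region whose missing rate exceeds the threshold.
    rates = missing_rate_by_region(records, field)
    return {region for region, rate in rates.items() if rate > threshold}


sample = [
    {"region": "austin", "price": 42.0},
    {"region": "austin", "price": 39.5},
    {"region": "denver", "price": None},
    {"region": "denver", "price": None},
    {"region": "denver", "price": 55.0},
]
paused = regions_to_pause(sample, "price")
```

Two of the three Denver records lack a price, so only Denver is paused while Austin keeps running.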

Integration with LLM Planning Systems

Modern ScrapeChain deployments often plug into LLM planners that handle task orchestration and error correction. If an agent hits a new site type, an LLM determines the sequence of steps: which sub-agents to spawn, which APIs to hit, and which output schema to follow.

These LLMs also help agents “explain” themselves — producing logs in natural language that describe why certain paths were taken, which selectors changed, or why a source was skipped. That explainability is what makes agentic systems enterprise-ready.

DYK: Many of these pipelines borrow design logic from real-time data orchestration systems like those used for real-time SKU tracking in F&B. The same agentic logic that adjusts to product updates now powers ScrapeChain architectures across industries.

How LLMs, Planning, and Self-Correction Power ScrapeChain Intelligence

The real intelligence behind AI web scraping agents doesn’t come from their ability to fetch data; it comes from how they plan, reason, and recover. Modern ScrapeChain systems integrate LLMs not as operators but as coordinators, deciding what each agent should do, in what order, and how to react when something breaks.

Let’s unpack how this triad of logic works.

The LLM Layer: Planning and Context Awareness

In traditional pipelines, scrapers follow static workflows: crawl, extract, validate, repeat. But LLM-driven agents operate more like project managers. They interpret the target domain, decide the best approach, and instruct specialized agents to execute subtasks.

Here’s how the roles differ:

| Aspect | Traditional Web Scraper | AI Web Scraping Agent (LLM-Driven) |
| --- | --- | --- |
| Workflow Definition | Hardcoded rules and paths | Dynamically planned via prompt logic and feedback |
| Error Handling | Manual debugging | Self-healing based on logs and anomaly detection |
| Adaptation to Layout Drift | Requires manual fix | Adjusts selector strategy autonomously |
| Scalability | Linear (1 scraper per site) | Exponential (1 ScrapeChain orchestrating 100+ agents) |
| Explainability | Minimal logs | Natural-language reports generated by LLM reasoning |

This shift replaces static logic trees with prompt-based reasoning loops. Agents can “talk” to each other, share results, and decide if a task was successful before handing it off downstream.

Multi-Agent Coordination: The Chain-of-Thought in Action

When a site introduces new layouts or embedded content, ScrapeChain agents don’t simply fail; they negotiate. The Extraction Agent sends a failure signal, the LLM planner interprets it, and the Discovery Agent is instructed to remap the DOM structure. That negotiation loop is what keeps pipelines running through site changes without developer intervention.

How ScrapeChain Agents Communicate and Self-Correct

| Trigger Event | Detected By | Response | Result |
| --- | --- | --- | --- |
| Page layout changes | Validation Agent | LLM planner generates new extraction plan | Workflow updated automatically |
| Missing schema fields | Governance Agent | Alert triggers schema reconciliation | Data gap filled and re-validated |
| Captcha or bot block | Extraction Agent | Proxy rotation and delay rules auto-adjusted | Crawl resumes without manual patching |
| API response changes | LLM planner | Updates mapping logic via chain memory | Pipeline continuity preserved |
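In code, a trigger-and-response table like this one often ends up as a dispatch map from event type to handler. The sketch below assumes that design. The event names, handler functions, and backoff parameters are hypothetical, and the handlers return a description of the response rather than performing it.

```python
def backoff_delay(attempt: int, base: float = 2.0, cap: float = 60.0) -> float:
    """Exponential backoff in seconds, capped so delays stay bounded."""
    return min(cap, base * 2 ** attempt)


def on_layout_change(event: dict) -> str:
    return f"replan extraction for {event['url']}"


def on_missing_fields(event: dict) -> str:
    return f"reconcile schema fields {sorted(event['fields'])}"


def on_bot_block(event: dict) -> str:
    delay = backoff_delay(event["attempt"])
    return f"rotate proxy and wait {delay:.0f}s"


def on_api_change(event: dict) -> str:
    return f"update field mapping for {event['url']}"


# Event type -> handler, mirroring the rows of the table above.
HANDLERS = {
    "layout_change": on_layout_change,
    "missing_fields": on_missing_fields,
    "bot_block": on_bot_block,
    "api_change": on_api_change,
}


def respond(event: dict) -> str:
    handler = HANDLERS.get(event["type"])
    if handler is None:
        # Unknown events are not guessed at; they go to a person.
        return "escalate to human review"
    return handler(event)


blocked = respond({"type": "bot_block", "attempt": 3})
unknown = respond({"type": "tls_error"})
```

The fallback branch matters as much as the handlers: anything the system has no rule for is escalated instead of improvised.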

The Role of Memory and Feedback

Each run builds historical memory — storing site structure, response behavior, and performance metrics. When a similar pattern appears later, the planner recalls the best approach instead of starting from scratch. That’s what makes a ScrapeChain self-evolving: every crawl makes it smarter.
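Stripped to its core, that memory is a lookup from a site pattern to the strategy that has worked best so far. A minimal sketch follows. The `CrawlMemory` class, the pattern labels, and the strategy names are hypothetical; a real system would persist this and key it on something richer than a string.

```python
class CrawlMemory:
    """Remembers the best-performing strategy seen for each site pattern."""

    def __init__(self) -> None:
        self._best = {}  # pattern -> (strategy, success_rate)

    def record(self, pattern: str, strategy: str, success_rate: float) -> None:
        current = self._best.get(pattern)
        # Only overwrite when the new run did strictly better.
        if current is None or success_rate > current[1]:
            self._best[pattern] = (strategy, success_rate)

    def recall(self, pattern: str, default: str = "full_discovery") -> str:
        current = self._best.get(pattern)
        return current[0] if current else default


memory = CrawlMemory()
memory.record("paginated-catalog", "css_cards", 0.72)
memory.record("paginated-catalog", "json_endpoint", 0.95)
memory.record("paginated-catalog", "headless_render", 0.60)

known = memory.recall("paginated-catalog")
unseen = memory.recall("infinite-scroll-feed")
```

A pattern the system has seen before gets its best known strategy; an unseen one falls back to starting from discovery.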

In other words, your web scraping infrastructure begins to operate like an autonomous research team — learning, documenting, and optimizing its own methods.

The Practical Upside

This architecture brings measurable gains:

- Maintenance costs drop because fixes become automated.

- Pipeline uptime rises because recovery is continuous.

- Data quality improves because every failure becomes training material.

And all of this happens while maintaining traceability, compliance, and audit trails — key requirements for enterprise data operations.

FYI: The same agentic planning models now power dynamic orchestration in complex tasks such as real-time stock market data scraping. These multi-agent frameworks reuse the same principles of self-correction and adaptive throttling.

Governance, Safety, and Human Oversight in Autonomous Scraping

The promise of autonomy always brings a question: who’s really in control? With AI web scraping agents, this question isn’t just philosophical; it’s operational. When agents learn, adapt, and deploy themselves, enterprises must enforce a framework that keeps automation auditable, ethical, and under control.

Let’s look at how responsible teams design guardrails without slowing innovation.

The Three Layers of Governance

Governance in ScrapeChain systems works on three levels:

| Layer | What It Governs | Purpose |
| --- | --- | --- |
| Operational Governance | Access permissions, proxy usage, request frequency | Prevents overreach and IP blocks |
| Data Governance | Schema validation, freshness, duplication | Keeps the dataset compliant and traceable |
| Ethical Governance | Source eligibility, content sensitivity, consent checks | Ensures legal and moral data use |

Each layer functions as a checkpoint before data moves downstream.

For example, the operational layer ensures that a crawler never exceeds rate limits. The data layer validates each record before ingestion. The ethical layer screens domains, ensuring no gated, paywalled, or personally identifiable information slips through.
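As a sketch of the operational checkpoint, the snippet below combines a robots.txt check with a minimum-interval rate limiter, using Python’s standard `urllib.robotparser`. The robots.txt content, the `example-agent` user agent, the example.com URLs, and the one-second interval are assumptions for illustration. The clock is passed in explicitly so the behavior is easy to follow.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real agent would fetch it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
"""


def build_robots(text: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


class MinIntervalLimiter:
    """Allows a request only if enough time has passed since the last one."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last = None

    def allow(self, now: float) -> bool:
        if self._last is not None and now - self._last < self.min_interval:
            return False
        self._last = now
        return True


def may_request(url, now, robots, limiter, agent="example-agent") -> bool:
    # Robots rules are checked first, so a disallowed URL never uses up
    # the rate-limit slot.
    return robots.can_fetch(agent, url) and limiter.allow(now)


robots = build_robots(ROBOTS_TXT)
limiter = MinIntervalLimiter(min_interval=1.0)

ok_first = may_request("https://example.com/products/1", 0.0, robots, limiter)
too_soon = may_request("https://example.com/products/2", 0.5, robots, limiter)
disallowed = may_request("https://example.com/account/settings", 5.0, robots, limiter)
ok_later = may_request("https://example.com/products/2", 5.0, robots, limiter)
```

The data and ethical layers would sit behind the same kind of gate, each able to veto a request or record before it moves downstream.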

The Role of the Human-in-the-Loop

Autonomy doesn’t eliminate humans; it elevates them. ScrapeChain engineers review logs, verify anomaly reports, and oversee high-impact domains. They act as governance auditors — signing off before an agent expands to new categories or client pipelines.

A balanced setup looks like this:

- Agents do 90% of the work autonomously.

- Human reviewers inspect the remaining 10%: events flagged by deviation or sentiment thresholds.

- Compliance teams receive weekly reports with full lineage of what each agent scraped, when, and why.

This keeps oversight efficient without killing the speed advantage of AI-driven systems.

Compliance as a Feature, Not a Burden

Modern agent frameworks like ScrapeChain or CrawlGPT embed compliance directly into architecture.

That means no data is stored without validation, no request is made without rate limiting, and no output is passed downstream without timestamp verification. In enterprise-grade deployments, this compliance-first model is critical for both security and reputation. A single questionable scrape can turn into a regulatory headache, especially under laws like GDPR or CCPA.

FYI: That’s why large data platforms integrate validation logic similar to that used in weekly housing market data pipelines, balancing automation with data lineage, freshness, and source verification.

Why Oversight Scales Trust

Autonomous agents work best when stakeholders trust their outputs. By enforcing transparent governance, with every request logged and every decision traceable, enterprises gain confidence that their agent-driven pipelines operate with accountability.

When an audit or client review happens, they can produce evidence, not explanations. That’s how AI-driven scraping scales responsibly: through measurable, monitored autonomy.

Use Cases — How Enterprises Deploy ScrapeChain in the Real World

It’s one thing to talk about agent-based scraping in theory. It’s another to see how it reshapes day-to-day enterprise operations. From eCommerce to finance to research, ScrapeChain systems are already replacing brittle scraping setups with adaptive, intelligent workflows.

Let’s walk through a few real-world scenarios where these agents deliver tangible value.

1. eCommerce: Real-Time Catalog Intelligence

Retailers and marketplaces rely on data freshness — prices, stock levels, and promotions change by the minute. Traditional crawlers often fall behind or break when product layouts update. ScrapeChain agents continuously adapt.

How it works:

- Discovery Agents scan entire category trees.

- Extraction Agents capture product details, pricing, and images.

- Validation Agents detect missing SKUs and automatically retry.

- Governance Agents ensure each scrape respects rate limits and site directives.

Result: product monitoring becomes continuous, not scheduled.

2. Finance: Market Sentiment and Signal Extraction

Financial analysts depend on second-by-second data shifts — press releases, filings, news, and even social signals. AI web scraping agents can monitor hundreds of financial domains simultaneously, learning patterns in how tickers, topics, and tone move together.

How it works:

- Agents classify sources by reliability and sentiment intensity.

- LLMs summarize news context into structured variables.

- Data governance ensures no licensed or non-public information is captured.

Result: faster signal extraction, higher model precision, and auditable compliance.

3. Real Estate: Dynamic Listing Aggregation

Housing data changes daily: listings expire, photos update, and price drops go unnoticed without automation. ScrapeChain agents create continuous monitoring systems that track every movement across property portals and classifieds.

How it works:

- Agents detect new or removed listings automatically.

- LLM planners classify listing type, region, and amenities.

- Deduplication agents remove reposted or redundant entries.

Result: a unified real estate feed that stays updated with minimal human input.
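The deduplication step can be sketched as building a normalized key per listing and keeping the first record seen for each key. The choice of address plus price as the key is an assumption for illustration; real portals usually need fuzzier matching.

```python
def listing_key(listing: dict) -> tuple:
    # Normalize case and whitespace so trivially different reposts collide.
    address = " ".join(listing["address"].lower().split())
    return (address, listing["price"])


def deduplicate(listings: list) -> list:
    """Keep the first listing seen for each normalized key, preserving order."""
    seen = set()
    unique = []
    for listing in listings:
        key = listing_key(listing)
        if key not in seen:
            seen.add(key)
            unique.append(listing)
    return unique


feed = [
    {"address": "12 Oak St", "price": 450000, "source": "portal-a"},
    {"address": "12  oak st ", "price": 450000, "source": "portal-b"},
    {"address": "9 Elm Ave", "price": 610000, "source": "portal-a"},
]
unique_listings = deduplicate(feed)
```

The second entry is a repost of the first with different spacing and casing, so only two listings survive.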

4. Research and Policy: Monitoring Public Information

Governments, NGOs, and think tanks use agentic scraping to collect regulatory updates, climate reports, and policy publications. ScrapeChain agents help track when new data drops or when official sources quietly update historical records.

How it works:

- Agents follow institutional websites, public APIs, and document repositories.

- LLM layers summarize documents, extract metadata, and flag new versions.

- Governance rules ensure collection is limited to publicly released data only.

Result: researchers get cleaner, more structured, and always-current datasets for decision-making.

5. Media and Brand Monitoring: Sentiment at Scale

In digital reputation management, timing matters more than reach. ScrapeChain agents operate as persistent crawlers scanning media coverage, forums, and social chatter — adapting when tone or keywords shift.

How it works:

- Discovery Agents identify brand mentions across non-API sources.

- LLM layers interpret emotion and sentiment with contextual reasoning.

- Alerts route automatically when negative sentiment clusters emerge.

Result: a living feedback loop between reputation, customer insight, and operational response.

The Broader Payoff

Across industries, ScrapeChain agents transform web scraping from maintenance-heavy pipelines into self-managing ecosystems. They don’t just automate extraction; they automate resilience, error recovery, and insight delivery. That’s the leap from crawling to intelligence.

Know more: For an in-depth look at autonomous data agents, see “The Rise of AI Agents for Web Scraping and Automation” on TechTarget. It explores real-world deployments of agentic crawlers across industries.

Challenges, Limitations, and the Future of ScrapeChain Agents

Every new paradigm gets messy at first. AI web scraping agents are powerful, but they’re not magic.

Behind the automation lies a list of very human challenges that teams still need to solve before agentic scraping becomes a default enterprise standard.

1. Model Drift and Context Loss

LLM planners get smarter with feedback, but without regular retraining, they start forgetting context. A site that once worked perfectly may break silently because the model stopped recognizing layout cues.

Fix: establish retraining intervals, version models like code, and log every reasoning chain.

2. Governance vs. Agility

Autonomy loves freedom; compliance demands control. Finding the balance is tricky. Too many restrictions slow agents down; too few create risk.

Fix: build tiered governance. Let low-risk sources run with full autonomy while sensitive or regulated domains route through human approval.
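A tiered setup can be as small as a lookup table plus a cautious default. In the sketch below, the domain names and tier labels are hypothetical; the one deliberate design choice is that unknown domains are treated as high risk.

```python
# Hypothetical risk tiers; unknown domains default to the strictest tier.
RISK_TIERS = {
    "public-catalog.example": "low",
    "filings.example": "high",
}


def route(domain: str, default_tier: str = "high") -> str:
    tier = RISK_TIERS.get(domain, default_tier)
    # Only explicitly low-risk sources run without a human sign-off.
    return "autonomous" if tier == "low" else "human_approval"


decisions = {d: route(d) for d in ["public-catalog.example", "filings.example", "new-site.example"]}
```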

3. Data Verification Bottlenecks

ScrapeChain systems can generate enormous volume. Without matching verification capacity, QA becomes the choke point.

Fix: automate multi-stage validation pipelines and apply sampling audits instead of manual review of full datasets.
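A sampling audit can be sketched with the standard `random` module. The 5% rate and the fixed seed are illustrative assumptions; the seed simply makes an audit reproducible so a reviewer can re-draw the same sample later.

```python
import random


def audit_sample(records: list, rate: float, seed: int = 0) -> list:
    """Draw a reproducible random sample of records for manual review."""
    if not records:
        return []
    # Always review at least one record, even for tiny batches.
    k = max(1, round(len(records) * rate))
    return random.Random(seed).sample(records, k)


batch = [{"id": i} for i in range(200)]
to_review = audit_sample(batch, rate=0.05)
```

Reviewers then check 10 records instead of 200, and the failure rate in the sample estimates the failure rate of the batch.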

4. Explainability and Debugging

LLMs can tell you what they did but not always why. When a planner rewrites a workflow mid-run, engineers need clear traceability.

Fix: embed explainability layers that record reasoning steps, output summaries, and change history.

5. Cost of Autonomy

Autonomous agents require significant compute for inference, orchestration, and validation. That overhead can erase the efficiency gains if not optimized.

Fix: adopt event-driven triggers. Run reasoning only when structural drift or failure is detected, not on every routine crawl.
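One cheap way to detect structural drift is to fingerprint a page’s tag-and-class skeleton and only invoke the expensive planner when that fingerprint changes. The sketch below uses Python’s standard `html.parser` and `hashlib`. The function names and the choice to hash only tag names and class attributes are assumptions, not an established recipe.

```python
import hashlib
from html.parser import HTMLParser


class _TagCollector(HTMLParser):
    """Collects the sequence of opening tags with their class attributes."""

    def __init__(self) -> None:
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        classes = dict(attrs).get("class") or ""
        self.tags.append(f"{tag}.{classes}")


def structure_fingerprint(html: str) -> str:
    collector = _TagCollector()
    collector.feed(html)
    collector.close()
    # Text content is ignored, so price or copy changes do not alter the hash.
    return hashlib.sha256("|".join(collector.tags).encode()).hexdigest()


def needs_replanning(previous, html: str) -> bool:
    return previous is None or structure_fingerprint(html) != previous


v1 = '<div class="card"><span class="price">$20</span></div>'
v2 = '<div class="card"><span class="price">$25</span></div>'
v3 = '<div class="card"><span class="amount">$25</span></div>'

baseline = structure_fingerprint(v1)
price_only_change = needs_replanning(baseline, v2)
layout_change = needs_replanning(baseline, v3)
```

A routine price update leaves the fingerprint untouched, so no reasoning is triggered; renaming the `price` class to `amount` changes it, which is exactly when a planner call is worth paying for.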

The Future: Self-Organizing Data Ecosystems

Over the next few years, we’ll see ScrapeChain evolve from isolated systems into self-organizing data ecosystems. Agents will collaborate across companies, negotiating access, exchanging schemas, and updating each other’s playbooks.

For enterprises, this means:

- Near-zero downtime across thousands of domains

- Transparent data provenance baked into every request

- Lower maintenance costs and faster adaptation to web changes

The final step will be governed autonomy: AI agents operating freely within human-defined boundaries.

Scraping will shift from engineering to orchestration, and “broken scrapers” will become a relic of the past.


FAQs

1. What are AI web scraping agents?

AI web scraping agents are autonomous crawlers that can plan, adapt, and execute scraping workflows without manual coding. They use reasoning models like LLMs to interpret site structure, generate extraction logic, and repair workflows automatically when something breaks.

2. How is a ScrapeChain different from a normal web scraping framework?

A ScrapeChain is a chain of specialized agents that handle different scraping stages — discovery, extraction, validation, and governance. Each agent communicates with the next, forming a self-correcting loop that maintains continuity even when websites change.

3. Can AI web scraping agents replace human developers?

Not entirely. They automate repetitive scraping tasks and recovery processes but still need human oversight for governance, compliance, and complex interpretation. Think of them as copilots for data engineers, not replacements.

4. What kind of AI models power ScrapeChain systems?

ScrapeChain systems combine large language models for reasoning and planning with smaller task-specific models for validation, deduplication, and classification. The LLM decides what to do, while the lightweight agents execute and verify.

5. How do autonomous scraping systems stay compliant with data privacy laws?

Compliance is built into the architecture. Agents follow robots.txt rules, avoid gated or personal data, and log every request for auditability. Managed partners like PromptCloud embed GDPR and CCPA safeguards directly into their orchestration frameworks.

6. What are the main advantages of using AI web scraping agents?

They minimize maintenance, reduce downtime, and adapt instantly to layout changes. They also produce cleaner, validated datasets by learning from every run, which improves both quality and reliability for downstream analytics.

7. What industries benefit most from agent-based scraping?

Any sector where web data changes fast — eCommerce, finance, travel, media, and research — benefits from autonomous pipelines. These systems handle volatility better than traditional crawlers and ensure that data stays accurate in real time.

8. Can ScrapeChain agents collaborate with existing ETL or data warehouse systems?

Yes. Most enterprise deployments integrate agents through APIs or message queues, allowing scraped data to flow directly into ETL, BI, or LLM-based analytics pipelines without reconfiguration.
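As a rough picture of the message-queue handoff, the sketch below uses Python’s in-process `queue.Queue` as a stand-in for a real broker. The `publish` and `drain` helpers and the record fields are hypothetical; the point is only that agents emit serialized records and the ETL side consumes them independently.

```python
import json
import queue


def publish(q: queue.Queue, record: dict) -> None:
    # Serialize to JSON so the consumer does not depend on Python objects.
    q.put(json.dumps(record, sort_keys=True))


def drain(q: queue.Queue) -> list:
    """Consume everything currently on the queue, as an ETL loader might."""
    rows = []
    while True:
        try:
            rows.append(json.loads(q.get_nowait()))
        except queue.Empty:
            return rows


outbox = queue.Queue()
publish(outbox, {"sku": "A-1", "price": 19.99})
publish(outbox, {"sku": "B-2", "price": 5.0})
loaded = drain(outbox)
```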