Ever since the World Wide Web started growing in data size and quality, businesses and data enthusiasts have been looking for ways to extract web data smoothly. Today, the best web scraping tools can acquire data from the websites of your choice quickly and easily. Some are meant for hobbyists, and some are suitable for enterprises.

DIY web scraping software belongs to the former category. If you need data from a few websites of your choice for a quick research task or project, these web scraping tools are more than enough. DIY web scraping tools are much easier to use than programming your own data extraction setup, and they let you acquire data without coding. Here are some of the best data acquisition tools, also called web scraping software, available in the market right now.

Outwit Hub

Outwit Hub is a Firefox extension that can be easily downloaded from the Firefox add-ons store. Once installed and activated, it adds scraping capabilities to your browser. Extracting data from sites using Outwit Hub doesn’t demand programming skills, and the setup is fairly easy to learn. You can refer to our guide on using Outwit Hub to get started with extracting data using the web scraping tool. As it is free of cost, it makes for a great option if you need to crawl some data from the web quickly.


Web Scraper Chrome Extension

Web Scraper is a great alternative to Outwit Hub for Google Chrome users who want to acquire data without coding. It lets you set up a sitemap (plan) on how a website should be navigated and what data should be extracted. It can crawl multiple pages simultaneously and even has dynamic data extraction capabilities. The plugin can also handle pages with JavaScript and Ajax, which makes it all the more powerful. The tool lets you export the extracted data to a CSV file. The only downside to this web scraper extension is that it doesn’t have many automation features built in. Learn how to use a web scraper to extract data from the web.


Spinn3r

Spinn3r is a great choice for scraping complete data from blogs, news sites, social media and RSS feeds. Spinn3r uses a firehose API that manages 95% of the web crawling and indexing work. It gives you the option to filter the data that it crawls using keywords, which helps in weeding out irrelevant content. Spinn3r’s indexing system is similar to Google’s, and it saves the extracted data in JSON format. Spinn3r’s scraping tool works by continuously scanning the web and updating its data sets. It has an admin console packed with features that let you perform searches on the raw data. Spinn3r is one of the best web scraping tools if your data requirements are limited to media websites.


Fminer

Fminer is one of the easiest web scraping tools out there, and it combines ease of use with best-in-class features. Its visual dashboard makes web data extraction from sites as simple and intuitive as possible. Whether you want to crawl data from simple web pages or carry out complex data fetching projects that require proxy server lists, Ajax handling and multi-layered crawls, Fminer can do it all. If your project is fairly complex, Fminer is the web scraper software you need.


Dexi.io

Dexi.io is a web-based scraping application that doesn’t require any download. It is a browser-based tool for web scraping that lets you set up crawlers and fetch data in real-time. Dexi.io also has features that will let you save the scraped data directly to Box.net and Google Drive or export it as JSON or CSV files. It also supports scraping the data anonymously using proxy servers. The crawled data will be hosted on their servers for up to 2 weeks before it’s archived.


ParseHub

ParseHub is a tool that supports complicated data extraction from sites that use AJAX, JavaScript, redirects, and cookies. It is equipped with machine learning technology that can read and analyze documents on the web to output relevant data. ParseHub is available as a desktop client for Windows, Mac, and Linux, and there is also a web app that you can use within the browser. You can have up to 5 crawl projects with the free plan from ParseHub.


Octoparse

Octoparse is a visual scraping tool that is easy to configure. The point-and-click user interface lets you teach the scraper how to navigate and extract fields from a website. The software mimics a human user while visiting and scraping data from target websites. Octoparse gives you the option to run your extraction in the cloud or on your own local machine. You can export the scraped data in TXT, CSV, HTML, or Excel formats.

Web Scraping Tools vs DaaS Providers

Although web scraping tools can handle simple to moderate data extraction requirements, they are not recommended if you are a business trying to acquire data for competitive intelligence or market research. DIY scraping tools can be the right choice if your data requirements are limited and the sites you are looking to crawl are not complicated.

When the requirement is large scale and complicated, tools for web scraping cannot live up to the expectations. If you need an enterprise-grade data solution, outsourcing the requirement to a DaaS (Data-as-a-Service) provider could be the ideal option. Find out if your business needs a DaaS provider.

Dedicated web scraping service providers such as PromptCloud take care of end-to-end data acquisition and will deliver the required data the way you need it. If your data requirement demands a custom-built setup, a DIY tool cannot cover it. Even with the best web scraping tools, the customization options are limited and automation is almost non-existent. Tools also come with the downside of maintenance, which can be a daunting task.

A web scraping service provider will set up monitoring for the target websites and make sure that the web scraper setup is well maintained. The flow of data will be smooth and consistent with a hosted solution.

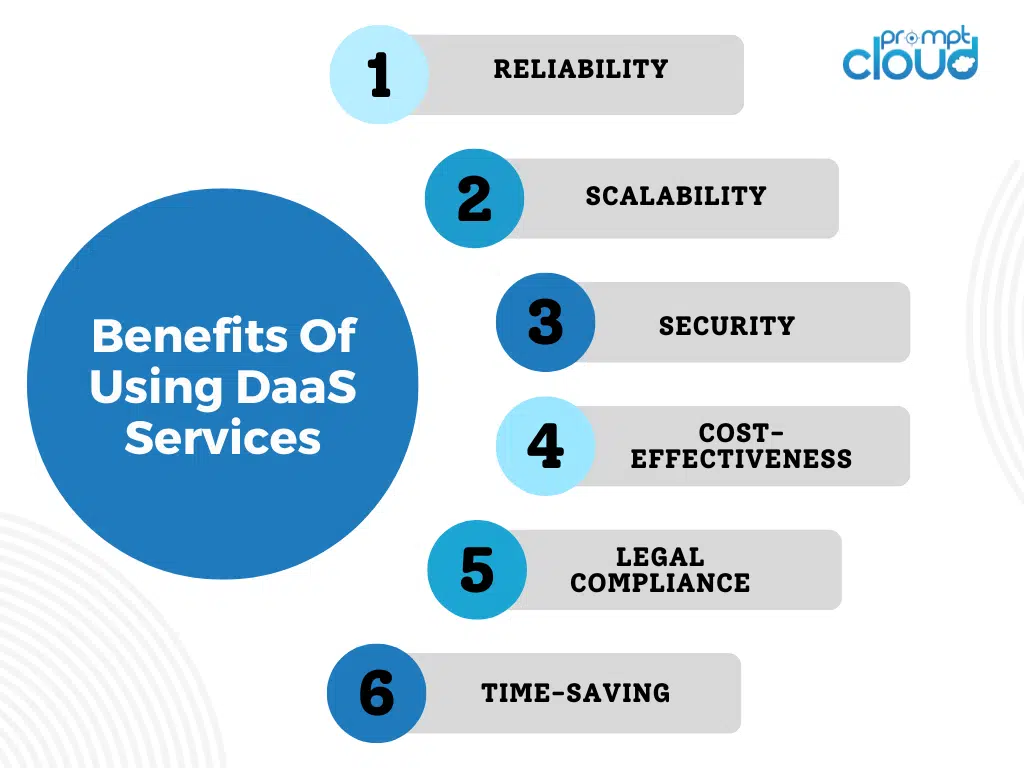

Here are some of the benefits of using DaaS services such as PromptCloud for web scraping over DIY web scraping tools:

- Reliability: DaaS providers are more reliable than web scraping tools as they provide access to high-quality data that is accurate, timely, and consistent. In contrast, web scraping tools may be affected by changes in website structure or coding, leading to inconsistent or incomplete data.

- Scalability: DaaS services are highly scalable and can handle large volumes of data with ease. This makes it possible to extract data from multiple sources simultaneously, saving time and effort. Web scraping tools, on the other hand, may struggle to handle large volumes of data, resulting in slower processing times and increased risk of errors.

- Security: DaaS services provide better security features than web scraping tools, including encryption, authentication, and authorization. This makes it possible to protect sensitive data and comply with data privacy regulations. Web scraping tools may lack these security features, leaving businesses vulnerable to data breaches and cyber-attacks.

- Cost-effectiveness: DaaS services can be more cost-effective than web scraping tools as they offer a pay-per-use pricing model. This means businesses only pay for the data they need, reducing the risk of wasted resources or overspending. Web scraping tools often require upfront costs and ongoing maintenance expenses, making them less cost-effective over time.

- Legal compliance: DaaS providers are responsible for ensuring that the data they collect is obtained legally and ethically, which can be challenging for web scraping tools. DaaS providers have experience in navigating legal and ethical issues related to web scraping, which can help you avoid legal issues.

- Time-saving: DaaS providers can help you save time by automating the web scraping process. This can be particularly useful if you need to scrape data frequently or in large volumes. With web scraping tools, you may need to manually configure each scrape, which can be time-consuming and error-prone.

Conclusion

While DIY web scraping tools can be useful for some businesses, Data as a Service (DaaS) providers offer several key advantages that make them a superior option. DaaS providers can offer scalable, reliable, and high-quality web scraping services that are tailored to your specific needs. They can also provide technical support, legal compliance, and integration with your existing systems, which can save you time and money.

Additionally, DaaS providers can customize their services and offer flexible pricing, making it a cost-effective option for businesses of all sizes. By leveraging the expertise and infrastructure of DaaS providers, businesses can obtain the scraped data they need with greater ease and accuracy, allowing them to make more informed business decisions.

Frequently Asked Questions (FAQs)

What is the best tool for web scraping?

One of the best tools for web scraping is PromptCloud itself. It offers a robust, scalable, and customizable solution that caters to various web scraping needs. With PromptCloud, you can efficiently handle large-scale data extraction while ensuring data quality and reliability. Its services are designed to meet specific client requirements, making it a versatile choice for businesses of all sizes.

Is web scraping for beginners?

Web scraping can be beginner-friendly, especially with the right tools and resources. While it involves understanding web technologies like HTML and possibly programming languages like Python, many tools and platforms simplify the process. For beginners, starting with user-friendly tools and educational resources is key. As skills develop, one can gradually take on more complex scraping tasks. PromptCloud, for example, offers services that can be very accessible for beginners, providing a more straightforward entry into web scraping.

What is used for web scraping?

Web scraping typically involves tools and software like web crawlers or scrapers, which automate the process of extracting data from websites. These tools are programmed to navigate web pages and collect specific data, often using languages like Python and frameworks such as Scrapy or BeautifulSoup. Additionally, services like PromptCloud offer customized web scraping solutions, handling the complexity and providing the extracted data in a structured format, ideal for various business needs.

Can ChatGPT do web scraping?

No, ChatGPT cannot perform web scraping. It’s designed to assist with information and answer questions based on the knowledge it has been trained on, but it does not have the capability to browse the internet or extract data from websites in real-time. For web scraping, you would typically use specialized tools or services designed for that purpose, such as web scraping software or platforms like PromptCloud.

Which tool is best for web scraping?

The “best” web scraping tool largely depends on your specific needs, including the complexity of the websites you aim to scrape, your technical expertise, and the scale of your scraping project. However, two of the most popular and widely used tools are:

- Scrapy: Ideal for large-scale web scraping and crawling projects, Scrapy is a fast, open-source framework designed for Python developers. It excels in extracting data efficiently and is highly customizable for complex web scraping needs.

- Beautiful Soup: Best suited for smaller projects or those new to web scraping, Beautiful Soup is a Python library that makes it easy to scrape information from web pages. It’s user-friendly and great for simple scraping tasks, especially when used in conjunction with the Requests library.

Both tools have their strengths and are highly regarded in the web scraping community. Your choice should be based on your project requirements and programming skills.
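To give a feel for what these libraries automate, here is a minimal sketch that pulls links out of an HTML snippet. It deliberately uses only Python’s built-in `html.parser` as a stand-in, not Beautiful Soup or Scrapy themselves, so it runs without installing anything. The `LinkExtractor` class and the sample HTML are illustrative assumptions.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects (href, text) pairs for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only collect text while we are inside a link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Hypothetical page fragment standing in for a downloaded document.
SAMPLE_HTML = """
<ul>
  <li><a href="/item/1">First item</a></li>
  <li><a href="/item/2">Second item</a></li>
</ul>
"""

parser = LinkExtractor()
parser.feed(SAMPLE_HTML)
print(parser.links)
```

Dedicated libraries add conveniences on top of this idea, such as CSS selectors and tolerant handling of malformed markup, which is why they are usually the better choice for real projects.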

What is a web scraper tool?

A web scraper tool is a software application designed to automate the process of extracting data from websites. These tools navigate the web, access specified websites, and systematically collect data from those sites according to predefined criteria. The extracted data can then be processed, stored, and analyzed for various purposes, such as market research, competitive analysis, price monitoring, and sentiment analysis.

Web scraper tools vary in complexity and functionality, ranging from simple browser extensions that can capture specific data points from web pages to sophisticated software frameworks that can crawl entire websites, handle login authentication, and extract data hidden behind web forms or JavaScript-generated content. They can be tailored to respect a site’s robots.txt file, handle CAPTCHAs, and mimic human browsing behavior to avoid detection.

Popular web scraper tools include:

- Beautiful Soup: A Python library for parsing HTML and XML documents, often used in conjunction with the Requests library for making HTTP requests.

- Scrapy: An open-source and collaborative Python framework designed for crawling websites and extracting structured data.

- Selenium: A tool that automates web browsers, allowing for scraping of dynamic content rendered by JavaScript.

Web scraper tools are invaluable for individuals and organizations looking to leverage publicly available web data for insights, decision-making, and automation in a scalable and efficient manner.

How do I scrape an entire website?

Scraping an entire website involves systematically accessing and extracting data from all its pages. This process can be complex and should be approached with careful planning, technical preparation, and ethical consideration. Here’s a general guide on how to scrape an entire website:

Understand the Website’s Structure

Analyze the website to understand its navigation, categorization, and how content is organized. Tools like web browser developer tools can help in inspecting the HTML structure.

Check Legal and Ethical Boundaries

- Review the website’s robots.txt file to see if scraping is allowed and which parts of the site are off-limits.

- Ensure your scraping activities comply with relevant laws (e.g., copyright laws, data protection regulations) and the website’s terms of use.
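The robots.txt check can be automated with Python’s standard `urllib.robotparser` module. The sketch below parses a made-up robots.txt body instead of downloading one, and the bot name `example-research-bot` is a hypothetical placeholder.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download it
# from the target site before crawling.
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "example-research-bot"  # hypothetical bot name

allowed_public = rules.can_fetch(USER_AGENT, "https://example.com/products/1")
allowed_private = rules.can_fetch(USER_AGENT, "https://example.com/private/report")
delay = rules.crawl_delay(USER_AGENT)

print(allowed_public, allowed_private, delay)
```

Keep in mind that robots.txt only expresses the site’s crawling preferences. It does not replace reading the terms of use or checking the applicable laws.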

Choose the Right Tools

- For simple, static sites, tools like Beautiful Soup and Requests (Python) might suffice.

- For dynamic content, consider using Selenium or Puppeteer to automate browser interactions.

- For large-scale scraping, frameworks like Scrapy offer robust features for crawling and data extraction.

Plan Your Scraping Strategy

- Decide on the data you need to extract and how you’ll store it (e.g., CSV, database).

- Determine how you’ll navigate the site and in what order pages will be scraped. Map out pagination and any forms that need to be submitted.

- Implement rate limiting to avoid overwhelming the website’s server.
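As a rough illustration of the pagination and rate-limiting points above, the following sketch enforces a minimum gap between requests while walking through numbered pages. The `Throttle` class, the `page_urls` helper, and the `?page=N` URL scheme are all assumptions made for the example; real sites paginate in many different ways.

```python
import time


class Throttle:
    """Enforces a minimum gap between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self):
        """Block until min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def page_urls(base_url, last_page):
    """Yield paginated URLs, assuming a hypothetical ?page=N scheme."""
    for page in range(1, last_page + 1):
        yield f"{base_url}?page={page}"


throttle = Throttle(min_interval=0.05)
for url in page_urls("https://example.com/catalog", 3):
    throttle.wait()
    print("would fetch", url)  # a real scraper would download the page here
```

The clock and sleep functions are passed in as parameters so the throttle can be tested without real waiting. In practice, an interval of a second or more is a more considerate default than the tiny value used here.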

Implement and Run Your Scraper

- Write scripts or configure your scraping tool according to the plan. Include error handling for network issues or unexpected page structures.

- Test your scraper on a small section of the site to ensure it works correctly and respects the site’s rules.

- Run your scraper, monitoring its progress and adjusting as necessary.
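Error handling for network issues often takes the form of retries with a growing delay. The sketch below shows one possible shape for this, using a simulated `flaky_fetch` function in place of a real HTTP call. The function names and the retry parameters are illustrative assumptions.

```python
import time


def fetch_with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying on OSError with exponential backoff."""
    for attempt in range(attempts):
        try:
            return fetch(url)
        except OSError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the error to the caller
            sleep(base_delay * 2 ** attempt)


# Stand-in for a real HTTP call: fails twice, then succeeds.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated network failure")
    return f"<html>content of {url}</html>"


# The sleep is stubbed out so the example finishes instantly.
page = fetch_with_retries(flaky_fetch, "https://example.com/", sleep=lambda s: None)
print(calls["count"], page)
```

Catching `OSError` covers Python’s built-in connection and timeout errors. Other HTTP libraries raise their own exception types, so the `except` clause would need adjusting for them.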

Post-Processing

- Clean and structure the scraped data as required for your use case.

- Check the data for completeness and accuracy.
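A simple version of this post-processing step might look like the sketch below, which trims whitespace, drops duplicate rows, and sets aside records with missing fields. The `title` and `price` fields are a hypothetical schema chosen for illustration.

```python
REQUIRED_FIELDS = ("title", "price")  # hypothetical schema


def clean_records(records):
    """Trim whitespace, drop exact duplicates, and split off incomplete rows."""
    seen = set()
    complete, incomplete = [], []
    for record in records:
        cleaned = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in record.items()
        }
        fingerprint = tuple(sorted(cleaned.items()))
        if fingerprint in seen:
            continue  # same content already kept once
        seen.add(fingerprint)
        if all(cleaned.get(field) for field in REQUIRED_FIELDS):
            complete.append(cleaned)
        else:
            incomplete.append(cleaned)
    return complete, incomplete


raw = [
    {"title": "  Blue Widget ", "price": "19.99"},
    {"title": "Blue Widget", "price": "19.99 "},  # duplicate after trimming
    {"title": "Red Widget", "price": ""},  # missing price
]

complete, incomplete = clean_records(raw)
print(complete)
print(incomplete)
```

Keeping the incomplete rows in a separate list, instead of discarding them, makes it easier to spot pages where the scraper is no longer finding a field.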

Continuous Monitoring (If Applicable)

- If you need to scrape the website regularly, set up monitoring for your scraper to adapt to any changes in the website’s structure.

- Ensure ongoing compliance with legal and ethical standards.

Is web scraping Zillow illegal?

The legality of web scraping, including sites like Zillow, depends on various factors, including how the scraping is done, what data is scraped, and how that data is used. Additionally, the legal landscape regarding web scraping is complex and can vary by jurisdiction. There are a few key points to consider when evaluating the legality of scraping data from websites like Zillow:

Terms of Service

Websites typically have Terms of Service (ToS) agreements that specify how their content can be used. Zillow’s ToS, like those of many other websites, may prohibit automated data extraction or web scraping without permission. Violating these terms could potentially lead to legal action or loss of access to the site.

Copyright Laws

Data collected through web scraping might be protected by copyright. While factual data (like prices or square footage) may not be copyrighted, the collective work or the specific presentation of data on a website could be. Using scraped data in a way that infringes on copyright can lead to legal issues.

Computer Fraud and Abuse Act (CFAA)

In the United States, the CFAA has been used to address unauthorized access to computer systems, which can include violating a website’s terms of service. However, legal interpretations of the CFAA regarding web scraping have evolved, and courts have reached different conclusions in various cases.

Data Protection and Privacy Laws

Laws such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) impose restrictions on how personal data can be collected, used, and stored. Scraping personal data without consent could violate these regulations.

Given these considerations, it’s essential to proceed with caution and seek legal advice before scraping data from websites like Zillow. In some cases, websites offer APIs or other legal means to access their data, which can be a safer and more reliable approach than scraping.

Which is the best web scraper?

Determining the “best” web scraper depends on your specific needs, technical skills, and the complexity of the web scraping tasks you intend to perform. Some tools excel in simplicity and user-friendliness for beginners, while others offer extensive features and customization for advanced users and complex projects. Here are several highly regarded web scraping tools and libraries, each with its own strengths:

Beautiful Soup (Python)

- Best for: Beginners and simple projects.

- Features: Easy to use for extracting data from HTML and XML files. Works well with Python’s requests library for fetching web content.

- Limitation: Doesn’t handle JavaScript-rendered content out of the box.

Scrapy (Python)

- Best for: More complex projects and full-fledged web crawling.

- Features: An open-source and collaborative framework that allows for efficient crawling and data extraction, as well as handling requests asynchronously.

- Limitation: Has a steeper learning curve than Beautiful Soup.

Selenium (Multiple Languages)

- Best for: Interacting with web pages that rely heavily on JavaScript.

- Features: Automates web browsers, allowing you to perform actions on websites just like a human user. Supports multiple programming languages including Python, Java, and C#.

- Limitation: Slower compared to other tools because it simulates a real user’s actions in a browser.

Puppeteer (JavaScript)

- Best for: Node.js developers and scraping dynamic content.

- Features: Provides a high-level API over Chrome DevTools Protocol to control Chrome or Chromium, making it great for scraping SPA (Single-Page Applications) that require JavaScript rendering.

- Limitation: JavaScript/Node.js knowledge required; primarily works with Chrome or Chromium.

Octoparse

- Best for: Non-programmers and those who prefer a graphical interface.

- Features: A user-friendly, point-and-click interface for extracting data without writing code. Offers cloud-based data extraction.

- Limitation: Free version has limitations, and complex websites might require a paid plan.

ParseHub

- Best for: Users who need advanced features without extensive coding knowledge.

- Features: Supports sites that use AJAX and JavaScript. Offers both a free and paid version, with a visual editor for selecting elements.

- Limitation: More complex usage scenarios might require a learning curve.

Apify

- Best for: Users looking for a scalable cloud solution.

- Features: Offers a cloud-based platform that can turn websites into APIs, with support for JavaScript-heavy sites.

- Limitation: Might be overkill for simple projects and has costs associated with cloud processing.

Choosing the Best Tool

- Project Complexity: Consider whether your project requires handling dynamic content, how large-scale it is, and your specific data needs.

- Technical Skill Level: Tools range from no-code solutions to libraries requiring programming knowledge.

- Budget: While many tools have free versions, large-scale or complex scraping needs might require a paid solution.

Ultimately, the best scraper is one that aligns with your project requirements, skill level, and budget. It’s often beneficial to experiment with a few different tools to find the one that best suits your needs.

Can websites detect web scraping?

Yes, websites can detect web scraping through various means, and many implement measures to identify and block scraping activities. Here are some common ways websites detect scraping and some strategies they might use to prevent it:

Unusual Access Patterns

Websites can monitor access patterns and detect non-human behavior, such as:

- High frequency of page requests from a single IP address in a short period.

- Rapid navigation through pages that is faster than humanly possible.

- Accessing many pages or data points in an order that doesn’t follow normal user browsing patterns.

Headers Analysis

Websites analyze the HTTP request headers sent by clients. Scrapers that don’t properly mimic the headers of a regular web browser (including User-Agent, Referer, and others) can be flagged.

Behavioral Analysis

Some sites implement more sophisticated behavioral analysis, such as:

- Tracking mouse movements and clicks to distinguish between human users and bots.

- Analyzing typing patterns in form submissions.

- Using CAPTCHAs to challenge suspected bots.

Rate Limiting and Throttling

Websites might limit the number of requests an IP can make in a given timeframe. Exceeding this limit can lead to temporary or permanent IP bans.
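To make the idea concrete, here is a simplified sketch of how a site might count requests per IP address within a sliding time window. It is an illustration only, not how any particular website implements it. The `SlidingWindowLimiter` class and its limits are assumptions.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allows at most max_requests per client within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)

    def allow(self, client_ip, now):
        """Return True if the request at time `now` is within the limit."""
        hits = self._hits[client_ip]
        # Discard timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10)
# Five requests from one address at t = 0, 1, 2, 3 and 12 seconds.
decisions = [limiter.allow("203.0.113.7", t) for t in (0, 1, 2, 3, 12)]
print(decisions)
```

In this run the fourth request is rejected because three requests already fall inside the 10-second window, while the fifth is accepted once the earlier ones have expired.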

Analyzing Traffic Sources

Sites may scrutinize the referrer field in the HTTP headers to check if the traffic is coming from expected sources or directly to pages in a way that typical users would not.

Integrity Checks

Some websites use honeypots, which are links or data fields invisible to regular users but detectable by scrapers. Interaction with these elements can indicate automated scraping.
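The sketch below illustrates the honeypot idea by separating links that are hidden through inline markup from ordinary ones. It uses Python’s built-in `html.parser` and only checks the `hidden` attribute and inline `style` values, which is a simplifying assumption: real sites can also hide elements through external stylesheets or scripts, which this example would miss.

```python
from html.parser import HTMLParser


class HoneypotAwareLinkParser(HTMLParser):
    """Separates ordinary links from links hidden via inline markup."""

    def __init__(self):
        super().__init__()
        self.visible = []
        self.hidden = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        style = (attributes.get("style") or "").replace(" ", "").lower()
        is_hidden = (
            "hidden" in attributes
            or "display:none" in style
            or "visibility:hidden" in style
        )
        (self.hidden if is_hidden else self.visible).append(href)


# Hypothetical page fragment with two trap links.
PAGE = (
    '<a href="/products">Products</a>'
    '<a href="/trap" style="display: none">secret</a>'
    '<a href="/trap2" hidden>secret</a>'
)

link_parser = HoneypotAwareLinkParser()
link_parser.feed(PAGE)
print(link_parser.visible, link_parser.hidden)
```

A site operator can treat any request for a hidden link as a sign of automation, since a regular visitor would never see or click it.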

Challenges and Tests

Implementing tests, such as CAPTCHAs or requiring JavaScript execution for accessing site content, can effectively distinguish bots from humans, as many simpler scraping tools cannot execute JavaScript or solve CAPTCHAs.

Prevention and Mitigation Strategies for Scrapers

- Respect robots.txt: Adhering to the directives in the site’s robots.txt file is the first step in polite scraping.

- Rate Limiting: Implementing delays between requests to mimic human browsing speeds can help avoid detection.

- Rotating IP Addresses: Using proxy servers or VPN services to rotate IP addresses can prevent a single IP from being banned.

- Mimicking Human Behavior: Randomizing delays, using headless browsers that execute JavaScript, and accurately setting request headers can make scraping activities harder to detect.

- CAPTCHA Solving: Some advanced scrapers use CAPTCHA solving services, though this can be ethically and legally questionable.

It’s crucial to scrape data ethically and legally, respecting the website’s terms of service and legal regulations surrounding data use. Additionally, consider reaching out to the website owner for API access or permission to scrape, as this can often provide a more stable and legal avenue for data access.

How do I completely scrape a website?

Completely scraping a website involves systematically downloading data from all of its accessible parts. This task requires careful planning, the right tools, and an understanding of legal and ethical considerations. Here’s a step-by-step approach to thoroughly scrape a website:

Understand the Website’s Structure

- Explore the website to understand its navigation, content layout, and how data is organized.

- Identify the data you want to scrape: product details, articles, images, etc.

- Inspect the website’s robots.txt file to see if the website restricts crawlers from accessing certain parts.

Check Legal and Ethical Considerations

- Review the website’s Terms of Service to ensure that scraping is not prohibited.

- Be aware of legal restrictions, including copyright laws and privacy regulations like GDPR.

- Plan to scrape responsibly to avoid overloading the website’s server.

Choose the Right Tools

- For simple, static sites, tools like Beautiful Soup with Python can be sufficient.

- For dynamic websites that load content with JavaScript, consider using Selenium or Puppeteer.

- Scrapy is a powerful framework for large-scale web scraping and crawling projects.

Plan Your Scraping Strategy

- Decide how you’ll navigate the site. Will you follow links from a homepage, or do you have a list of URLs to visit directly?

- Determine how you’ll handle pagination and any dynamically loaded content.

- Consider how to store the scraped data: CSV, JSON, a database, etc.
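The “follow links from a homepage” strategy is essentially a breadth-first traversal. The sketch below shows the bookkeeping involved, using a small in-memory dictionary, `FAKE_SITE`, in place of real pages so it runs offline. All URLs and the `crawl` function are illustrative assumptions.

```python
from collections import deque
from urllib.parse import urljoin, urlparse

# Hypothetical site: maps each URL to the hrefs found on that page.
FAKE_SITE = {
    "https://example.com/": ["/about", "/blog?page=1", "https://other.example.org/"],
    "https://example.com/about": ["/"],
    "https://example.com/blog?page=1": ["/blog?page=2", "/about"],
    "https://example.com/blog?page=2": [],
}


def crawl(start_url, get_links, max_pages=100):
    """Breadth-first crawl that stays on the start URL's host."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    order = []
    while queue and len(order) < max_pages:
        url = queue.popleft()
        order.append(url)
        for href in get_links(url):
            absolute = urljoin(url, href)  # resolve relative links
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return order


visited = crawl("https://example.com/", lambda url: FAKE_SITE.get(url, []))
print(visited)
```

The `seen` set prevents revisiting pages that link to each other, and the host check keeps the crawl from wandering onto other websites. If you already have a list of URLs, you can skip the traversal and iterate over the list directly.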

Implement Rate Limiting and Ethical Scraping Practices

- Respect the robots.txt file directives.

- Implement delays between requests to mimic human browsing and prevent server overload.

- Use headers that include a User-Agent string to identify your bot.
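Setting an identifying User-Agent takes only a line or two. The sketch below builds a request object with Python’s standard `urllib.request` without actually sending it. The bot name and the contact URL are hypothetical placeholders.

```python
from urllib.request import Request

# Hypothetical bot name and contact URL; replace with your own details.
BOT_USER_AGENT = "example-research-bot/1.0 (+https://example.com/bot-info)"


def build_request(url):
    """Create a request object that carries an identifying User-Agent."""
    return Request(url, headers={"User-Agent": BOT_USER_AGENT})


request = build_request("https://example.com/products")
# urllib stores header names capitalized as "User-agent" internally.
print(request.get_header("User-agent"))
```

Including a contact URL or email in the string gives site owners a way to reach you if your bot causes problems, which is often better for both sides than an outright block.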

Start Scraping

- Develop your script or configure your scraping tool based on the strategy you’ve planned.

- Test your script on a small portion of the website to ensure it works as expected and respects ethical guidelines.

Monitor and Adjust

- Monitor the scraping process for errors or issues, especially if the website changes its layout or navigation.

- Be prepared to adjust your scripts if the website updates its structure or if you encounter anti-scraping measures.

Process and Store the Data

- Clean and process the scraped data as needed for your project or analysis.

- Store the data in a structured format that suits your usage: relational databases, flat files, or cloud storage solutions.
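For the relational database option, Python’s built-in `sqlite3` module is often enough for small projects. The sketch below stores a few made-up rows in an in-memory database. The `items` table and its columns are assumptions chosen for the example.

```python
import sqlite3

# Hypothetical scraped rows: (url, title, price).
rows = [
    ("https://example.com/item/1", "Blue Widget", 19.99),
    ("https://example.com/item/2", "Red Widget", 24.50),
    ("https://example.com/item/1", "Blue Widget", 18.99),  # re-scrape, new price
]

conn = sqlite3.connect(":memory:")  # use a file path to persist between runs
conn.execute(
    "CREATE TABLE items (url TEXT PRIMARY KEY, title TEXT NOT NULL, price REAL)"
)
# INSERT OR REPLACE keeps one row per URL, so a re-scrape overwrites the old row.
conn.executemany("INSERT OR REPLACE INTO items VALUES (?, ?, ?)", rows)
conn.commit()

stored = conn.execute("SELECT url, title, price FROM items ORDER BY url").fetchall()
print(stored)
```

Using the page URL as the primary key is one simple way to avoid duplicate rows when the same page is scraped more than once. If you need price history, you would add a timestamp column and insert new rows instead of replacing.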

Continuous Maintenance

- Websites often change, which can break your scraping setup. Regular maintenance and updates to your scripts may be necessary.

Ethical Considerations

Always prioritize ethical scraping practices. This means scraping at a reasonable rate, handling the data responsibly, and respecting copyright and privacy laws. If you’re scraping data for analysis or public use, consider anonymizing any personal information and avoiding scraping sensitive data without consent.

Given the complexity and potential legal implications of scraping an entire website, consider reaching out to the site owner for permission or to inquire about API access, which might offer a more straightforward and legal method to obtain the data you need.

Which websites can I scrape?

Web scraping, the process of automatically extracting data from websites, is a powerful tool for data analysis, market research, and automating tasks. However, whether you can legally and ethically scrape a website depends on several factors:

Terms of Service: Before scraping a website, it’s crucial to review its Terms of Service (ToS). Many websites explicitly prohibit scraping in their ToS. Ignoring these terms can lead to legal consequences and your IP being blocked.

Robots.txt: Websites use the robots.txt file to indicate which parts of the site can be crawled by automated agents. Respecting these rules is a basic courtesy and helps avoid overloading the website’s servers.

Data Sensitivity and Privacy: Even if a website doesn’t explicitly prohibit scraping, consider the nature of the data you’re extracting. Personal data and sensitive information are protected under laws like GDPR in Europe and CCPA in California. Always prioritize user privacy and legal compliance.

Public vs. Private Content: Publicly available data, such as product listings or public posts, is generally considered fair game for scraping. However, accessing data behind login forms or that requires user consent is ethically and legally questionable.

Rate Limiting: To avoid negatively impacting a website’s performance, implement rate limiting in your scraping scripts. This ensures you’re not sending too many requests in a short period.

Recommended Practices for Ethical Scraping

- Always read and comply with a website’s Terms of Service and robots.txt file.

- Consider the ethical implications of scraping, particularly concerning personal data.

- Use official APIs if available, as they are provided by the website for data access and are a legal way to obtain data.

- Implement rate limiting and be mindful of the website’s server load.

- Seek permission from the website owner if you’re unsure about the legality or ethics of scraping their site.

How do I scrape a website without being detected?

Scraping data from websites in a way that avoids detection involves ethical considerations and legal implications. It’s important to remember that if a website has measures in place to detect and block scrapers, it’s likely because they do not want their data to be scraped. This can be for various reasons, including protecting user privacy, preserving server resources, or maintaining the integrity of their data.

However, if you have legitimate reasons for scraping data and you’ve ensured that your activities are in compliance with the website’s Terms of Service, privacy laws like GDPR or CCPA, and other relevant legal frameworks, here are some general tips to minimize the likelihood of your scraping activities being detected and blocked:

Respect Robots.txt: Before beginning, check the website’s robots.txt file to understand which parts of the site you’re allowed to crawl.

Use a User-Agent String: Include a user-agent string in your requests so the site can tell what kind of client is connecting. However, don’t fake being a browser you’re not, as this can be seen as deceptive.

Limit Request Rate: Rapid-fire requests can easily overwhelm a website’s server and will likely lead to your IP being blocked. Implement delays between your requests to mimic human browsing behavior.

Rotate IPs and User Agents: If the site has strict limitations, consider rotating your IP address and user-agent strings to avoid detection. This should be done judiciously and ethically, using services that respect legal standards.

Use Headless Browsers Sparingly: Tools like Puppeteer or Selenium can simulate a real user’s browser behavior but are more easily detectable and resource-intensive. Use them when necessary and in moderation.

Leverage Browser Automation with Caution: Automating browser activities can mimic human interaction more closely but also draws more attention if used excessively.

Avoid Scraping at Peak Times: Websites are more likely to implement anti-scraping measures during high-traffic periods. Consider scraping during off-peak hours.

Consider Using APIs: If the website offers an API for accessing the data you need, use it. APIs are a legitimate way to access data and usually come with guidelines to prevent abuse.

Ethical Considerations: Always consider the impact of your scraping. Avoid overloading the website’s servers, and never scrape personal or sensitive information without clear consent and legal justification.

Seek Permission: When in doubt, the most straightforward approach to avoid legal and ethical issues is to ask for permission to scrape the website.

Remember, the goal should be to access data responsibly, without causing harm to the website or its users. Engaging in scraping activities that are against the website’s policies or the law can lead to serious consequences, including legal action. Always prioritize transparency, respect for privacy, and legal compliance in your data collection efforts.